Mark Dominus (陶敏修)

mjd@pobox.com

Wed, 28 Jan 2026

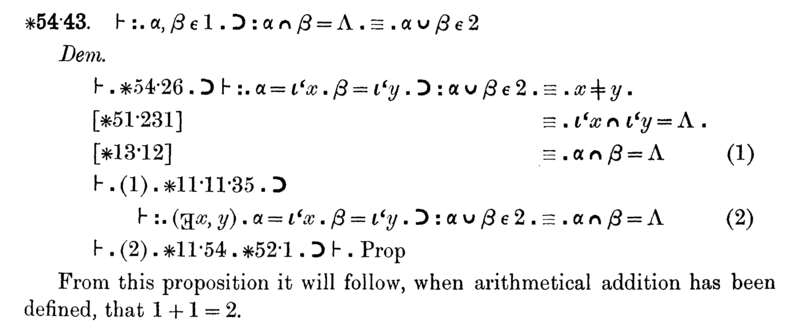

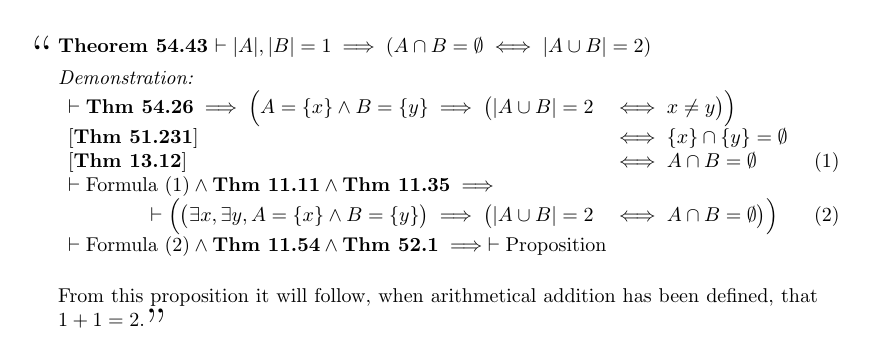

A couple of years back I wrote an article about this bit of mathematical folklore:

Mathematical folklore contains a story about how Acta Quandalia published a paper proving that all partially uniform k-quandles had the Cosell property, and then a few months later published another paper proving that no partially uniform k-quandles had the Cosell property. And in fact, goes the story, both theorems were quite true, which put a sudden end to the investigation of partially uniform k-quandles.

I have a non-apocryphal update in this space! In episode 94 of the podcast “My Favorite Theorem”, Jeremy Alm of Lamar University reports:

My main dissertation result was a conditional result. And about four years after I graduated, a Hungarian graduate student proved that my condition, like my additional hypothesis, held in only trivial cases.

(At 04:15)

In the earlier article, I had said:

Suppose you had been granted a doctorate on the strength of your thesis on the properties of objects from some class which was subsequently shown to be empty. Wouldn't you feel at least a bit like a fraud?

In the podcast, Alm introduces this as evidence that he “wasn't very good at algebra”. Fortunately, he added, it was after he had graduated.

The episode title is “In Which Every Thing Happens or it Doesn't”. I started listening to it because I expected it to be about the ergodic theorem, and I'd like to understand the ergodic theorem. But it turned out to be about the Rado graph. This is fine with me, since I love the Rado graph. (Who doesn't?)

[Other articles in category /math] permanent link

Thu, 01 May 2025

A puzzle about balancing test tubes in a centrifuge

!!\def\nk#1#2{\left\langle{#1 \atop #2}\right\rangle} \def\dd#1{\nk{12}{#1}} !!

Suppose a centrifuge has !!n!! slots, arranged in a circle around the center, and we have !!k!! test tubes we wish to place into the slots. If the tubes are not arranged symmetrically around the center, the centrifuge will explode.

(By "arranged symmetrically around the center", I mean that if the center is at !!(0,0)!!, then the sum of the positions of the tubes must also be at !!(0,0)!!.)

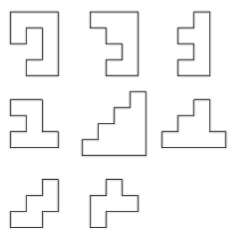

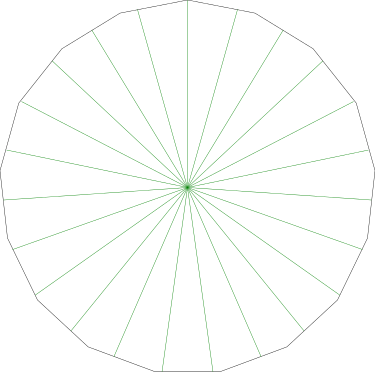

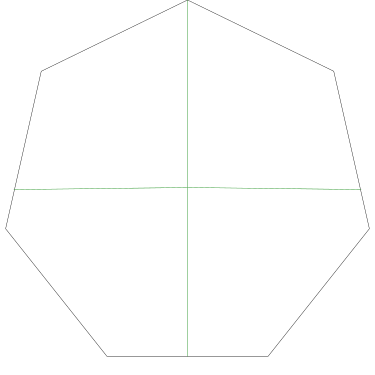

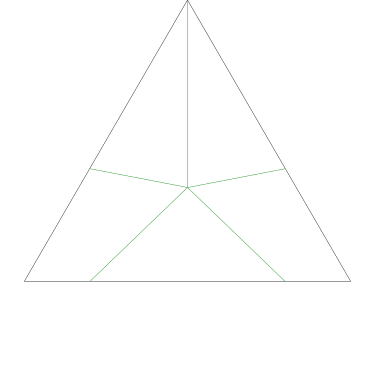

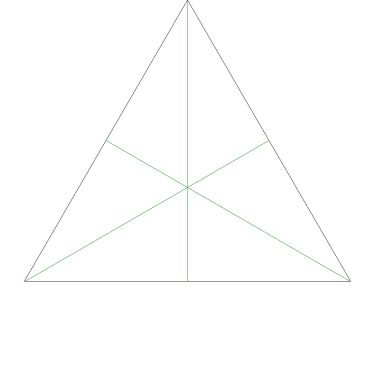

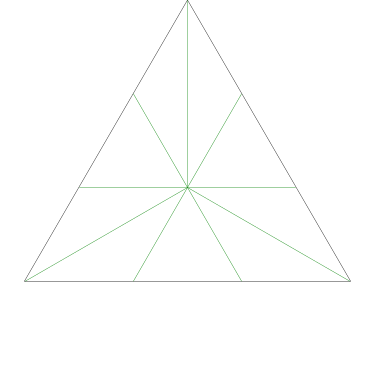

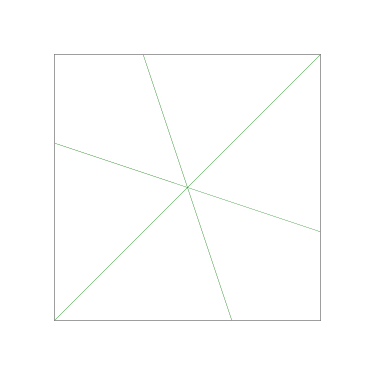

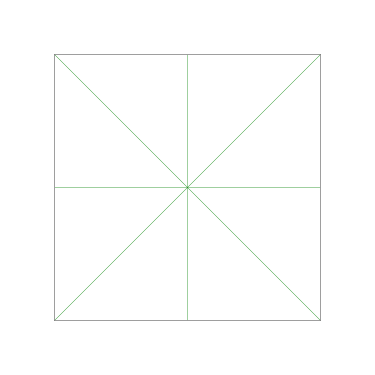

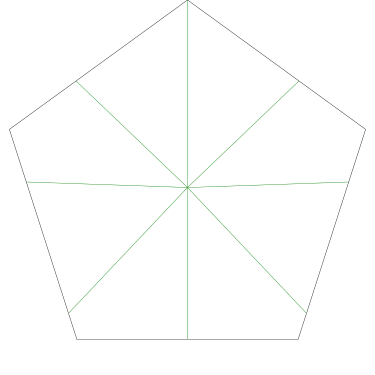

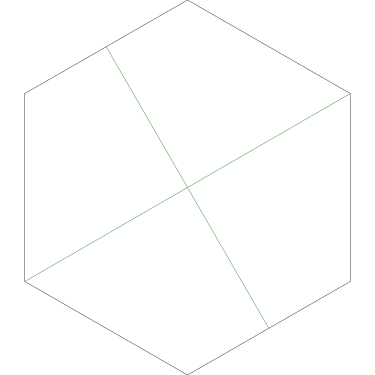

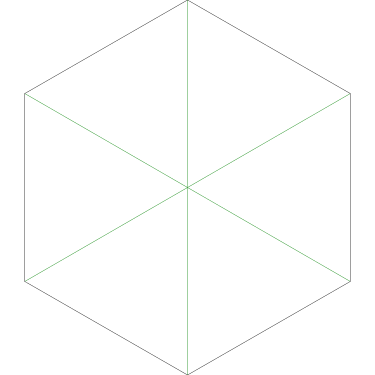

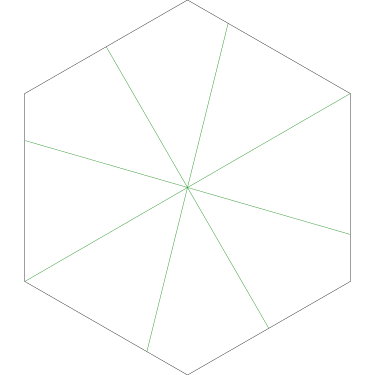

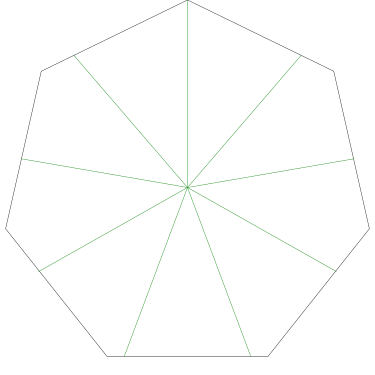

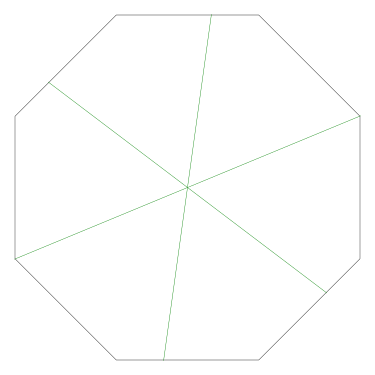

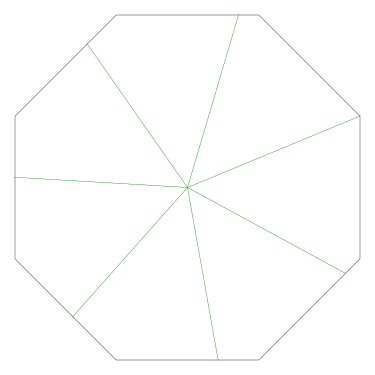

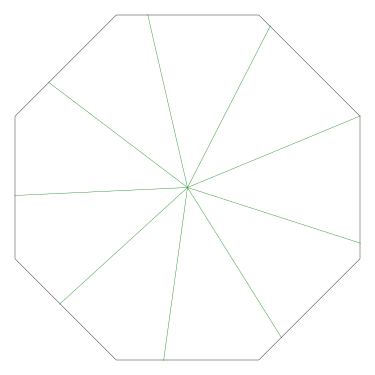

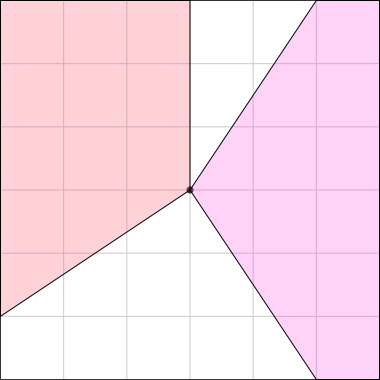

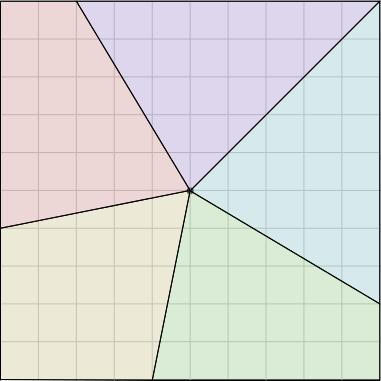

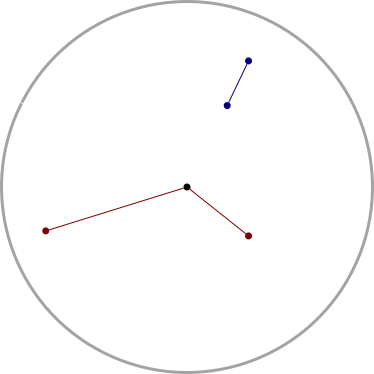

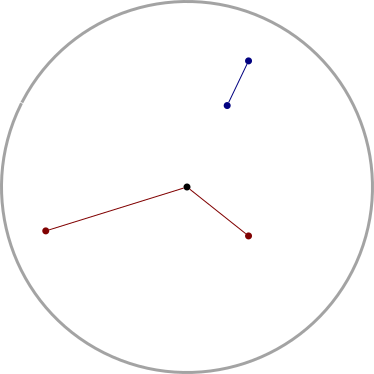

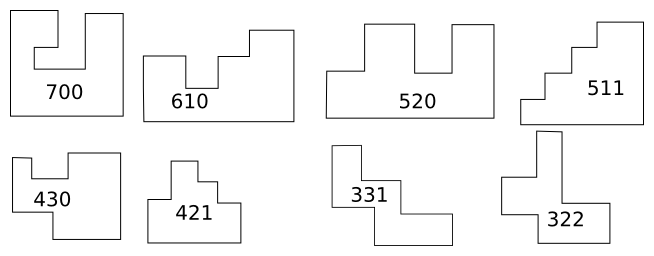

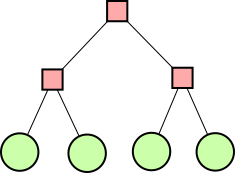

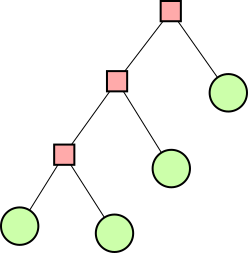

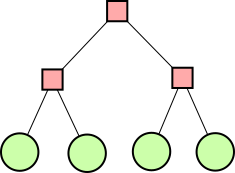

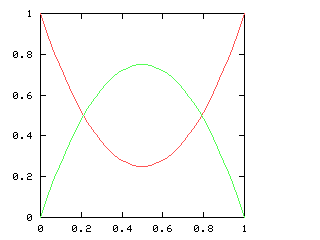

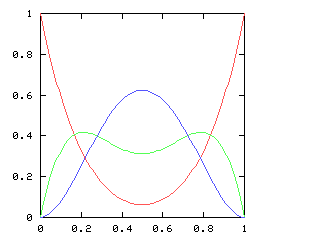

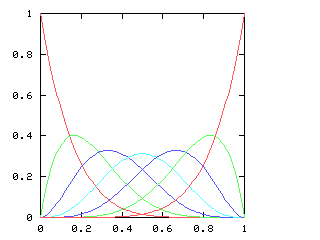

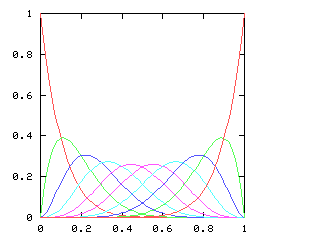

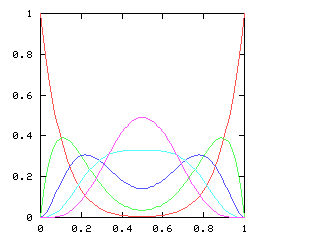

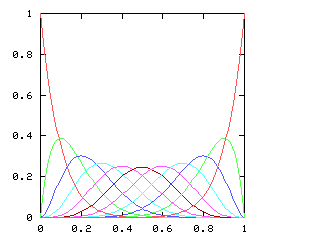

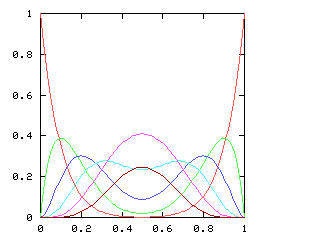

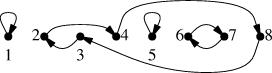

Let's consider the example of !!n=12!!. Clearly we can arrange !!2!!, !!3!!, !!4!!, or !!6!! tubes symmetrically:

Equally clearly we can't arrange only !!1!!. Also it's easy to see we can do !!k!! tubes if and only if we can also do !!n-k!! tubes, which rules out !!n=12, k=11!!.

From now on I will write !!\nk nk!! to mean the problem of balancing !!k!! tubes in a centrifuge with !!n!! slots. So !!\dd 2, \dd 3, \dd 4, !! and !!\dd 6!! are possible, and !!\dd 1!! and !!\dd{11}!! are not. And !!\nk nk!! is solvable if and only if !!\nk n{n-k}!! is.

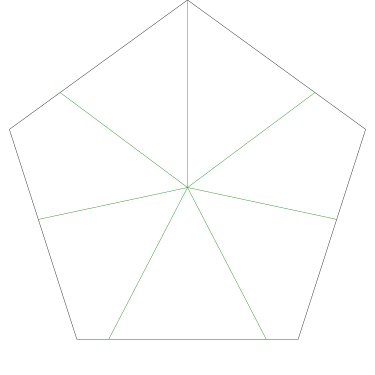

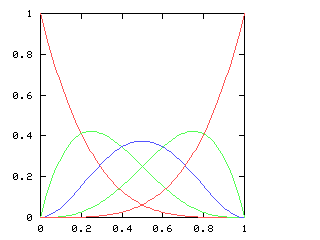

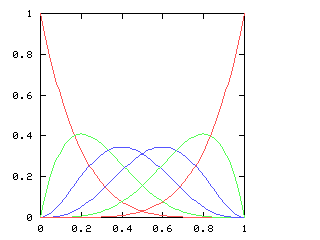

It's perhaps a little surprising that !!\dd7!! is possible. If you just ask this to someone out of nowhere they might have a happy inspiration: “Oh, I'll just combine the solutions for !!\dd3!! and !!\dd4!!, easy.” But that doesn't work because two groups of the form !!3i+j!! and !!4i+j!! always overlap.

For example, if your group of !!4!! is the slots !!0, 3, 6, 9!! then you can't also have your group of !!3!! be !!1, 5, 9!!, because slot !!9!! already has a tube in it.

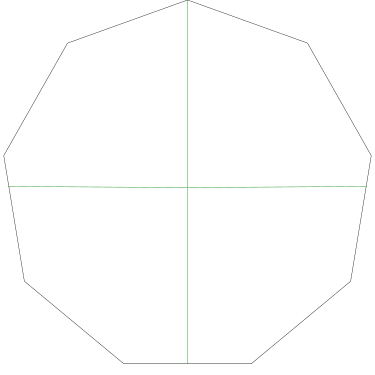

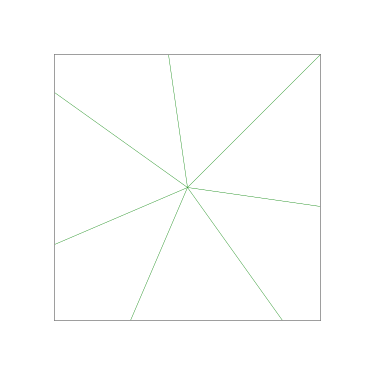

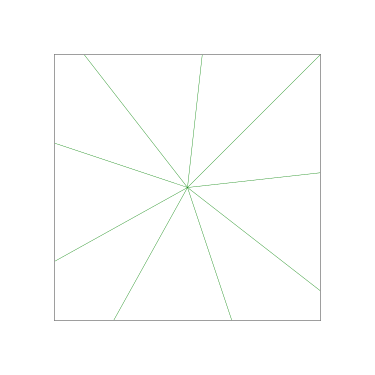

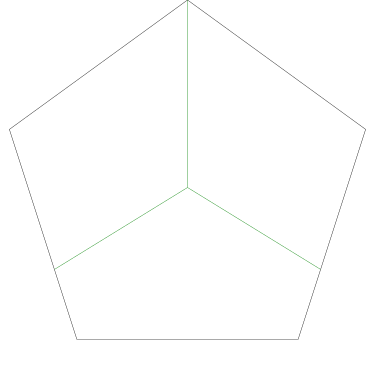

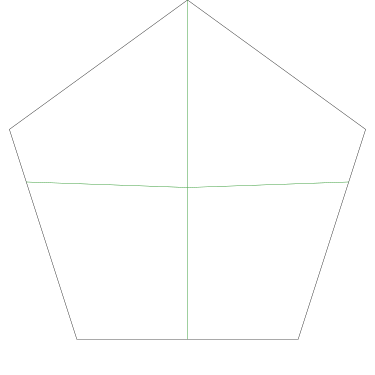

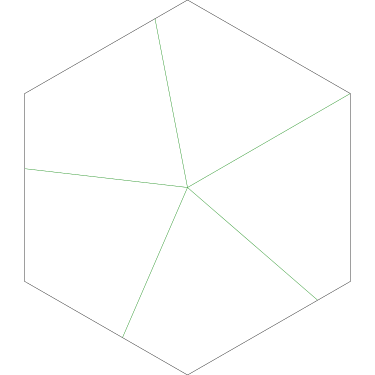

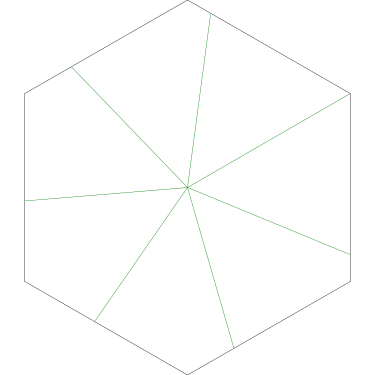

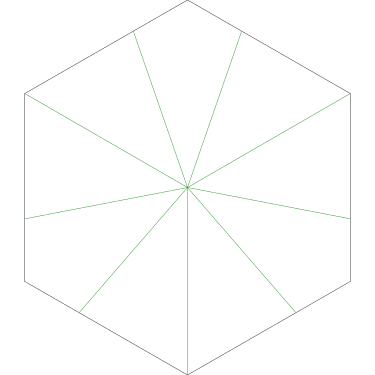

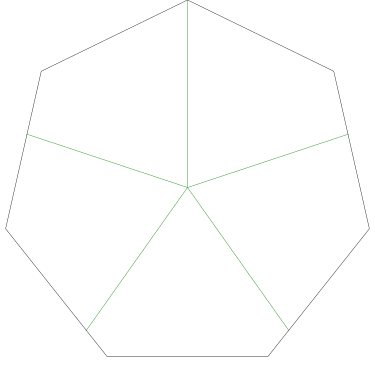

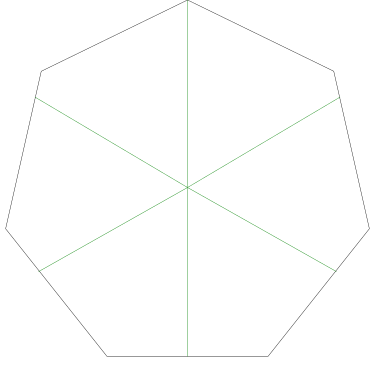

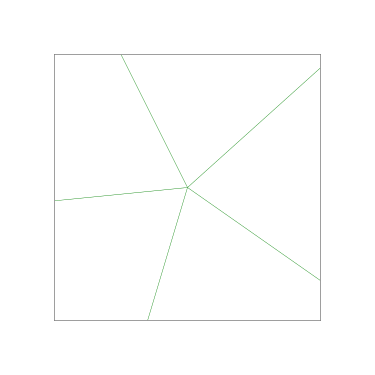

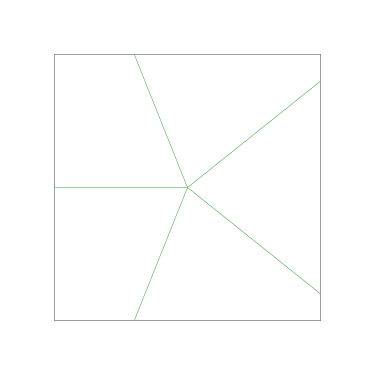

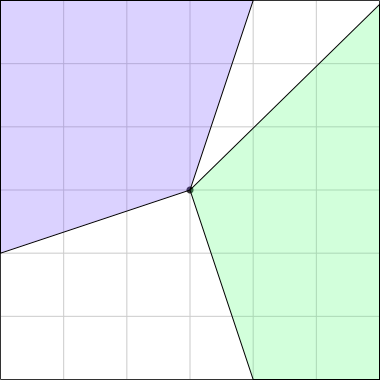

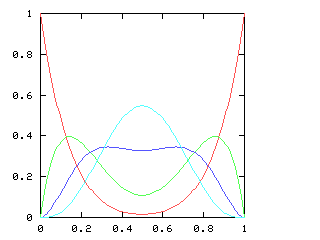

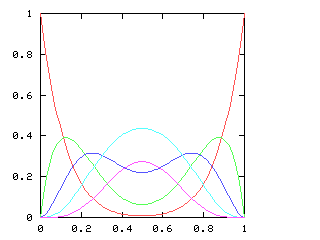

The other balanced groups of !!3!! are blocked in the same way. You cannot solve the puzzle with !!7=3+4!!; you have to do !!7=3+2+2!! as below left. The best way to approach this is to do !!\dd5!!, as below right. This is easy, since the triangle only blocks three of the six symmetric pairs. Then you replace the holes with tubes and the tubes with holes to turn !!\dd5!! into !!\dd{12-5}=\dd7!!.

Given !!n!! and !!k!!, how can we decide whether the centrifuge can be safely packed?

Clearly you can solve !!\nk nk!! when !!n!! is a multiple of !!k>1!!, but the example of !!\dd5!! (or !!\dd7!!) shows this isn't a necessary condition.

A generalization of this is that !!\nk nk!! is always solvable if !!\gcd(n,k) > 1!! since you can easily balance !!g = \gcd(n, k)!! tubes at positions !!0, \frac ng, \frac{2n}g, \dots, \frac {(g-1)n}g!!, then do another !!g!! tubes one position over, and so on. For example, to do !!\dd8!! you just put the first four tubes in slots !!0, 3, 6, 9!! and the next four one position over, in slots !!1, 4, 7, 10!!.
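
The construction just described can be sketched in a few lines of Python. This is only an illustration: the function name `gcd_packing` and the convention of numbering slots from !!0!! to !!n-1!! are assumptions made for the example.

```python
from math import gcd

def gcd_packing(n, k):
    """Return slots for k tubes in an n-slot centrifuge, assuming gcd(n, k) > 1.

    Hypothetical helper: it places k/g rotated copies of a regular g-gon,
    where g = gcd(n, k), each copy shifted one slot over from the last.
    """
    g = gcd(n, k)
    if g <= 1 or not 0 < k <= n:
        raise ValueError("this construction needs 0 < k <= n and gcd(n, k) > 1")
    step = n // g                      # spacing between vertices of one g-gon
    slots = []
    for shift in range(k // g):        # shift < step, so the copies never collide
        slots.extend(shift + i * step for i in range(g))
    return sorted(slots)

print(gcd_packing(12, 8))  # [0, 1, 3, 4, 6, 7, 9, 10]
```

Each copy of the polygon is balanced on its own, so the union of the copies is balanced too.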

An interesting counterexample is that the strategy for !!\dd7!!, where we did !!7=3+2+2!!, cannot be extended to !!\nk{14}9!!. One would want to do !!k=7+2!!, but there is no way to arrange the tubes so that the group of !!2!! doesn't conflict with the group of !!7!!, which blocks one slot from every pair.

But we can see that this must be true without even considering the geometry. !!\nk{14}9!! is the reverse of !!\nk{14}{14-9} = \nk{14}5!!, which is impossible: the only nontrivial divisors of !!n=14!! are !!2!! and !!7!!, so !!k!! must be a sum of !!2!!s and !!7!!s, and !!5!! is not.

You can't fit !!k=3+5=8!! tubes when !!n=15!!, but again the reason is a bit tricky. When I looked at !!8!! directly, I did a case analysis to make sure that the !!3!!-group and the !!5!!-group would always conflict. But again there was an easier way to see this: !!8=15-7!! and !!7!! clearly won't work, as !!7!! is not a sum of !!3!!s and !!5!!s. I wonder if there's an example where both !!k!! and !!n-k!! are not obvious?

For !!n=20!!, every !!k!! works except !!k=3,17!! and the always-impossible !!k=1,19!!.
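
Claims like this one are easy to check by brute force for small !!n!!. The sketch below treats slot !!s!! as the complex number !!e^{2\pi i s/n}!! and looks for a !!k!!-subset whose sum vanishes. The function name `solvable_counts` and the floating-point tolerance are assumptions of the example, and the search is exponential, so it is only practical for small centrifuges.

```python
import cmath
from itertools import combinations

def solvable_counts(n, tol=1e-9):
    """Return the set of k in 1..n for which some k slots out of n balance.

    Slot s sits at the n-th root of unity exp(2*pi*i*s/n); a loading is
    balanced when the positions sum to zero (up to rounding error).
    """
    roots = [cmath.exp(2j * cmath.pi * s / n) for s in range(n)]
    return {
        k
        for k in range(1, n + 1)
        if any(abs(sum(roots[s] for s in slots)) < tol
               for slots in combinations(range(n), k))
    }

print(sorted(set(range(1, 13)) - solvable_counts(12)))  # [1, 11]
print(sorted(set(range(1, 21)) - solvable_counts(20)))  # [1, 3, 17, 19]
```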

What's the answer in general? I don't know.

Addenda

20250502

Now I am amusing myself thinking about the perversity of a centrifuge with a prime number of slots, say !!13!!. If you use it at all, you must fill every slot. I hope you like explosions!

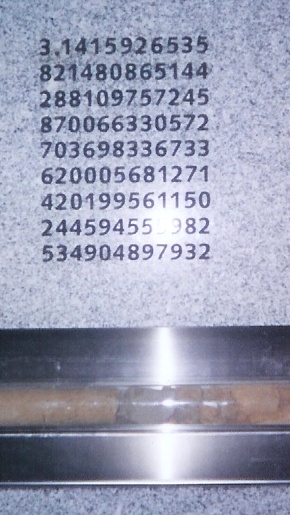

While I did not explode any centrifuges in university chemistry, I did once explode an expensive Liebig condenser.

Condenser setup by Mario Link from an original image by Arlen on Flickr. Licensed cc-by-2.0, provided via Wikimedia Commons.

20250503

Michael Lugo informs me that a complete solution may be found on Matt Baker's math blog. I have not yet looked at this myself.

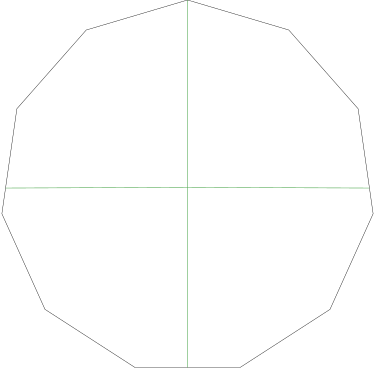

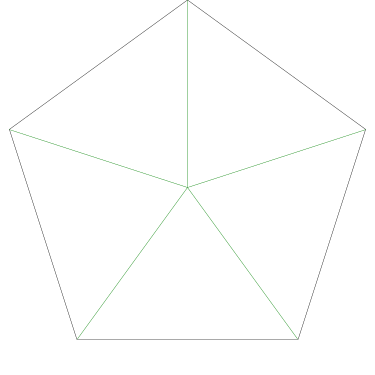

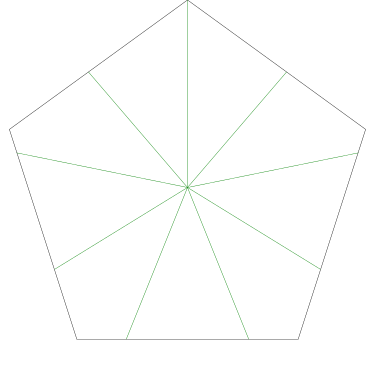

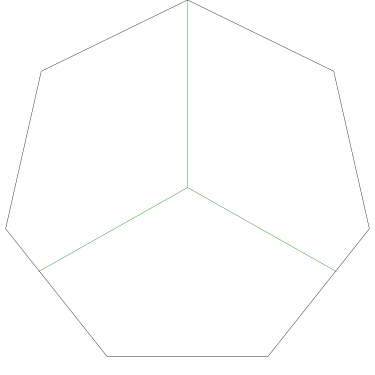

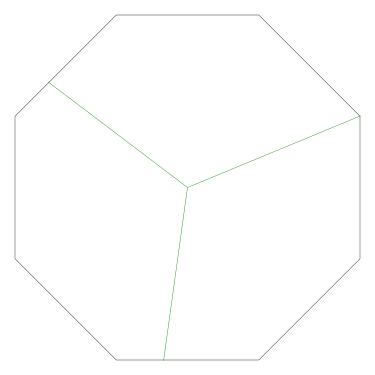

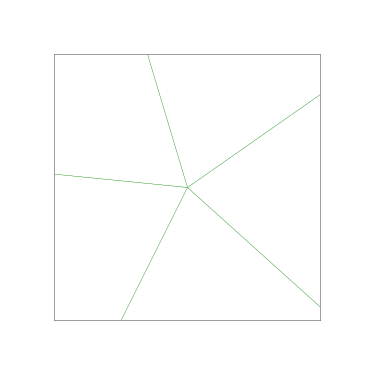

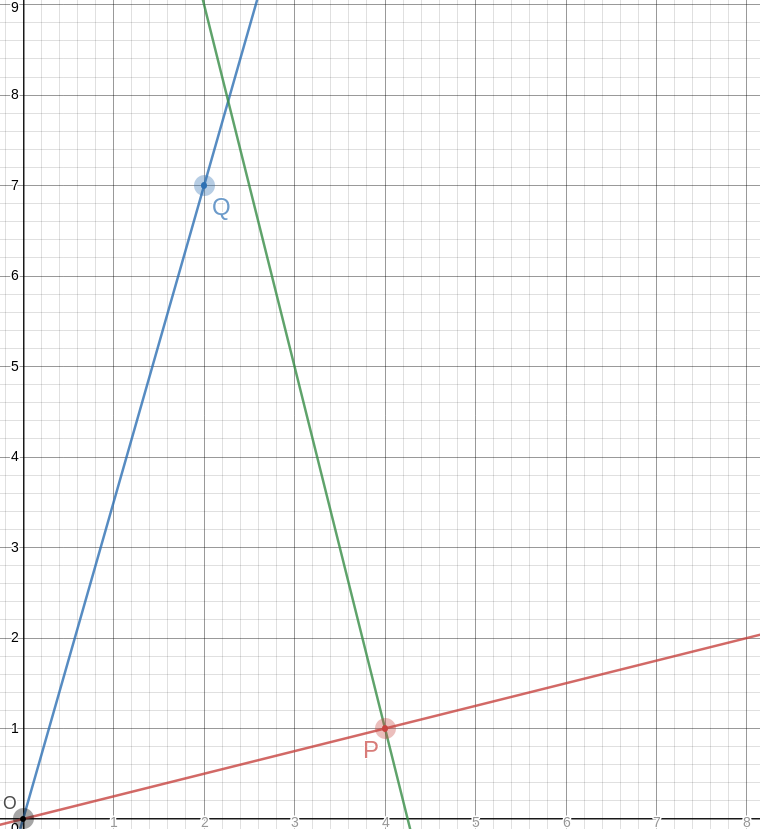

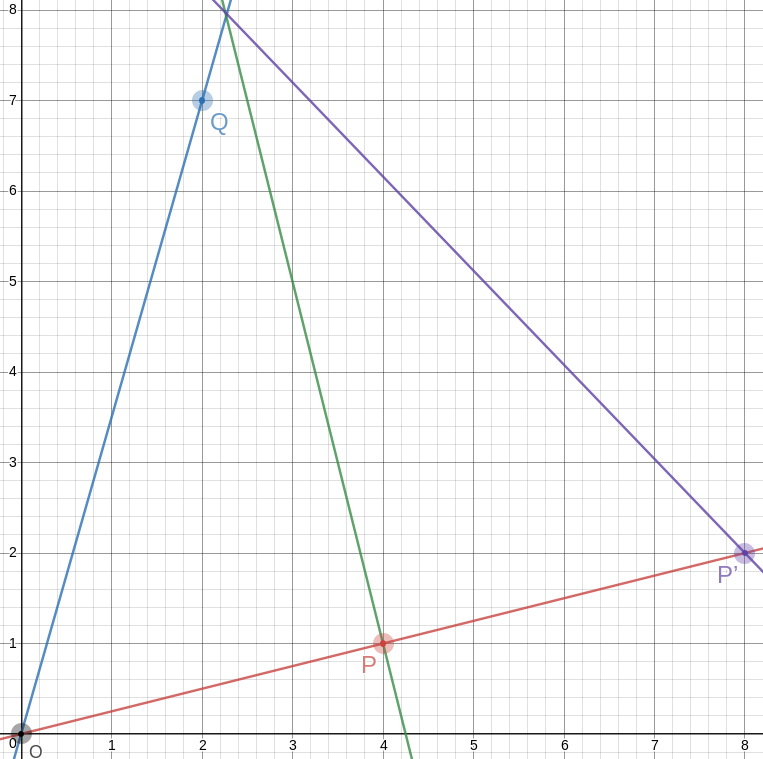

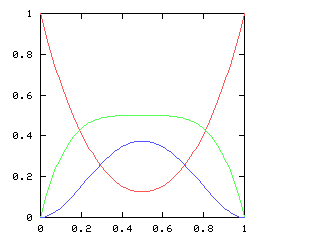

Omar Antolín points out an important consideration I missed: it may be necessary to subtract polygons. Consider !!\nk{30}6!!. This is obviously possible since !!6\mid 30!!. But there is a more interesting solution. We can add the pentagon !!\{0, 6, 12, 18, 24\}!! to the digons !!\{5, 20\}!! and !!\{10, 25\}!! to obtain the solution $$\{0,5,6,10,12,18, 20, 24, 25\}.$$

Then from this we can subtract the triangle !!\{0, 10, 20\}!! to obtain $$\{5, 6, 12, 18, 24, 25\},$$ a solution to !!\nk{30}6!! which is not a sum of regular polygons:

Thanks to Dave Long for pointing out a small but significant error, which I have corrected.
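
The polygon-subtraction example for !!n=30!! can be checked numerically. This is a minimal sketch, assuming slots are numbered !!0!! to !!29!! and represented as 30th roots of unity; the helper name `centrifuge_sum` is hypothetical.

```python
import cmath

def centrifuge_sum(n, slots):
    """Sum of the positions of the given slots, as points on the unit circle."""
    return sum(cmath.exp(2j * cmath.pi * s / n) for s in slots)

n = 30
pentagon = {0, 6, 12, 18, 24}
digons = {5, 20} | {10, 25}
triangle = {0, 10, 20}

nine = pentagon | digons   # balanced, because it is a disjoint union of balanced sets
six = nine - triangle      # the triangle is a balanced subset, so removing it keeps balance

print(sorted(six))                          # [5, 6, 12, 18, 24, 25]
print(abs(centrifuge_sum(n, six)) < 1e-9)   # True
```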

20250505

- Robin Houston points out this video, The centrifuge Problem with Holly Krieger, on the Numberphile channel.

[Other articles in category /math] permanent link

Wed, 30 Apr 2025

Proof by insufficient information

Content warning: rambly

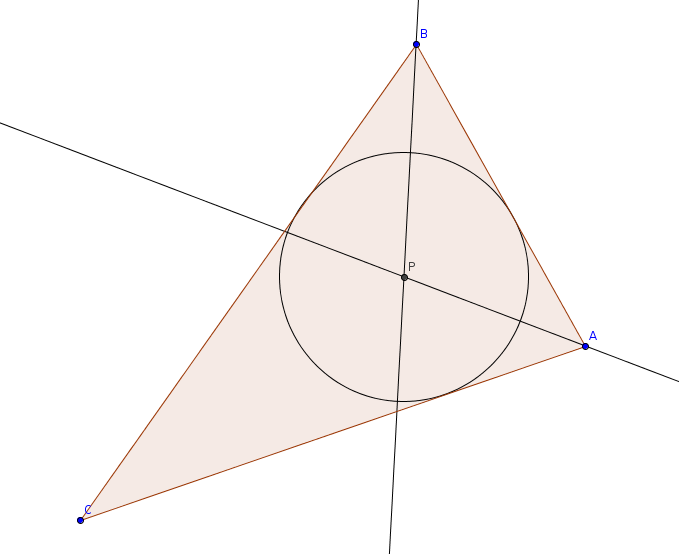

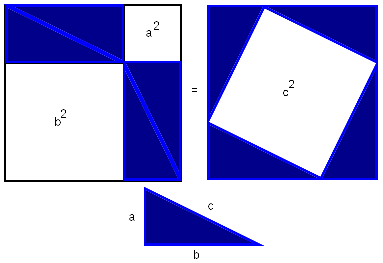

Given the coordinates of the three vertices of a triangle, can we find the area? Yes. If by no other method, we can use the Pythagorean theorem to find the lengths of the edges, and then Heron's formula to compute the area from that.

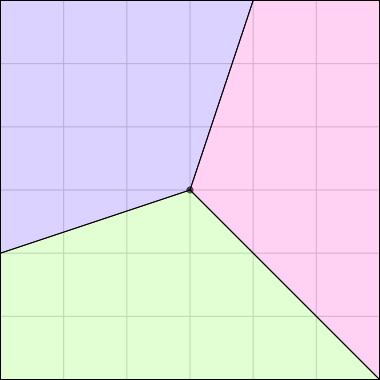

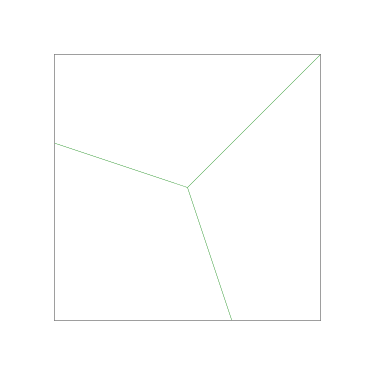

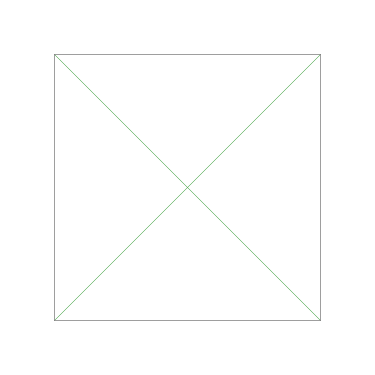

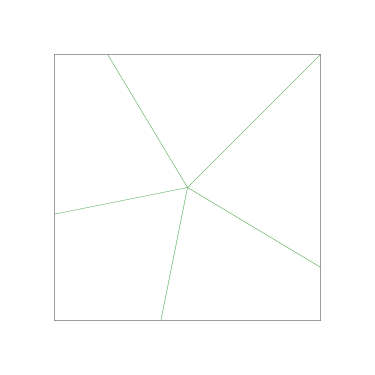

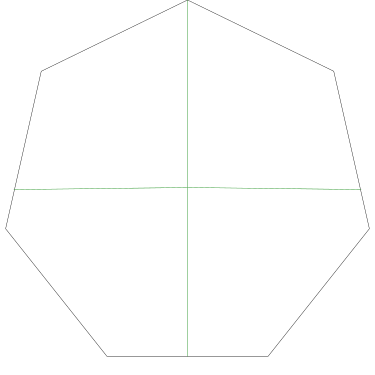

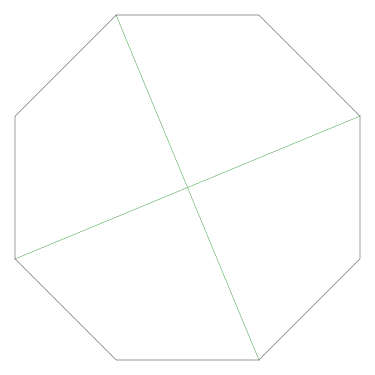

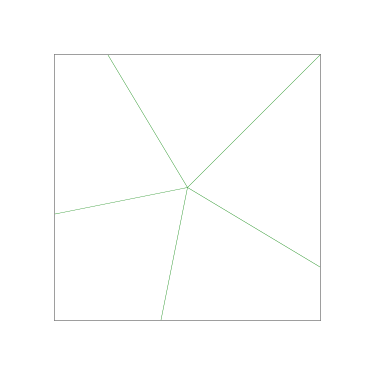

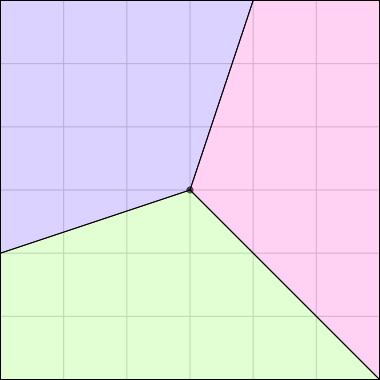

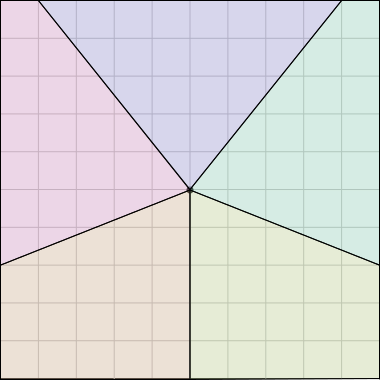

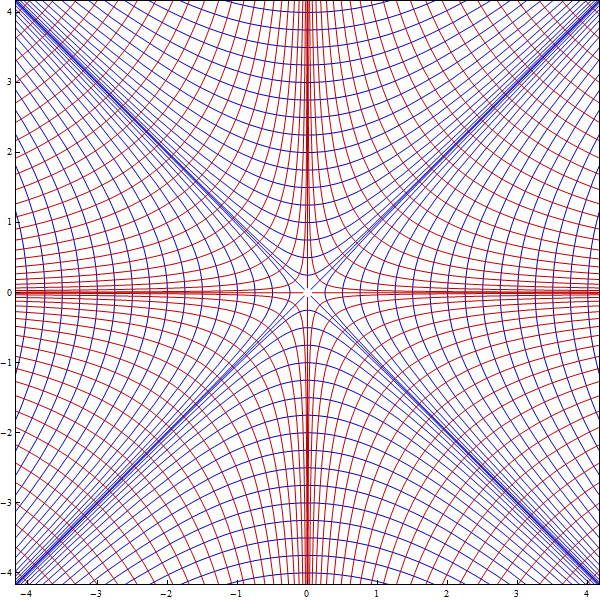

Now, given the coordinates of the four vertices of a quadrilateral, can we find the area? And the answer is, no, there is no method to do that, because there is not enough information:

These three quadrilaterals have the same vertices, but different areas. Just knowing the vertices is not enough; you also need their order.
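
Here is a small numerical illustration of the same point, using the shoelace formula on one set of four vertices taken in three different orders. The particular coordinates are made up for the example and are not the ones in the figure.

```python
def shoelace_area(points):
    """Area of the simple polygon whose vertices are visited in the given order."""
    total = 0
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2

# Hypothetical vertices: D lies inside triangle ABC, so every ordering
# below traces a simple (non-self-intersecting) quadrilateral.
A, B, C, D = (0, 0), (6, 0), (0, 6), (1, 2)
orders = [[A, B, C, D], [A, B, D, C], [A, D, B, C]]
areas = [shoelace_area(order) for order in orders]
print(areas)  # [15.0, 9.0, 12.0]
```

The vertex set is identical in all three calls; only the order changes, and the area changes with it.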

I suppose one could abstract this: Let !!f!! be the function that maps the set of vertices to the area of the quadrilateral. Can we calculate values of !!f!!? No, because there is no such !!f!!, it is not well-defined.

Put that way it seems less interesting. It's just another example of the principle that, just because you put together a plausible sounding description of some object, you cannot infer that such an object must exist. One of the all-time pop hits here is:

Let !!ε!! be the smallest [real / rational] number strictly greater than !!0!!…

which appears on Math SE quite frequently. Another one I remember is someone who asked about the volume of a polyhedron with exactly five faces, all triangles. This is a fallacy at the ontological level, not the mathematical level, so when it comes up I try to demonstrate it with a nonmathematical counterexample, usually something like “the largest purple hat in my closet” or perhaps “the current Crown Prince of the Ottoman Empire”. The latter is less good because it relies on the other person to know obscure stuff about the Ottoman Empire, whatever that is.

This is unfortunately also the error in Anselm's so-called “ontological proof of God”. A philosophically-minded friend of mine once remarked that being known for the discovery of the ontological proof of God is like being known for the discovery that you can wipe your ass with your hand.

Anyway, I'm digressing. The interesting part of the quadrilateral thing, to me, is not so much that !!f!! doesn't exist, but the specific reasoning that demonstrates that it can't exist. I think there are more examples of this proof strategy, where we prove nonexistence by showing there is not enough information for the thing to exist, but I haven't thought about it enough to come up with one.

There is a proof, the so-called “information-theoretic proof”, that a comparison sorting algorithm takes !!\Omega(n\log n)!! time in the worst case, based on comparing the amount of information gathered from the comparisons (one bit each) with that required to distinguish all !!n! !! possible permutations (!!\log_2 n! \approx n\log_2 n!! bits total). I'm not sure that's what I'm looking for here. But I'm also not sure it isn't, or why I feel it might be different.
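
To make the counting argument concrete, here is a short sketch that computes the number of one-bit answers needed to tell all !!n! !! permutations apart and compares it with !!n\log_2 n!!. The function name `min_comparisons` is an assumption of the example.

```python
import math

def min_comparisons(n):
    """Information-theoretic lower bound: ceil(log2(n!)) yes/no answers
    are needed to distinguish n! possible orderings."""
    return math.ceil(math.log2(math.factorial(n)))

for n in (3, 4, 5, 10, 100):
    print(n, min_comparisons(n), round(n * math.log2(n), 1))
```

The bound never exceeds !!n\log_2 n!!, but it grows at the same rate, which is all the lower-bound argument needs.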

Addenda

20250430

Carl Muckenhoupt suggests that logical independence proofs are of the same sort. He says, for example:

Is there a way to prove the parallel postulate from Euclid's other axioms? No, there is not enough information. Here are two geometric models that produce different results.

This is just the sort of thing I was looking for.

20250503

Rik Signes has allowed me to reveal that he was the source of the memorable disparagement of Anselm's dumbass argument.

[Other articles in category /math] permanent link

Tue, 25 Mar 2025

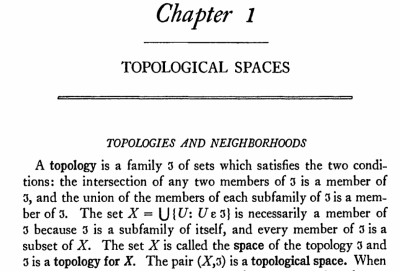

The mathematical past is a foreign country

A modern presentation of the Peano axioms looks like this:

- !!0!! is a natural number

- If !!n!! is a natural number, then so is the result of appending an !!S!! to the beginning of !!n!!

- Nothing else is a natural number

This baldly states that zero is a natural number.

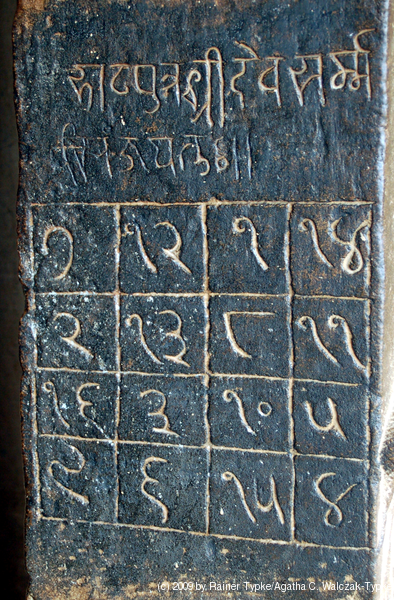

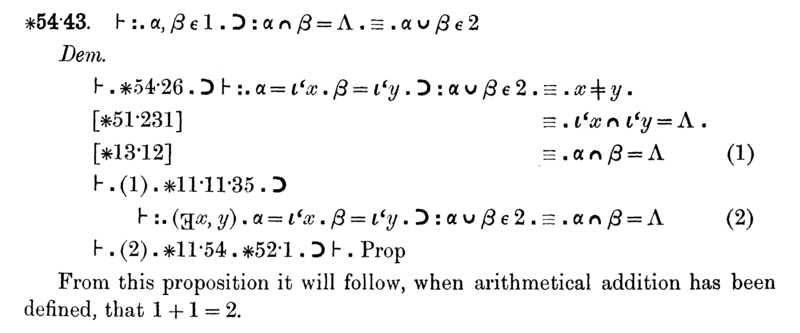

I think this is a 20th-century development. In 1889, the natural numbers started at !!1!!, not at !!0!!. Peano's Arithmetices principia, nova methodo exposita (1889) is the source of the Peano axioms and in it Peano starts the natural numbers at !!1!!, not at !!0!!:

There's axiom 1: !!1\in\Bbb N!!. No zero. I think starting at !! 0!! may be a Bourbakism.

In a modern presentation we define addition like this:

$$ \begin{array}{rrl} (i) & a + 0 = & a \\ (ii) & a + Sb = & S(a+b) \end{array} $$

Peano doesn't have zero, so he doesn't need item !!(i)!!. His definition just has !!(ii)!!.

But wait, doesn't his inductive definition need to have a base case? Maybe something like this?

$$ \begin{array}{rrl} (i') & a + 1 = & Sa \\ \end{array} $$

Nope, Peano has nothing like that. But surely the definition must have a base case? How can Peano get around that?

Well, by modern standards, he cheats!

Peano doesn't have a special notation like !!S!! for successor. Where a modern presentation might write !!Sa!! for the successor of the number !!a!!, Peano writes “!!a + 1!!”.

So his version of !!(ii)!! looks like this:

$$ a + (b + 1) = (a + b) + 1 $$

which is pretty much a symbol-for-symbol translation of !!(ii)!!. But if we try to translate !!(i')!! similarly, it looks like this:

$$ a + 1 = a + 1 $$

That's why Peano didn't include it: to him, it was tautological.

But to modern eyes that last formula is deceptive because it equivocates between the "!!+ 1!!" notation that is being used to represent the successor operation (on the right) and the addition operation that Peano is trying to define (on the left). In a modern presentation, we are careful to distinguish between our formal symbol for a successor, and our definition of the addition operation.

Peano, working pre-Frege and pre-Hilbert, doesn't have the same concept of what this means. To Peano, constructing the successor of a number, and adding a number to the constant !!1!!, are the same operation: the successor operation is just adding !!1!!.

But to us, !!Sa!! and !!a+S0!! are different operations that happen to yield the same value. To us, the successor operation is a purely abstract or formal symbol manipulation (“stick an !!S!! on the front”). The fact that it also has an arithmetic interpretation, related to addition, appears only once we contemplate the theorem $$\forall a. a + S0 = Sa.$$ There is nothing like this in Peano.
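
The modern view can be sketched quite literally in code. In this illustration a numeral is a string such as `SSS0`, the successor operation really does just stick an `S` on the front, and addition is defined separately by equations !!(i)!! and !!(ii)!!. The string representation and the function names are assumptions of the example.

```python
def succ(n):
    """Successor as pure symbol manipulation: stick an S on the front."""
    return "S" + n

def add(a, b):
    """Addition defined by recursion on the second argument."""
    if b == "0":
        return a                   # (i)  a + 0  = a
    return succ(add(a, b[1:]))     # (ii) a + Sb = S(a + b)

def numeral(k):
    """The numeral for the ordinary integer k, e.g. numeral(3) == 'SSS0'."""
    return "S" * k + "0"

# a + S0 and Sa are computed by different procedures, yet they agree:
print(add(numeral(4), "S0"), succ(numeral(4)))  # SSSSS0 SSSSS0
```

Checking a few cases is of course not a proof of the theorem, which needs induction.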

It's things like this that make it tricky to read older mathematics books. There are deep philosophical differences about what is being done and why, and they are not usually explicit.

Another example: in the 19th century, the abstract presentation of group theory had not yet been invented. The phrase “group” was understood to be short for “group of permutations”, and the important property was closure, specifically closure under composition of permutations. In a 20th century abstract presentation, the closure property is usually passed over without comment. In a modern view, the notation !!G_1\cup G_2!! is not even meaningful, because groups are not sets and you cannot just mix together two sets of group elements without also specifying how to extend the binary operation, perhaps via a free product or something. In the 19th century, !!G_1\cup G_2!! is perfectly ordinary, because !!G_1!! and !!G_2!! are just sets of permutations. One can then ask whether that set is a group — that is, whether it is closed under composition of permutations — and if not, what is the smallest group that contains it.

It's something like a foreign language of a foreign culture. You can try to translate the words, but the underlying ideas may not be the same.

Addendum 20250326

Simon Tatham reminds me that Peano's equivocation has come up here before. I previously discussed a Math SE post in which OP was confused because Bertrand Russell's presentation of the Peano axioms similarly used the notation “!!+ 1!!” for the successor operation, and did not understand why it was not tautological.

[Other articles in category /math] permanent link

Mon, 03 Feb 2025

Here's a Math SE pathology that bugs me. OP will ask "I'm trying to prove that groups !!A!! and !!B!! are isomorphic, I constructed this bijection but I see that it's not a homomorphism. Is it sufficient, or do I need to find a bijective homomorphism?"

And respondent !!R!! will reply in the comments "How can a function which is not an homomorphism prove that the groups are isomorphic?"

Which is literally the exact question that OP was asking! "Do I need to find … a homomorphism?"

My preferred reply would be something like "Your function is not enough. You are correct that it needs to be a homomorphism."

Because what problem did OP really have? Clearly, their problem is that they are not sure what it means for two groups to be isomorphic. For the respondent to ask "How can a function which is not an homomorphism prove that the groups are isomorphic" is unhelpful because they know that OP doesn't know the answer to that question.

OP knows too, that's exactly what their question was! They're trying to find out the answer to that exact question! OP correctly identified the gap in their own understanding. Then they formulated a clear, direct question that would address the gap.

THEY ARE ASKING THE EXACT RIGHT QUESTION AND !!R!! DID NOT ANSWER IT

My advice to people answering questions on MSE:

It's all very well for !!R!! to imagine that they are going to be brilliant like Socrates, conducting a dialogue for the ages that draws from OP the realization that the knowledge they sought was within them all along. Except:

- !!R!! is not Socrates

- Nobody has time for this nonsense

- The knowledge was not within them all along

MSE is a site where people go to get answers to their questions. That is its sole and stated purpose. If !!R!! is not going to answer questions, what are they even doing there? In my opinion, just wasting everyone's time.

Important pedagogical note

It's sufficient to say "Your function is not enough", which answers the question.

But it is much better to say "Your function is not enough. You are correct that it needs to be a homomorphism". That acknowledges the student's contribution. It tells them that their analysis of the difficulty was correct!

They may not know what it means for two groups to be isomorphic, but they do know something almost as good: that they are unsure what it means for two groups to be isomorphic. This is valuable knowledge.

This wise student recognises that they don't know. Socrates said that he was the wisest of all men, because he at least “knew that he didn't know”. If you want to take a lesson from Socrates, take that one, not his stupid theory that all knowledge is already within us.

OP did what students are supposed to do: they reflected on their knowledge, they realized it was inadequate, and they set about rectifying it. This deserves positive reinforcement.

Addenda

This is a real example. I have not altered it, because I am afraid that if I did you would think I was exaggerating.

I have been banging this drum for decades, but I will cut the scroll here. Expect a followup article.

20250206

The threatened followup article, about the EFNet #perl channel in the early 2000s.

[Other articles in category /math/se] permanent link

Thu, 22 Aug 2024

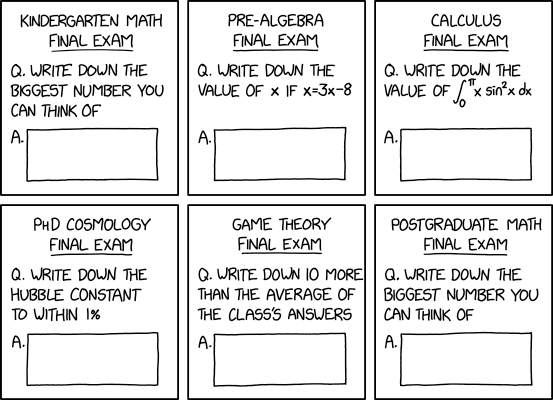

(Source: XKCD “Exam numbers”.)

This post is about the bottom center panel, “Game Theory final exam”.

I don't know much about game theory and I haven't seen any other discussion of this question. But I have a strategy I think is plausible and I'm somewhat pleased with.

(I assume that answers to the exam question must be real numbers — not !!\infty!! — and that “average” here is short for 'arithmetic mean'.)

First, I believe the other players and I must find a way to agree on what the average will be, or else we are all doomed. We can't communicate, so we should choose a Schelling point and hope that everyone else chooses the same one. Fortunately, there is only one distinguished choice: zero. So I will try to make the average zero and I will hope that others are trying to do the same.

If we succeed in doing this, any winning entry will therefore be !!10!!. Not all !!n!! players can win because the average must be !!0!!. But !!n-1!! can win, if the one other player writes !!-10(n-1)!!. So my job is to decide whether I will be the loser. I should select a random integer between !!0!! and !!n-1!!. If it is zero, I have drawn a short straw, and will write !!-10(n-1)!!. Otherwise I write !!10!!.

(The straw-drawing analogy is perhaps misleading. Normally, exactly one straw is short. Here, any or all of the straws might be short.)

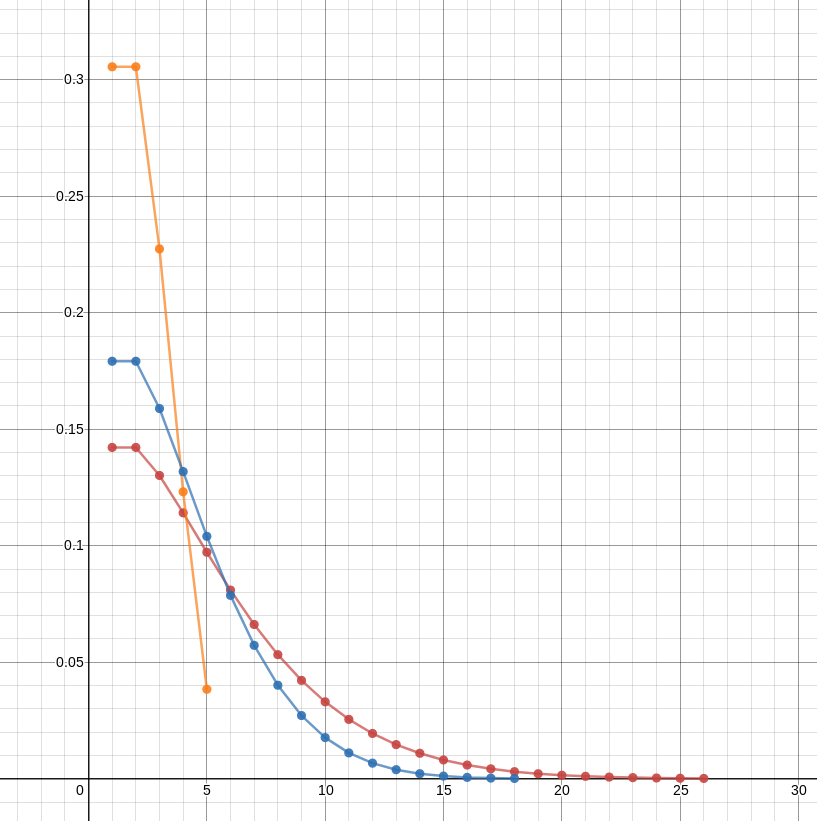

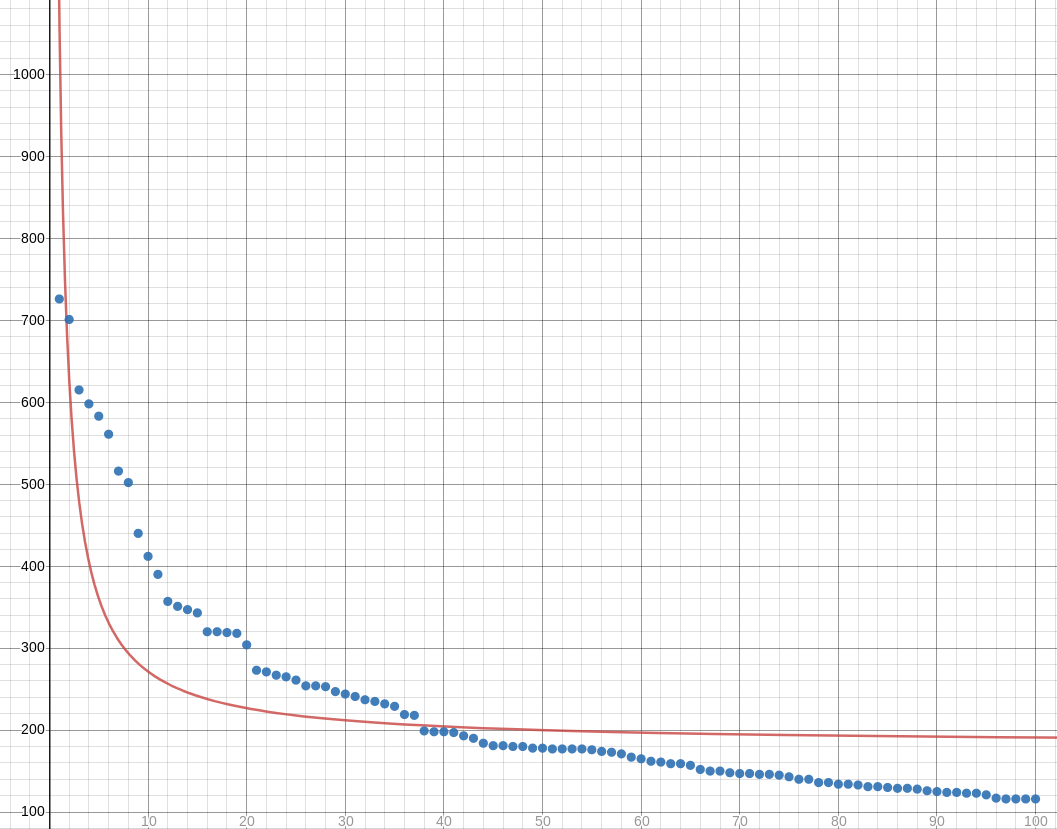

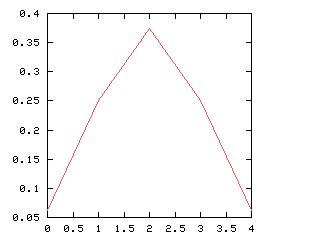

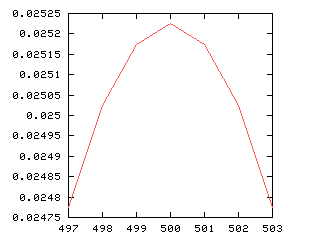

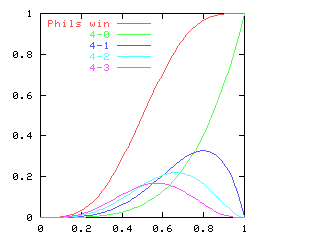

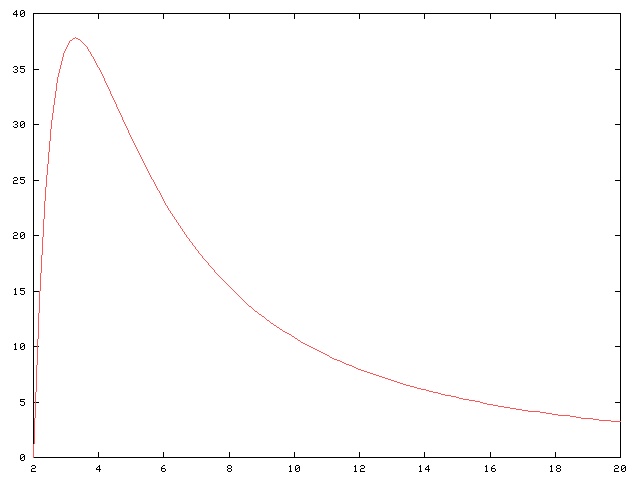

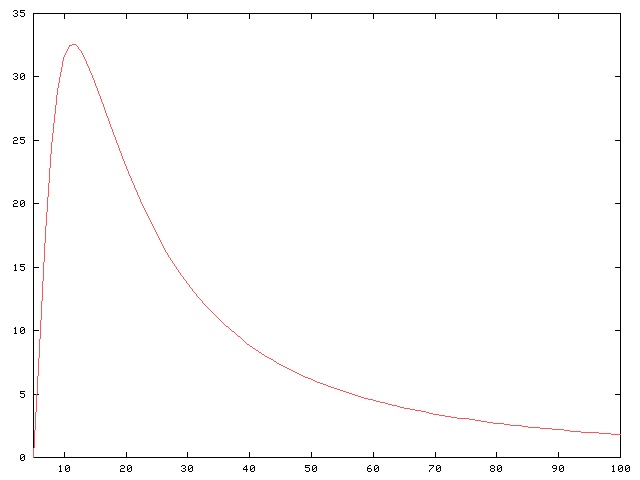

If everyone follows this strategy, then I will win if exactly one person draws a short straw and if that one person isn't me. The former has a probability that rapidly approaches !!\frac1e\approx 36.8\%!! as !!n!! increases, and the latter is !!\frac{n-1}n!!. In an !!n!!-person class, the probability of my winning is $$\left(\frac{n-1}n\right)^n$$ which is already better than !!\frac13!! when !!n= 6!!, and it increases slowly toward !!36.8\%!! after that.
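
The formula is easy to tabulate exactly. This is a small sketch using exact rational arithmetic; the function name `straw_win_probability` is an assumption of the example.

```python
from fractions import Fraction

def straw_win_probability(n):
    """Chance that exactly one of n players draws the short straw
    (probability 1/n each) and that it is not me: ((n-1)/n)**n."""
    return Fraction(n - 1, n) ** n

for n in (2, 5, 6, 30, 1000):
    print(n, float(straw_win_probability(n)))

# Smallest class size for which the chance exceeds one third.
first = next(n for n in range(2, 100)
             if straw_win_probability(n) > Fraction(1, 3))
print(first)  # 6
```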

Some miscellaneous thoughts:

The whole thing depends on my idea that everyone will agree on !!0!! as a Schelling point. Is that even how Schelling points work? Maybe I don't understand Schelling points.

I like that the probability !!\frac1e!! appears. It's surprising how often this comes up, often when multiple agents try to coordinate without communicating. For example, in ALOHAnet a number of ground stations independently try to send packets to a single satellite transceiver, but if more than one tries to send a packet at a particular time, the packets are garbled and must be retransmitted. At most !!\frac1e!! of the available bandwidth can be used, the rest being lost to packet collisions.

The first strategy I thought of was plausible but worse: flip a coin, and write down !!10!! if it is heads and !!-10!! if it is tails. With this strategy I win if exactly !!\frac n2!! of the class flips heads and if I do too. The probability of this happening is only $$\frac{n\choose n/2}{2^n}\cdot \frac12 \approx \frac1{\sqrt{2\pi n}}.$$ Unlike the other strategy, this decreases to zero as !!n!! increases, and in no case is it better than the first strategy. It also fails badly if the class contains an odd number of people.
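
For comparison, here is a sketch that computes the coin-flip probability exactly, checks it against the approximation !!\frac1{\sqrt{2\pi n}}!!, and sets it beside the short-straw strategy. The function names are assumptions of the example.

```python
from fractions import Fraction
from math import comb, pi, sqrt

def coin_win_probability(n):
    """Chance of winning if everyone writes 10 or -10 on a fair coin flip.
    I win only if exactly n/2 players write 10 and I am one of them."""
    if n % 2:
        return Fraction(0)          # an odd class can never average zero
    return Fraction(comb(n, n // 2), 2 ** n) / 2

def straw_win_probability(n):
    """The short-straw strategy, for comparison: ((n-1)/n)**n."""
    return Fraction(n - 1, n) ** n

for n in (2, 4, 10, 100):
    print(n,
          float(coin_win_probability(n)),
          1 / sqrt(2 * pi * n),
          float(straw_win_probability(n)))
```

For !!n=2!! the two strategies tie at !!\frac14!!; after that the coin-flip strategy falls steadily behind.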

Thanks to Brian Lee for figuring out the asymptotic value of !!4^{-n}\binom{2n}{n}!! so I didn't have to.

Just because this was the best strategy I could think of in no way means that it is the best there is. There might have been something much smarter that I did not think of, and if there is then my strategy will sabotage everyone else.

Game theorists do think of all sorts of weird strategies that you wouldn't expect could exist. I wrote an article about one a few years back.

Going in the other direction, even if !!n-1!! of the smartest people all agree on the smartest possible strategy, if the !!n!!th person is Leeroy Jenkins, he is going to ruin it for everyone.

If I were grading this exam, I might give full marks to anyone who wrote down either !!10!! or !!-10(n-1)!!, even if the average came out to something else.

For a similar and also interesting but less slippery question, see Wikipedia's article on Guess ⅔ of the average. Much of the discussion there is directly relevant. For example, “For Nash equilibrium to be played, players would need to assume both that everyone else is rational and that there is common knowledge of rationality. However, this is a strong assumption.” LEEROY JENKINS!!

People sometimes suggest that the real Schelling point is for everyone to write !!\infty!!. (Or perhaps !!-\infty!!.)

Feh.

If the class knows ahead of time what the question will be, the strategy becomes a great deal more complicated! Say there are six students. At most five of them can win. So they get together and draw straws to see who will make a sacrifice for the common good. Vidkun gets the (unique) short straw, and agrees to write !!-50!!. The others accordingly write !!10!!, but they discover that instead of !!-50!!, Vidkun has written !!22!! and is the only person to have guessed correctly.

I would be interested to learn if there is a playable Nash equilibrium under these circumstances. It might be that the optimal strategy is for everyone to play as if they didn't know what the question was beforehand!

Suppose the players agree to follow the strategy I outlined, each rolling a die and writing !!-50!! with probability !!\frac16!!, and !!10!! otherwise. And suppose that although the others do this, Vidkun skips the die roll and unconditionally writes !!10!!. As before, !!n-1!! players (including Vidkun) win if exactly one of them rolls zero. Vidkun's chance of winning increases. Intuitively, the other players' chances of winning ought to decrease. But by how much? I think I keep messing up the calculation because I keep getting zero. If this were actually correct, it would be a fascinating paradox!
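
One way to sidestep the algebra is to enumerate every combination of choices and add up the probabilities exactly. The sketch below does this under stated assumptions: each player independently writes !!-10(n-1)!! with their own fixed probability and !!10!! otherwise, and a player wins when their answer equals the class average plus !!10!!. The function name `win_probabilities` is hypothetical.

```python
from fractions import Fraction
from itertools import product

def win_probabilities(sacrifice_probs):
    """Exact win probability for each player, given each player's
    probability of writing the sacrificial answer -10(n-1)."""
    n = len(sacrifice_probs)
    low, high = -10 * (n - 1), 10
    wins = [Fraction(0)] * n
    for choice in product([True, False], repeat=n):
        p = Fraction(1)
        for sacrifices, q in zip(choice, sacrifice_probs):
            p *= q if sacrifices else 1 - q
        if p == 0:
            continue
        answers = [low if s else high for s in choice]
        target = Fraction(sum(answers), n) + 10   # 10 more than the average
        for i, answer in enumerate(answers):
            if answer == target:
                wins[i] += p
    return wins

sixth = Fraction(1, 6)
everyone_rolls = win_probabilities([sixth] * 6)
vidkun_defects = win_probabilities([Fraction(0)] + [sixth] * 5)  # player 0 is Vidkun
print([float(p) for p in everyone_rolls])
print([float(p) for p in vidkun_defects])
```

Under these assumptions the enumeration gives Vidkun !!\frac{3125}{7776}\approx 40.2\%!! and each of the others !!\frac{625}{1944}\approx 32.2\%!!, compared with !!\left(\frac56\right)^6\approx 33.5\%!! when everyone rolls. So the others' loss appears to be small but not zero.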

[Other articles in category /math] permanent link

Mon, 05 Aug 2024

Everyday examples of morphisms with one-sided inverses

Like almost everyone except Alexander Grothendieck, I understand things better with examples. For instance, how do you explain that

$$(f\circ g)^{-1} = g^{-1} \circ f^{-1}?$$

Oh, that's easy. Let !!f!! be putting on your shoes and !!f^{-1}!! be taking off your shoes. And let !!g!! be putting on your socks and !!g^{-1}!! be taking off your socks.

Now !!f\circ g!! is putting on your socks and then your shoes. And !!g^{-1} \circ f^{-1}!! is taking off your shoes and then your socks. You can't !!f^{-1} \circ g^{-1}!!, that says to take your socks off before your shoes.

(I see a topologist jumping up and down in the back row, desperate to point out that the socks were never inside the shoes to begin with. Sit down please!)

Sometimes operations commute, but not in general. If you're teaching group theory to high school students and they find nonabelian operations strange, the shoes-and-socks example is an unrebuttable demonstration that not everything is abelian.

(Subtraction is not a good example here, because subtracting !!a!! and then !!b!! is the same as subtracting !!b!! and then !!a!!. When we say that subtraction isn't commutative, we're talking about something else.)

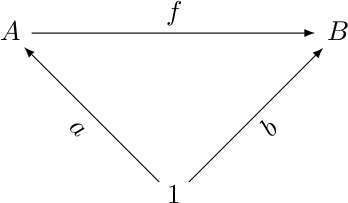

Anyway this weekend I was thinking about very very elementary category theory (the only kind I know) and about left and right inverses. An arrow !!f : A\to B!! has a left inverse !!g!! if

$$g\circ f = 1_A.$$

Examples of this are easy. If !!f!! is putting on your shoes, then !!g!! is taking them off again. !!A!! is the state of shoelessness and !!B!! is the state of being shod. This !!f!! has a left inverse and no right inverse. You can't take the shoes off before you put them on.

But I wanted an example of an !!f!! with right inverse and no left inverse:

$$f\circ h = 1_B$$

and I was pretty pleased when I came up with one involving pouring the cream pitcher into your coffee, which has no left inverse that gets you back to black coffee. But you can ⸢unpour⸣ the cream if you do it before mixing it with the coffee: if you first put the cream back into the carton in the refrigerator, then the pouring does get you to black coffee.

But now I feel silly. There is a trivial theorem that if !!g!! is a left inverse of !!f!!, then !!f!! is a right inverse of !!g!!. So the shoe example will do for both. If !!f!! is putting on your shoes, then !!g!! is taking them off again. And just as !!f!! has a left inverse and no right inverse, because you can't take your shoes off before putting them on, !!g!! has a right inverse (!!f!!) and no left inverse, because you can't take your shoes off before putting them on.
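
For readers who prefer numbers to footwear, the same trivial theorem can be seen with a pair of functions on the natural numbers. This is a stand-in chosen for the example, not something from the shoe analogy: doubling plays the role of !!f!! and halving (rounding down) plays the role of !!g!!.

```python
def f(n):
    """Doubling: injective on the naturals, but it never produces an odd number."""
    return 2 * n

def g(n):
    """Halving, rounding down: undoes f, but forgets whether n was odd."""
    return n // 2

# g is a left inverse of f, so f is a right inverse of g: g(f(n)) == n always.
print(all(g(f(n)) == n for n in range(1000)))   # True

# But f(g(n)) is not the identity, so g is not a right inverse of f.
print(f(g(3)))                                  # 2
```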

This reminds me a little of the time I tried to construct an example to show that “is a blood relation of” is not a transitive relation. I had this very strange and elaborate example involving two sets of sisters-in-law. But the right example is that almost everyone is the blood relative of both of their parents, who nevertheless are not (usually) blood relations.

[Other articles in category /math] permanent link

Fri, 05 Jul 2024

A triviality about numbers that look like abbc

Looking at license plates the other day I noticed that if you have a four-digit number !!N!! with digits !!abbc!!, and !!a+c=b!!, then !!N!! will always be a multiple of !!37!!. For example, !!4773 = 37\cdot 129!! and !!1776 = 37\cdot 48!!.

Mathematically this is uninteresting. The proof is completely trivial. (Such a number is simply !!1110a +111c!!, and !!111=3\cdot 37!!.)
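
If your eight- or nine-year-old would rather see it checked than proved, a brute-force sweep over all such numbers is short. The generator name `abbc_numbers` is an assumption of the example.

```python
def abbc_numbers():
    """Yield every four-digit number with digits a, b, b, c where a + c = b."""
    for a in range(1, 10):            # leading digit cannot be zero
        for c in range(0, 10 - a):    # keeps b = a + c a single digit
            b = a + c
            yield 1000 * a + 110 * b + c

numbers = list(abbc_numbers())
print(len(numbers))                          # 45
print(all(n % 37 == 0 for n in numbers))     # True
```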

But I thought that if someone had pointed this out to me when I was eight or nine, I would have been very pleased. Perhaps if you have a mathematical eight- or nine-year-old in your life, they will be pleased if you share this with them.

[Other articles in category /math] permanent link

Tue, 23 Apr 2024

Well, I guess I believe everything now!

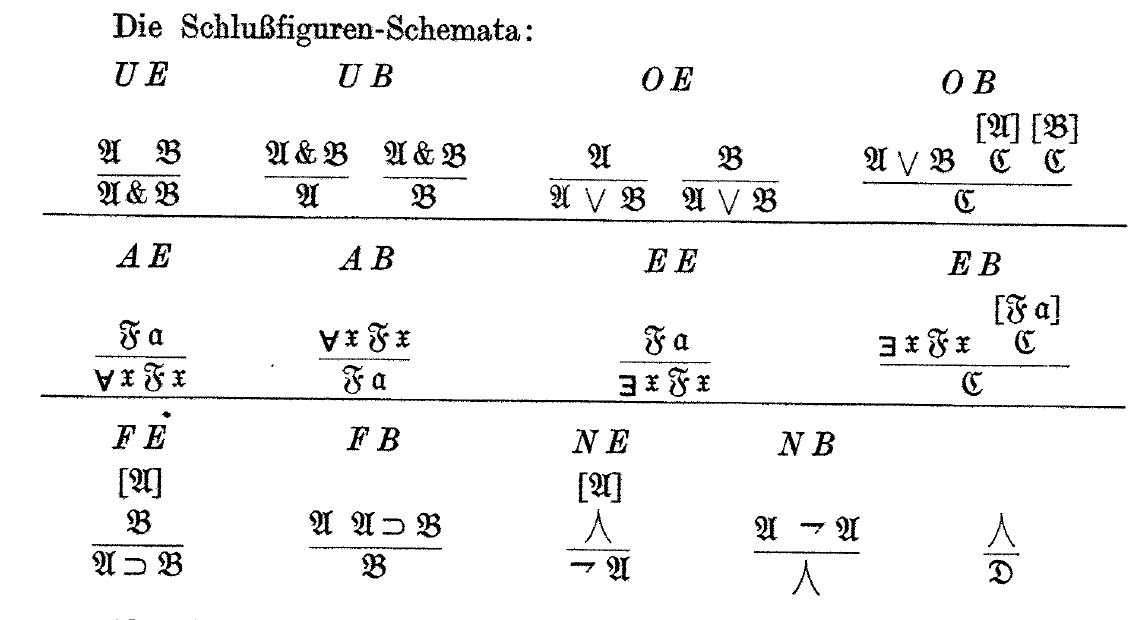

The principle of explosion is that in an inconsistent system everything is provable: if you prove both !!P!! and not-!!P!! for any !!P!!, you can then conclude !!Q!! for any !!Q!!:

$$(P \land \lnot P) \to Q.$$

This is, to put it briefly, not intuitive. But it is awfully hard to get rid of because it appears to follow immediately from two principles that are intuitive:

If we can prove that !!A!! is true, then we can prove that at least one of !!A!! or !!B!! is true. (In symbols, !!A\to(A\lor B)!!.)

If we can prove that at least one of !!A!! or !!B!! is true, and we can prove that !!A!! is false, then we may conclude that !!B!! is true. (Symbolically, !!(A\lor B) \to (\lnot A\to B)!!.)

Then suppose that we have proved that !!P!! is both true and false. Since we have proved !!P!! true, we have proved that at least one of !!P!! or !!Q!! is true. But because we have also proved that !!P!! is false, we may conclude that !!Q!! is true. Q.E.D.
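
In ordinary two-valued logic all three formulas can be confirmed by checking every row of the truth table. The sketch below does exactly that; the helper names are assumptions of the example, and it says nothing about paraconsistent systems, which reject this semantics.

```python
from itertools import product

def implies(p, q):
    """Material implication: p -> q is false only when p is true and q is false."""
    return (not p) or q

def is_tautology(formula, arity):
    """True if the formula holds under every assignment of truth values."""
    return all(formula(*values)
               for values in product([False, True], repeat=arity))

explosion = lambda p, q: implies(p and not p, q)
or_intro = lambda a, b: implies(a, a or b)
disjunctive_syllogism = lambda a, b: implies(a or b, implies(not a, b))

print(is_tautology(or_intro, 2))               # True
print(is_tautology(disjunctive_syllogism, 2))  # True
print(is_tautology(explosion, 2))              # True
```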

This proof is as simple as can be. If you want to get rid of this, you have a hard road ahead of you. You have to follow Graham Priest into the wilderness of paraconsistent logic.

Raymond Smullyan observes that although logic is supposed to model ordinary reasoning, it really falls down here. Nobody, on discovering the fact that they hold contradictory beliefs, or even a false one, concludes that therefore they must believe everything. In fact, says Smullyan, almost everyone does hold contradictory beliefs. His argument goes like this:

Consider all the things I believe individually, !!B_1, B_2, \ldots!!. I believe each of these, considered separately, is true.

However, I also believe that I'm not infallible, and that at least one of !!B_1, B_2, \ldots!! is false, although I don't know which ones.

Therefore I believe both !!\bigwedge B_i!! (because I believe each of the !!B_i!! separately) and !!\lnot\bigwedge B_i!! (because I believe that not all the !!B_i!! are true).

And therefore, by the principle of explosion, I ought to believe that I believe absolutely everything.

Well anyway, none of that was exactly what I planned to write about. I was pleased because I noticed a very simple, specific example of something I believed that was clearly inconsistent. Today I learned that K2, the second-highest mountain in the world, is in Asia, near the border of Pakistan and westernmost China. I was surprised by this, because I had thought that K2 was in Kenya somewhere.

But I also knew that the highest mountain in Africa was Kilimanjaro. So my simultaneous beliefs were flatly contradictory:

- K2 is the second-highest mountain in the world.

- Kilimanjaro is not the highest mountain in the world, but it is the highest mountain in Africa

- K2 is in Africa

Well, I guess until this morning I must have believed everything!

[Other articles in category /math/logic] permanent link

Sat, 13 Apr 2024

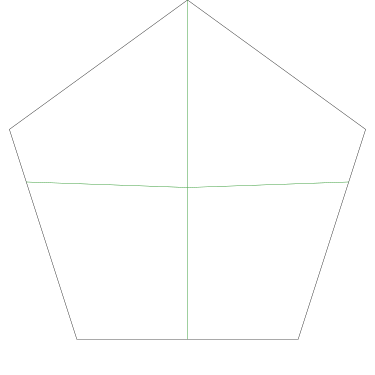

3-coloring the vertices of an icosahedron

I don't know that I have a point about this, other than that it makes me sad.

A recent Math SE post (since deleted) asked:

How many different ways are there to color the vertices of the icosahedron with 3 colors such that no two adjacent vertices have the same color?

I would love to know what was going on here. Is this homework? Just someone idly wondering?

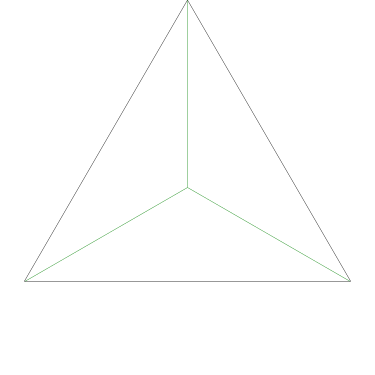

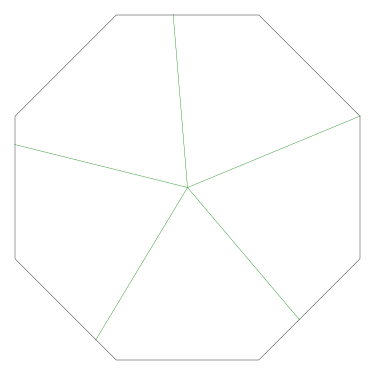

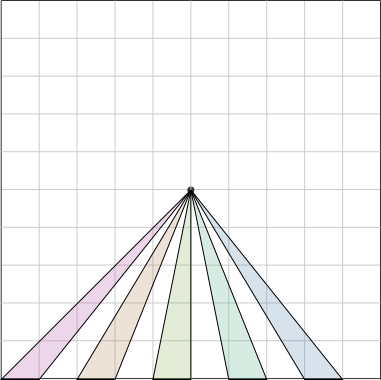

Because the interesting thing about this question is (assuming that the person knows what an icosahedron is, etc.) it should be solvable in sixty seconds by anyone who makes the least effort. If you don't already see it, you should try. Try what? Just take an icosahedron, color the vertices a little, see what happens. Here, I'll help you out, here's a view of part of the end of an icosahedron, although I left out most of it. Try to color it with 3 colors so that no two adjacent vertices have the same color, surely that will be no harder than coloring the whole icosahedron.

The explanation below is a little belabored, it's what OP would have discovered in seconds if they had actually tried the exercise.

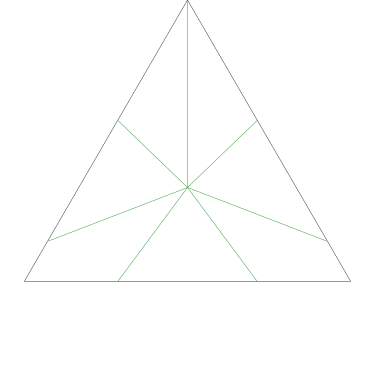

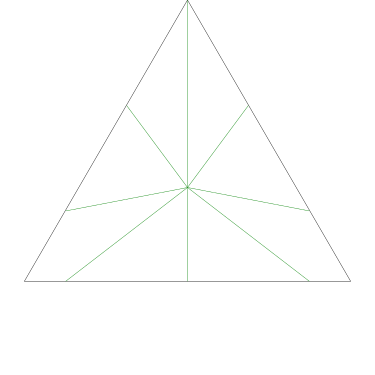

Let's color the middle vertex, say blue.

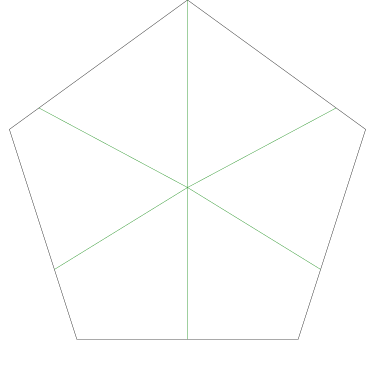

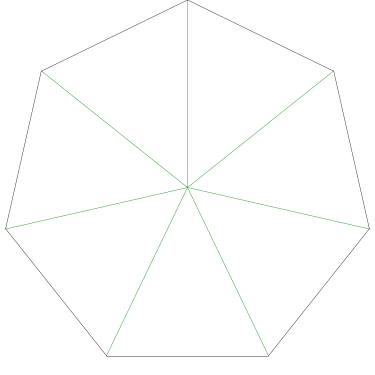

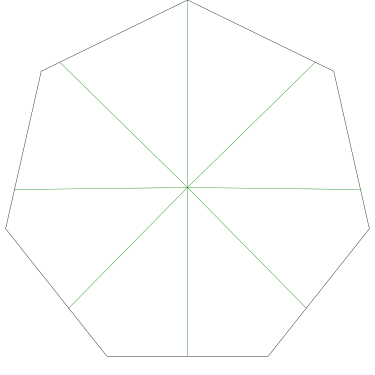

The five vertices around the edge can't be blue, they must be the other two colors, say red and green, and the two colors must alternate:

Ooops, there's no color left for the fifth vertex.

The phrasing of the question, “how many” makes the problem sound harder than it is: the answer is zero because we can't even color half the icosahedron.
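“Try it and see” can also be handed to a computer. The sketch below is one hypothetical way to do it: the vertex numbering (0 on top, 1 to 5 and 6 to 10 in two rings, 11 on the bottom) and the function names are assumptions made for this example. It counts proper 3-colorings of the six-vertex cap and of the whole icosahedron by brute force.

```python
from itertools import product

def icosahedron_edges():
    """Edges of an icosahedron: vertex 0 on top, rings 1-5 and 6-10, vertex 11 below."""
    edges = []
    for i in range(5):
        upper, next_upper = 1 + i, 1 + (i + 1) % 5
        lower, next_lower = 6 + i, 6 + (i + 1) % 5
        edges.append((0, upper))            # top cap
        edges.append((upper, next_upper))   # upper ring
        edges.append((upper, lower))        # zigzag band between the rings
        edges.append((upper, next_lower))
        edges.append((lower, next_lower))   # lower ring
        edges.append((lower, 11))           # bottom cap
    return edges

def count_colorings(num_vertices, edges, num_colors=3):
    """Count colorings in which no edge joins two vertices of the same color."""
    return sum(
        all(coloring[u] != coloring[v] for u, v in edges)
        for coloring in product(range(num_colors), repeat=num_vertices)
    )

edges = icosahedron_edges()
# The cap: the top vertex plus the ring of five around it.
cap = [(u, v) for u, v in edges if u < 6 and v < 6]
print(count_colorings(6, cap), count_colorings(12, edges))
```

Both counts come out to zero, and the cap alone is already enough to settle the question.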

If OP had even tried, even a little bit, they could have discovered this. They didn't need to have had the bright idea of looking at a partial icosahedron. They could have grabbed one of the pictures from Wikipedia and started coloring the vertices. They would have gotten stuck the same way. They didn't have to try starting in the middle of my diagram, starting at the edge works too: if the top vertex is blue, the three below it must be green-red-green, and then the bottom two are forced to be blue, which isn't allowed. If you just try it, you win immediately. The only way to lose is not to play.

Before the post was deleted I suggested in a comment “Give it a try, see what happens”. I genuinely hoped this might be helpful. I'll probably never know if it was.

Like I said, I would love to know what was going on here. I think maybe this person could have used a dose of Lower Mathematics.

Just now I wondered for the first time: what would it look like if I were to try to list the principles of Lower Mathematics? “Try it and see” is definitely in the list.

Then I thought: How To Solve It has that sort of list and something like “try it and see” is probably on it. So I took it off the shelf and found: “Draw a figure”, “If you cannot solve the proposed problem”, “Is it possible to satisfy the condition?”. I didn't find anything called “fuck around with it and see what you learn” but it is probably in there under a different name, I haven't read the book in a long time. To this important principle I would like to add “fuck around with it and maybe you will stumble across the answer by accident” as happened here.

Mathematics education is too much method, not enough heuristic.

[Other articles in category /math] permanent link

Wed, 06 Mar 2024

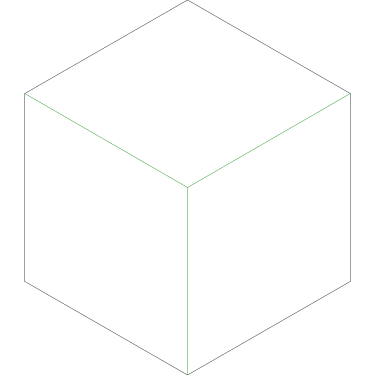

Optimal boxes with and without lids

Sometime around 1986 or so I considered the question of the dimensions that a closed cuboidal box must have to enclose a given volume but use as little material as possible. (That is, if its surface area should be minimized.) It is an elementary calculus exercise and it is unsurprising that the optimal shape is a cube.

Then I wondered: what if the box is open at the top, so that it has only five faces instead of six? What are the optimal dimensions then?

I did the calculus, and it turned out that the optimal lidless box has a square base like the cube, but it should be exactly half as tall.

For example the optimal box-with-lid enclosing a cubic meter is a 1×1×1 cube with a surface area of !!6!!.

Obviously if you just cut off the lid of the cubical box and throw it away you have a one-cubic-meter lidless box with a surface area of !!5!!. But the optimal box-without-lid enclosing a cubic meter is shorter, with a larger base. It has dimensions $$2^{1/3} \times 2^{1/3} \times \frac{2^{1/3}}2$$

and a total surface area of only !!3\cdot2^{2/3} \approx 4.76!!. It is what you would get if you took an optimal complete box, a cube, that enclosed two cubic meters, cut it in half, and threw the top half away.
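For reference, here is a sketch of how the calculus can go. The names are assumptions introduced for this sketch: !!x!! is the side of the base, !!h!! is the height, and !!V!! is the enclosed volume, and the base is taken to be square rather than proved to be. Since !!x^2h = V!!, the area of the five faces is

$$S(x) = x^2 + 4xh = x^2 + \frac{4V}{x}, \qquad S'(x) = 2x - \frac{4V}{x^2} = 0 \implies x^3 = 2V, \qquad h = \frac{V}{x^2} = \frac{x}{2}.$$

With !!V = 1!! this gives !!x = 2^{1/3}!! and !!h = \frac{2^{1/3}}2!!, and the area is !!2^{2/3} + \frac{4}{2^{1/3}} = 3\cdot 2^{2/3}!!, in agreement with the figures above.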

I found it striking that the optimal lidless box was the same proportions as the optimal complete box, except half as tall. I asked Joe Keane if he could think of any reason why that should be obviously true, without requiring any calculus or computation. “Yes,” he said. I left it at that, imagining that at some point I would consider it at greater length and find the quick argument myself.

Then I forgot about it for a while.

Last week I remembered again and decided it was time to consider it at greater length and find the quick argument myself. Here's the explanation.

Take the cube and saw it into two equal halves. Each of these is a lidless five-sided box like the one we are trying to construct. The original cube enclosed a certain volume with the minimum possible material. The two half-cubes each enclose half the volume with half the material.

If there were a way to do better than that, you would be able to make a lidless box enclose half the volume with less than half the material. Then you could take two of those and glue them back together to get a complete box that enclosed the original volume with less than the original amount of material. But we already knew that the cube was optimal, so that is impossible.

[Other articles in category /math] permanent link

Sat, 02 Dec 2023

Content warning: grumpy complaining. This was a frustrating month.

Need an intuitive example for how "P is necessary for Q" means "Q⇒P"?

This kind of thing comes up pretty often. Why are there so many ways that the logical expression !!Q\implies P!! can appear in natural language?

- If !!Q!!, then !!P!!

- !!Q!! implies !!P!!

- !!P!! if !!Q!!

- !!Q!! is sufficient for !!P!!

- !!P!! is necessary for !!Q!!

Strange, isn't it? !!Q\land P!! is much simpler: “Both !!Q!! and !!P!! are true” is pretty much it.

Anyway this person wanted an intuitive example of “!!P!! is necessary for !!Q!!”

I suggested:

Suppose that it is necessary to have a ticket (!!P!!) in order to board a certain train (!!Q!!). That is, if you board the train (!!Q!!), then you have a ticket (!!P!!).

Again this follows the principle that rule enforcement is a good thing when you are looking for intuitive examples. Keeping ticketless people off the train is something that the primate brain is wired up to do well.

My first draft had “board a train” in place of “board a certain train”. One commenter complained:

many people travel on trains without a ticket, worldwide

I was (and am) quite disgusted by this pettifogging.

I said “Suppose that…”. I was not claiming that the condition applies to every train in all of history.

OP had only asked for an example, not some universal principle.

Does ...999.999... = 0?

This person is asking one of those questions that often puts Math StackExchange into the mode of insisting that the idea is completely nonsensical, when it is actually very close to perfectly mundane mathematics. (Previously: [1] [2] [3] ) That didn't happen this time, which I found very gratifying.

Normally, decimal numerals have a finite integer part on the left of the decimal point, and an infinite fractional part on the right of the decimal point, as with (for example) !!\frac{13}{3} = 4.333\ldots!!. It turns out to work surprisingly well to reverse this, allowing an infinite integer part on the left and a finite fractional part on the right, for example !!\frac1{15} = \ldots 333.4!!. For technical reasons we usually do this in base !!p!! where !!p!! is prime; it doesn't work as well in base !!10!!. But it works well enough to use: If we have the base-10 numeral !!\ldots 9999.0!! and we add !!1!!, using the ordinary elementary-school right-to-left addition algorithm, the carry in the units place goes to the tens place as usual, then the next carry goes to the hundreds place and so on to infinity, leaving us with !!\ldots 0000.0!!, so that !!\ldots 9999.0!! can be considered a representation of the number !!-1!!, and that means we don't need negation signs.

In fact this system is fundamental to the way numbers are represented in computer arithmetic. Inside the computer the integer !!-1!! is literally represented as the base-2 numeral !!11111111\;11111111\;11111111\;11111111!!, and when we add !!1!! to it the carry bit wanders off toward infinity on the left. (In the computer the numeral is finite, so we simulate infinity by just discarding the carry bit when it gets too far away.)
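The discarded carry is easy to watch in a few lines of Python. This is a minimal sketch, assuming a 32-bit word; the names `BITS`, `MASK`, and `to_twos_complement` are made up for the example. (Python's own integers are unbounded, and its `&` operator treats a negative number as if it had infinitely many one-bits on the left, which is the same idea again.)

```python
BITS = 32
MASK = (1 << BITS) - 1          # 32 one-bits, i.e. 0xFFFFFFFF

def to_twos_complement(n, bits=BITS):
    """Return the low `bits` bits of n as an unsigned integer."""
    return n & ((1 << bits) - 1)

minus_one = to_twos_complement(-1)
as_bits = format(minus_one, "032b")

# Add 1 and discard the carry that runs off the left end.
wrapped = (minus_one + 1) & MASK

# The base-10 analogue: keep only the last k digits of ...9999 + 1.
k = 12
nines = 10**k - 1
wrapped_decimal = (nines + 1) % 10**k

print(as_bits, wrapped, wrapped_decimal)
```

In both bases, adding !!1!! to the all-nines (or all-ones) numeral leaves nothing but zeroes once the carry is thrown away.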

Once you've seen this a very reasonable next question is whether you can have numbers that have an infinite sequence of digits on both sides. I think something goes wrong here — for one thing it is no longer clear how to actually do arithmetic. For the infinite-to-the-left numerals arithmetic is straightforward (elementary-school algorithms go right-to-left anyway) and for the standard infinite-to-the-right numerals we can sort of fudge it. (Try multiplying the infinite decimal for !!\sqrt 2!! by itself and see what trouble you get into. Or simpler: What's !!4.666\ldots \times 3!!?)

OP's actual question was: If !!\ldots 9999.0 !! can be considered to represent !!-1!!, and if !!0.9999\ldots!! can be considered to represent !!1!!, can we add them and conclude that !!\ldots 9999.9999\ldots = 0!!?

This very deserving question got a good answer from someone who was not me. This was a relief, because my shameful answer was pure shitpostery. It should have been heavily downvoted, but wasn't. The gods of Math SE karma are capricious.

Why define addition with successor?

Ugh, so annoying. OP had read (Bertrand Russell's explanation of) the Peano definition of addition, and did not understand it. Several people tried hard to explain, but communication was not happening. Or, perhaps, OP was more interested in having an argument than in arriving at an understanding. I lost a bit of my temper when they claimed:

Russell's so-called definition of addition (as quoted in my question) is nothing but a tautology: ….

I didn't say:

If you think Bertrand Russell is stupid, it's because you're stupid.

although I wanted to at first. The reply I did make is still not as measured as I would like, and although it leaves this point implicit, the point is still there. I did at least shut up after that. I had answered OP's question as well as I was able, and carrying on a complex discussion in the comments is almost never of value.

Why is Ramanujan considered a great mathematician?

This was easily my best answer of the month, but the question was deleted, so you will only be able to see it if you have enough Math SE reputation.

OP asked a perfectly reasonable question: Ramanujan gets a lot of media hype for stuff like this:

$${\sqrt {\phi +2}}-\phi ={\cfrac {e^{{-2\pi /5}}}{1+{\cfrac {e^{{-2\pi }}}{1+{\cfrac {e^{{-4\pi }}}{1+{\cfrac {e^{{-6\pi }}}{1+\,\cdots }}}}}}}}$$

which is not of any obvious use, so “why is it given such high regard?”

OP appeared to be impugning a famous mathematician, and Math SE always responds badly to that; their heroes must not be questioned. And even worse, OP mentioned the notorious non-fact that $$1+2+3+\ldots =-\frac1{12}$$ which drives Math SE people into a frothing rage.

One commenter argued:

Mathematics is not inherently about its "usefulness". Even if you can't find practical use for those formulas, you still have to admit that they are by no means trivial

I think this is fatuous. OP is right here, and the commenter is wrong. Mathematicians are not considered great because they produce wacky and impractical equations. They are considered great because they solve problems, invent techniques that answer previously impossible questions, and because they contribute insights into deep and complex issues.

Some blockhead even said:

Most of the mathematical results are useless. Mathematics is more like an art.

Bullshit. Mathematics is about trying to understand stuff, not about taping a banana to the wall. I replied:

I don't think “mathematics is not inherently about its usefulness” is an apt answer here. Sometimes mathematical results have application to physics or engineering. But for many mathematical results the application is to other parts of mathematics, and mathematicians do judge the ‘usefulness’ of results on this basis. Consider for example Mochizuki's field of “inter-universal Teichmüller theory”. This was considered interesting only as long as it appeared that it might provide a way to prove the !!abc!! conjecture. When that hope collapsed, everyone lost interest in it.

My answer to OP elaborated on this point:

The point of these formulas wasn't that they were useful in themselves. It's that in order to find them he had to have a deep understanding of matters that were previously unknown. His contribution was the deep understanding.

I then discussed Hardy's book on the work he did with Ramanujan and Hardy's own estimation of Ramanujan's work:

The first chapter is somewhat negative, as it summarizes the parts of Ramanujan's work that he felt didn't have lasting value — because Hardy's next eleven chapters are about the work that he felt did have value.

So if OP wanted a substantive and detailed answer to their question, that would be the first place to look.

I also did an arXiv search for “Ramanujan” and found many recent references, including one with “applications to the Ramanujan !!τ!!-function”, and concluded:

The !!\tau!!-function is the subject of the entire chapter 10 of Hardy's book and appears to still be of interest as recently as last Monday.

The question was closed as “opinion-based” (a criticism that I think my answer completely demolishes) and then it was deleted. Now if someone else is trying to find out why Ramanujan is held in high regard, they will not be able to find my factual, substantive answer.

Screw you, Math SE. This month we both sucked.

[Other articles in category /math/se] permanent link

Mon, 27 Nov 2023

Uncountable sets for seven-year-olds

I was recently talking to a friend whose seven-year-old had been reading about the Hilbert Hotel paradoxes. One example: The hotel is completely full when a bus arrives with 53 passengers seeking rooms. Fortunately the hotel has a countably infinite number of rooms, and can easily accommodate 53 more guests even when already full.

My friend mentioned that his kid had been unhappy with the associated discussion of uncountable sets, since the explanation he got involved something about people whose names are infinite strings, and it got confusing. I said yes, that is a bad way to construct the example, because names are not infinite strings, and even one infinite string is hard to get your head around. If you're going to get value out of the hotel metaphor, you waste an opportunity if you abandon it for some weird mathematical abstraction. (“Okay, Tyler, now let !!\mathscr B!! be a projection from a vector bundle onto a compact Hausdorff space…”)

My first attempt on the spur of the moment involved the guests belonging to clubs, which meet in an attached convention center with a countably infinite sequence of meeting rooms. The club idea is good but my original presentation was overcomplicated and after thinking about the issue a little more I sent this email with my ideas for how to explain it to a bright seven-year-old.

Here's how I think it should go. Instead of a separate hotel and convention center, let's just say that during the day the guests vacate their rooms so that clubs can meet in the same rooms. Each club is assigned one guest room that they can use for their meeting between the hours of 10 AM and 4 PM. The guest has to get out of the room while that is happening, unless they happen to be a member of the club that is meeting there, in which case they may stay.

If you're a guest in the hotel, you might be a member of the club that meets in your room, or you might not be a member of the club that meets in your room, in which case you have to leave and go to a meeting of one of your clubs in some other room.

We can paint the guest room doors blue and green: blue, if the guest there is a member of the club that meets in that room during the day, and green if they aren't. Every door is now painted blue or green, but not both.

Now I claim that when we were assigning clubs to rooms, there was a club we missed that has nowhere to meet. It's the Green Doors Club of all the guests who are staying in rooms with green doors.

If we did assign the Green Doors Club a guest room in which to meet, that door would be painted green or blue.

The Green Doors Club isn't meeting in a room with a blue door. The Green Doors Club only admits members who are staying in rooms with green doors. That guest belongs to the club that meets in their room, and it isn't the Green Doors Club because the guest's door is blue.

But the Green Doors Club isn't meeting in a room with a green door. We paint a door green when the guest is not a member of the club that meets in their room, and this guest is a member of the Green Doors Club.

So however we assigned the clubs to the rooms, we must have missed out on assigning a room to the Green Doors Club.

One nice thing about this is that it works for finite hotels too. Say you have a hotel with 4 guests and 4 rooms. Well, obviously you can't assign a room to each club because there are 16 possible clubs and only 4 rooms. But the blue-green argument still works: you can assign any four clubs you want to the four rooms, then paint the doors, then figure out who is in the Green Doors Club, and then observe that, in fact, the Green Doors Club is not one of the four clubs that got a room.
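The four-room hotel is small enough to check exhaustively. The following is a minimal sketch, with invented names (`all_clubs`, `green_doors_club`) and the assumption that guest `g` stays in room `g`. It tries every one of the !!16^4!! ways of assigning clubs to rooms and confirms that the Green Doors Club never gets one.

```python
from itertools import combinations, product

GUESTS = range(4)   # assumption: guest g stays in room g

def all_clubs(guests):
    """Every possible club, i.e. every subset of the guests, as a frozenset."""
    members = list(guests)
    return [frozenset(c) for r in range(len(members) + 1)
            for c in combinations(members, r)]

def green_doors_club(assignment):
    """Guests who are NOT members of the club that meets in their own room.

    assignment[g] is the club that meets in room g.
    """
    return frozenset(g for g, club in enumerate(assignment) if g not in club)

clubs = all_clubs(GUESTS)
# Try every way of assigning a club to each of the four rooms.
missed_every_time = all(
    green_doors_club(assignment) not in assignment
    for assignment in product(clubs, repeat=len(GUESTS))
)
print(len(clubs), missed_every_time)
```

Whatever clubs are put in the rooms, painting the doors and collecting the green-door guests produces a club that is not among them.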

Then you can reassign the clubs to rooms, this time making sure that the Green Doors Club gets a room. But now you have to repaint the doors, and when you do you find out that membership in the Green Doors Club has changed: some new members were admitted, or some former members were expelled, so the club that meets there is no longer the Green Doors Club, it is some other club. (Or if the Green Doors Club is meeting somewhere, you will find that you have painted the doors wrong.)

I think this would probably work. The only thing that's weird about it is that some clubs have an infinite number of members so that it's hard to see how they could all squeeze into the same room. That's okay, not every member attends every meeting of every club they're in, that would be impossible anyway because everyone belongs to multiple clubs.

But one place you could go from there is: what if we only guarantee rooms to clubs with a finite number of members? There are only a countably infinite number of clubs then, so they do all fit into the hotel! Okay, Tyler, but what happens to the Green Doors Club then? I said all the finite clubs got rooms, and we know the Green Doors Club never gets a room, so what can we conclude?

It's tempting to try to slip in a reference to Groucho Marx, but I think it's unlikely that that will do anything but confuse matters.

[ Previously ]

[ Update: My friend said he tried it and it didn't go over as well as I thought it might. ]

[Other articles in category /math] permanent link

Fri, 24 Nov 2023

Math SE report 2023-09: Sense and reference, Wason tasks, what is a sequence?

Proving there is only one proof?

OP asks:

In mathematics, is it possible to prove that there is only one (shortest) proof of a given theorem (say, in ZFC)?

This was actually from back in July, when there was a fairly substantive answer. But it left out what I thought was a simpler, non-substantive answer: For a given theorem !!T!! it's actually quite simple to prove that there is (or isn't) only one proof of !!T!!: just generate all possible proofs in order by length until you find the shortest proofs of !!T!!, and then stop before you generate anything longer than those. There are difficult and subtle issues in provability theory, but this isn't one of them.

I say “non-substantive” because it doesn't address any of the possibly interesting questions of why a theorem would have only one proof, or multiple proofs, or what those proofs would look like, or anything like that. It just answers the question given: is it possible to prove that there is only one shortest proof.

So depending on what OP was looking for, it might be very unsatisfying. Or it might be hugely enlightening, to discover that this seemingly complicated question actually has a simple answer, just because proofs can be systematically enumerated.

This comes in handy in more interesting contexts. Gödel showed that arithmetic contains a theorem whose shortest proof is at least one million steps long! He did it by constructing an arithmetic formula !!G!! which can be interpreted as saying:

!!G!! cannot be proved in less than one million steps.

If !!G!! is false, it can be proved (in less than one million steps) and our system is inconsistent. So assuming that our axioms are consistent, then !!G!! is true and either:

1. There is no proof at all of !!G!!, or

2. There are proofs of !!G!! but the shortest one is at least a million steps

Which is it? It can't be (1) because there is a proof of !!G!!: simply generate every single proof of one million steps or fewer, and check at the last line of each one to make sure that it is not !!G!!. So it must be (2).

What counts as a sequence, and how would we know that it isn't deceiving?

This is a philosophical question: What is a sequence, really? And:

if I write down random numbers with no pattern at all except for the fact that it gets larger, is it a viable sequence?

And several other related questions that are actually rather subtle: Is a sequence defined by its elements, or by some external rule? If the former, how can you know when a sequence is linear, when you can only hope to examine a finite prefix?

I think this is a great question because I think a sequence, properly construed, is both a rule and its elements. The definition says that a sequence of elements of !!S!! is simply a function !!f:\Bbb N\to S!!. This definition is a sort of spherical cow: it's a nice, simple model that captures many of the mathematical essentials of the thing being modeled. It works well for many purposes, but you get into trouble if you forget that it's just a model. It captures the denotation, but not the sense. I wouldn't yak so much about this if it wasn't so often forgotten. But the sense is the interesting part. If you forget about it, you lose the ability to ask questions like

Are sequences !!s_1!! and !!s_2!! the same sequence?

If all you have is the denotation, there's only one way to answer this question:

By definition, yes, if and only if !!s_1!! and !!s_2!! are the same function.

and there is nothing further to say about it. The question is pointless and the answer is useless. Sometimes the meaning is hidden a little deeper. Not this time. If we push down into the denotation, hoping for meaning, we find nothing but more emptiness:

Q: What does it mean to say that !!s_1!! and !!s_2!! are the same function?

A: It means that the sets $$S_1 = \{ \langle i, s_1(i) \rangle \mid i\in \Bbb N\}$$ and $$S_2 = \{ \langle i, s_2(i) \rangle \mid i\in \Bbb N\}$$ have exactly the same elements.

We could keep going down this road, but it goes nowhere and having gotten to the end we would have seen nothing worth seeing.

But we do ask and answer this kind of question all the time. For example:

- !!S_1(n)!! is the infinite sequence of odd numbers starting at !!1!!

- !!S_2(n)!! is the infinite sequence of numbers that are the difference between a square and its previous square, starting at !!1^2-0^2!!

Are sequences !!S_1!! and !!S_2!! the same sequence? Yes, yes, of course they are, don't focus on the answer. Focus on the question! What is this question actually asking?

The real essence of the question is not about the denotation, about just the elements. Rather: we're given descriptions of two possible computations, and the question is asking if these two computations will arrive at the same results in each case. That's the real question.
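The two descriptions can be written down literally as two different computations. This is only a sketch, and the function names are made up for it. Note what the program can and cannot do: it can compare a finite prefix, but it cannot tell us that the two computations agree forever. For that we need the algebra, !!n^2-(n-1)^2 = 2n-1!!.

```python
from itertools import count, islice

def odd_numbers():
    """The odd numbers 1, 3, 5, ..."""
    for n in count(0):
        yield 2 * n + 1

def square_differences():
    """Differences between consecutive squares: 1^2 - 0^2, 2^2 - 1^2, ..."""
    for n in count(1):
        yield n * n - (n - 1) * (n - 1)

def agree_on_prefix(seq_a, seq_b, length):
    """Compare the first `length` terms of two (possibly infinite) iterators."""
    return list(islice(seq_a, length)) == list(islice(seq_b, length))

same_so_far = agree_on_prefix(odd_numbers(), square_differences(), 1000)
print(same_so_far)
```

The two generators share no code, yet they produce the same elements, which is the sense-versus-denotation distinction in miniature.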

Well, I started this blog article back in October and it's still not ready because I got stuck writing about this question. I think the answer I gave on SE is pretty good, OP asked what is essentially a philosophical question and the backbone of my answer is on the level of philosophy rather than mathematics.

[ Addendum: On review, I am pleasantly surprised that this section of the blog post turned out both coherent and relevant. I really expected it to be neither. A Thanksgiving miracle! ]

Can inequalities be added the way that equations can be added?

OP says:

Suppose you have !!x + y > 6!! and !!x - y > 4!!. Adding the inequalities, the !!y!! terms cancel and you end up with … !!x > 5!!. It is not intuitively obvious to me that this holds true … I can see that you can't subtract inequalities, but is it always okay to add them?

I have a theory that if someone is having trouble with the intuitive meaning of some mathematical property, it's a good idea to turn it into a question about fair allocation of resources, or who has more of some commodity, because human brains are good at monkey tasks like seeing who got cheated when the bananas were shared out.

About ten years ago someone asked for an intuitive explanation of why you could add !!\frac a2!! to both sides of !!\frac a2 < \frac b2!! to get !!\frac a2+\frac a2 < \frac a2 + \frac b2!!. I said:

Say I have half a bag of cookies, that's !!\frac a2!! cookies, and you have half a carton of cookies, that's !!\frac b2!! cookies, and the carton is bigger than the bag, so you have more than me, so that !!\frac a2 < \frac b2!!.

Now a friendly djinn comes along and gives you another half a bag of cookies, !!\frac a2!!. And to be fair he gives me half a bag too, also !!\frac a2!!.

So you had more cookies before, and the djinn gave each of us an extra half a bag. Then who has more now?

I tried something similar this time around:

Say you have two bags of cookies, !!a!! and !!b!!. A friendly baker comes by and offers to trade with you: you will give the baker your bag !!a!! and in return you will get a larger bag !!c!! which contains more cookies. That is, !! a \lt c !!. You like cookies, so you agree.

Then the baker also trades your bag !!b!! for a bigger bag !!d!!.

Is it possible that you might not have more cookies than before you made the trades? … But that's what it would mean if !! a\lt c !! and !! b\lt d !! but not !! a+b \lt c+d !! too.
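In symbols, the two trades can be taken one at a time. This is a sketch using the same letters as the cookie story:

$$a + b \lt c + b \lt c + d.$$

The first step is the trade of bag !!a!! for bag !!c!! while bag !!b!! sits untouched, and the second is the trade of bag !!b!! for bag !!d!! while bag !!c!! sits untouched. Subtraction does not behave this way: !!5 \gt 4!! and !!10 \gt 1!!, but !!5 - 10 = -5!! is not greater than !!4 - 1 = 3!!.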

Someday I'll write up a whole blog article about this idea, that puzzles in arithmetic sometimes become intuitively obvious when you turn them into questions about money or commodities, and that puzzles in logic sometimes become intuitively obvious when you turn them into questions about contract and rule compliance.

I don't remember why I decided to replace the djinn with a baker this time around. The cookies stayed the same though. I like cookies. Here's another cookie example, this time to explain why !!1\div 0.5 = 2!!.

What is the difference between "for all" and "there exists" in set builder notation?

This is the same sort of thing again. OP was asking about

$$B = \{n \in \mathbb{N} : \forall x \in \mathbb{N} \text{ and } n=2^x\}$$

but attempting to understand this is trying to swallow two pills at once. One pill is the logic part (what role is the !!\forall!! playing) and the other pill is the arithmetic part having to do with powers of !!2!!. If you're trying to understand the logic part and you don't have an instantaneous understanding of powers of !!2!!, it can be helpful to simplify matters by replacing the arithmetic with something you understand intuitively. In place of the relation !!a = 2^b!! I like to use the relation “!!a!! is the mother of !!b!!”, which everyone already knows.
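Python's `any` and `all` make the difference between the two quantifiers concrete. This is a rough sketch under two assumptions: a finite range stands in for !!\Bbb N!!, and OP's formula is read as “for all !!x!!, !!n = 2^x!!”.

```python
N = range(0, 64)    # finite stand-in for the natural numbers (an assumption)

# "There exists x with n = 2^x": the powers of two.
exists_set = {n for n in N if any(n == 2**x for x in N)}

# "For all x, n = 2^x": n would have to equal every power of two at once.
forall_set = {n for n in N if all(n == 2**x for x in N)}

print(sorted(exists_set), forall_set)
```

The “there exists” version picks out the powers of !!2!!, just as “there is a !!b!! such that !!a!! is the mother of !!b!!” picks out the mothers. The “for all” version is empty, just as nobody is the mother of everyone.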

Are infinities included in the closure of the real set !!\overline{\mathbb{R}}!!

This is a good question by the Chip Buchholtz criterion: The answer is much longer than the question was. OP wants to know if the closure of !!\Bbb R!! is just !!\Bbb R!! or if it's some larger set like !![-\infty, \infty]!!. They are running up against the idea that topological closure is not an absolute notion; it only makes sense in the context of an enclosing space.

I tried to draw an analogy between the closure and the complement of a set: Does the complement of the real numbers include the number !!i!!? Well, it depends on the context.

OP preferred someone else's answer, and I did too, saying:

I thought your answer was better because it hit all the important issues more succinctly!

I try to make things very explicit, but the downside of that is that it makes my answers longer, and shorter is generally better than longer. Sometimes it works, and sometimes it doesn't.

Vacuous falsehood - does it exist, and are there examples?

I really liked this question because I learned something from it. It brought me up short: “Huh,” I said. “I never thought about that.” Three people downvoted the question, I have no idea why.

I didn't know what a vacuous falsity would be either but I decided that since the negation of a vacuous truth would be false it was probably the first thing to look at. I pulled out my stock example of vacuous truth, which is:

All my rubies are red.

This is true, because all rubies are red, but vacuously so because I don't own any rubies.

Since this is a vacuous truth, negating it ought to give us a vacuous falsity, if there is such a thing:

I have a ruby that isn't red.

This is indeed false. And not in the way one would expect! A more typical false claim of this type would be:

I have a belt that isn't leather.

This is also false, in rather a different way. It's false, but not vacuously so, because to disprove it you have to get my belts out of the closet and examine them.

Now though I'm not sure I gave the right explanation in my answer. I said:

In the vacuously false case we don't even need to read the second half of the sentence:

there is a ruby in my vault that …

The irrelevance of the “…is not red” part is mirrored exactly in the irrelevance of the “… are red” part in the vacuously true statement:

all the rubies in my vault are …

But is this the right analogy? I could have gone the other way:

In the vacuously false case we don't even need to read the first half of the sentence:

there is a ruby … that is not red

The irrelevance of the “… in my vault …” part is mirrored exactly in the irrelevance of the “… are red” part in the vacuously true statement:

all the rubies in my vault are …

Ah well, this article has been drying out on the shelf for a month now, I'm making an editorial decision to publish it without thinking about it any more.

[Other articles in category /math/se] permanent link

Fri, 20 Oct 2023

The discrete logarithm, shorter and simpler

I recently discussed the “discrete logarithm” method for multiplying integers, and I feel like I took too long and made it seem more complicated and mysterious than it should have been. I think I'm going to try again.

Suppose for some reason you found yourself needing to multiply a lot of powers of !!2!!. What's !!4096·512!!? You could use the conventional algorithm:

$$ \begin{array}{cccccccc} & & & & 4 & 0 & 9 & 6 \\ × & & & & & 5 & 1 & 2 \\ \hline & & & & 8 & 1 & 9 & 2 \\ & & & 4 & 0 & 9 & 6 & \\ & 2 & 0 & 4 & 8 & 0 & & \\ \hline & 2 & 0 & 9 & 7 & 1 & 5 & 2 \end{array} $$

but that's a lot of trouble, and a simpler method is available. You know that $$2^i\cdot 2^j = 2^{i+j}$$

so if you had an easy way to convert $$2^i\leftrightarrow i$$ you could just convert the factors to exponents, add the exponents, and convert back. And all that's needed is a simple table:

$$ \begin{array}{rr} 0 & 1\\ 1 & 2\\ 2 & 4\\ 3 & 8\\ 4 & 16\\ 5 & 32\\ 6 & 64\\ 7 & 128\\ 8 & 256\\ 9 & 512\\ 10 & 1\,024\\ 11 & 2\,048\\ 12 & 4\,096\\ 13 & 8\,192\\ 14 & 16\,384\\ 15 & 32\,768\\ 16 & 65\,536\\ 17 & 131\,072\\ 18 & 262\,144\\ 19 & 524\,288\\ 20 & 1\,048\,576\\ 21 & 2\,097\,152\\ \vdots & \vdots \\ \end{array} $$

We check the table, and find that $$4096\cdot512 = 2^{12}\cdot 2^9 = 2^{12+9} = 2^{21} = 2097152.$$ Easy-peasy.
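The table method above can be sketched in a few lines of Python. The names `POWERS`, `EXPONENT`, and `multiply_powers_of_two` are made up for this illustration; the table stops at exponent 21, like the one shown.

```python
# The table from the text: position i holds 2**i, for i = 0..21.
POWERS = [2 ** i for i in range(22)]

# The same table read in the other direction: power of 2 -> exponent.
EXPONENT = {power: i for i, power in enumerate(POWERS)}


def multiply_powers_of_two(x, y):
    """Multiply two powers of 2 with three table lookups and one addition."""
    i = EXPONENT[x]        # lookup 1: convert x to its exponent
    j = EXPONENT[y]        # lookup 2: convert y to its exponent
    return POWERS[i + j]   # lookup 3: convert the summed exponent back


print(multiply_powers_of_two(4096, 512))
```

Anything that is not a power of 2, or whose product falls off the end of the table, raises an error, which is exactly the limitation of the method.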

That is all very well but how often do you find yourself having to multiply a lot of powers of !!2!!? This was a lovely algorithm but with very limited application.

What Napier (the inventor of logarithms) realized was that while not every number is an integer power of !!2!!, every number is an integer power of !!1.00001!!, or nearly so. For example, !!23!! is very close to !!1.00001^{313\,551}!!. Napier made up a table, just like the one above, except with powers of !!1.00001!! instead of powers of !!2!!. Then to multiply !!x\cdot y!! you would just find numbers close to !!x!! and !!y!! in Napier's table and use the same algorithm. (Napier's original table used powers of !!0.9999!!, but it works the same way for the same reason.)
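Here is a rough sketch of the same idea with base !!1.00001!!. It is not Napier's actual procedure: instead of a printed table it uses floating-point `math.log` to find the nearest exponent, and the function names are invented for the example.

```python
import math

BASE = 1.00001


def to_exponent(x):
    """Nearest integer k with BASE**k close to x (stands in for a table lookup)."""
    return round(math.log(x) / math.log(BASE))


def from_exponent(k):
    """Reverse lookup: the number whose exponent is k."""
    return BASE ** k


def approx_multiply(x, y):
    """Approximate x*y by adding exponents, as in the powers-of-2 method."""
    return from_exponent(to_exponent(x) + to_exponent(y))


print(to_exponent(23))          # the exponent quoted in the text for 23
print(approx_multiply(23, 17))  # close to 391, but not exact
```

Because each exponent is rounded to an integer, the result is only approximate; the relative error is on the order of the spacing between consecutive powers of the base.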

There's another way to make it work. Consider the integers mod !!101!!, called !!\Bbb Z_{101}!!. In !!\Bbb Z_{101}!!, every number is an integer power of !!2!!!

For example, !!27!! is a power of !!2!!. It's simply !!2^7!!, because if you multiply out !!2^7!! you get !!128!!, and !!128\equiv 27\pmod{101}!!.

Or:

$$\begin{array}{rcll} 14 & \stackrel{\pmod{101}}{\equiv} & 10\cdot 101 & + 14 \\ & = & 1010 & + 14 \\ & = & 1024 \\ & = & 2^{10} \end{array} $$

Or:

$$\begin{array}{rcll} 3 & \stackrel{\pmod{101}}{\equiv} & 5844512973848570809\cdot 101 & + 3 \\ & = & 590295810358705651709 & + 3 \\ & = & 590295810358705651712 \\ & = & 2^{69} \end{array} $$

Anyway that's the secret. In !!\Bbb Z_{101}!! the silly algorithm that quickly multiplies powers of !!2!! becomes more practical, because in !!\Bbb Z_{101}!!, every number is a power of !!2!!.

What works for !!101!! works in other cases larger and more interesting. It doesn't work to replace !!101!! with !!7!! (try it and see what goes wrong), but we can replace it with !!107, 797!!, or !!297779!!. The key is that if we want to replace !!101!! with !!n!! and !!2!! with !!a!!, we need to be sure that there is a solution to !!a^i=b\pmod n!! for every possible !!b!!. (The jargon term here is that !!a!! must be a “primitive root mod !!n!!”. !!2!! is a primitive root mod !!101!!, but not mod !!7!!.)
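One way to check the primitive-root condition for small cases is brute force: list the powers of !!a!! and see whether they cover every nonzero residue. This is a sketch that assumes !!n!! is prime; `is_primitive_root` is a name chosen for the example.

```python
def is_primitive_root(a, n):
    """True if the powers a**1, ..., a**(n-1) hit every residue 1..n-1 mod n.

    Assumes n is prime. Brute force, so only sensible for small n.
    """
    seen = set()
    x = 1
    for _ in range(n - 1):
        x = (x * a) % n
        seen.add(x)
    return len(seen) == n - 1


print(is_primitive_root(2, 101))  # True
print(is_primitive_root(2, 7))    # False: the powers of 2 mod 7 cycle through 2, 4, 1
print(is_primitive_root(2, 107))  # True
```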

Is this actually useful for multiplication? Perhaps not, but it does have cryptographic applications. Similar to how multiplying is easy but factoring seems difficult, computing !!a^i\pmod n!! for given !!a, i, n!! is easy, but nobody knows a quick way in general to reverse the calculation and compute the !!i!! for which !!a^i\pmod n = m!! for a given !!m!!. When !!n!! is small we can simply construct a lookup table with !!n-1!! entries. But if !!n!! is a !!600!!-digit number, the table method is impractical. Because of this, Alice and Bob can find a way to compute a number !!2^i!! that they both know, but someone else, seeing !!2^i!! can't easily figure out what the original !!i!! was. See Diffie-Hellman key exchange for more details.
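The asymmetry shows up even in a toy setting. In the sketch below, the forward direction uses Python's built-in three-argument `pow`, while the reverse direction (a hypothetical helper called `discrete_log`) has nothing better to do than try exponents one at a time, which is fine for !!n=101!! and hopeless for a !!600!!-digit !!n!!.

```python
def discrete_log(a, m, n):
    """Smallest i >= 0 with a**i % n == m % n, found by exhaustive search.

    Returns None if no exponent in 0..n-2 works.
    """
    target = m % n
    x = 1
    for i in range(n - 1):
        if x == target:
            return i
        x = (x * a) % n
    return None


# Forward direction: fast, even for enormous exponents.
print(pow(2, 69, 101))          # 3

# Reverse direction: search.
print(discrete_log(2, 3, 101))  # 69
print(discrete_log(2, 3, 7))    # None, since 3 is not a power of 2 mod 7
```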

[ Also previously: Percy Ludgate's weird variation on this ]

[Other articles in category /math] permanent link

Sun, 15 Oct 2023

[ Addendum 20231020: This came out way longer than it needed to be, so I took another shot at it, and wrote a much simpler explanation of the same thing that is only one-third as long. ]

A couple days ago I discussed the weird little algorithm of Percy Ludgate's, for doing single-digit multiplication using a single addition and three scalar table lookups. In Ludgate's algorithm, there were two tables, !!T_1!! and !!T_2!!, satisfying the following properties:

$$ \begin{align} T_2(T_1(n)) & = n \tag{$\color{darkgreen}{\spadesuit}$} \\ T_2(T_1(a) + T_1(b)) & = ab. \tag{$\color{purple}{\clubsuit}$} \end{align} $$

This has been called the “Irish logarithm” method because of its resemblance to ordinary logarithms. Normally in doing logarithms we have a magic logarithm function !!\ell!! with these properties:

$$ \begin{align} \ell^{-1}(\ell(n)) & = n \tag{$\color{darkgreen}{\spadesuit}$} \\ \ell^{-1}(\ell(a) + \ell(b)) & = ab. \tag{$\color{purple}{\clubsuit}$} \end{align} $$

(The usual notation for !!\ell(x)!! is of course “!!\log x!!” or “!!\ln x!!” or something of that sort, and !!\ell^{-1}(x)!! is usually written !!e^x!! or !!10^x!!.)

The properties of Ludgate's !!T_1!! and !!T_2!! are formally identical, with !!T_1!! playing the role of the logarithm function !!\ell!! and !!T_2!! playing the role of its inverse !!\ell^{-1}!!. Ludgate's versions are highly restricted, to reduce the computation to something simple enough that it can be implemented with brass gears.

Both !!T_1!! and !!T_2!! map positive integers to positive integers, and can be implemented with finite lookup tables. The ordinary logarithm does more, but is technically much more difficult. With the ordinary logarithm you are not limited to multiplying single digit integers, as with Ludgate's weird little algorithm. You can multiply any two real numbers, and the multiplication still requires only one addition and three table lookups. But the cost is huge! The tables are much larger and more complex, and to use them effectively you have to deal with fractional numbers, perform table interpolation, and worry about error accumulation.

It's tempting at this point to start explaining the history and use of logarithm tables, slide rules, and so on, but this article has already been delayed once, so I will try to resist. I will do just one example, with no explanation, to demonstrate the flavor. Let's multiply !!7!! by !!13!!.

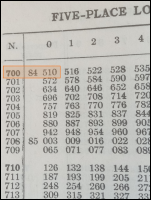

I look up !!7!! in my table of logarithms and find that !!\log_{10} 7 \approx 0.84510!!.

I look up !!13!! similarly and find that !!\log_{10} 13 \approx 1.11394!!.

I add !!0.84510 + 1.11394 = 1.95904!!.

I do a reverse lookup on !!1.95904!! and find that the result is approximately !!91.00!!.
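The four steps can be imitated in Python, with `math.log10` rounded to five places standing in for the printed table. That substitution is an assumption made for this sketch, as is the name `log_multiply`; a real table lookup would also involve interpolation.

```python
import math


def log_multiply(x, y, digits=5):
    """Multiply x and y by adding their (rounded) base-10 logarithms."""
    log_x = round(math.log10(x), digits)   # "look up" x in the table
    log_y = round(math.log10(y), digits)   # "look up" y in the table
    total = log_x + log_y                  # the single addition
    return 10 ** total                     # reverse lookup


print(log_multiply(7, 13))  # approximately 91, not exactly 91
```

The answer comes out very close to !!91!! but not exactly equal to it, which is the error accumulation mentioned earlier.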

If I were multiplying !!7.236!! by !!13.877!!, I would be willing to accept all these costs, and generations of scientists and engineers did accept them. But for !!7.0000×13.000 = 91.000!! the process is ridiculous. One might wonder if there wasn't some analogous technique that would retain the small, finite tables, and permit multiplication of integers, using only integer calculations throughout. And there is!

Now I am going to demonstrate an algorithm, based on logarithms, that exactly multiplies any two integers !!a!! and !!b!!, as long as !!ab ≤ 100!!. Like Ludgate's and the standard algorithm, it will use one addition and three lookups in tables. Unlike the standard algorithm, the tables will be small, and will contain only integers.

Here is the table of the !!\ell!! function, which corresponds to Ludgate's !!T_1!!:

$$ \begin{array}{rrrrrrrrrrr} {\tiny\color{gray}{1}} & 0, & 1, & \color{darkblue}{69}, & 2, & 24, & 70, & \color{darkgreen}{9}, & 3, & 38, & 25, \\ {\tiny\color{gray}{11}} & 13, & \color{darkblue}{71}, & \color{darkgreen}{66}, & 10, & 93, & 4, & 30, & 39, & 96, & 26, \\ {\tiny\color{gray}{21}} & 78, & 14, & 86, & 72, & 48, & 67, & 7, & 11, & 91, & 94, \\ {\tiny\color{gray}{31}} & 84, & 5, & 82, & 31, & 33, & 40, & 56, & 97, & 35, & 27, \\ {\tiny\color{gray}{41}} & 45, & 79, & 42, & 15, & 62, & 87, & 58, & 73, & 18, & 49, \\ {\tiny\color{gray}{51}} & 99, & 68, & 23, & 8, & 37, & 12, & 65, & 92, & 29, & 95, \\ {\tiny\color{gray}{61}} & 77, & 85, & 47, & 6, & 90, & 83, & 81, & 32, & 55, & 34, \\ {\tiny\color{gray}{71}} & 44, & 41, & 61, & 57, & 17, & 98, & 22, & 36, & 64, & 28, \\ {\tiny\color{gray}{81}} & \color{darkred}{76}, & 46, & 89, & 80, & 54, & 43, & 60, & 16, & 21, & 63, \\ {\tiny\color{gray}{91}} & 75, & 88, & 53, & 59, & 20, & 74, & 52, & 19, & 51, & 50\hphantom{,} \\ \end{array} $$

(If we only want to multiply numbers with !!1\le a, b \le 9!! we only need the first row, but with the full table we can also compute things like !!7·13=91!!.)

Like !!T_2!!, this is not really a two-dimensional array. It is just a list of !!100!! numbers, arranged in rows to make it easy to find the !!81!!st number when you need it. The small gray numerals in the margin are a finding aid. If you want to look up !!\ell(81)!! you can see that it is !!\color{darkred}{76}!! without having to count up !!81!! elements. This element is highlighted in red in the table above.

Note that the elements are numbered from !!1!! to !!100!!, whereas all the other tables in these articles have been zero-indexed. I wondered if there was a good way to fix this, but there really isn't. !!\ell!! is analogous to a logarithm function, and the one thing a logarithm function really must do is to have !!\log 1 = 0!!. So too here; we have !!\ell(1) = 0!!.

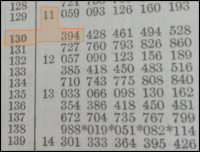

We also need an !!\ell^{-1}!! table analogous to Ludgate's !!T_2!!:

$$ \begin{array}{rrrrrrrrrrr} {\tiny\color{gray}{0}} & 1, & 2, & 4, & 8, & 16, & 32, & 64, & 27, & 54, & 7, \\ {\tiny\color{gray}{10}} & 14, & 28, & 56, & 11, & 22, & 44, & 88, & 75, & 49, & 98, \\ {\tiny\color{gray}{20}} & 95, & 89, & 77, & 53, & 5, & 10, & 20, & 40, & 80, & 59, \\ {\tiny\color{gray}{30}} & 17, & 34, & 68, & 35, & 70, & 39, & 78, & 55, & 9, & 18, \\ {\tiny\color{gray}{40}} & \color{darkblue}{36}, & 72, & 43, & 86, & 71, & 41, & 82, & 63, & 25, & 50, \\ {\tiny\color{gray}{50}} & 100, & 99, & 97, & 93, & 85, & 69, & 37, & 74, & 47, & 94, \\ {\tiny\color{gray}{60}} & 87, & 73, & 45, & 90, & 79, & 57, & 13, & 26, & 52, & 3, \\ {\tiny\color{gray}{70}} & 6, & 12, & 24, & 48, & 96, & \color{darkgreen}{91}, & \color{darkred}{81}, & 61, & 21, & 42, \\ {\tiny\color{gray}{80}} & 84, & 67, & 33, & 66, & 31, & 62, & 23, & 46, & 92, & 83, \\ {\tiny\color{gray}{90}} & 65, & 29, & 58, & 15, & 30, & 60, & 19, & 38, & 76, & 51\hphantom{,} \\ \end{array} $$

Like !!\ell!! and !!T_2!!, this is just a list of !!100!! numbers in order.

As the notation suggests, !!\ell^{-1}!! and !!\ell!! are inverses. We already saw that the first table had !!\ell(81)=\color{darkred}{76}!! and !!\ell(1) = 0!!. Going in the opposite direction, we see from the second table that !!\ell^{-1}(76)= \color{darkred}{81}!! (again in red) and !!\ell^{-1}(0)=1!!. The elements of !!\ell!! tell you where to find numbers in the !!\ell^{-1}!! table. Where is !!17!! in the second table? Look at the !!17!!th element in the first table. !!\ell(17) = 30!!, so !!17!! is at position !!30!! in the second table.

Before we go too deeply into how these were constructed, let's try the !!7×13!! example we did before. The algorithm is just !!\color{purple}{\clubsuit}!!:

$$ \begin{align} 7·13 &= \ell^{-1}(\ell(7) + \ell(13)) \\ &= \ell^{-1}(\color{darkgreen}{9} + \color{darkgreen}{66}) \\ &= \ell^{-1}(75) \\ &= \color{darkgreen}{91} \end{align} $$

(The relevant numbers are picked out in green in the two tables.)

As promised, with three table lookups and a single integer addition.

What if the sum in the middle exceeds !!99!!? No problem, the !!\ell^{-1}!! table wraps around, so that element !!100!! is the same as element !!0!!:

$$ \begin{align} 3·12 &= \ell^{-1}(\ell(3) + \ell(12)) \\ &= \ell^{-1}(\color{darkblue}{69} + \color{darkblue}{71}) \\ &= \ell^{-1}(140) \\ &= \ell^{-1}(40) &\text{(wrap around)}\\ &= \color{darkblue}{36} \end{align} $$

How about that.

(This time the relevant numbers are picked out in blue.)

I said this only computes !!ab!! when the product is at most !!100!!. That is not quite true. If you are willing to ignore a small detail, this algorithm will multiply any two numbers. The small detail is that the multiplication will be done mod !!101!!. That is, instead of the exact answer, you get one that differs from it by a multiple of !!101!!. Let's do an example to see what I mean when I say it works even for products bigger than !!100!!:

$$ \begin{align} 16·26 &= \ell^{-1}(\ell(16) + \ell(26)) \\ &= \ell^{-1}(4 + 67) \\ &= \ell^{-1}(71) \\ &= 12 \end{align} $$

This tells us that !!16·26 = 12!!. The correct answer is actually !!16·26 = 416!!, and indeed !!416-12 = 404!! which is a multiple of !!101!!. The reason this happens is that the elements of the second table, !!\ell^{-1}!!, are not true integers, they are mod !!101!! integers.
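The three worked examples can be replayed mechanically. In this sketch the !!\ell^{-1}!! table is copied from the text as a plain Python list, and the !!\ell!! table is recovered by inverting it, so that `ELL[x]` is the position of `x` in `ELL_INV`. The names `ELL_INV`, `ELL`, and `multiply` are choices made for the example.

```python
# The second table from the text, zero-indexed: ELL_INV[n] is the n-th entry.
ELL_INV = [
    1, 2, 4, 8, 16, 32, 64, 27, 54, 7,
    14, 28, 56, 11, 22, 44, 88, 75, 49, 98,
    95, 89, 77, 53, 5, 10, 20, 40, 80, 59,
    17, 34, 68, 35, 70, 39, 78, 55, 9, 18,
    36, 72, 43, 86, 71, 41, 82, 63, 25, 50,
    100, 99, 97, 93, 85, 69, 37, 74, 47, 94,
    87, 73, 45, 90, 79, 57, 13, 26, 52, 3,
    6, 12, 24, 48, 96, 91, 81, 61, 21, 42,
    84, 67, 33, 66, 31, 62, 23, 46, 92, 83,
    65, 29, 58, 15, 30, 60, 19, 38, 76, 51,
]

# The first table: ELL[x] says where x sits in ELL_INV.
ELL = {value: position for position, value in enumerate(ELL_INV)}


def multiply(a, b):
    """Three lookups and one addition; the index wraps around at 100."""
    return ELL_INV[(ELL[a] + ELL[b]) % 100]


print(multiply(7, 13))   # 91
print(multiply(3, 12))   # 36, via the wrap-around from 140 to 40
print(multiply(16, 26))  # 12, which is 416 reduced mod 101
```

The inputs must be between !!1!! and !!100!!; anything else is missing from `ELL` and raises a `KeyError`.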

Okay, so what is the secret here? Why does this work? It should jump out at you that it is often the case that an entry in the !!\ell^{-1}!! table is twice the previous entry:

$$\ell^{-1}(1+n) = 2\cdot \ell^{-1}(n)$$

In fact, this is true everywhere, if you remember that the numbers are not ordinary integers but mod !!101!! integers. For example, the number that follows !!64!!, in place of !!64·2=128!!, is !!27!!. But !!27\equiv 128\pmod{101}!! because they differ by a multiple of !!101!!. From a mod !!101!! point of view, it doesn't matter whether we put !!27!! or !!128!! after !!64!!, as they are the same thing.

Those two facts are the whole secret of the !!\ell^{-1}!! table:

- Each element is twice the one before, but

- The elements are not quite ordinary numbers, but mod !!101!! numbers where !!27=128=229=330=\ldots!!.

Certainly !!\ell^{-1}(0) = 2^0 = 1!!. And every entry in the !!\ell^{-1}!! table is twice the previous one, if you are thinking in mod !!101!!. The two secrets are actually one secret:

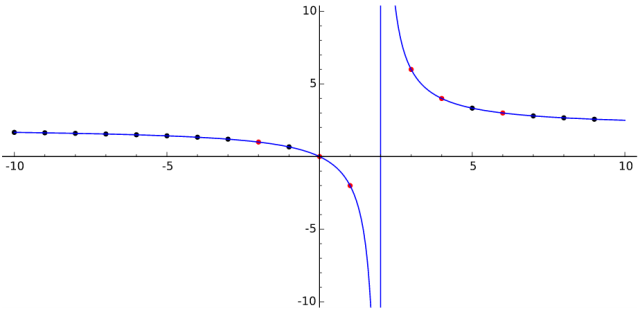

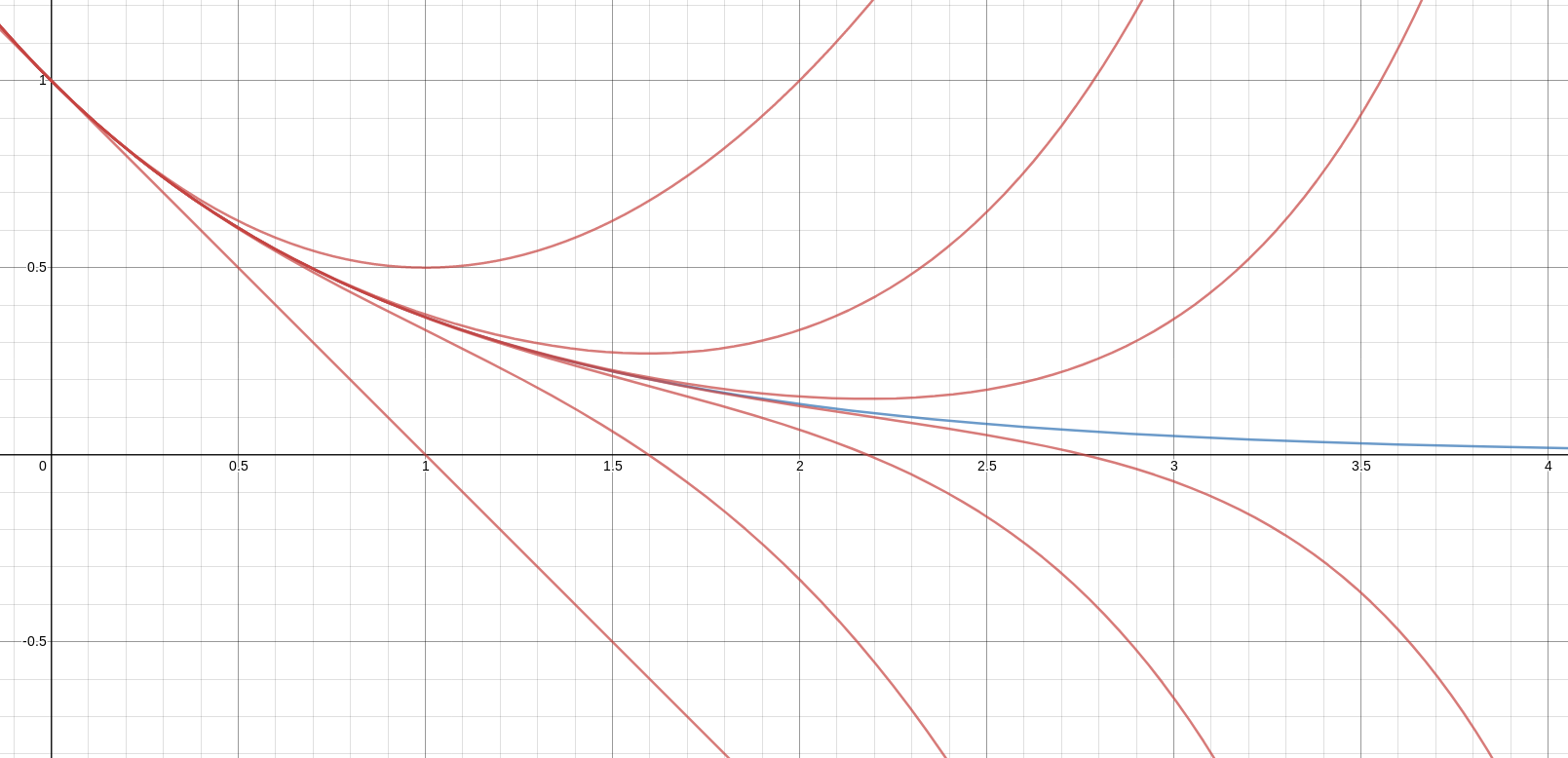

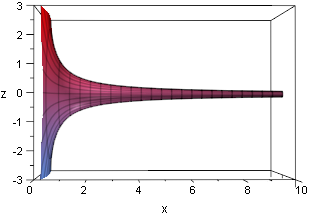

$$\ell^{-1}(n) = 2^n\pmod{101}.$$
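With that formula in hand, both tables can be generated rather than typed in. A minimal sketch, using Python's built-in modular `pow`; the names `MODULUS`, `ell_inv`, and `ell` are just labels for this example.

```python
MODULUS = 101

# ell_inv[n] = 2**n mod 101, for n = 0..99.
ell_inv = [pow(2, n, MODULUS) for n in range(100)]

# ell is the reverse lookup: ell[x] is the n with 2**n = x (mod 101).
ell = {value: n for n, value in enumerate(ell_inv)}

# Each entry is twice the one before it, mod 101, including the wrap-around
# from the last entry back to the first.
assert all(
    ell_inv[(n + 1) % 100] == (2 * ell_inv[n]) % MODULUS
    for n in range(100)
)

# Every number from 1 to 100 appears exactly once.
assert sorted(ell_inv) == list(range(1, 101))

print(ell_inv[:10])      # [1, 2, 4, 8, 16, 32, 64, 27, 54, 7]
print(ell[81], ell[17])  # 76 30
```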