Mark Dominus (陶敏修)

mjd@pobox.com


Sat, 16 Dec 2023

My Git pre-commit hook contained a footgun

The other day I made some changes to a program, but when I ran the tests they failed in a very bizarre way I couldn't understand. After a bit of investigation I still didn't understand. I decided to try to narrow down the scope of possible problems by reverting the code to the unmodified state, then introducing changes from one file at a time.

My plan was: commit all the new work, reset the working directory back to the last good commit, and then start pulling in file changes. So I typed in rapid succession:

git add -u

git commit -m 'broken'

git branch wat

git reset --hard good

So the complete broken code was on the new branch wat.

Then I wanted to pull in the first file from wat. But when I examined wat there were no changes.

Wat.

I looked all around the history and couldn't find the changes. The wat branch was there but it was on the current commit, the one with none of the changes I wanted. I checked in the reflog for the commit and didn't see it.

Eventually I looked back in my terminal history and discovered the problem: I had a Git pre-commit hook which git-commit had attempted to run before it made the new commit. It checks for strings I don't usually intend to commit, such as XXX and the like.

This time one of the files had something like that. My pre-commit hook had printed an error message and exited with a failure status, so git-commit aborted without making the commit. But I had typed the commands in quick succession without paying attention to what they were saying, so I went ahead with the git-reset without even seeing the error message. This wiped out the working tree changes that I had wanted to preserve.

Fortunately the git-add had gone through, so the modified files were in the repository anyway, just hard to find. And even more fortunately, last time this happened to me, I wrote up instructions about what to do.

This time around recovery was quicker and easier. I knew I only needed to recover stuff from the last add command, so instead of analyzing every loose object in the repository, I did

find .git/objects -mmin -10 -type f

to locate loose objects that had been modified in the last ten minutes. There were only half a dozen or so. I was able to recover the lost changes without too much trouble.
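As an illustration only, here is a rough Python equivalent of that search. It also turns each path back into an object ID, relying on Git's loose-object layout of `objects/<first two hex digits>/<remaining digits>`. The function name `recent_loose_objects` and its parameters are made up for this sketch.

```python
import time
from pathlib import Path

HEX = set("0123456789abcdef")


def recent_loose_objects(git_dir, minutes=10):
    """Return IDs of loose objects modified within the last `minutes` minutes."""
    cutoff = time.time() - minutes * 60
    ids = []
    for fanout in sorted(Path(git_dir, "objects").iterdir()):
        # Loose objects live in two-hex-digit directories; skip "pack" and "info".
        if not (fanout.is_dir() and len(fanout.name) == 2 and set(fanout.name) <= HEX):
            continue
        for obj in sorted(fanout.iterdir()):
            if obj.is_file() and obj.stat().st_mtime >= cutoff:
                # Directory name plus file name is the full object ID.
                ids.append(fanout.name + obj.name)
    return ids
```

Calling `recent_loose_objects(".git")` from the top of a repository gives a list of IDs, and each one can then be examined with `git cat-file -t <id>` (to see its type) and `git cat-file -p <id>` (to see its contents).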

Looking back at that previous article, I see that it said:

it only took about twenty minutes… suppose that it had taken much longer, say forty minutes instead of twenty, to rescue the lost blobs from the repository. Would that extra twenty minutes have been time wasted? No! … The rescue might have cost twenty extra minutes, but if so it was paid back with forty minutes of additional Git expertise…

To that I would like to add that the time spent writing up the blog article was also well spent, because it meant that seven years later I didn't have to figure everything out again; I just followed my own instructions from last time.

But there's a lesson here I'm still trying to figure out. Suppose I want to prevent this sort of error in the future. The obvious answer is “stop splatting stuff onto the terminal without paying attention, jackass”, but that strategy wasn't sufficient this time around and I couldn't think of any way to make it more likely to work next time around.

You have to play the hand you're dealt. If I can't fix myself, maybe I can fix the software. I would like to make some changes to the pre-commit hook to make it easier to recover from something like this.

My first idea was that the hook could unconditionally save the staged changes somewhere before it started, and then once it was sure that it would complete it could throw away the saved changes. For example, it might use the stash for this.

(Although, strangely, git-stash does not seem to have an easy way to say “stash the current changes, but without removing them from the working tree”. Maybe git-stash save followed by git-stash apply would do what I wanted? I have not yet experimented with it.)

Rather than using the stash, the hook might just commit everything (with commit -n to prevent infinite loops) and then reset the commit immediately, before doing whatever it was planning to do. Then if it was successful, Git would make a second, permanent commit and we could forget about the one made by the hook. But if something went wrong, the hook's commit would still be in the reflog. This doubles the number of commits you make. That doesn't take much time, because Git commit creation is lightning fast. But it would tend to clutter up the reflog.

Thinking on it now, I wonder if a better approach isn't to turn the pre-commit hook into a post-commit hook. Instead of a pre-commit hook that does this:

- Check for errors in staged files
- If there are errors:
  - Fix the files (if appropriate)
  - Print a message
  - Fail
- Otherwise:
  - Exit successfully
  - (git-commit continues and commits the changes)

How about a post-commit hook that does this:

- Check for errors in the files that changed in the current head commit
- If there are errors:
  - Soft-reset back to the previous commit
  - Fix the files (if appropriate)
  - Print a message
  - Fail
- Otherwise:
  - Exit successfully

Now suppose I ignore the failure, and throw away the staged changes. It's okay, the changes were still committed and the commit is still in the reflog. This seems clearly better than my earlier ideas.
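A minimal sketch of such a post-commit hook, written in Python, might look like the following. It is not the actual hook: the `FORBIDDEN` list, the helper names, and the message format are all assumptions made for illustration, and the "fix the files" step is left out.

```python
#!/usr/bin/env python3
import subprocess
import sys

# Hypothetical markers; the real hook's list is not given in the article.
FORBIDDEN = ("XXX",)


def find_forbidden(text, markers=FORBIDDEN):
    """Return (line number, marker) pairs for every marker found in text."""
    return [
        (lineno, marker)
        for lineno, line in enumerate(text.splitlines(), start=1)
        for marker in markers
        if marker in line
    ]


def git(*args):
    """Run a git command and return its output, tolerating non-UTF-8 bytes."""
    result = subprocess.run(
        ("git",) + args, check=True, capture_output=True,
        text=True, errors="replace",
    )
    return result.stdout


def main():
    # Files added or modified by the head commit; -z gives NUL-separated names.
    out = git("diff-tree", "--root", "--no-commit-id", "--name-only",
              "-r", "-z", "--diff-filter=AM", "HEAD")
    problems = []
    for name in filter(None, out.split("\0")):
        for lineno, marker in find_forbidden(git("show", "HEAD:" + name)):
            problems.append("{}:{}: contains {}".format(name, lineno, marker))
    if not problems:
        return 0
    # Undo the commit but keep its changes staged. The commit itself stays
    # reachable from the reflog. (This fails on a repository's first commit.)
    git("reset", "--soft", "HEAD~1")
    print("\n".join(problems), file=sys.stderr)
    return 1

# When installed as .git/hooks/post-commit, the file would end with:
#     sys.exit(main())
```

One caveat: Git does not act on a post-commit hook's exit status, so "Fail" here can only mean printing the message and returning nonzero. The soft reset is what actually undoes the commit.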

I'll consider it further and report back if I actually do anything about this.

Larry Wall once said that too many programmers will have a problem, think of a solution, and implement it, but it works better if you can think of several solutions, then implement the one you think is best.

That's a lesson I think I have learned. Thanks, Larry.

Addendum

I see that Eric Raymond's version of the jargon file, last revised December 2003, omits “footgun”. Surely this word is not that new? I want to see if it was used on Usenet prior to that update, but Google Groups search is useless for this question. Does anyone have suggestions for how to proceed?

[Other articles in category /prog/git] permanent link

Fri, 15 Dec 2023

Recent addenda to articles 202311: Christenings in Tel Aviv

[ Content warning: extremely miscellaneous. ]

Wow, has it really been 7 months since I did one of these? Surprising, then, how few there are. (I omitted the ones that seemed trivial, and the ones that turned into complete articles.)

Back in 2018 I wrote an article that mentioned two alleys in Tel Aviv and quoted an article from Haaretz that said (in part):

A wealthy American businessman … had christened the streets without official permission… .

Every time I go back to read this I am brought up short by the word “christened”, in an article in Haaretz, in connection with the naming of streets in Tel Aviv. A christening is a specifically Christian baptism and naming ceremony. It's right there in the word!

Orwell's essay on Politics and the English Language got into my blood when I was quite young. Orwell's thesis is that language is being warped by the needs of propaganda. The world is full of people who (in one of Orwell's examples) want to slip the phrase “transfer of population” past you before you can realize that what it really means is “millions of peasants are robbed of their farms and sent trudging along the roads with no more than they can carry”. Writers are exposed to so much of this purposefully vague language that they learn to imitate it even when they are not trying to produce propaganda.

I don't mean to say that that's what the Haaretz writer was doing, intentionally or unintentionally. My claim is only that in this one case, because she wasn't thinking carefully about the meanings of the words she chose, she chose a hilariously inept one. Because of an early exposure to Orwell, that kind of mischoice jumps out at me.

This is hardly the most memorable example I have. The prize for that belongs to my mother, who once, when she was angry with me, called me a “selfish bastard”. This didn't have the effect she intended, because I was so distracted by the poor word choice.

Anyway, the Orwell thing is good. Brief and compelling. Full of good style advice. Check it out.

In 2019, I wrote an article about men who are the husbands of someone important and gave as examples the billionaire husband of Salma Hayek and the Nobel prizewinning husband of Marie Curie. I was not expecting that I would join this august club myself! In April, Slate ran an article about my wife in which I am referred to only as “Kim's husband”. (Judy Blume's husband is also mentioned, and having met him, I am proud to be in the same club.)

Also, just today I learned that Antoine Veil is interred in the Panthéon, but only because he was married to Simone Veil.

In an ancient article about G.H. Hardy I paraphrased from memory something Hardy had said about Ramanujan. In later years Hardy's book became available on the Internet, so I was able to append the exact quotation.

A few years ago I wrote a long article about eggplants in which I asked:

Wasn't there a classical Latin word for eggplant? If so, what was it? Didn't the Romans eat eggplant? How do you conquer the world without any eggplants?

I looked into this a bit and was amazed to discover that the Romans did not eat eggplant. I can only suppose that it was because they didn't have any, poor benighted savages. No wonder the Eastern Roman Empire lasted three times as long.

[Other articles in category /addenda] permanent link

Sun, 03 Dec 2023

Over the weekend a Gentle Reader sent me an anecdote about getting lost in a Czech zoo. He had a map with a compass rose, and the points of the compass were labeled SVZJ. Gentle Reader expected that S and V were south and west, as they are in many European languages. (For example, Danish has syd and vest; English has “south” and “vvest”, sorry, “west”.)

Unfortunately in Czech, S and V are sever, “north”, and východ, “east”. Oops.

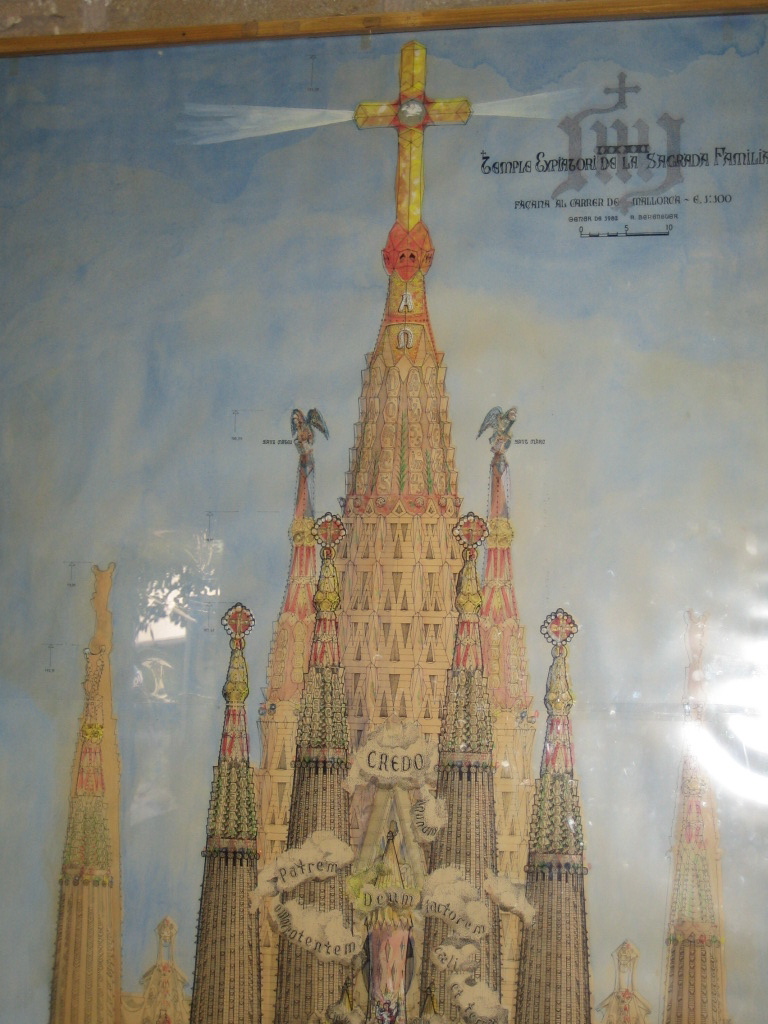

A while back I was thinking about the names of the cardinal directions in Catalán because I was looking at a Catalán map of the Sagrada Família, and observed that the Catalán word for east, llevant, is a form of llevar, which literally means “to rise”, because the east is where the sun rises. (Llevar is from Latin levāre and is akin to words like “levity” and “levitate”.) Similarly the Latin word for “east” is oriēns, from orior, to get up or to arise.

I looked into the Czech a little more and learned that východ, “east”, is also the Czech word for “exit”:

“Aha,” I said. “They use východ for “east” not because that's where the sun comes up but because that's where it enters…”

“…”

“Uh…”

No. Entrance is not exit. Východ is exit. Entrance is vchod.

I dunno, man. I love the Czechs, but this is a little messed up.

Addenda

I think I recall that sever, “north”, is thought to be maybe akin to “shower”, since the north is whence the cold rains come, but maybe I made that up.

[ Late update: Nope, I just found that I mentioned the same thing in this earlier article about compass directions. ]

An earlier version of this article had an error about the Catalán. Thanks to Alex Corcoles for pointing this out.

I never mentioned the other Czech compass points. They are: sever, north; východ, east; západ, west; jih, south. Východ seems to be related to Russian восто́к (/vostók/) but I'm not sure how.

20231220: A Gentle Reader asked about the pronunciation of vchod as compared with východ. Is it some unpronounceable Czechism? Nope! I was very pleased with the analogous example I found: the difference is no harder to hear or to say than the difference between “climb” and “keylime”.

[Other articles in category /lang/etym] permanent link

Sat, 02 Dec 2023

Content warning: grumpy complaining. This was a frustrating month.

Need an intuitive example for how "P is necessary for Q" means "Q⇒P"?

This kind of thing comes up pretty often. Why are there so many ways that the logical expression !!Q\implies P!! can appear in natural language?

- If !!Q!!, then !!P!!

- !!Q!! implies !!P!!

- !!P!! if !!Q!!

- !!Q!! is sufficient for !!P!!

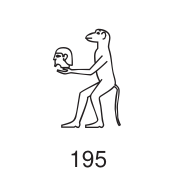

- !!P!! is necessary for !!Q!!

Strange, isn't it? !!Q\land P!! is much simpler: “Both !!Q!! and !!P!! are true” is pretty much it.

Anyway this person wanted an intuitive example of “!!P!! is necessary for !!Q!!”.

I suggested:

Suppose that it is necessary to have a ticket (!!P!!) in order to board a certain train (!!Q!!). That is, if you board the train (!!Q!!), then you have a ticket (!!P!!).

Again this follows the principle that rule enforcement is a good thing when you are looking for intuitive examples. Keeping ticketless people off the train is something that the primate brain is wired up to do well.
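For anyone who would rather see the equivalence checked mechanically, here is a small truth-table sketch. The variable names `has_ticket` (!!P!!) and `boards` (!!Q!!) are just labels for the train example, and "necessary" is read as "without the ticket, no boarding".

```python
from itertools import product


def implies(a, b):
    """Material implication: false only when a is true and b is false."""
    return (not a) or b


table = []
for has_ticket, boards in product([False, True], repeat=2):
    necessary = implies(not has_ticket, not boards)  # no ticket, no boarding
    q_implies_p = implies(boards, has_ticket)        # boarded, so has a ticket
    table.append((has_ticket, boards, necessary, q_implies_p))

for row in table:
    print(row)
```

The last two columns agree in every row, and the only row where they are false is the one with a passenger on the train and no ticket.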

My first draft had “board a train” in place of “board a certain train”. One commenter complained:

many people travel on trains without a ticket, worldwide

I was (and am) quite disgusted by this pettifogging.

I said “Suppose that…”. I was not claiming that the condition applies to every train in all of history.

OP had only asked for an example, not some universal principle.

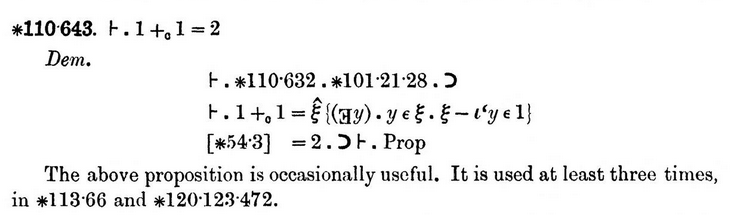

Does ...999.999... = 0?

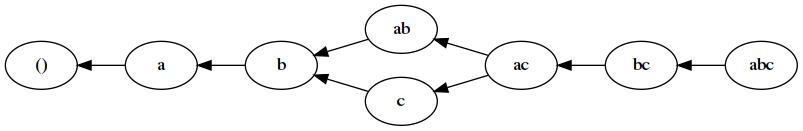

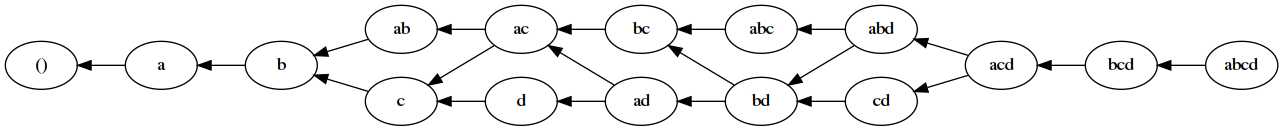

This person is asking one of those questions that often puts Math StackExchange into the mode of insisting that the idea is completely nonsensical, when it is actually very close to perfectly mundane mathematics. (Previously: [1] [2] [3] ) That didn't happen this time, which I found very gratifying.

Normally, decimal numerals have a finite integer part on the left of the decimal point, and an infinite fractional part on the right of the decimal point, as with (for example) !!\frac{13}{3} = 4.333\ldots!!. It turns out to work surprisingly well to reverse this, allowing an infinite integer part on the left and a finite fractional part on the right, for example !!\frac{1}{15} = \ldots 333.4!!. For technical reasons we usually do this in base !!p!! where !!p!! is prime; it doesn't work as well in base !!10!!. But it works well enough to use: If we have the base-10 numeral !!\ldots 9999.0!! and we add !!1!!, using the ordinary elementary-school right-to-left addition algorithm, the carry in the units place goes to the tens place as usual, then the next carry goes to the hundreds place and so on to infinity, leaving us with !!\ldots 0000.0!!. So !!\ldots 9999.0!! can be considered a representation of the number !!-1!!, and that means we don't need negation signs.

In fact this system is fundamental to the way numbers are represented in computer arithmetic. Inside the computer the integer !!-1!! is literally represented as the base-2 numeral !!11111111\;11111111\;11111111\;11111111!!, and when we add !!1!! to it the carry bit wanders off toward infinity on the left. (In the computer the numeral is finite, so we simulate infinity by just discarding the carry bit when it gets too far away.)
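The carry wandering off to the left is easy to watch in a few lines of Python. This is only a sketch: `add_right_to_left` and `to_bits` are names invented here, and the "infinite" numerals are simulated by fixed-width digit lists whose final carry is thrown away.

```python
def add_right_to_left(a_digits, b_digits, base=10):
    """Schoolbook addition of two equal-length digit lists (most significant
    digit first). The carry out of the leftmost place is discarded."""
    result = []
    carry = 0
    for a, b in zip(reversed(a_digits), reversed(b_digits)):
        carry, digit = divmod(a + b + carry, base)
        result.append(digit)
    return result[::-1]


def to_bits(n, bits=32):
    """Two's-complement numeral for n as a string of `bits` binary digits."""
    return format(n & ((1 << bits) - 1), "0{}b".format(bits))


# ...9999 + 1, keeping eight digits: every place carries and leaves a zero.
print(add_right_to_left([9] * 8, [0] * 7 + [1]))

# The same thing in base 2 with 32 digits, which is how -1 + 1 works in hardware.
print(to_bits(-1))
print(add_right_to_left([1] * 32, [0] * 31 + [1], base=2))
```

Both additions come out as all zeroes, and `to_bits(-1)` is a string of thirty-two 1s.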

Once you've seen this a very reasonable next question is whether you can have numbers that have an infinite sequence of digits on both sides. I think something goes wrong here — for one thing it is no longer clear how to actually do arithmetic. For the infinite-to-the-left numerals arithmetic is straightforward (elementary-school algorithms go right-to-left anyway) and for the standard infinite-to-the-right numerals we can sort of fudge it. (Try multiplying the infinite decimal for !!\sqrt 2!! by itself and see what trouble you get into. Or simpler: What's !!4.666\ldots \times 3!!?)

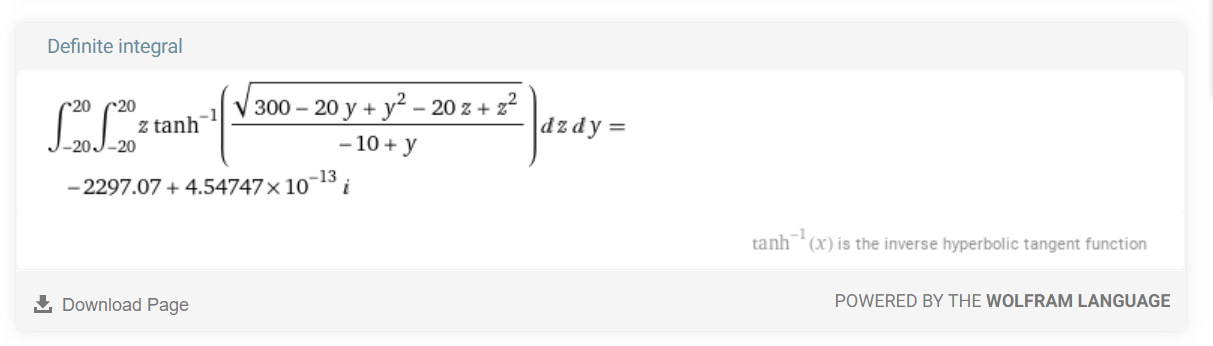

OP's actual question was: If !!\ldots 9999.0 !! can be considered to represent !!-1!!, and if !!0.9999\ldots!! can be considered to represent !!1!!, can we add them and conclude that !!\ldots 9999.9999\ldots = 0!!?

This very deserving question got a good answer from someone who was not me. This was a relief, because my shameful answer was pure shitpostery. It should have been heavily downvoted, but wasn't. The gods of Math SE karma are capricious.

Why define addition with successor?

Ugh, so annoying. OP had read (Bertrand Russell's explanation of) the Peano definition of addition, and did not understand it. Several people tried hard to explain, but communication was not happening. Or, perhaps, OP was more interested in having an argument than in arriving at an understanding. I lost a bit of my temper when they claimed:

Russell's so-called definition of addition (as quoted in my question) is nothing but a tautology: ….

I didn't say:

If you think Bertrand Russell is stupid, it's because you're stupid.

although I wanted to at first. The reply I did make is still not as measured as I would like, and although it leaves this point implicit, the point is still there. I did at least shut up after that. I had answered OP's question as well as I was able, and carrying on a complex discussion in the comments is almost never of value.

Why is Ramanujan considered a great mathematician?

This was easily my best answer of the month, but the question was deleted, so you will only be able to see it if you have enough Math SE reputation.

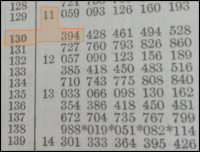

OP asked a perfectly reasonable question: Ramanujan gets a lot of media hype for stuff like this:

$${\sqrt {\phi +2}}-\phi ={\cfrac {e^{{-2\pi /5}}}{1+{\cfrac {e^{{-2\pi }}}{1+{\cfrac {e^{{-4\pi }}}{1+{\cfrac {e^{{-6\pi }}}{1+\,\cdots }}}}}}}}$$

which is not of any obvious use, so “why is it given such high regard?”

OP appeared to be impugning a famous mathematician, and Math SE always responds badly to that; their heroes must not be questioned. And even worse, OP mentioned the notorious non-fact that $$1+2+3+\ldots =-\frac1{12}$$ which drives Math SE people into a frothing rage.

One commenter argued:

Mathematics is not inherently about its "usefulness". Even if you can't find practical use for those formulas, you still have to admit that they are by no means trivial

I think this is fatuous. OP is right here, and the commenter is wrong. Mathematicians are not considered great because they produce wacky and impractical equations. They are considered great because they solve problems, invent techniques that answer previously impossible questions, and because they contribute insights into deep and complex issues.

Some blockhead even said:

Most of the mathematical results are useless. Mathematics is more like an art.

Bullshit. Mathematics is about trying to understand stuff, not about taping a banana to the wall. I replied:

I don't think “mathematics is not inherently about its usefulness" is an apt answer here. Sometimes mathematical results have application to physics or engineering. But for many mathematical results the application is to other parts of mathematics, and mathematicians do judge the ‘usefulness’ of results on this basis. Consider for example Mochizuki's field of “inter-universal Teichmüller theory”. This was considered interesting only as long as it appeared that it might provide a way to prove the !!abc!! conjecture. When that hope collapsed, everyone lost interest in it.

My answer to OP elaborated on this point:

The point of these formulas wasn't that they were useful in themselves. It's that in order to find them he had to have a deep understanding of matters that were previously unknown. His contribution was the deep understanding.

I then discussed Hardy's book on the work he did with Ramanujan and Hardy's own estimation of Ramanujan's work:

The first chapter is somewhat negative, as it summarizes the parts of Ramanujan's work that he felt didn't have lasting value — because Hardy's next eleven chapters are about the work that he felt did have value.

So if OP wanted a substantive and detailed answer to their question, that would be the first place to look.

I also did an arXiv search for “Ramanujan” and found many recent references, including one with “applications to the Ramanujan !!\tau!!-function”, and concluded:

The !!\tau!!-function is the subject of the entire chapter 10 of Hardy's book and appears to still be of interest as recently as last Monday.

The question was closed as “opinion-based” (a criticism that I think my answer completely demolishes) and then it was deleted. Now if someone else is trying to find out why Ramanujan is held in high regard, they will not be able to find my factual, substantive answer.

Screw you, Math SE. This month we both sucked.

[Other articles in category /math/se] permanent link

Fri, 01 Dec 2023

Obsolete spellings and new ligatures in the names of famous persons

There's this technique you learn in elementary calculus called l'Hospital's rule or l'Hôpital's rule, depending on where and when you learned it. It's named for Guillaume l'Hospital or Guillaume l'Hôpital.

In modern French the ‘s’ is silent before certain consonants, and sometime in the 18th century it became standard to omit it, instead putting a circumflex over the preceding vowel to show that the ‘s’ was lurking silently. You can see the same thing in many French words, where the relationship with English becomes clear if you remember that the circumflex indicates a silent letter ‘s’. For example

- côte (coste, coast)

- fête (feste, feast)

- île (isle, isle)

- pâte (paste, paste)

and of course

- hôpital (hospital, hospital)

But the spelling change from ‘os’ to ‘ô’ didn't become common until the 18th century and l'Hôpital, who died in 1704, spelled his name the old way, as “l'Hospital”. The spelling with the circumflex is in some sense an anachronism. I've always felt a little funny about this. I suppose the old spelling looks weird to francophones but I'm not a francophone and it seems weird to me to spell it in a way that l'Hospital himself would not have recognized.

For a long time I felt this way about English names also, and spelled Shakespeare's name “Shakspere”. I eventually gave up on this, because I thought it would confuse people. But I still think about the question every time I have to spell it and wonder what Shakespeare would have thought. Perhaps he would have thought nothing of it, living as he did in a time of less consistent orthography.

To find out the common practice, I went to the German Wikipedia page for Karl Gauss, for whom a similar issue arises. They spell it the modern way, “Gauß”. But now another issue intrudes: They spell it “Carl” and not “Karl”! If the name were completely modernized, wouldn't it be “Karl Gauß” and not “Carl Gauß”? Is “Carl” still a thing in German?

Gauss is glowering down at me from his picture on an old ten-mark banknote I keep on my wall, so I checked just now and Deutsche Bundesbank also spells it “Carl Gauß”. (The caption sprouts forth from his left shoulder.)

Now I wonder why I checked the German Wikipedia for Gauss before checking the French Wikipedia for l'Hôpital. Pure stupidity on my part. French Wikipedia uniformly spells it the modern way, with the circumflex.

I suppose I will have to change my practice, and feel the same strangeness whenever I write “Gauß” or “l'Hôpital” as I do when I write “Shakespeare”.

Addenda

Math SE search for l'Hôpital produces 9,336 hits including many that omit the ‘s’ entirely, “l'Hopital”. A search for l'Hospital produces a surprisingly large 5,593 hits.

I also consulted the Chicago Manual of Style but found nothing helpful.

I once knew a graduate student named Chris Geib, who explained to me that his German ancestors had probably been named “Geiß” (“goat”) but that the ß was misinterpreted at some point.

[Other articles in category /lang] permanent link

Mon, 27 Nov 2023

Uncountable sets for seven-year-olds

I was recently talking to a friend whose seven-year-old had been reading about the Hilbert Hotel paradoxes. One example: The hotel is completely full when a bus arrives with 53 passengers seeking rooms. Fortunately the hotel has a countably infinite number of rooms, and can easily accommodate 53 more guests even when already full.

My friend mentioned that his kid had been unhappy with the associated discussion of uncountable sets, since the explanation he got involved something about people whose names are infinite strings, and it got confusing. I said yes, that is a bad way to construct the example, because names are not infinite strings, and even one infinite string is hard to get your head around. If you're going to get value out of the hotel metaphor, you waste an opportunity if you abandon it for some weird mathematical abstraction. (“Okay, Tyler, now let !!\mathscr B!! be a projection from a vector bundle onto a compact Hausdorff space…”)

My first attempt on the spur of the moment involved the guests belonging to clubs, which meet in an attached convention center with a countably infinite sequence of meeting rooms. The club idea is good but my original presentation was overcomplicated and after thinking about the issue a little more I sent this email with my ideas for how to explain it to a bright seven-year-old.

Here's how I think it should go. Instead of a separate hotel and convention center, let's just say that during the day the guests vacate their rooms so that clubs can meet in the same rooms. Each club is assigned one guest room that they can use for their meeting between the hours of 10 AM and 4 PM. The guest has to get out of the room while that is happening, unless they happen to be a member of the club that is meeting there, in which case they may stay.

If you're a guest in the hotel, you might be a member of the club that meets in your room, or you might not be a member of the club that meets in your room, in which case you have to leave and go to a meeting of one of your clubs in some other room.

We can paint the guest room doors blue and green: blue, if the guest there is a member of the club that meets in that room during the day, and green if they aren't. Every door is now painted blue or green, but not both.

Now I claim that when we were assigning clubs to rooms, there was a club we missed that has nowhere to meet. It's the Green Doors Club of all the guests who are staying in rooms with green doors.

If we did assign the Green Doors Club a guest room in which to meet, that door would be painted green or blue.

The Green Doors Club isn't meeting in a room with a blue door. The Green Doors Club only admits members who are staying in rooms with green doors. That guest belongs to the club that meets in their room, and it isn't the Green Doors Club because the guest's door is blue.

But the Green Doors Club isn't meeting in a room with a green door. We paint a door green when the guest is not a member of the club that meets in their room, and this guest is a member of the Green Doors Club.

So however we assigned the clubs to the rooms, we must have missed out on assigning a room to the Green Doors Club.

One nice thing about this is that it works for finite hotels too. Say you have a hotel with 4 guests and 4 rooms. Well, obviously you can't assign a room to each club because there are 16 possible clubs and only 4 rooms. But the blue-green argument still works: you can assign any four clubs you want to the four rooms, then paint the doors, then figure out who is in the Green Doors Club, and then observe that, in fact, the Green Doors Club is not one of the four clubs that got a room.

Then you can reassign the clubs to rooms, this time making sure that the Green Doors Club gets a room. But now you have to repaint the doors, and when you do you find out that membership in the Green Doors Club has changed: some new members were admitted, or some former members were expelled, so the club that meets there is no longer the Green Doors Club, it is some other club. (Or if the Green Doors Club is meeting somewhere, you will find that you have painted the doors wrong.)
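The four-room version is small enough to try by machine. In this sketch guest number i is the one staying in room i, a club is a set of guest numbers, and `green_doors_club` is a name made up for the illustration. The particular assignment of clubs to rooms is arbitrary.

```python
def green_doors_club(assignment):
    """Guests whose own room hosts a club they do not belong to.

    assignment[i] is the club (a frozenset of guest numbers) that meets in
    room i, and guest i is the one staying in room i.
    """
    return frozenset(g for g, club in enumerate(assignment) if g not in club)


# Four guests, four rooms, and an arbitrary choice of four of the 16 clubs.
assignment = [frozenset({0, 1}), frozenset({2}), frozenset(), frozenset({0, 1, 2, 3})]
club = green_doors_club(assignment)
print(sorted(club), club in assignment)        # [1, 2] False

# Give the Green Doors Club room 0 and repaint: its membership changes.
reassigned = [club] + assignment[1:]
print(sorted(green_doors_club(reassigned)))    # [0, 1, 2]
```

Here rooms 1 and 2 get green doors, so the Green Doors Club is guests 1 and 2, and no room was assigned to it. Handing it room 0 turns that door green as well, which admits guest 0, so the club meeting in room 0 is once again not the Green Doors Club.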

I think this would probably work. The only thing that's weird about it is that some clubs have an infinite number of members so that it's hard to see how they could all squeeze into the same room. That's okay, not every member attends every meeting of every club they're in, that would be impossible anyway because everyone belongs to multiple clubs.

But one place you could go from there is: what if we only guarantee rooms to clubs with a finite number of members? There are only a countably infinite number of clubs then, so they do all fit into the hotel! Okay, Tyler, but what happens to the Green Doors Club then? I said all the finite clubs got rooms, and we know the Green Doors Club never gets a room, so what can we conclude?

It's tempting to try to slip in a reference to Groucho Marx, but I think it's unlikely that that will do anything but confuse matters.

[ Previously ]

[ Update: My friend said he tried it and it didn't go over as well as I thought it might. ]

[Other articles in category /math] permanent link

Sun, 26 Nov 2023

A Qmail example of dealing with unavoidable race conditions

[ I recently posted about a race condition bug reported by Joe Armstrong and said “this sort of thing is now in the water we swim in, but it wasn't yet [in those days of olde].” This is more about that. ]

I learned a lot by reading everything Dan Bernstein wrote about the design of qmail. A good deal of it is about dealing with potential issues just like Armstrong's. The mail server might crash at any moment, perhaps because someone unplugged the server. In DJB world, it is unacceptable for mail to be lost, ever, and also for the mail queue structures to be corrupted if there was a crash. That sounds obvious, right? Apparently it wasn't; sendmail would do those things.

(I know someone wants to ask what about Postfix? At the time Qmail was released, Postfix was still called ‘VMailer’. The ‘V’ supposedly stood for “Venema” but the joke was that the ‘V’ was actually for “vaporware” because that's what it was.)

A few weeks ago I was explaining one of Qmail's data structures to a junior programmer. Suppose a local user queues an outgoing message that needs to be delivered to 10,000 recipients in different places. Some of the deliveries may succeed immediately. Others will need to be retried, perhaps repeatedly. Eventually (by default, seven days) delivery will time out and a bounce message will be delivered back to the sender, listing the recipients who did not receive the delivery. How does Qmail keep track of this information?

2023 junior programmer wanted to store a JSON structure or something. That is not what Qmail does. If the server crashes halfway through writing a JSON file, it will be corrupt and unreadable. JSON data can be written to a temporary file and the original can be replaced atomically, but suppose you succeed in delivering the message to 9,999 of the 10,000 recipients and the system crashes before you can atomically update the file? Now the deliveries will be re-attempted for those 9,999 recipients and they will get duplicate copies.

Here's what Qmail does instead. The file in the queue directory is in the following format:

Trecip1@host1■Trecip2@host2■…Trecip10000@host10000■

where ■ represents a zero byte. To 2023 eyes this is strange and uncouth, but to a 20th-century system programmer, it is comfortingly simple.

When Qmail wants to attempt a delivery to recip1346@host1346 it has located that address in the file and seen that it has a T (“to-do”) on the front. If it had been a D (“done”) Qmail would know that delivery to that address had already succeeded, and it would not attempt it again.

If delivery does succeed, Qmail updates the T to a D:

if (write(fd,"D",1) != 1) { close(fd); break; }

/* further errors -> double delivery without us knowing about it, oh well */

close(fd);

return;

The update of a single byte will be done all at once or not at all. Even writing two bytes is riskier: if the two bytes span a disk block boundary, the power might fail after only one of the modified blocks has been written out. With a single byte nothing like that can happen. Absent a catastrophic hardware failure, the data structure on the disk cannot become corrupted.

Mail can never be lost. The only thing that can go wrong here is if the local system crashes in between the successful delivery and the updating of the byte; in this case the delivery will be attempted again, to that one user.
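Here is a minimal Python sketch of the same idea, for readers who would rather see it than imagine it. It is not Qmail's code (that is the C fragment above); the function names are made up, and it assumes addresses are plain ASCII with no embedded zero bytes.

```python
import os

def build_queue_file(path, recipients):
    """Write one 'T' + address + zero byte record per recipient."""
    with open(path, "wb") as f:
        for addr in recipients:
            f.write(b"T" + addr.encode("ascii") + b"\0")

def read_records(path):
    """Return a list of (offset, status, address) tuples."""
    with open(path, "rb") as f:
        data = f.read()
    records = []
    offset = 0
    while offset < len(data):
        end = data.index(b"\0", offset)
        status = chr(data[offset])
        addr = data[offset + 1:end].decode("ascii")
        records.append((offset, status, addr))
        offset = end + 1
    return records

def mark_done(path, offset):
    """Overwrite the single status byte at `offset` with 'D'."""
    fd = os.open(path, os.O_WRONLY)
    try:
        os.lseek(fd, offset, os.SEEK_SET)
        if os.write(fd, b"D") != 1:
            raise OSError("short write")
    finally:
        os.close(fd)
```

The file never changes size and no record ever moves, so the only thing a crash can interrupt is the one-byte write in `mark_done`.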

Addenda

- I think the data structure could even be updated concurrently by more than one process, although I don't think Qmail actually does this. Can you run multiple instances of qmail-send that share a queue directory? (Even if you could, I can't think of any reason it would be a good idea.)

- I had thought the update was performed by qmail-remote, but it appears to be done by qmail-send, probably for security partitioning reasons. qmail-local runs as a variable local user, so it mustn't have permission to modify the queue file, or local users would be able to steal email. qmail-remote doesn't have this issue, but it would be foolish to implement the same functionality in two places without a really good reason.

[Other articles in category /prog] permanent link

Sat, 25 Nov 2023

Puzzling historical artifact in “Programming Erlang”?

Lately I've been reading Joe Armstrong's book Programming Erlang, and today I was brought up short by this passage from page 208:

Why Spawning and Linking Must Be an Atomic Operation

Once upon a time Erlang had two primitives, spawn and link, and spawn_link(Mod, Func, Args) was defined like this:

spawn_link(Mod, Func, Args) ->

Pid = spawn(Mod, Func, Args),

link(Pid),

Pid.

Then an obscure bug occurred. …

Can you guess the obscure bug? I don't think I'm unusually skilled at concurrent systems programming, and I'm certainly no Joe Armstrong, but I thought the problem was glaringly obvious:

The spawned process died before the link statement was called, so the process died but no error signal was generated.

I scratched my head over this for quite some time. Not over the technical part, but about how famous expert Joe Armstrong could have missed this.

Eventually I decided that it was just that this sort of thing is now in the water we swim in, but it wasn't yet in the primeval times Armstrong was writing about. Sometimes problems are ⸢obvious⸣ because it's thirty years later and everyone has thirty years of experience dealing with those problems.

Another example

I was reminded of a somewhat similar example. Before the WWW came, a sysadmin's view of network server processes was very different than it is now. We thought of them primarily as attack surfaces, and ran as few as possible, as little as possible, and tried hard to prevent anyone from talking to them.

Partly this was because encrypted, authenticated communications protocols were still an open research area. We now have ssh and https layers to build on, but in those days we built on sand.

Another reason is that networking itself was pretty new, and we didn't yet have a body of good technique for designing network services and protocols, or for partitioning trust. We didn't know how to write good servers, and the ones that had been written were bad, often very bad. Even thirty years ago, sendmail was notorious and had been a vector for mass security failures, and even something as innocuous-seeming as finger had turned out to have major issues.

When the Web came along, every sysadmin was thrust into a terrifying new world in which users clamored to write network services that could be talked to at all times by random Internet people all over the world. It was quite a change.

[ I wrote more about system race conditions, but decided to postpone it to Monday. Check back then. ]

[Other articles in category /prog] permanent link

Fri, 24 Nov 2023

Math SE report 2023-09: Sense and reference, Wason tasks, what is a sequence?

Proving there is only one proof?

OP asks:

In mathematics, is it possible to prove that there is only one (shortest) proof of a given theorem (say, in ZFC)?

This was actually from back in July, when there was a fairly substantive answer. But it left out what I thought was a simpler, non-substantive answer: For a given theorem !!T!! it's actually quite simple to prove that there is (or isn't) only one proof of !!T!!: just generate all possible proofs in order by length until you find the shortest proofs of !!T!!, and then stop before you generate anything longer than those. There are difficult and subtle issues in provability theory, but this isn't one of them.

I say “non-substantive” because it doesn't address any of the possibly interesting questions of why a theorem would have only one proof, or multiple proofs, or what those proofs would look like, or anything like that. It just answers the question given: is it possible to prove that there is only one shortest proof.

So depending on what OP was looking for, it might be very unsatisfying. Or it might be hugely enlightening, to discover that this seemingly complicated question actually has a simple answer, just because proofs can be systematically enumerated.

This comes in handy in more interesting contexts. Gödel showed that arithmetic contains a theorem whose shortest proof is at least one million steps long! He did it by constructing an arithmetic formula !!G!! which can be interpreted as saying:

!!G!! cannot be proved in less than one million steps.

If !!G!! is false, it can be proved (in less than one million steps) and our system is inconsistent. So assuming that our axioms are consistent, then !!G!! is true and either:

1. There is no proof at all of !!G!!, or

2. There are proofs of !!G!! but the shortest one is at least a million steps

Which is it? It can't be (1) because there is a proof of !!G!!: simply generate every single proof of one million steps or fewer, and check at the last line of each one to make sure that it is not !!G!!. So it must be (2).

What counts as a sequence, and how would we know that it isn't deceiving?

This is a philosophical question: What is a sequence, really? And:

if I write down random numbers with no pattern at all except for the fact that it gets larger, is it a viable sequence?

And several other related questions that are actually rather subtle: Is a sequence defined by its elements, or by some external rule? If the former, how can you know when a sequence is linear, when you can only hope to examine a finite prefix?

I think this is a great question because I think a sequence, properly construed, is both a rule and its elements. The definition says that a sequence of elements of !!S!! is simply a function !!f:\Bbb N\to S!!. This definition is a sort of spherical cow: it's a nice, simple model that captures many of the mathematical essentials of the thing being modeled. It works well for many purposes, but you get into trouble if you forget that it's just a model. It captures the denotation, but not the sense. I wouldn't yak so much about this if it wasn't so often forgotten. But the sense is the interesting part. If you forget about it, you lose the ability to ask questions like

Are sequences !!s_1!! and !!s_2!! the same sequence?

If all you have is the denotation, there's only one way to answer this question:

By definition, yes, if and only if !!s_1!! and !!s_2!! are the same function.

and there is nothing further to say about it. The question is pointless and the answer is useless. Sometimes the meaning is hidden a little deeper. Not this time. If we push down into the denotation, hoping for meaning, we find nothing but more emptiness:

Q: What does it mean to say that !!s_1!! and !!s_2!! are the same function?

A: It means that the sets $$S_1 = \{ \langle i, s_1(i) \rangle \mid i\in \Bbb N\}$$ and $$S_2 = \{ \langle i, s_2(i) \rangle \mid i\in \Bbb N\}$$ have exactly the same elements.

We could keep going down this road, but it goes nowhere and having gotten to the end we would have seen nothing worth seeing.

But we do ask and answer this kind of question all the time. For example:

- !!S_1(n)!! is the infinite sequence of odd numbers starting at !!1!!

- !!S_2(n)!! is the infinite sequence of numbers that are the difference between a square and its previous square, starting at !!1^2-0^2!!

Are sequences !!S_1!! and !!S_2!! the same sequence? Yes, yes, of course they are, don't focus on the answer. Focus on the question! What is this question actually asking?

The real essence of the question is not about the denotation, about just the elements. Rather: we're given descriptions of two possible computations, and the question is asking if these two computations will arrive at the same results in each case. That's the real question.
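Taken literally as two computations, !!S_1!! and !!S_2!! might look like the following Python sketch (the function names are mine, and I am indexing from !!n=1!!). Notice that running them can only ever compare a finite prefix; that the two agree everywhere is the algebraic fact !!n^2-(n-1)^2 = 2n-1!!, which no amount of running will establish.

```python
from itertools import count, islice

def odd_numbers():
    """S_1: the odd numbers 1, 3, 5, ..."""
    return (2 * n - 1 for n in count(1))

def square_differences():
    """S_2: n^2 - (n-1)^2 for n = 1, 2, 3, ..."""
    return (n * n - (n - 1) * (n - 1) for n in count(1))

def agree_on_prefix(s1, s2, length):
    """Compare only the first `length` terms of two infinite generators."""
    return list(islice(s1, length)) == list(islice(s2, length))

print(agree_on_prefix(odd_numbers(), square_differences(), 1000))  # True
```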

Well, I started this blog article back in October and it's still not ready because I got stuck writing about this question. I think the answer I gave on SE is pretty good. OP asked what is essentially a philosophical question, and the backbone of my answer is on the level of philosophy rather than mathematics.

[ Addendum: On review, I am pleasantly surprised that this section of the blog post turned out both coherent and relevant. I really expected it to be neither. A Thanksgiving miracle! ]

Can inequalities be added the way that equations can be added?

OP says:

Suppose you have !!x + y > 6!! and !!x - y > 4!!. Adding the inequalities, the !!y!! terms cancel and you end up with … !!x > 5!!. It is not intuitively obvious to me that this holds true … I can see that you can't subtract inequalities, but is it always okay to add them?

I have a theory that if someone is having trouble with the intuitive meaning of some mathematical property, it's a good idea to turn it into a question about fair allocation of resources, or who has more of some commodity, because human brains are good at monkey tasks like seeing who got cheated when the bananas were shared out.

About ten years ago someone asked for an intuitive explanation of why you could add !!\frac a2!! to both sides of !!\frac a2 < \frac b2!! to get !!\frac a2+\frac a2 < \frac a2 + \frac b2!!. I said:

Say I have half a bag of cookies, that's !!\frac a2!! cookies, and you have half a carton of cookies, that's !!\frac b2!! cookies, and the carton is bigger than the bag, so you have more than me, so that !!\frac a2 < \frac b2!!.

Now a friendly djinn comes along and gives you another half a bag of cookies, !!\frac a2!!. And to be fair he gives me half a bag too, also !!\frac a2!!.

So you had more cookies before, and the djinn gave each of us an extra half a bag. Then who has more now?

I tried something similar this time around:

Say you have two bags of cookies, !!a!! and !!b!!. A friendly baker comes by and offers to trade with you: you will give the baker your bag !!a!! and in return you will get a larger bag !!c!! which contains more cookies. That is, !! a \lt c !!. You like cookies, so you agree.

Then the baker also trades your bag !!b!! for a bigger bag !!d!!.

Is it possible that you might not have more cookies than before you made the trades? … But that's what it would mean if !! a\lt c !! and !! b\lt d !! but not !! a+b \lt c+d !! too.
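Transcribed into symbols, the two trades are two separate steps, each of which can only increase the total. This is just the cookie argument again, with the same !!a, b, c, d!!:

$$ \begin{align} a + b & \lt c + b & & \text{(trade bag $a$ for bag $c$)} \\ c + b & \lt c + d & & \text{(trade bag $b$ for bag $d$)} \end{align} $$

so !!a + b \lt c + d!!. In OP's example, adding !!x + y > 6!! and !!x - y > 4!! gives !!2x > 10!!, which is !!x > 5!!.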

Someday I'll write up a whole blog article about this idea, that puzzles in arithmetic sometimes become intuitively obvious when you turn them into questions about money or commodities, and that puzzles in logic sometimes become intuitively obvious when you turn them into questions about contract and rule compliance.

I don't remember why I decided to replace the djinn with a baker this time around. The cookies stayed the same though. I like cookies. Here's another cookie example, this time to explain why !!1\div 0.5 = 2!!.

What is the difference between "for all" and "there exists" in set builder notation?

This is the same sort of thing again. OP was asking about

$$B = \{n \in \mathbb{N} : \forall x \in \mathbb{N} \text{ and } n=2^x\}$$

but attempting to understand this is trying to swallow two pills at once. One pill is the logic part (what role is the !!\forall!! playing) and the other pill is the arithmetic part having to do with powers of !!2!!. If you're trying to understand the logic part and you don't have an instantaneous understanding of powers of !!2!!, it can be helpful to simplify matters by replacing the arithmetic with something you understand intuitively. In place of the relation !!a = 2^b!! I like to use the relation “!!a!! is the mother of !!b!!”, which everyone already knows.
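If you prefer to poke at the logic part with a computer, here is a small Python sketch. It assumes a finite stand-in for !!\Bbb N!! (the bound 64 is arbitrary) so that the sets can actually be computed, and it reads the “and” in OP's formula as “for all !!x!!, !!n = 2^x!!”.

```python
# A finite stand-in for the natural numbers, so the sets can be computed.
# (The bound 64 is arbitrary; with all of N the "for all" set is still empty.)
N = range(64)

# { n in N : for all x in N, n = 2^x }
B_forall = {n for n in N if all(n == 2**x for x in N)}

# { n in N : there exists x in N with n = 2^x }
B_exists = {n for n in N if any(n == 2**x for x in N)}

print(sorted(B_forall))  # []
print(sorted(B_exists))  # [1, 2, 4, 8, 16, 32]
```

No single !!n!! can equal every power of !!2!! at once, so the “for all” version is empty, just as nobody is the mother of everyone.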

Are infinities included in the closure of the real set !!\overline{\mathbb{R}}!!

This is a good question by the Chip Buchholtz criterion: The answer is much longer than the question was. OP wants to know if the closure of !!\Bbb R!! is just !!\Bbb R!! or if it's some larger set like !![-\infty, \infty]!!. They are running up against the idea that topological closure is not an absolute notion; it only makes sense in the context of an enclosing space.

I tried to draw an analogy between the closure and the complement of a set: Does the complement of the real numbers include the number !!i!!? Well, it depends on the context.

OP preferred someone else's answer, and I did too, saying:

I thought your answer was better because it hit all the important issues more succinctly!

I try to make things very explicit, but the downside of that is that it makes my answers longer, and shorter is generally better than longer. Sometimes it works, and sometimes it doesn't.

Vacuous falsehood - does it exist, and are there examples?

I really liked this question because I learned something from it. It brought me up short: “Huh,” I said. “I never thought about that.” Three people downvoted the question, I have no idea why.

I didn't know what a vacuous falsity would be either but I decided that since the negation of a vacuous truth would be false it was probably the first thing to look at. I pulled out my stock example of vacuous truth, which is:

All my rubies are red.

This is true, because all rubies are red, but vacuously so because I don't own any rubies.

Since this is a vacuous truth, negating it ought to give us a vacuous falsity, if there is such a thing:

I have a ruby that isn't red.

This is indeed false. And not in the way one would expect! A more typical false claim of this type would be:

I have a belt that isn't leather.

This is also false, in rather a different way. It's false, but not vacuously so, because to disprove it you have to get my belts out of the closet and examine them.
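Programmers meet the same distinction in the behavior of “all” and “any” on empty collections. A small Python sketch, with a made-up inventory:

```python
my_rubies = []                      # I don't own any rubies
my_belts = ["leather", "leather"]   # made-up contents of my closet

# "All my rubies are red": vacuously true, nothing to check.
all_rubies_red = all(color == "red" for color in my_rubies)

# "I have a ruby that isn't red": false, again with nothing to check.
have_non_red_ruby = any(color != "red" for color in my_rubies)

# "I have a belt that isn't leather": false, but only after looking at each belt.
have_non_leather_belt = any(material != "leather" for material in my_belts)

print(all_rubies_red, have_non_red_ruby, have_non_leather_belt)  # True False False
```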

Now though I'm not sure I gave the right explanation in my answer. I said:

In the vacuously false case we don't even need to read the second half of the sentence:

there is a ruby in my vault that …

… The irrelevance of the “…is not red” part is mirrored exactly in the irrelevance of the “… are red” part in the vacuously true statement:

all the rubies in my vault are …

But is this the right analogy? I could have gone the other way:

In the vacuously false case we don't even need to read the first half of the sentence:

there is a ruby … that is not red

… The irrelevance of the “… in my vault …” part is mirrored exactly in the irrelevance of the “… are red” part in the vacuously true statement:

all the rubies in my vault are …

Ah well, this article has been drying out on the shelf for a month now, I'm making an editorial decision to publish it without thinking about it any more.

[Other articles in category /math/se] permanent link

Mon, 23 Oct 2023

Katara is taking a Data Structures course this year. The most recent assignment gave her a lot of trouble, partly because it was silly and made no sense, but also because she does not yet know an effective process for writing programs, and the course does not attempt to teach her. On the day the last assignment was due I helped her fix the remaining bugs and get it submitted. This is the memo I wrote to her to memorialize the important process issues that I thought of while we were working on it.

You lost a lot of time and energy dealing with issues like: Using vim; copying files back and forth with scp; losing the network connection; the college shared machine is slow and yucky.

It's important to remove as much friction as possible from your basic process. Otherwise it's like trying to cook with dull knives and rusty pots, except worse because it interrupts your train of thought. You can't do good work with bad tools.

When you start the next project, start it in VSCode from the beginning. And maybe set aside an hour or two before you start in earnest, just to go through the VSCode tutorial and familiarize yourself with its basic features, without trying to do that at the same time you are actually thinking about your homework. This will pay off quickly.

It's tempting to cut corners when writing code. For example:

It's tempting to use the first variable or function name you think of instead of taking a moment to think of a suggestive one. You had three classes in your project, all with very similar names. You might imagine that this doesn't matter, you can remember which is which. But remembering imposes a tiny cost every time you do it. These tiny costs seem insignificant. But they compound.

It's tempting to use a short, abbreviated variable or method name instead of a longer more recognizable one because it's quicker to type. Any piece of code is read more often than it is written, so that is optimizing in the wrong place. You need to optimize for quick and easy reading, at the cost of slower and more careful writing, not the other way around.

It's tempting to write a long complicated expression instead of two or three shorter ones where the intermediate results are stored in variables. But then every time you look at the long expression you have to pause for a moment to remember what is going on.

It's tempting to repeat the same code over and over instead of taking the time to hide it behind an interface. For example your project was full of array[d-1900] all over. This minus-1900 thing should have been hidden inside one of the classes (I forget which). Any code outside that had to communicate with this class should have done so with full year numbers like 1926. That way, when you're not in that one class, you can ignore and forget about the issue entirely. Similarly, if code outside a class is doing the same thing in more than one place, it often means that the class needs another method that does that one thing. You add that method, and then the code outside can just call the method when it needs to do the thing. You advance the program by extending the number of operations it can perform without your thinking of them.

If something is messy, it is tempting to imagine that it doesn't matter. It does matter. Those costs are small but compound. Invest in cleaning up messy code, because if you don't the code will get worse and worse until the mess is a serious impediment. This is like what happens when you are cooking if you don't clean up as you go. At first it's only a tiny hindrance, but if you don't do it constantly you find yourself working in a mess, making mistakes, and losing and breaking things.
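To make the minus-1900 point concrete, here is roughly what I mean, sketched in Python rather than in the language of the actual assignment. The class name `YearTable` and its methods are invented for the illustration; they are not from the project.

```python
class YearTable:
    """Stores one value per year; only this class knows about the 1900 offset."""

    BASE_YEAR = 1900

    def __init__(self, num_years):
        self._values = [0] * num_years

    def _index(self, year):
        index = year - self.BASE_YEAR
        if not 0 <= index < len(self._values):
            raise ValueError(f"year {year} is out of range")
        return index

    def get(self, year):
        return self._values[self._index(year)]

    def set(self, year, value):
        self._values[self._index(year)] = value

    def add(self, year, amount):
        """Something callers kept doing by hand becomes a method."""
        self._values[self._index(year)] += amount


table = YearTable(150)
table.set(1926, 10)
table.add(1926, 5)
print(table.get(1926))  # 15
```

Code outside the class only ever says 1926. As a bonus, the one place that does the subtraction can also check for out-of-range years.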

Debugging is methodical. Always have clear in your mind what question you are trying to answer, and what your plan is for investigating that question. The process looks like this:

I don't like that it is printing out 0 instead of 1. Why is it doing that? Is the printing wrong, or is the printing correct but the data is wrong?

I should go into the function that does the printing, and print out the data in the simplest way possible, to see if it is correct. (If it's already printing out the data in the simplest way possible, the problem must be in the data.)

(Supposing that it's the data that is bad) Where did the bad data come from? If it came from some other function, what function was it? Did that function make up the wrong data from scratch, or did it get it from somewhere else?

If the function got the data from somewhere else, did it pass it along unchanged or did it modify the data? If it modified the data, was the data correct when the function got it, or was it already wrong?

The goal here is to point the Finger of Blame: What part of the code is really responsible for the problem? First you accuse the code that actually prints the wrong result. Then that code says “Nuh uh, it was like that when I got it, go blame that other guy that gave it to me.” Eventually you find the smoking gun.

Novice programmers often imagine that they can figure out what is wrong from looking at the final output and intuiting the solution Sherlock Holmes style. This is mistaken. Nobody can do this. Debugging is an engineering discipline: You come up with a hypothesis, then test the hypothesis. Then you do it again.

Ask Dad for assistance when appropriate. I promise not to do anything that would violate the honor code.

Something we discussed that I forgot to include in the memo is: After you fix something significant, or add significant new functionality, make a checkpoint copy of the entire source code. This can be as simple as copying it all into a separate folder. That way, when you are fixing the next thing, if you mess up and break everything, it's easy to get back to a known-good state. The computer is really clumsy to use for many tasks, but it's just great at keeping track of information, so exploit that when you can.
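If even the copying feels like friction, it can be a three-line script. A minimal Python sketch, in which the function name and the `checkpoint-` folder naming are my own invention:

```python
import shutil
import time
from pathlib import Path

def make_checkpoint(source_dir, checkpoint_root):
    """Copy the whole source tree into a new timestamped folder and return its path."""
    stamp = time.strftime("%Y%m%d-%H%M%S")
    destination = Path(checkpoint_root) / f"checkpoint-{stamp}"
    shutil.copytree(source_dir, destination)
    return destination
```

Keep the checkpoint folder outside the source folder, so that the copy does not try to include itself. Two checkpoints made in the same second would collide, and `copytree` would refuse to overwrite the first one, which is probably what you want.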

I think CS curricula should have a class that focuses specifically on these issues, on the matter of how do you actually write software?

But they never do.

[Other articles in category /prog] permanent link

When I first set up this blog I didn't know how long I would do it, so instead of thinking about how I wanted it to look, I just took the default layout that came with Blosxom, and figured that I would change it when I got around to it. Now I am getting around to it.

The primary problem is that the current implementation (with nested tables!) performs badly, especially on mobile devices and especially on pages with a lot of MathJax. A secondary issue is that it's troublesome to edit. People sometimes laugh at how it looks like a 2006 design (which it is). I don't much care about this. I like the information-dense layout, which I think is distinctive and on-brand.

I would like a redesign that fixes these two problems. The primary goal is to get rid of the nested tables and replace the implementation with something that browsers can handle better, probably something based on CSS Flexbox. It doesn't have to look very different, but it does need to be straightforward enough that I can make the next ten years of changes without a lot of research and experimentation.

If you know someone interested in doing this, please email me a referral or have them get in touch with me. If you are interested in doing it yourself, please send me a proposal. Include a cost estimate, as this would be paid work.

Please do not advertise this on Hacker News, as that would run the risk of my getting 100 proposals, and that would be 50 times as many as I need.

Thanks.

[Other articles in category /meta] permanent link

Sun, 22 Oct 2023

We used to have a cat named Chase. To be respectful we would sometimes refer to him as “Mr. Cat”. And sometimes I amused myself by calling him “Señor Gato”.

Yesterday I got to wondering: Where did Spanish get “gato”, which certainly sounds like “cat”, when the Latin is fēles or fēlis (like in “feline”)? And similarly French has chat.

Well, the real question is, where did Latin get fēles? Because Latin also has cattus, which I think sounds like a joke. You're in Latin class, and you're asked to translate cat, but you haven't done your homework, so what do you say? “Uhhhh… ‘cattus’?”

But cattus is postclassical Latin, replacing the original word fēles no more than about 1500 years ago. The word seems to have wandered all over Europe and Western Asia and maybe North Africa, borrowed from one language into another, and its history is thoroughly mixed up. Nobody is sure where it came from originally, beyond “something Germanic”. The OED description of cat runs to 600 words and shrugs its shoulders.

I learned recently that such words (like brinjal, the eggplant) are called Wanderworts, wandering words.

[Other articles in category /lang/etym] permanent link

Sat, 21 Oct 2023

The other day I was looking into vindaloo curry and was surprised to learn that the word “vindaloo” is originally Portuguese vin d'alho, a wine and garlic sauce. Amazing.

In Japanese, squashes are called kabocha. (In English this refers to a specific type of squash associated with Japan, but in Japanese it's more generic.) Kabocha is from Portuguese again. The Portuguese introduced squashes to Japan via Cambodia, which in Portuguese is Camboja.

[Other articles in category /lang/etym] permanent link

Fri, 20 Oct 2023

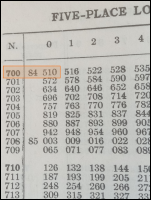

The discrete logarithm, shorter and simpler

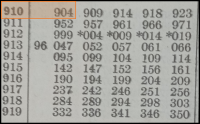

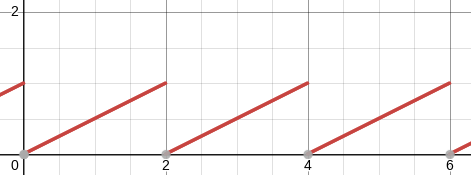

I recently discussed the “discrete logarithm” method for multiplying integers, and I feel like I took too long and made it seem more complicated and mysterious than it should have been. I think I'm going to try again.

Suppose for some reason you found yourself needing to multiply a lot of powers of !!2!!. What's !!4096·512!!? You could use the conventional algorithm:

$$ \begin{array}{cccccccc} & & & & 4 & 0 & 9 & 6 \\ \times & & & & & 5 & 1 & 2 \\ \hline & & & & 8 & 1 & 9 & 2 \\ & & & 4 & 0 & 9 & 6 & \\ & 2 & 0 & 4 & 8 & 0 & & \\ \hline & 2 & 0 & 9 & 7 & 1 & 5 & 2 \end{array} $$

but that's a lot of trouble, and a simpler method is available. You know that $$2^i\cdot 2^j = 2^{i+j}$$

so if you had an easy way to convert $$2^i\leftrightarrow i$$ you could just convert the factors to exponents, add the exponents, and convert back. And all that's needed is a simple table:

$$ \begin{array}{rr} 0 & 1\\ 1 & 2\\ 2 & 4\\ 3 & 8\\ 4 & 16\\ 5 & 32\\ 6 & 64\\ 7 & 128\\ 8 & 256\\ 9 & 512\\ 10 & 1\,024\\ 11 & 2\,048\\ 12 & 4\,096\\ 13 & 8\,192\\ 14 & 16\,384\\ 15 & 32\,768\\ 16 & 65\,536\\ 17 & 131\,072\\ 18 & 262\,144\\ 19 & 524\,288\\ 20 & 1\,048\,576\\ 21 & 2\,097\,152\\ \vdots & \vdots \\ \end{array} $$

We check the table, and find that $$4096\cdot512 = 2^{12}\cdot 2^9 = 2^{12+9} = 2^{21} = 2097152.$$ Easy-peasy.
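The whole method fits in a few lines of Python, if you want to see it as a program. The names `POWER` and `EXPONENT` are mine, and the table is cut off at !!2^{31}!! for no particular reason.

```python
# Exponent -> power table, and the reverse lookup.
POWER = {i: 2**i for i in range(32)}
EXPONENT = {p: i for i, p in POWER.items()}

def multiply_powers_of_two(x, y):
    """Multiply two powers of 2 with two lookups, one addition, and a lookup back."""
    return POWER[EXPONENT[x] + EXPONENT[y]]

print(multiply_powers_of_two(4096, 512))  # 2097152
```

Ask it to multiply something that isn't in the table, such as !!3!!, and it fails with a `KeyError`.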

That is all very well but how often do you find yourself having to multiply a lot of powers of !!2!!? This was a lovely algorithm but with very limited application.

What Napier (the inventor of logarithms) realized was that while not every number is an integer power of !!2!!, every number is an integer power of !!1.00001!!, or nearly so. For example, !!23!! is very close to !!1.00001^{313\,551}!!. Napier made up a table, just like the one above, except with powers of !!1.00001!! instead of powers of !!2!!. Then to multiply !!x\cdot y!! you would just find numbers close to !!x!! and !!y!! in Napier's table and use the same algorithm. (Napier's original table used powers of !!0.9999!!, but it works the same way for the same reason.)
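Here is a rough Python sketch of the !!1.00001!! version. Instead of storing an enormous table it computes the table entries on demand with `math.log` and `math.exp`, which is cheating, but it shows what the lookups would return. The function names are made up.

```python
import math

LOG_BASE = math.log1p(0.00001)   # natural log of 1.00001, computed accurately

def napier_exponent(x):
    """The integer nearest to the base-1.00001 logarithm of x (x must be positive)."""
    return round(math.log(x) / LOG_BASE)

def napier_power(k):
    """1.00001**k, standing in for the reverse table lookup."""
    return math.exp(k * LOG_BASE)

def napier_multiply(x, y):
    """Two lookups, one integer addition, one reverse lookup."""
    return napier_power(napier_exponent(x) + napier_exponent(y))

print(napier_exponent(23))              # 313551
print(napier_multiply(7.236, 13.877))   # approximately 100.41
```

Each lookup rounds to the nearest table entry, so the product is only approximate: in this sketch the relative error is at most about one part in !!100\,000!!.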

There's another way to make it work. Consider the integers mod !!101!!, called !!\Bbb Z_{101}!!. In !!\Bbb Z_{101}!!, every number is an integer power of !!2!!!

For example, !!27!! is a power of !!2!!. It's simply !!2^7!!, because if you multiply out !!2^7!! you get !!128!!, and !!128\equiv 27\pmod{101}!!.

Or:

$$\begin{array}{rcll} 14 & \stackrel{\pmod{101}}{\equiv} & 10\cdot 101 & + 14 \\ & = & 1010 & + 14 \\ & = & 1024 \\ & = & 2^{10} \end{array} $$

Or:

$$\begin{array}{rcll} 3 & \stackrel{\pmod{101}}{\equiv} & 5844512973848570809\cdot 101 & + 3 \\ & = & 590295810358705651709 & + 3 \\ & = & 590295810358705651712 \\ & = & 2^{69} \end{array} $$

Anyway that's the secret. In !!\Bbb Z_{101}!! the silly algorithm that quickly multiplies powers of !!2!! becomes more practical, because in !!\Bbb Z_{101}!!, every number is a power of !!2!!.

What works for !!101!! works in other cases larger and more interesting. It doesn't work to replace !!101!! with !!7!! (try it and see what goes wrong), but we can replace it with !!107, 797!!, or !!297779!!. The key is that if we want to replace !!101!! with !!n!! and !!2!! with !!a!!, we need to be sure that there is a solution to !!a^i=b\pmod n!! for every possible !!b!!. (The jargon term here is that !!a!! must be a “primitive root mod !!n!!”. !!2!! is a primitive root mod !!101!!, but not mod !!7!!.)
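If you would rather let the computer try it and see what goes wrong with !!7!!, here is a short Python sketch. The function names are mine; `pow(a, i, n)` is Python's built-in modular exponentiation.

```python
def is_primitive_root(a, n):
    """True if every number from 1 to n-1 is a power of a, mod n."""
    return {pow(a, i, n) for i in range(n - 1)} == set(range(1, n))

def log_table(a, n):
    """Map each power b of a (mod n) to an exponent i with a**i == b (mod n)."""
    return {pow(a, i, n): i for i in range(n - 1)}

def multiply_mod(x, y, a=2, n=101):
    """Multiply x*y mod n using table lookups and one addition of exponents."""
    table = log_table(a, n)
    return pow(a, table[x] + table[y], n)

print(is_primitive_root(2, 101))  # True
print(is_primitive_root(2, 7))    # False
print(log_table(2, 101)[3])       # 69
print(multiply_mod(7, 13))        # 91
```

Mod !!7!! the powers of !!2!! just cycle through !!1, 2, 4!!, so there is no table entry for !!3!!, !!5!!, or !!6!!.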

Is this actually useful for multiplication? Perhaps not, but it does have cryptographic applications. Similar to how multiplying is easy but factoring seems difficult, computing !!a^i\pmod n!! for given !!a, i, n!! is easy, but nobody knows a quick way in general to reverse the calculation and compute the !!i!! for which !!a^i\pmod n = m!! for a given !!m!!. When !!n!! is small we can simply construct a lookup table with !!n-1!! entries. But if !!n!! is a !!600!!-digit number, the table method is impractical. Because of this, Alice and Bob can find a way to compute a number !!2^i!! that they both know, but someone else, seeing !!2^i!! can't easily figure out what the original !!i!! was. See Diffie-Hellman key exchange for more details.
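A toy version of the exchange, in Python, using the same !!101!! and !!2!! as above. These numbers are far too small to protect anything, and the function names are invented for the sketch; it is only meant to show the shape of the thing.

```python
import secrets

P = 101   # toy modulus from the example above; far too small to be secure
G = 2     # a primitive root mod 101

def make_keypair():
    """Pick a secret exponent i and return (i, G**i mod P)."""
    secret = secrets.randbelow(P - 2) + 1    # 1 .. P-2
    return secret, pow(G, secret, P)

def shared_secret(my_secret, their_public):
    """Raise the other party's public value to my secret exponent, mod P."""
    return pow(their_public, my_secret, P)

alice_secret, alice_public = make_keypair()
bob_secret, bob_public = make_keypair()

# Only the public values cross the wire; both sides arrive at the same number.
assert shared_secret(alice_secret, bob_public) == shared_secret(bob_secret, alice_public)
```

With !!P = 101!! an eavesdropper can recover the secret exponent from the 100-entry lookup table. The scheme depends on !!P!! being so large that no such table can be built.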

[ Also previously: Percy Ludgate's weird variation on this ]

[Other articles in category /math] permanent link

Sun, 15 Oct 2023

[ Addendum 20231020: This came out way longer than it needed to be, so I took another shot at it, and wrote a much simpler explanation of the same thing that is only one-third as long. ]

A couple days ago I discussed the weird little algorithm of Percy Ludgate's, for doing single-digit multiplication using a single addition and three scalar table lookups. In Ludgate's algorithm, there were two tables, !!T_1!! and !!T_2!!, satisfying the following properties:

$$ \begin{align} T_2(T_1(n)) & = n \tag{$\color{darkgreen}{\spadesuit}$} \\ T_2(T_1(a) + T_1(b)) & = ab. \tag{$\color{purple}{\clubsuit}$} \end{align} $$

This has been called the “Irish logarithm” method because of its resemblance to ordinary logarithms. Normally in doing logarithms we have a magic logarithm function !!\ell!! with these properties:

$$ \begin{align} \ell^{-1}(\ell(n)) & = n \tag{$\color{darkgreen}{\spadesuit}$} \\ \ell^{-1}(\ell(a) + \ell(b)) & = ab. \tag{$\color{purple}{\clubsuit}$} \end{align} $$

(The usual notation for !!\ell(x)!! is of course “!!\log x!!” or “!!\ln x!!” or something of that sort, and !!\ell^{-1}(x)!! is usually written !!e^x!! or !!10^x!!.)

The properties of Ludgate's !!T_1!! and !!T_2!! are formally identical, with !!T_1!! playing the role of the logarithm function !!\ell!! and !!T_2!! playing the role of its inverse !!\ell^{-1}!!. Ludgate's versions are highly restricted, to reduce the computation to something simple enough that it can be implemented with brass gears.

Both !!T_1!! and !!T_2!! map positive integers to positive integers, and can be implemented with finite lookup tables. The ordinary logarithm does more, but is technically much more difficult. With the ordinary logarithm you are not limited to multiplying single digit integers, as with Ludgate's weird little algorithm. You can multiply any two real numbers, and the multiplication still requires only one addition and three table lookups. But the cost is huge! The tables are much larger and more complex, and to use them effectively you have to deal with fractional numbers, perform table interpolation, and worry about error accumulation.

It's tempting at this point to start explaining the history and use of logarithm tables, slide rules, and so on, but this article has already been delayed once, so I will try to resist. I will do just one example, with no explanation, to demonstrate the flavor. Let's multiply !!7!! by !!13!!.

I look up !!7!! in my table of logarithms and find that !!\log_{10} 7 \approx 0.84510!!.

I look up !!13!! similarly and find that !!\log_{10} 13 \approx 1.11394!!.

I add !!0.84510 + 1.11394 = 1.95904!!.

I do a reverse lookup on !!1.95904!! and find that the result is approximately !!91.00!!.
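For flavor, here is a minimal Python sketch of those four steps. The function name `multiply_with_log_table` and the `digits` parameter are made up for this illustration, and rounding `math.log10` to five places is only a stand-in for looking things up in a printed five-place table.

```python
import math

def multiply_with_log_table(a, b, digits=5):
    """Multiply a and b the log-table way: two lookups, one addition, one reverse lookup."""
    # Rounding imitates a printed table that only gives `digits` decimal places.
    log_a = round(math.log10(a), digits)   # first lookup
    log_b = round(math.log10(b), digits)   # second lookup
    total = log_a + log_b                  # the single addition
    return 10 ** total                     # reverse lookup (antilogarithm)

print(round(multiply_with_log_table(7, 13), 2))   # prints 91.0
```

The answer is only approximate, because the table entries were rounded before they were added.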

If I were multiplying !!7.236!! by !!13.877!!, I would be willing to accept all these costs, and generations of scientists and engineers did accept them. But for !!7.0000×13.000 = 91.000!! the process is ridiculous. One might wonder if there wasn't some analogous technique that would retain the small, finite tables, and permit multiplication of integers, using only integer calculations throughout. And there is!

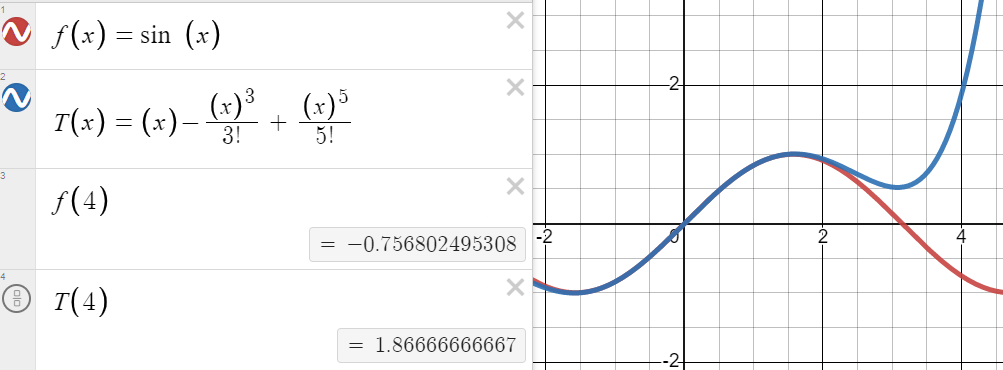

Now I am going to demonstrate an algorithm, based on logarithms, that exactly multiplies any two integers !!a!! and !!b!!, as long as !!ab ≤ 100!!. Like Ludgate's and the standard algorithm, it will use one addition and three lookups in tables. Unlike the standard algorithm, the tables will be small, and will contain only integers.

Here is the table of the !!\ell!! function, which corresponds to Ludgate's !!T_1!!:

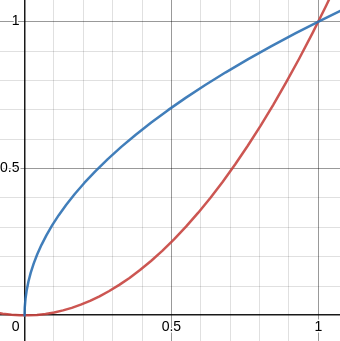

$$ \begin{array}{rrrrrrrrrrr} {\tiny\color{gray}{1}} & 0, & 1, & \color{darkblue}{69}, & 2, & 24, & 70, & \color{darkgreen}{9}, & 3, & 38, & 25, \\ {\tiny\color{gray}{11}} & 13, & \color{darkblue}{71}, & \color{darkgreen}{66}, & 10, & 93, & 4, & 30, & 39, & 96, & 26, \\ {\tiny\color{gray}{21}} & 78, & 14, & 86, & 72, & 48, & 67, & 7, & 11, & 91, & 94, \\ {\tiny\color{gray}{31}} & 84, & 5, & 82, & 31, & 33, & 40, & 56, & 97, & 35, & 27, \\ {\tiny\color{gray}{41}} & 45, & 79, & 42, & 15, & 62, & 87, & 58, & 73, & 18, & 49, \\ {\tiny\color{gray}{51}} & 99, & 68, & 23, & 8, & 37, & 12, & 65, & 92, & 29, & 95, \\ {\tiny\color{gray}{61}} & 77, & 85, & 47, & 6, & 90, & 83, & 81, & 32, & 55, & 34, \\ {\tiny\color{gray}{71}} & 44, & 41, & 61, & 57, & 17, & 98, & 22, & 36, & 64, & 28, \\ {\tiny\color{gray}{81}} & \color{darkred}{76}, & 46, & 89, & 80, & 54, & 43, & 60, & 16, & 21, & 63, \\ {\tiny\color{gray}{91}} & 75, & 88, & 53, & 59, & 20, & 74, & 52, & 19, & 51, & 50\hphantom{,} \\ \end{array} $$

(If we only want to multiply numbers with !!1\le a, b \le 9!! we only need the first row, but with the full table we can also compute things like !!7·13=91!!.)

Like !!T_2!!, this is not really a two-dimensional array. It is just a list of !!100!! numbers, arranged in rows to make it easy to find the !!81!!st number when you need it. The small gray numerals in the margin are a finding aid. If you want to look up !!\ell(81)!! you can see that it is !!\color{darkred}{76}!! without having to count up !!81!! elements. This element is highlighted in red in the table above.

Note that the elements are numbered from !!1!! to !!100!!, whereas all the other tables in these articles have been zero-indexed. I wondered if there was a good way to fix this, but there really isn't. !!\ell!! is analogous to a logarithm function, and the one thing a logarithm function really must do is to have !!\log 1 = 0!!. So too here; we have !!\ell(1) = 0!!.

We also need an !!\ell^{-1}!! table analogous to Ludgate's !!T_2!!:

$$ \begin{array}{rrrrrrrrrrr} {\tiny\color{gray}{0}} & 1, & 2, & 4, & 8, & 16, & 32, & 64, & 27, & 54, & 7, \\ {\tiny\color{gray}{10}} & 14, & 28, & 56, & 11, & 22, & 44, & 88, & 75, & 49, & 98, \\ {\tiny\color{gray}{20}} & 95, & 89, & 77, & 53, & 5, & 10, & 20, & 40, & 80, & 59, \\ {\tiny\color{gray}{30}} & 17, & 34, & 68, & 35, & 70, & 39, & 78, & 55, & 9, & 18, \\ {\tiny\color{gray}{40}} & \color{darkblue}{36}, & 72, & 43, & 86, & 71, & 41, & 82, & 63, & 25, & 50, \\ {\tiny\color{gray}{50}} & 100, & 99, & 97, & 93, & 85, & 69, & 37, & 74, & 47, & 94, \\ {\tiny\color{gray}{60}} & 87, & 73, & 45, & 90, & 79, & 57, & 13, & 26, & 52, & 3, \\ {\tiny\color{gray}{70}} & 6, & 12, & 24, & 48, & 96, & \color{darkgreen}{91}, & \color{darkred}{81}, & 61, & 21, & 42, \\ {\tiny\color{gray}{80}} & 84, & 67, & 33, & 66, & 31, & 62, & 23, & 46, & 92, & 83, \\ {\tiny\color{gray}{90}} & 65, & 29, & 58, & 15, & 30, & 60, & 19, & 38, & 76, & 51\hphantom{,} \\ \end{array} $$

Like !!\ell!! and !!T_2!!, this is just a list of !!100!! numbers in order.

As the notation suggests, !!\ell^{-1}!! and !!\ell!! are inverses. We already saw that the first table had !!\ell(81)=\color{darkred}{76}!! and !!\ell(1) = 0!!. Going in the opposite direction, we see from the second table that !!\ell^{-1}(76)= \color{darkred}{81}!! (again in red) and !!\ell^{-1}(0)=1!!. The elements of !!\ell!! tell you where to find numbers in the !!\ell^{-1}!! table. Where is !!17!! in the second table? Look at the !!17!!th element in the first table. !!\ell(17) = 30!!, so !!17!! is at position !!30!! in the second table.

Before we go too deeply into how these were constructed, let's try the !!7×13!! example we did before. The algorithm is just !!\color{purple}{\clubsuit}!!:

$$ \begin{align} % \ell^{-1}(\ell(a) + \ell(b)) & = ab\tag{$\color{purple}{\clubsuit}$} \\ 7·13 &= \ell^{-1}(\ell(7) + \ell(13)) \\ &= \ell^{-1}(\color{darkgreen}{9} + \color{darkgreen}{66}) \\ &= \ell^{-1}(75) \\ &= \color{darkgreen}{91} \end{align} $$

(The relevant numbers are picked out in green in the two tables.)

As promised, with three table lookups and a single integer addition.

What if the sum in the middle exceeds !!99!!? No problem, the !!\ell^{-1}!! table wraps around, so that element !!100!! is the same as element !!0!!:

$$ \begin{align} % \ell^{-1}(\ell(a) + \ell(b)) & = ab\tag{$\color{purple}{\clubsuit}$} \\ 3·12 &= \ell^{-1}(\ell(3) + \ell(12)) \\ &= \ell^{-1}(\color{darkblue}{69} + \color{darkblue}{71}) \\ &= \ell^{-1}(140) \\ &= \ell^{-1}(40) &\text{(wrap around)}\\ &= \color{darkblue}{36} \end{align} $$

How about that.

(This time the relevant numbers are picked out in blue.)

I said this only computes !!ab!! when the product is at most !!100!!. That is not quite true. If you are willing to ignore a small detail, this algorithm will multiply any two numbers. The small detail is that the multiplication will be done mod !!101!!. That is, instead of the exact answer, you get one that differs from it by a multiple of !!101!!. Let's do an example to see what I mean when I say it works even for products bigger than !!100!!:

$$ \begin{align} % \ell^{-1}(\ell(a) + \ell(b)) & = ab\tag{$\color{purple}{\clubsuit}$} \\ 16·26 &= \ell^{-1}(\ell(16) + \ell(26)) \\ &= \ell^{-1}(4 + 67) \\ &= \ell^{-1}(71) \\ &= 12 \end{align} $$

This tells us that !!16·26 = 12!!. The correct answer is actually !!16·26 = 416!!, and indeed !!416-12 = 404!! which is a multiple of !!101!!. The reason this happens is that the elements of the second table, !!\ell^{-1}!!, are not true integers, they are mod !!101!! integers.

Okay, so what is the secret here? Why does this work? It should jump out at you that it is often the case that an entry in the !!\ell^{-1}!! table is twice the previous entry:

$$\ell^{-1}(1+n) = 2\cdot \ell^{-1}(n)$$

In fact, this is true everywhere, if you remember that the numbers are not ordinary integers but mod !!101!! integers. For example, the number that follows !!64!!, in place of !!64·2=128!!, is !!27!!. But !!27\equiv 128\pmod{101}!! because they differ by a multiple of !!101!!. From a mod !!101!! point of view, it doesn't matter whether we put !!27!! or !!128!! after !!64!!, as they are the same thing.

Those two facts are the whole secret of the !!\ell^{-1}!! table:

- Each element is twice the one before, but

- The elements are not quite ordinary numbers, but mod !!101!! numbers where !!27=128=229=330=\ldots!!.
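Those two facts are enough to rebuild the whole table. Here is a small Python sketch that does it; the name `doubling_table` and its parameters are just for illustration.

```python
def doubling_table(size=100, modulus=101):
    """Build the table by repeated doubling, reducing mod `modulus` at each step."""
    table = [1]                                  # entry 0 is 1
    while len(table) < size:
        table.append(table[-1] * 2 % modulus)    # twice the previous entry, mod 101
    return table

table = doubling_table()
print(table[:10])   # prints [1, 2, 4, 8, 16, 32, 64, 27, 54, 7]
```

The printed list matches the first row of the !!\ell^{-1}!! table, including the !!27!! that follows !!64!!.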

Certainly !!\ell^{-1}(0) = 2^0 = 1!!. And every entry in the !!\ell^{-1}!! table is twice the previous one, if you are thinking in mod !!101!!. The two secrets are actually one secret:

$$\ell^{-1}(n) = 2^n\pmod{101}.$$

This is why the multiplication algorithm works. Say we want to multiply !!7!! and !!13!! again. We look up !!7!! and !!13!! in !!\ell!!, and find !!\ell(7)=9!! and !!\ell(13)=66!!. What this is really telling us is that

$$ \begin{align} 7 & = 2^{9\hphantom6} \pmod{101} \\ 13 & = 2^{66} \pmod{101} \\ \end{align} $$

so that multiplying !!7\cdot13!! mod !!101!! is the same as multiplying $$2^9\cdot 2^{66}.$$

But multiplying exact powers of !!2!! is easy, since you just add the exponents: !!2^9\cdot2^{66} = 2^{9+66} = 2^{75}!!, whether you are doing it in regular numbers or mod !!101!! numbers. And the !!\ell^{-1}!! table tells us directly that !!2^{75} = 91\pmod{101}!!.
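Putting the pieces together, the whole algorithm fits in a few lines. This is a sketch, not anything Ludgate built: the names `ELL_INV`, `ELL` and `multiply` are my own, and I am assuming both inputs are between !!1!! and !!100!!.

```python
# ELL_INV[n] = 2^n mod 101, for n = 0..99 (the second table)
ELL_INV = [pow(2, n, 101) for n in range(100)]

# ELL[x] = the position of x in ELL_INV, for x = 1..100 (the first table)
ELL = {x: n for n, x in enumerate(ELL_INV)}

def multiply(a, b):
    """Multiply a and b mod 101 using three table lookups and one addition."""
    total = ELL[a] + ELL[b]       # two lookups and the single addition
    return ELL_INV[total % 100]   # third lookup; the table wraps around at 100

print(multiply(7, 13))    # prints 91
print(multiply(3, 12))    # prints 36 (the sum 140 wraps around to 40)
print(multiply(16, 26))   # prints 12, which is 416 mod 101
```

The `% 100` is the wraparound from the !!3·12!! example: exponents of !!2!! repeat with period !!100!!, even though the numbers themselves are mod !!101!!.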

The !!\ell!! function, which is analogous to the regular logarithm, is called a discrete logarithm.

What's going on with Percy Ludgate's algorithm? It's a sort of compressed, limited version of the discrete logarithm.

I had a hope that maybe we could reimplement Ludgate's thing by basing it more directly on discrete logarithms. Say we had the !!\ell^{-1}!! table encoded in a wheel of some sort, with the !!100!! entries in order around the rim. There's a “current position” !!p!!, initially !!0!!, and a “current number” !!2^p!!, initially !!1!!.

On the same axle as the wheel, mount a gear with exactly !!100!! teeth. We can easily turn the wheel exactly !!q!! positions by taking a straight bar with !!q!! teeth and using it to turn the gear, which turns the wheel. We can easily multiply the current number by !!2!! just by turning the wheel one position clockwise.

Multiplying by !!7!! isn't too hard, just turn the wheel !!9!! positions clockwise. We can do this by constructing a short bar with exactly !!9!! teeth and using it to turn the gear. Or maybe we have a meshing gear with !!9!! teeth, on another axle, which we give one full turn. Either way, if the current number was !!5!! before, it's !!35!! after.

Multiplying by !!3!! is rather more of a pain, because we have to turn the wheel !!69!! positions, so we need a bar or a meshing gear with 69 teeth.

(We could get away with one with only !!31!! teeth, if we could turn the wheel the other way, but that seems like it might be more complicated. Hmm, I suppose it would work to use a meshing gear with 31 teeth that engages a second gear (with any number of teeth) that engages the main gear.)
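To make the wheel idea concrete, here is a toy simulation. The `Wheel` class is purely hypothetical, and I am taking "clockwise" to mean a positive number of teeth and "counterclockwise" a negative one.

```python
class Wheel:
    """A wheel with the powers of `base` mod `modulus` written around the rim."""

    def __init__(self, base=2, modulus=101):
        self.rim = [pow(base, n, modulus) for n in range(modulus - 1)]
        self.position = 0             # current position p, initially 0

    @property
    def current(self):
        """The current number, which is base^p mod modulus."""
        return self.rim[self.position]

    def turn(self, teeth):
        """Turn by `teeth` positions (negative means counterclockwise)."""
        self.position = (self.position + teeth) % len(self.rim)
        return self.current

w = Wheel()
w.turn(24)            # the current number is now 5
print(w.turn(9))      # multiply by 7: prints 35
print(Wheel().turn(69), Wheel().turn(-31))   # prints 3 3
```

The last line shows that !!69!! positions one way and !!31!! positions the other way land on the same spot.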

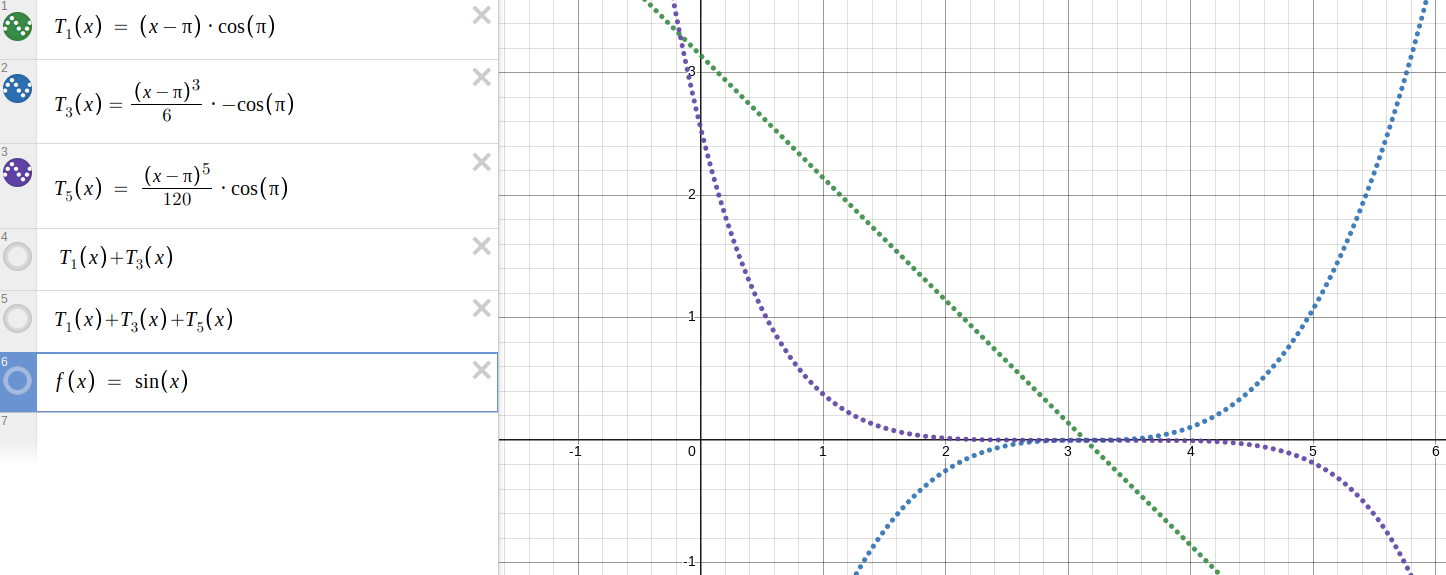

Anyway I took a look to see if there were any better tables to use, and the answer is: maybe! If, instead of a table of !!2^n!!, we use a table of !!26^n!!, then the brass wheel approach performs a little better:

$$ \begin{array}{rrrrrrrrrrr} {\tiny\color{gray}{0}} & 1, & 26, & 70, & 2, & 52, & 39, & 4, & 3, & 78, & 8, \\ {\tiny\color{gray}{10}}& 6, & 55, & 16, & 12, & 9, & 32, & 24, & 18, & 64, & 48, \\ {\tiny\color{gray}{20}}& 36, & 27, & 96, & 72, & 54, & 91, & 43, & 7, & 81, & 86, \\ {\tiny\color{gray}{30}}& 14, & 61, & 71, & 28, & 21, & 41, & 56, & 42, & 82, & 11, \\ {\tiny\color{gray}{40}}& 84, & 63, & 22, & 67, & 25, & 44, & 33, & 50, & 88, & 66, \\ {\tiny\color{gray}{50}}& 100, & 75, & 31, & 99, & 49, & 62, & 97, & 98, & 23, & 93, \\ {\tiny\color{gray}{60}}& 95, & 46, & 85, & 89, & 92, & 69, & 77, & 83, & 37, & 53, \\ {\tiny\color{gray}{70}}& 65, & 74, & 5, & 29, & 47, & 10, & 58, & 94, & 20, & 15, \\ {\tiny\color{gray}{80}}& 87, & 40, & 30, & 73, & 80, & 60, & 45, & 59, & 19, & 90, \\ {\tiny\color{gray}{90}}& 17, & 38, & 79, & 34, & 76, & 57, & 68, & 51, & 13, & 35\hphantom{,} \\ \end{array} $$

Multiplying by !!2!! is no longer as simple as turning the wheel one notch clockwise; you have to turn it !!3!! positions clockwise. But that seems pretty easy. Multiplying by !!3!! is also rather easy: just turn the wheel !!7!! positions. If the table above is !!T_2!!, then the analogue of Ludgate's !!T_1!! table is:

$$ \begin{array}{cccccccccc} \tiny\color{gray}{1} & \tiny\color{gray}{2} & \tiny\color{gray}{3} & \tiny\color{gray}{4} & \tiny\color{gray}{5} & \tiny\color{gray}{6} & \tiny\color{gray}{7} & \tiny\color{gray}{8} & \tiny\color{gray}{9} \\ 0 & 3 & 7 & 6 & 72 & 10 & 27 & 9 & 14 \\ \end{array} $$

That is, if you want to compute !!3·6!!, you start with the wheel in position !!0!!, then turn it by !!T_1(3) = 7!! positions, then by !!T_1(6) = 10!!, and now it's at position !!17!!, where the current number is !!18!!.

The numbers in the !!T_1!! table are all pretty small, except that to multiply by !!5!! you have to turn by !!72!! positions, which is kinda awful. Still it's only a little worse than in the powers-of-2 version where to multiply by !!3!! you would have to turn the wheel by !!69!! positions. And overall the powers-of-26 table is better: the sum of the !!9!! entries is only !!148!!, which is optimal; the corresponding sum of the entries for the powers-of-2 table is !!216!!.
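If you want to check those sums, or hunt for other bases, a brute-force search is short. This is only a sketch of one way to do it; `turning_cost` is a name I made up, and "cost" here means the sum of the !!T_1!! entries for the digits !!1!! through !!9!!.

```python
def turning_cost(base, modulus=101):
    """Sum of the wheel positions of 1..9 in the powers-of-`base` table.

    Returns None if the powers of `base` don't hit every number from
    1 to modulus-1, since then the table can't be used for multiplication.
    """
    position = {}
    x = 1
    for n in range(modulus - 1):
        position.setdefault(x, n)     # remember the first place x shows up
        x = x * base % modulus
    if len(position) != modulus - 1:
        return None
    return sum(position[d] for d in range(1, 10))

# Collect the cost of every usable base.
costs = {}
for g in range(2, 101):
    c = turning_cost(g)
    if c is not None:
        costs[g] = c

print(turning_cost(2), turning_cost(26))   # prints 216 148
print(min(costs.values()))                 # the best sum over all usable bases
```

Only !!40!! of the candidate bases are usable at all, so the search is tiny.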

Who knows, it might work, and even if it didn't work well it might be pretty cool.

[Other articles in category /math] permanent link

Mon, 02 Oct 2023