Mark Dominus (陶敏修)

mjd@pobox.com


Thu, 30 Nov 2017

Another public service announcement about Git.

There are a number of commands everyone learns when they first start out using Git. And there are some that almost nobody learns right away, but that should be the first thing you learn once you get comfortable using Git day to day.

One of these has the uninteresting-sounding name git-rev-parse. Git has a bewildering variety of notations for referring to commits and other objects. If you type something like origin/master~3, which commit is that? git-rev-parse is your window into Git's understanding of names:

% git rev-parse origin/master~3

37f2bc78b3041541bb4021d2326c5fe35cbb5fbb

A pretty frequent question is: How do I find out the commit ID of the current HEAD? And the answer is:

% git rev-parse HEAD

2536fdd82332846953128e6e785fbe7f717e117a

or if you want it abbreviated:

% git rev-parse --short HEAD

2536fdd

But more important than the command itself is the manual for the command. Whether or not you expect to use this command, you should read its manual. Because every command uses Git's bewildering variety of notations, and that manual is where the notations are completely documented.

When you use a ref name like master, Git finds it in .git/refs/heads/master, but when you use origin/master, Git finds it in .git/refs/remotes/origin/master, and when you use HEAD Git finds it in .git/HEAD. Why the difference? The git-rev-parse manual explains what Git is doing here.
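The lookup can be modeled in a few lines of Python. This is only a sketch, not Git's code: the names `candidate_paths` and `resolve` are made up for illustration, the search order is the one the manual lists for a bare `<refname>`, and packed refs and everything else a real repository does are ignored.

```python
# Simplified model of how Git disambiguates a bare ref name.
# The order follows the <refname> rules in the git-rev-parse manual;
# candidate_paths and resolve are hypothetical helper names.

def candidate_paths(name):
    """Return the places under .git/ where Git looks for `name`, in order."""
    return [
        name,                          # .git/HEAD, .git/FETCH_HEAD, ...
        f"refs/{name}",
        f"refs/tags/{name}",
        f"refs/heads/{name}",
        f"refs/remotes/{name}",
        f"refs/remotes/{name}/HEAD",
    ]

def resolve(name, existing):
    """Return the first candidate path that is in `existing`, or None."""
    for path in candidate_paths(name):
        if path in existing:
            return path
    return None

# A pretend repository containing just these three refs.
existing = {"HEAD", "refs/heads/master", "refs/remotes/origin/master"}
print(resolve("master", existing))         # refs/heads/master
print(resolve("origin/master", existing))  # refs/remotes/origin/master
print(resolve("HEAD", existing))           # HEAD
```

The same name can match in more than one place (a tag and a branch both called master, say), and the fixed search order is what decides which one wins.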

Did you know that if you have an annoyingly long branch name like origin/martin/f42876-change-tracking you can create a short alias for it by sticking

ref: refs/remotes/origin/martin/f42876-change-tracking

into .git/CT, and from then on you can do git log CT or git rebase --onto CT or whatever?

Did you know that you can write topic@{yesterday} to mean “whatever commit topic was pointing to yesterday”?

Did you know that you can write ':/penguin system' to refer to the most recent commit whose commit message mentions the penguin system, and that 'HEAD^{/penguin system}' means the most recent such commit on the HEAD branch?

Did you know that there's a powerful sublanguage for ranges that you can give to git-log to specify all sorts of useful things about which commits you want to look at?

Once I got comfortable with Git I got in the habit of rereading the git-rev-parse manual every few months, because each time I would notice some new useful tool.

Check it out. It's an important next step.

[ Previous PSAs:

- Two things (beginners ought to know) about Git

- Git remote branches and Git's missing terminology

- Git's rejected push error

]

[Other articles in category /prog] permanent link

Mon, 27 Nov 2017

National Coming Out Day began in the U.S. in 1988, and within a couple of years I had started to observe it. A queer person, to observe the event, should make an effort, each October 11, to take the next step of coming out of the closet and being more visible, whatever that “next step” happens to be for them.

For some time I had been wearing a little pin that said BISEXUAL QUEER. It may be a bit hard for younger readers of my blog to understand that in 1990 this was unusual, eccentric, and outré, even in the extremely permissive and liberal environment of the University of Pennsylvania. People took notice of it and asked about it; many people said nothing but were visibly startled.

On October 11 of 1991, in one of the few overtly political acts of my life, I posted a carefully-composed manifesto to the department-wide electronic bulletin board, explaining that I was queer, what that meant for me, and why I thought Coming Out Day was important. Some people told me they thought this was brave and admirable, and others told me they thought it was inappropriate.

As I explained in my essay:

It seemed to me that if lots of queer people came out, that would show everyone that there is no reason to fear queers, and that it is not hard at all to live in a world full of queer people — you have been doing it all your life, and it was so easy you didn't even notice! As more and more queers come out of the closet, queerness will become more and more ordinary and commonplace, and people do not have irrational fear of the ordinary and commonplace.

I'm not sure what I would have said if you had asked me in 1991 whether I thought this extravagant fantasy would actually happen. I was much younger and more naïve than I am now and it's possible that I believed that it was certain to happen. Or perhaps I would have been less optimistic and replied with some variant on “maybe, I hope so”, or “probably not but there are other reasons to do it”. But I am sure that if you had asked me when I thought it would happen I would have guessed it would be a very long time, and that I might not live to see it.

Here we are twenty-five years later and to my amazement, this worked.

Holy cow, it worked just like we hoped! Whether I believed it or not at the time, it happened just as I said! This wild fantasy, this cotton-candy dream, had the result we intended. We did it! And it did not take fifty or one hundred years, I did live to see it. I have kids and that is the world they are growing up in. Many things have gotten worse, but not this thing.

It has not yet worked everywhere. But it will. We will keep chipping away at the resistance, one person at a time. It worked before and it will continue to work. There will be setbacks, but we are an unstoppable tide.

In 1991, posting a public essay was considered peculiar or inappropriate. In 2017, it would be eccentric because it would be unnecessary. It would be like posting a long manifesto about how you were going to stop wearing white shirts and start wearing blue ones. Why would anyone make a big deal of something so ordinary?

In 1991 I had queer co-workers whose queerness was an open secret, not generally known. Those people did not talk about their partners in front of strangers, and I was careful to keep them anonymous when I mentioned them. I had written:

This note is also to try to make other queers more comfortable here: Hi! You are not alone! I am here with you!

This had the effect I hoped, at least in some cases; some of those people came to me privately to thank me for my announcement.

At a different job in 1995 my boss had a same-sex partner that he did not mention. I had guessed that this was the case because all the people with opposite-sex partners did mention them. You could figure out who was queer by keeping a checklist in your mind of who had mentioned their opposite-sex partners, dates, or attractions, and then anyone you had not checked off after six months was very likely queer. (Yes, as a bisexual I am keenly aware that this does not always work.) This man and I both lived in Philadelphia, and one time we happened to get off the train together and his spouse was there to meet him. For a moment I saw a terrible apprehension in the face of this confident and self-possessed man, as he realized he would have to introduce me to his husband: How would I respond? What would I say?

In 2017, these people keep pictures on their desks and bring their partners to company picnics. If I met my boss’ husband he would introduce me without apprehension because if I had a problem with it, it would be my problem. In 2017, my doctor has pictures of her wedding and her wife posted on the Internet for anyone in the world to see, not just her friends or co-workers. Around here, at least, Coming Out Day has turned into an obsolete relic because being queer has turned into a big fat nothing.

And it will happen elsewhere also, it will continue to spread. Because if there was reason for optimism in 1991, how much more so now that visible queer people are not a rare minority but a ubiquitous plurality, now that every person encounters some of us every day, we know that this unlikely and even childish plan not only works, but can succeed faster and better than we even hoped?

HA HA HA TAKE THAT, LOSERS.

[Other articles in category /politics] permanent link

Fri, 24 Nov 2017

Over the years many people have written to me to tell me they liked my blog but that I should update it more often. Now those people can see if they were correct. I suspect they will agree that they weren't.

I find that, especially since I quit posting to Twitter, there is a lot of random crap that I share with my co-workers, friends, family, and random strangers that they might rather do without. I needed a central dumping ground for this stuff. I am not going to pollute The Universe of Discourse with this material so I started a new blog, called Content-Type: text/shitpost. The title was inspired by a tweet of Reid McKenzie that suggested that there should be a text/shitpost content type. I instantly wanted to do more with the idea.

WARNING: Shitposts may be pointless, incomplete, poorly considered, poorly researched, offensive, vague, irritating, or otherwise shitty. The label is on the box. If you find yourself wanting to complain about the poor quality of a page you found on a site called shitpost.plover.com, maybe pause for a moment and consider what your life has come to.

I do not recommend that you check it out.

[Other articles in category /meta] permanent link

Wed, 22 Nov 2017

Mathematical pettifoggery and pathological examples

Mathematicians do tend to be the kind of people who quibble and pettifog over the tiniest details. This is because in mathematics, quibbling and pettifogging does work.

This example is technical, but I think I can explain it in a way that will make sense even for people who have no idea what the question is about. Don't worry if you don't understand the next paragraph.

In this math SE question, a user asks for an example of a connected topological space !!\langle X, \tau\rangle!! where there is a strictly finer topology !!\tau'!! for which !!\langle X, \tau'\rangle!! is disconnected. This is a very easy problem if you go about it the right way, and the right way follows a very typical pattern which is useful in many situations.

The pattern is “TURN IT UP TO 11!!” In this case:

- Being disconnected means you can find some things with a certain property.

- If !!\tau'!! is finer than !!\tau!!, that means it has all the same things, plus even more things of the same type.

- If you could find those things in !!\tau!!, you can still find them in !!\tau'!! because !!\tau'!! has everything that !!\tau!! does.

- So although perhaps making !!\tau!! finer could turn a connected space into a disconnected one, by adding the things you needed, it definitely can't turn a disconnected space into a connected one, because the things will still be there.

So a way to look for a connected space that becomes disconnected when !!\tau!! becomes finer is:

Start with some connected space. Then make !!\tau!! as fine as you possibly can and see if that is enough.

If that works, you win. If not, you can look at the reason it didn't work, and maybe either fix up the space you started with, or else use that as the starting point in a proof that the thing you're looking for doesn't exist.

I emphasized the important point here. It is: Moving toward finer !!\tau!! can't hurt the situation and might help, so the first thing to try is to turn the fineness knob all the way up and see if that is enough to get what you want. Many situations in mathematics call for subtlety and delicate reasoning, but this is not one of those.

The technique here works perfectly. There is a topology !!\tau_d!! of maximum possible fineness, called the “discrete” topology, so that is the thing to try first. And indeed it answers the question as well as it can be answered: If !!\langle X, \tau\rangle!! is a connected space, and if there is any refinement !!\tau'!! for which !!\langle X, \tau'\rangle!! is disconnected, then !!\langle X, \tau_d\rangle!! will be disconnected. It doesn't even matter what connected space you start with, because !!\tau_d!! is always a refinement of !!\tau!!, and because !!\langle X, \tau_d\rangle!! is always disconnected, except in trivial cases. (When !!X!! has fewer than two points.)
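For finite spaces the whole argument can be checked by brute force. The following is a minimal sketch, assuming a topology is represented as a set of frozensets; the names `powerset` and `is_connected` are mine, and the code trusts that what it is given really is a topology.

```python
from itertools import combinations

def powerset(points):
    """All subsets of `points`, as frozensets: the discrete topology."""
    pts = list(points)
    return {frozenset(c)
            for r in range(len(pts) + 1)
            for c in combinations(pts, r)}

def is_connected(points, topology):
    """True unless some nonempty proper open set has an open complement."""
    whole = frozenset(points)
    for u in topology:
        if u and u != whole and (whole - u) in topology:
            return False
    return True

X = {0, 1, 2}
indiscrete = {frozenset(), frozenset(X)}   # fineness knob all the way down
print(is_connected(X, indiscrete))         # True
print(is_connected(X, powerset(X)))        # False
print(is_connected({0}, powerset({0})))    # True
```

The last line is the trivial case: with only one point there is no nonempty proper subset to split off, so even the discrete topology is connected.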

Right after you learn the definition of what a topology is, you are presented with a bunch of examples. Some are typical examples, which showcase what the idea is really about: the “open sets” of the real line topologize the line, so that topology can be used as a tool for studying real analysis. But some are atypical examples, which showcase the extreme limits of the concept that are as different as possible from the typical examples. The discrete space is one of these. What's it for? It doesn't help with understanding the real numbers, that's for sure. It's a tool, it's the knob on the topology machine that turns the fineness all the way up.[1] If you want to prove that the machine does something or other for the real numbers, one way is to show that it always does that thing. And sometimes part of showing that it always does that thing is to show that it does that even if you turn the knob all the way to the right.

So often the first thing a mathematician will try is:

What happens if I turn the knob all the way to the right? If that doesn't blow up the machine, nothing will!

And that's why, when you ask a mathematician a question, often the first thing they will say is “that fails when !!x=0!!” or “that fails when all the numbers are equal” or “that fails when one number is very much bigger than the other” or “that fails when the space is discrete” or “that fails when the space has fewer than two points.” [2]

After the last article, Kyle Littler reminded me that I should not forget the important word “pathological”. One of the important parts of mathematical science is figuring out what the knobs are, how far they can go, what happens if you turn them all the way up, and what are the limits on how they can be set if we want the machine to behave more or less like the thing we are trying to study.

We have this certain knob for how many dents and bumps and spikes we can put on a sphere and have it still be a sphere, as long as we do not actually puncture or tear the surface. And we expected that no matter how far we turned this knob, the sphere would still divide space into two parts, a bounded inside and an unbounded outside, and that these regions should behave basically the same as they do when the sphere is smooth.[3]

But no, we are wrong, the knob goes farther than we thought. If we turn it to the “Alexander horned sphere” setting, smoke starts to come out of the machine and the red lights begin to blink.[4] Useful! Now if someone has some theory about how the machine will behave nicely if this and that knob are set properly, we might be able to add the useful observation “actually you also have to be careful not to turn that “dents bumps and spikes” knob too far.”

The word for these bizarre settings where some of the knobs are in the extreme positions is “pathological”. The Alexander sphere is a pathological embedding of !!S^2!! into !!\Bbb R^3!!.

[1] The leftmost setting on that knob, with the fineness turned all the way down, is called the “indiscrete topology” or the “trivial topology”.

[2] If you claim that any connected space can be disconnected by turning the “fineness” knob all the way to the right, a mathematician will immediately turn the “number of points” knob all the way to the left, and say “see, that only works for spaces with at least two points”. In a space with fewer than two points, even the discrete topology is connected.

[3] For example, if you tie your dog to a post outside the sphere, and let it wander around, its leash cannot get so tangled up with the sphere that you need to walk the dog backwards to untangle it. You can just slip the leash off the sphere.

[4] The dog can get its leash so tangled around the Alexander sphere that the only way to fix it is to untie the dog and start over. But if the “number of dimensions” knob is set to 2 instead of to 3, you can turn the “dents bumps and spikes” knob as far as you want and the leash can always be untangled without untying or moving the dog. Isn't that interesting? That is called the Jordan curve theorem.

[Other articles in category /math] permanent link

An instructive example of expected value

I think this example is very illuminating of something, although I'm not sure yet what.

Suppose you are making a short journey somewhere. You leave two minutes later than planned. How does this affect your expected arrival time? All other things being equal, you should expect to arrive two minutes later than planned. If you're walking or driving, it will probably be pretty close to two minutes no matter what happens.

Now suppose the major part of your journey involves a train that runs every hour, and you don't know just what the schedule is. Now how does your two minutes late departure affect your expected arrival time?

The expected arrival time is still two minutes later than planned. But it is not uniformly distributed. With probability !!\frac{58}{60}!!, you catch the train you planned to take. You are unaffected by your late departure, and arrive at the same time. But with probability !!\frac{2}{60}!! you miss that train and have to take the next one, arriving an hour later than you planned. The expected amount of lateness is

$$0 \text{ minutes}\cdot\frac{58}{60} + 60 \text{ minutes}\cdot\frac{2}{60} = 2 \text{ minutes}$$

the same as before.
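The same calculation works for any delay and any train frequency. Here is a small sketch using exact fractions; the function name `expected_lateness` is mine, and it assumes, as the example does, that the train's departure is uniformly distributed relative to when you reach the station and that the delay is no longer than the interval between trains.

```python
from fractions import Fraction

def expected_lateness(delay, period):
    """Expected extra minutes from leaving `delay` late for a train every `period` minutes.

    Assumes the departure time is uniformly distributed relative to your
    arrival at the station, and that 0 <= delay <= period.
    """
    p_miss = Fraction(delay, period)          # chance the train leaves in the gap
    return 0 * (1 - p_miss) + period * p_miss

print(expected_lateness(2, 60))   # 2
print(expected_lateness(2, 15))   # 2
```

The period cancels: a less frequent train makes missing it more costly but exactly as much less likely.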

[ Addendum: Richard Soderberg points out that one thing illuminated by this example is that the mathematics fails to capture the emotional pain of missing the train. Going in a slightly different direction, I would add that the expected value reduces a complex situation to a single number, and so must necessarily throw out a lot of important information. I discussed this here a while back in connection with lottery tickets.

But I think this failure of the expected value is also a benefit: it does capture something interesting about the situation that might not have been apparent before: Considering the two minutes as a time investment, there is a sense in which the cost is knowable; it costs exactly two minutes. Yes, there is a chance that you will be hit by a truck that you would not have encountered had you left on time. But this is exactly offset by the hypothetical truck that passed by harmlessly two minutes before you arrived on the scene but which would have hit you had you left on time. ]

[Other articles in category /math] permanent link

Mon, 20 Nov 2017

Mathematical jargon for quibbling

Mathematicians tend not to be the kind of people who shout and pound their fists on the table. This is because in mathematics, shouting and pounding your fist does not work. If you do this, other mathematicians will just laugh at you. Contrast this with law or politics, which do attract the kind of people who shout and pound their fists on the table.

However, mathematicians do tend to be the kind of people who quibble and pettifog over the tiniest details. This is because in mathematics, quibbling and pettifogging does work.

Mathematics has a whole subjargon for quibbling and pettifogging, and also for excluding certain kinds of quibbles. The word “nontrivial” is preeminent here. To a first approximation, it means “shut up and stop quibbling”. For example, you will often hear mathematicians having conversations like this one:

A: Mihăilescu proved that the only solution of Catalan's equation !!a^x - b^y = 1!! is !!3^2 - 2^3!!.

B: What about when !!a!! and !!b!! are consecutive and !!x=y=1!!?

A: The only nontrivial solution.

B: Okay.

Notice that A does not explain what “nontrivial” is supposed to mean here, and B does not ask. And if you were to ask either of them, they might not be able to tell you right away what they meant. For example, if you were to inquire specifically about !!2^1 - 1^y!!, they would both agree that that is also excluded, whether or not that solution had occurred to either of them before. In this example, “nontrivial” really does mean “stop quibbling”. Or perhaps more precisely “there is actually something here of interest, and if you stop quibbling you will learn what it is”.
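One way to pin the quibbles down, and this is my reading rather than anything A or B said, is to require all four of !!a, b, x, y!! to be at least 2. Under that assumption a brute-force search over small perfect powers is easy to write; `catalan_solutions` is a made-up name, and of course a finite search illustrates the theorem without proving it.

```python
def catalan_solutions(limit):
    """Find all (a, x, b, y) with a**x - b**y == 1, each of a, x, b, y >= 2,
    and both powers at most `limit`."""
    powers = {}   # perfect power -> list of (base, exponent) pairs producing it
    base = 2
    while base * base <= limit:
        value, exp = base * base, 2
        while value <= limit:
            powers.setdefault(value, []).append((base, exp))
            value *= base
            exp += 1
        base += 1
    # A solution is a pair of perfect powers that differ by exactly 1.
    return [(a, x, b, y)
            for value in sorted(powers)
            if value - 1 in powers
            for (a, x) in powers[value]
            for (b, y) in powers[value - 1]]

print(catalan_solutions(10**6))   # [(3, 2, 2, 3)]
```

Relaxing the bounds to allow exponent 1 or base 1 brings back B's consecutive-number solutions and !!2^1 - 1^y!!, which is exactly what “nontrivial” was waving away.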

In some contexts, “nontrivial” does have a precise and technical meaning, and needs to be supplemented with other terms to cover other types of quibbles. For example, when talking about subgroups, “nontrivial” is supplemented with “proper”:

If a nontrivial group has no proper nontrivial subgroup, then it is a cyclic group of prime order.

Here the “proper nontrivial” part is not merely to head off quibbling; it's the crux of the theorem. But the first “nontrivial” is there to shut off a certain type of quibble arising from the fact that 1 is not considered a prime number. By this I mean if you omit “proper”, or the second “nontrivial”, the statement is still true, but inane:

If a nontrivial group has no subgroup, then it is a cyclic group of prime order.

(It is true, but vacuously so.) In contrast, if you omit the first “nontrivial”, the theorem is substantively unchanged:

If a group has no proper nontrivial subgroup, then it is a cyclic group of prime order.

This is still true, except in the case of the trivial group that is no longer excluded from the premise. But if 1 were considered prime, it would be true either way.

Looking at this issue more thoroughly would be interesting and might lead to some interesting conclusions about mathematical methodology.

- Can these terms be taxonomized?

- How do mathematical pejoratives relate? (“Abnormal, irregular, improper, degenerate, inadmissible, and otherwise undesirable”) Kelley says we use these terms to refer to “a problem we cannot handle”; that seems to be a different aspect of the whole story.

- Where do they fit in Lakatos’ Proofs and Refutations theory? Sometimes inserting “improper” just heads off a quibble. In other cases, it points the way toward an expansion of understanding, as with the “improper” polyhedra that violate Euler's theorem and motivate the introduction of the Euler characteristic.

- Compare with the large and finely-wrought jargon that distinguishes between proofs that are “elementary”, “easy”, “trivial”, “straightforward”, or “obvious”.

- Is there a category-theoretic formulation of what it means when we say “without loss of generality, take !!x\lt y!!”?

[ Addendum: Kyle Littler reminds me that I should not forget “pathological”. ]

[ Addendum 20240706: I forgot to mention that I wrote a followup article that discusses why this sort of quibbling is actually useful. ]

[Other articles in category /math] permanent link

Thu, 16 Nov 2017

[ Warning: This article is meandering and does not end anywhere in particular ]

My recent article about system software errors kinda blew up the Reddit / Hacker News space, and even got listed on Voat, which I understand is the Group W Bench where they send you if you aren't moral enough to be in Reddit. Many people on these fora were eager to tell war stories of times that they had found errors in the compiler or other infrastructural software.

This morning I remembered another example that had happened to me. In the middle 1990s, I was just testing some network program on one of the Sun Solaris machines that belonged to the Computational Linguistics program, when the entire machine locked up. I had to go into the machine room and power-cycle it to get it to come back up.

I returned to my desk to pick up where I had left off, and the machine locked up, again just as I ran my program. I rebooted the machine again, and putting two and two together I tried the next run on a different, less heavily-used machine, maybe my desk workstation or something.

The problem turned out to be a bug in that version of Solaris: if you bound a network socket to some address, and then tried to connect it to the same address, everything got stuck. I wrote a five-line demonstration program and we reported the bug to Sun. I don't know if it was fixed.

My boss had an odd immediate response to this, something along the lines that connecting a socket to itself is not a sanctioned use case, so the failure is excusable. Channeling Richard Stallman, I argued that no user-space system call should ever be able to crash the system, no matter what stupid thing it does. He at once agreed.

I felt I was on safe ground, because I had in mind the GNU GCC bug reporting instructions of the time, which contained the following unequivocal statement:

If the compiler gets a fatal signal, for any input whatever, that is a compiler bug. Reliable compilers never crash.

I love this paragraph. So clear, so pithy! And the second sentence! It could have been left off, but it is there to articulate the writer's moral stance. It is a rock-firm commitment in a wavering and uncertain world.

Stallman was a major influence on my writing for a long time. I first encountered his work in 1985, when I was browsing in a bookstore and happened to pick up a copy of Dr. Dobb's Journal. That issue contained the very first publication of the GNU Manifesto. I had never heard of Unix before, but I was bowled over by Stallman's vision, and I read the whole thing then and there, standing up.

(It hit the same spot in my heart as Albert Szent-Györgyi's The Crazy Ape, which made a similarly big impression on me at about the same time. I think programmers don't take moral concerns seriously enough, and this is one reason why so many of them find Stallman annoying. But this is what I think makes Stallman so important. Perhaps Dan Bernstein is a similar case.)

I have very vague memories of perhaps finding a bug in gcc, which is perhaps why I was familiar with that particular section of the gcc documentation. But more likely I just read it because I read a lot of stuff. Also Stallman was probably on my “read everything he writes” list.

Why was I trying to connect a socket to itself, anyway? Oh, it was a bug. I meant to connect it somewhere else and used the wrong variable or something. If the operating system crashes when you try, that is a bug. Reliable operating systems never crash.

[ Final note: I looked for my five-line program that connected a socket to itself, but I could not find it. But I found something better instead: an email I sent in April 1993 reporting a program that caused g++ version 2.3.3 to crash with an internal compiler error. And yes, my report does quote the same passage I quoted above. ]

[Other articles in category /prog] permanent link

Wed, 15 Nov 2017

[ Credit where it is due: This was entirely Darius Bacon's idea. ]

In connection with “Recognizing when two arithmetic expressions are essentially the same”, I had several conversations with people about ways to normalize numeric expressions. In that article I observed that while everyone knows the usual associative law for addition $$ (a + b) + c = a + (b + c)$$ nobody ever seems to mention the corresponding law for subtraction: $$ (a+b)-c = a + (b-c).$$

And while everyone “knows” that subtraction is not associative because $$(a - b) - c \ne a - (b-c)$$ nobody ever seems to observe that there is an associative law for subtraction: $$\begin{align} (a - b) + c & = a - (b - c) \\ (a -b) -c & = a-(b+c).\end{align}$$

This asymmetry is kind of a nuisance, and suggests that a more symmetric notation might be better. Darius Bacon suggested a simple change that I think is an improvement:

Write the negation of !!a!! as $$a\star$$ so that one has, for all !!a!!, $$a+a\star = a\star + a = 0.$$

The !!\star!! operation obeys the following elegant and simple laws: $$\begin{align} a\star\star & = a \\ (a+b)\star & = a\star + b\star \end{align} $$

Once we adopt !!\star!!, we get a huge payoff: We can eliminate subtraction:

Instead of !!a-b!! we now write !!a+b\star!!.

The negation of !!a+b\star!! is $$(a+b\star)\star = a\star + b{\star\star} = a\star +b.$$

We no longer have the annoying notational asymmetry between !!a-b!! and !!-b + a!! where the plus sign appears from nowhere. Instead, one is !!a+b\star!! and the other is !!b\star+a!!, which is obviously just the usual commutativity of addition.

The !!\star!! is of course nothing but a synonym for multiplication by !!-1!!. But it is a much less clumsy synonym. !!a\star!! means !!a\cdot(-1)!!, but with less inkjunk.

In conventional notation the parentheses in !!a(-b)!! are essential and if you lose them the whole thing is ruined. But because !!\star!! is just a special case of multiplication, it associates with multiplication and division, so we don't have to worry about parentheses in !!(a\star)b = a(b\star) = (ab)\star!!. They are all equal to just !!ab\star!!, and you can drop the parentheses or include them or write the terms in any order, just as you like, just as you would with !!abc!!.

The surprising associativity of subtraction is no longer surprising, because $$(a + b) - c = a + (b - c)$$ is now written as $$(a + b) + c\star = a + (b + c\star)$$ so it's just the usual associative law for addition; it is not even disguised. The same happens for the reverse associative laws for subtraction that nobody mentions; they become variations on $$ \begin{align} (a + b\star) + c\star & = a + (b\star + c\star) \\ & = a + (b+c)\star \end{align} $$ and such like.
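These identities are easy to spot-check mechanically. Here is a small sketch over a range of integers; `star` and `laws_hold` are names I made up, and passing the check is an illustration, not a proof.

```python
from itertools import product

def star(a):
    """Negation, written a-star in the notation above."""
    return -a

def laws_hold(values):
    """Check the star identities for every triple drawn from `values`."""
    for a, b, c in product(values, repeat=3):
        assert star(star(a)) == a
        assert star(a + b) == star(a) + star(b)
        # (a + b) - c = a + (b - c), rewritten with star
        assert (a + b) + star(c) == a + (b + star(c))
        # (a - b) - c = a - (b + c)
        assert (a + star(b)) + star(c) == a + star(b + c)
        # (a - b) + c = a - (b - c)
        assert (a + star(b)) + c == a + star(b + star(c))
    return True

print(laws_hold(range(-3, 4)))   # True
```

Every check uses only addition and `star`; subtraction appears nowhere except in the comments.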

The !!\star!! is faster to read and faster to say. Instead of “minus one” or “negative one” or “times negative one”, you just say “star”.

The !!\star!! is just a number, and it behaves like a number. Its role in an expression is the same as any other number's. It is just a special, one-off notation for a single, particularly important number.

Open questions:

Do we now need to replace the !!\pm!! sign? If so, what should we replace it with?

Maybe the idea is sound, but the !!\star!! itself is a bad choice. It is slow to write. It will inevitably be confused with the * that almost every programming language uses to denote multiplication.

The real problem here is that the !!-!! symbol is overloaded. Instead of changing the negation symbol to !!\star!! and eliminating the subtraction symbol, what if we just eliminated subtraction? None of the new notation would be incompatible with the old notation: !!-(a+-b) = b+-a!! still means exactly what it used to. But you are no longer allowed to abbreviate it to !!-(a-b) = b-a!!.

This would fix the problem of the !!\star!! taking too long to write: we would just use !!-!! in its place. It would also fix the concern of point 2: !!a\pm b!! now means !!a+b!! or !!a+-b!! which is not hard to remember or to understand. Another happy result: notations like !!-1!! and !!-2!! do not change at all.

Curious footnote: While I was writing up the draft of this article, it had a reminder in it: “How did you and Darius come up with this?” I went back to our email to look, and I discovered the answer was:

- Darius suggested the idea to me.

- I said, “Hey, that's a great idea!”

I wish I could take more credit, but there it is. Hmm, maybe I will take credit for inspiring Darius! That should be worth at least fifty percent, perhaps more.

[ This article had some perinatal problems. It escaped early from the laboratory, in a not-quite-finished state, so I apologize if you are seeing it twice. ]

[Other articles in category /math] permanent link

Sun, 12 Nov 2017

No, it is not a compiler error. It is never a compiler error.

When I used to hang out in the comp.lang.c Usenet group, back when there was a comp.lang.c Usenet group, people would show up fairly often with some program they had written that didn't work, and ask if their compiler had a bug. The compiler did not have a bug. The compiler never had a bug. The bug was always in the programmer's code and usually in their understanding of the language.

When I worked at the University of Pennsylvania, a grad student posted to one of the internal bulletin boards looking for help with a program that didn't work. Another graduate student, a super-annoying know-it-all, said confidently that it was certainly a compiler bug. It was not a compiler bug. It was caused by a misunderstanding of the way arguments to unprototyped functions were automatically promoted.

This is actually a subtle point, obscure and easily misunderstood. Most examples I have seen of people blaming the compiler are much sillier. I used to be on the mailing list for discussing the development of Perl 5, and people would show up from time to time to ask if Perl's if statement was broken. This is a little mind-boggling, that someone could think this. Perl was first released in 1987. (How time flies!) The if statement is not exactly an obscure or little-used feature. If there had been a bug in if it would have been discovered and fixed by 1988. Again, the bug was always in the programmer's code and usually in their understanding of the language.

Here's something I wrote in October 2000, which I think makes the case very clearly, this time concerning a claimed bug in the stat() function, another feature that first appeared in Perl 1.000:

On the one hand, there's a chance that the compiler has a broken stat and is subtracting 6 or something. Maybe that sounds likely to you but it sounds really weird to me. I cannot imagine how such a thing could possibly occur. Why 6? It all seems very unlikely.

Well, in the absence of an alternative hypothesis, we have to take what we can get. But in this case, there is an alternative hypothesis! The alternative hypothesis is that [this person's] program has a bug.

Now, which seems more likely to you?

- Weird, inexplicable compiler bug that nobody has ever seen before

or

- Programmer fucked up

Hmmm. Let me think.

I'll take Door #2, Monty.

Presumably I had to learn this myself at some point. A programmer can waste a lot of time looking for the bug in the compiler instead of looking for the bug in their program. I have a file of (obnoxious) Good Advice for Programmers that I wrote about twenty years ago, and one of these items is:

Looking for a compiler bug is the strategy of LAST resort. LAST resort.

Anyway, I will get to the point. As I mentioned a few months ago, I built a simple phone app that Toph and I can use to find solutions to “twenty-four puzzles”. In these puzzles, you are given four single-digit numbers and you have to combine them arithmetically to total 24. Pennsylvania license plates have four digits, so as we drive around we play the game with the license plate numbers we see. Sometimes we can't solve a puzzle, and then we wonder: is it because there is no solution, or because we just couldn't find one? Then we ask the phone app.

The other day we saw the puzzle «5 4 5 1», which is very easy, but I asked the phone app, to find out if there were any other solutions that we missed. And it announced “No solutions.” Which is wrong. So my program had a bug, as my programs often do.
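For reference, whether a twenty-four puzzle has any solution at all can be checked by brute force. The sketch below is not how the app works (the app uses a precomputed dictionary, as described next); canMake is a hypothetical helper, and it assumes the allowed operations are just the four arithmetic ones, with floating-point division and a small tolerance:

```javascript
// Hypothetical brute-force check, not the app's method.
// Repeatedly pick two of the remaining numbers, combine them with one
// arithmetic operation, and recurse on the shorter list.
function canMake(nums, target = 24) {
  if (nums.length === 1) return Math.abs(nums[0] - target) < 1e-9;
  for (let i = 0; i < nums.length; i++) {
    for (let j = 0; j < nums.length; j++) {
      if (i === j) continue;
      const a = nums[i];
      const b = nums[j];
      const rest = nums.filter((_, k) => k !== i && k !== j);
      // Ordered pairs (i, j) cover both a - b and b - a, a / b and b / a.
      const results = [a + b, a - b, a * b];
      if (Math.abs(b) > 1e-9) results.push(a / b);
      if (results.some((r) => canMake([...rest, r], target))) return true;
    }
  }
  return false;
}

console.log(canMake([5, 4, 5, 1])); // true, e.g. 5 × 4 + 5 − 1
console.log(canMake([1, 1, 1, 1])); // false
```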

The app has a pre-populated dictionary containing all possible solutions to all the puzzles that have solutions, which I generated ahead of time and embedded into the app. My first guess was that the bug had been in the process that generated this dictionary, and that it had somehow missed the solutions of «5 4 5 1». These would be indexed under the key 1455, which is the same puzzle, because each list of solutions is associated with the four input numbers in ascending order. Happily I still had the original file containing the dictionary data, but when I looked in it under 1455 I saw exactly the two solutions that I expected to see.

So then I looked into the app itself to see where the bug was. Code Studio's underlying language is Javascript, and Code Studio has a nice debugger. I ran the app under the debugger, and stopped in the relevant code, which was:

var x = [getNumber("a"), getNumber("b"), getNumber("c"), getNumber("d")].sort().join("");

This constructs a hash key (x) that is used to index into the canned dictionary of solutions. The getNumber() calls were retrieving the four numbers from the app's menus, and I verified that the four numbers were «5 4 5 1» as they ought to be. But what I saw next astounded me: x was not being set to 1455 as it should have been. It was set to 4155, which was not in the dictionary. And it was set to 4155 because the built-in sort() function was sorting the numbers into the wrong order.

For a while I could not believe my eyes. But after another fifteen or thirty minutes of tinkering, I sent off a bug report… no, I did not. I still didn't believe it. I asked the front-end programmers at my company what my mistake had been. Nobody had any suggestions.

Then I sent off a bug report that began:

I think that Array.prototype.sort() returned a wrongly-sorted result when passed a list of four numbers. This seems impossible, but …

I was about 70% expecting to get a reply back explaining what I had misunderstood about the behavior of Javascript's sort().

But to my astonishment, the reply came back only an hour later:

Wow! You're absolutely right. We'll investigate this right away.

In case you're curious, the bug was as follows: The sort() function was using a bubble sort. (This is of course a bad choice, and I think the maintainers plan to replace it.) The bubble sort makes several passes through the input, swapping items that are out of order. It keeps a count of the number of swaps in each pass, and if the number of swaps is zero, the array is already ordered and the sort can stop early and skip the remaining passes. The test for this was:

if (changes <= 1) break;

but it should have been:

if (changes == 0) break;

Ouch.
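To see how that one test produces 4155, here is a small reconstruction. It is a guess at a textbook bubble sort, not Code Studio's actual source: only the two loop-exit tests come from the ticket, and bubbleSort and stopTest are names invented for this sketch.

```javascript
// Hypothetical reconstruction of a bubble sort with an early-exit test.
// stopTest receives the number of swaps made in the pass just finished.
function bubbleSort(items, stopTest) {
  const a = items.slice(); // work on a copy
  for (let pass = 0; pass < a.length; pass++) {
    let changes = 0;
    for (let i = 0; i + 1 < a.length; i++) {
      if (a[i] > a[i + 1]) {
        [a[i], a[i + 1]] = [a[i + 1], a[i]];
        changes++;
      }
    }
    if (stopTest(changes)) break;
  }
  return a;
}

const buggy = bubbleSort([5, 4, 5, 1], (changes) => changes <= 1).join("");
const fixed = bubbleSort([5, 4, 5, 1], (changes) => changes === 0).join("");
console.log(buggy); // 4155
console.log(fixed); // 1455
```

With the wrong test, the first pass makes two swaps and leaves 4 5 1 5; the second pass makes exactly one swap, leaving 4 1 5 5, and the sort stops there even though 4 and 1 are still out of order.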

The Code Studio folks handled this very creditably, and did indeed fix it the same day. (The support system ticket is available for your perusal, as is the Github pull request with the fix, in case you are interested.)

I still can't quite believe it. I feel as though I have accidentally spotted the Loch Ness Monster, or Bigfoot, or something like that, a strange and legendary monster that until now I thought most likely didn't exist.

A bug in the sort() function. O day and night, but this is wondrous strange!

[ Addendum 20171113: Thanks to Reddit user spotter for pointing me to a related 2008 blog post of Jeff Atwood's, “The First Rule of Programming: It's Always Your Fault”. ]

[ Addendum 20171113: Yes, yes, I know sort() is in the library, not in the compiler. I am using “compiler error” as a synecdoche for “system software error”. ]

[ Addendum 20171116: I remembered examples of two other fundamental system software errors I have discovered, including one honest-to-goodness compiler bug. ]

[ Addendum 20200929: Russell O'Connor on a horrifying GCC bug ]

[Other articles in category /prog] permanent link

Sat, 11 Nov 2017

Randomized algorithms go fishing

A little while back I thought of a perfect metaphor for explaining what a randomized algorithm is. It's so perfect I'm sure it must have been thought of many times before, but it's new to me.

Suppose you have a lake, and you want to know if there are fish in the lake. You dig some worms, pick a spot, bait the hook, and wait. At the end of the day, if you have caught a fish, you have your answer: there are fish in the lake.[1]

But what if you don't catch a fish? Then you still don't know. Perhaps you used the wrong bait, or fished in the wrong spot. Perhaps you did everything right and the fish happened not to be biting that day. Or perhaps you did everything right except there are no fish in the lake.

But you can try again. Pick a different spot, try a different bait, and fish for another day. And if you catch a fish, you know the answer: the lake does contain fish. But if not, you can go fishing again tomorrow.

Suppose you go fishing every day for a month and you catch nothing. You still don't know why. But you have a pretty good idea: most likely, it is because there are no fish to catch. It could be that you have just been very unlucky, but that much bad luck is unlikely.

But perhaps you're not sure enough. You can keep fishing. If, after a year, you have not caught any fish, you can be almost certain that there were no fish in the lake at all. Because a year-long run of bad luck is extremely unlikely. But if you are still not convinced, you can keep on fishing. You will never be 100% certain, but if you keep at it long enough you can become 99.99999% certain with as many nines as you like.

That is a randomized algorithm, for finding out if there are fish in a lake! It might tell you definitively that there are, by producing a fish. Or it might fail, and then you still don't know. But as long as it keeps failing, the chance that there are any fish rapidly becomes very small, exponentially so, and can be made as small as you like.
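To put a number on “exponentially so”: suppose, purely as a modeling assumption, that the lake does contain fish and that each day of fishing independently catches one with probability at least !!p > 0!!. Then the chance of coming home empty-handed !!n!! days in a row is at most

$$ (1-p)^n $$

With !!p = \frac34!! this is !!\frac1{4^n} = \frac1{2^{2n}}!!, and with !!p = \frac12!! it is !!\frac1{2^n}!!, which are the figures that appear in the two examples below.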

For not-metaphorical examples, see:

The Miller-Rabin primality test: Given an odd number K, is K composite? If it is, the Miller-Rabin test will tell you so at least 75% of the time. If not, you can go fishing again the next day. After n trials, you are either !!100\%!! certain that K is composite, or !!100\%-\frac1{2^{2n}}!! certain that it is prime.

Freivalds’ algorithm: given three square matrices !!A!!, !!B!!, and !!C!!, is !!C!! the product !!A×B!!? Actually multiplying !!A×B!! could be slow. But if !!A×B!! is not equal to !!C!!, Freivalds’ algorithm will quickly tell you that it isn't, at least half the time. If not, you can go fishing again. After n trials, you are either !!100\%!! certain that !!C!! is not the correct product, or !!100\%-\frac1{2^n}!! certain that it is.
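Here is what one day of fishing looks like for the matrix example. This is a minimal sketch of Freivalds’ algorithm, assuming small integer matrices stored as arrays of rows (so the arithmetic is exact); the names matVec, caughtFish and probablyProduct, and the injectable rand parameter, are inventions for illustration.

```javascript
// Multiply matrix M (array of rows) by column vector v.
function matVec(M, v) {
  return M.map((row) => row.reduce((sum, x, j) => sum + x * v[j], 0));
}

// One day of fishing: pick a random 0/1 vector r and compare A(Br) with Cr.
// This costs three matrix-vector products instead of a matrix product.
// Returns true if a fish was caught, i.e. we have proof that A×B ≠ C.
function caughtFish(A, B, C, rand = Math.random) {
  const r = A.map(() => (rand() < 0.5 ? 0 : 1));
  const left = matVec(A, matVec(B, r));
  const right = matVec(C, r);
  return left.some((x, i) => x !== right[i]);
}

// Fish for n days. false means "certainly not the product";
// true means "no evidence against it after n independent tries".
function probablyProduct(A, B, C, n, rand = Math.random) {
  for (let day = 0; day < n; day++) {
    if (caughtFish(A, B, C, rand)) return false;
  }
  return true;
}

const A = [[1, 2], [3, 4]];
const B = [[5, 6], [7, 8]];
console.log(probablyProduct(A, B, [[19, 22], [43, 50]], 20)); // true
```

If C really is the product, no choice of r can ever catch a fish, so the answer true is then always correct; the only possible error is answering true for a wrong C after n unlucky days.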

[1] Let us ignore mathematicians’ pettifoggery about lakes that contain exactly one fish. This is just a metaphor. If you are really concerned, you can catch-and-release.

[Other articles in category /CS] permanent link

Tue, 07 Nov 2017

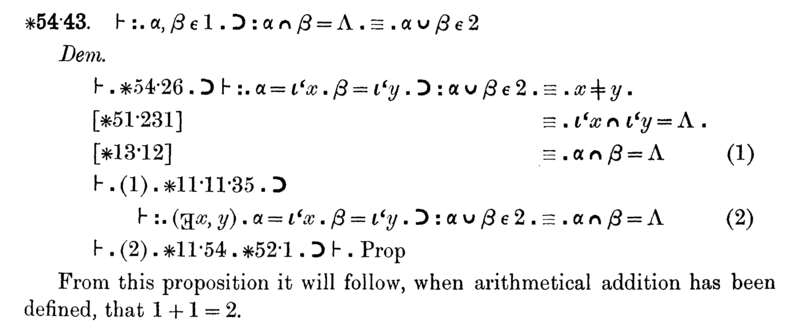

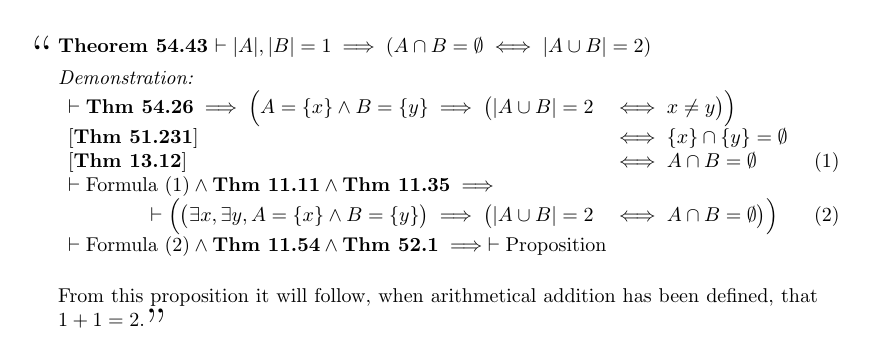

A modern translation of the 1+1=2 lemma

W. Ethan Duckworth of the Department of Mathematics and Statistics at Loyola University translated this into modern notation and has kindly given me permission to publish it here:

I think it is interesting and instructive to compare the two versions. One thing to notice is that there is no perfect translation. As when translating between two natural languages (German and English, say), the meaning cannot be preserved exactly. Whitehead and Russell's language is different from the modern language not only because the notation is different but because the underlying concepts are different. To really get what Principia Mathematica is saying you have to immerse yourself in the Principia Mathematica model of the world.

The best example of this here is the symbol “1”. In the modern translation, this means the number 1. But at this point in Principia Mathematica, the number 1 has not yet been defined, and to use it here would be circular, because proposition ∗54.43 is an important step on the way to defining it. In Principia Mathematica, the symbol “1” represents the class of all sets that contain exactly one element.[1] Following the definition of ∗52.01, in modern notation we would write something like:

$$1 \equiv_{\text{def}} \{x \mid \exists y . x = \{ y \} \}$$

But in many modern universes, that of ZF set theory in particular, there is no such object.[2] The situation in ZF is even worse: the purported definition is meaningless, because the comprehension is unrestricted.

The Principia Mathematica notation for !!|A|!!, the cardinality of set !!A!!, is !!Nc\,‘A!!, but again this is only an approximate translation. The meaning of !!Nc\,‘A!! is something close to

the unique class !!C!! such that !!x\in C!! if and only if there exists a one-to-one relation between !!A!! and !!x!!.

(So for example one might assert that !!Nc\,‘\Lambda = 0!!, and in fact this is precisely what proposition ∗101.1 does assert.) Even this doesn't quite capture the Principia Mathematica meaning, since the modern conception of a relation is that it is a special kind of set, but in Principia Mathematica relations and sets are different sorts of things. (We would also use a one-to-one function, but here there is no additional mismatch between the modern concept and the Principia Mathematica one.)

It is important, when reading old mathematics, to try to understand in modern terms what is being talked about. But it is also dangerous to forget that the ideas themselves are different, not just the language.[3] I extract a lot of value from switching back and forth between different historical views, and comparing them. Some of this value is purely historiological. But some is directly mathematical: looking at the same concepts from a different viewpoint sometimes illuminates aspects I didn't fully appreciate. And the different viewpoint I acquire is one that most other people won't have.

One of my current low-priority projects is reading W. Burnside's important 1897 book Theory of Groups of Finite Order. The value of this, for me, is not so much the group-theoretic content, but in seeing how ideas about groups have evolved. I hope to write more about this topic at some point.

[1] Actually the situation in Principia Mathematica is more complicated. There is a different class 1 defined at each type. But the point still stands.

[2] In ZF, if !!1!! were to exist as defined above, the set !!\{1\}!! would exist also, and we would have !!\{1\} \in 1!! which would contradict the axiom of foundation.

[3] This was a recurring topic of study for Imre Lakatos, most famously in his little book Proofs and Refutations. Also important is his article “Cauchy and the continuum: the significance of nonstandard analysis for the history and philosophy of mathematics.” Math. Intelligencer 1 (1978), #3, p.151–161, which I discussed here earlier, and which you can read in its entirety by paying the excellent people at Elsevier the nominal and reasonable (nay, trivial) sum of only US$39.95.

[Other articles in category /math] permanent link

Thu, 02 Nov 2017

I missed an easy solution to a silly problem

A few years back I wrote a couple of articles about the extremely poor macro plugin I wrote for Blosxom. ([1] [2]). The feature-poorness of the macro system is itself the system's principal feature, since it gives the system simple behavior and simple implementation. Sometimes this poverty means I have to use odd workarounds to get it to do what I want, but they are always simple workarounds and it is never hard to figure out why it didn't do what I wanted.

Yesterday, though, I got stuck. I had defined a macro, ->, which the macro system would replace with →. Fine. But later in the file I had an HTML comment:

<!-- blah blah -->

and the macro plugin duly transformed this to

<!-- blah blah -→

creating an unterminated comment.

Normally the way I would deal with this would be to change the name of the macro from -> to something else that did not conflict with HTML syntax, but I was curiously resistant to this. I could not think of anything I wanted to use instead. (After a full night's sleep, it seems to me that => would have been just fine.)

I then tried replacing the > at the end of --> with an &# character escape. I didn't expect this to work, and indeed it didn't. Those &# escapes only work for text parts of an HTML document, and cannot be used for HTML syntax. They remove the special meaning of character sequences, which is the opposite of what I need here.

Eventually, I just deleted the comment and moved on. That worked, although it was obviously suboptimal. I was too tired to think, and I just wanted the problem out of the way. I wish I had been a little less impulsive, because there are at least two other solutions I overlooked:

I could have defined an END-HTML-COMMENT macro that expanded to --> and then used it at the end of the comment. The results of macro expansion are never re-expanded, so the resulting --> would not have been subject to further replacement. This is ugly, but would have done the job, and I have used this sort of technique before.

Despite its smallness, the macro plugin has had feature creep over the years, and some of these features I put in and then forgot about. (Bad programmer! No cookie!) One of these lost features is the directive #undefall, which instructs the plugin to forget all the macro definitions. I could have solved the problem by sticking this in just before the broken comment.

(The plugin does not (yet) have a selective #undef. It would be easy to add, but the module has too many features already.)

Yesterday morning I asked my co-workers if there was an alternative HTML comment syntax, or some way to modify the comment ending sequence so that the macro plugin wouldn't spoil it. (I think there isn't, and a short look at the HTML 5.0 standard didn't suggest any workaround.)

One of the co-workers was Tye McQueen. He said that as far as he knew there was no fix to the HTML comments that was like what I had asked for. He asked whether I could define a second macro, -->, which would expand to -->.

I carefully explained why this wouldn't work: when two macro definitions share a prefix, and they both match, the macro system does not make any guarantee about which substitution it will perform. If there are two overlapping macros, say:

#define pert curt

#define per bloods

then the string pertains might turn into curtains (if the first substitution is performed) or into bloodstains (if the second is). But you don't know which it will be, and it might be different each time. I could fix this, but at present I prefer the answer to be “don't define a macro that is a prefix of another macro.”

Then M. McQueen gently pointed out that -> is not a prefix of -->. It is a suffix. My objection does not apply, and his suggestion solves the problem.
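The difference between a shared prefix and a shared suffix shows up in a toy expander. This is a hypothetical sketch in Javascript, not the Blosxom plugin's code: expand and escapeRegExp are names made up here, and the sketch breaks ties by definition order, whereas the real plugin makes no such guarantee.

```javascript
// Escape characters that are special in a regular expression.
function escapeRegExp(s) {
  return s.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Single left-to-right pass. At each position the first macro name (in
// definition order) that matches is replaced; replacement text is emitted
// as-is and never rescanned.
function expand(text, macros) {
  const names = Object.keys(macros).map(escapeRegExp);
  const pattern = new RegExp(names.join("|"), "g");
  return text.replace(pattern, (name) => macros[name]);
}

// Shared prefix: both names match at the same position, so the result
// depends on which one happens to be tried first.
console.log(expand("pertains", { pert: "curt", per: "bloods" })); // curtains
console.log(expand("pertains", { per: "bloods", pert: "curt" })); // bloodstains

// Shared suffix: --> starts one character before its -> does, so a
// left-to-right scan reaches --> first, whatever the definition order.
console.log(expand("a -> b <!-- c -->", { "->": "→", "-->": "-->" }));
// a → b <!-- c -->
```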

When I write an Oops post I try to think about what lesson I can learn from the mistake. This time there isn't too much, but I do have a couple of ideas:

Before giving up on some piece of software I've written, look to see if I foresaw the problem and put in a feature to deal with it. I have a notion that this would work surprisingly often.

There are two ways to deal with the problem of writing software with too many features, and I have worked on this problem for many years from only one direction: try to write fewer features. But I could also come at it from the opposite direction: try to make better use of the features that I do write.

It feels odd that this should seem to me like a novel idea, but there it is.

Try to get more sleep.

(I haven't published an oops article in far too long, and it certainly isn't because I haven't been making mistakes. I will try to keep it in mind.)

[Other articles in category /oops] permanent link