Mark Dominus (陶敏修)

mjd@pobox.com

Archive:

| 2026: | J |

| 2025: | JFMAMJ |

| JASOND | |

| 2024: | JFMAMJ |

| JASOND | |

| 2023: | JFMAMJ |

| JASOND | |

| 2022: | JFMAMJ |

| JASOND | |

| 2021: | JFMAMJ |

| JASOND | |

| 2020: | JFMAMJ |

| JASOND | |

| 2019: | JFMAMJ |

| JASOND | |

| 2018: | JFMAMJ |

| JASOND | |

| 2017: | JFMAMJ |

| JASOND | |

| 2016: | JFMAMJ |

| JASOND | |

| 2015: | JFMAMJ |

| JASOND | |

| 2014: | JFMAMJ |

| JASOND | |

| 2013: | JFMAMJ |

| JASOND | |

| 2012: | JFMAMJ |

| JASOND | |

| 2011: | JFMAMJ |

| JASOND | |

| 2010: | JFMAMJ |

| JASOND | |

| 2009: | JFMAMJ |

| JASOND | |

| 2008: | JFMAMJ |

| JASOND | |

| 2007: | JFMAMJ |

| JASOND | |

| 2006: | JFMAMJ |

| JASOND | |

| 2005: | OND |

In this section:

Subtopics:

| Mathematics | 245 |

| Programming | 100 |

| Language | 95 |

| Miscellaneous | 75 |

| Book | 50 |

| Tech | 49 |

| Etymology | 35 |

| Haskell | 33 |

| Oops | 30 |

| Unix | 27 |

| Cosmic Call | 25 |

| Math SE | 25 |

| Law | 22 |

| Physics | 21 |

| Perl | 17 |

| Biology | 16 |

| Brain | 15 |

| Calendar | 15 |

| Food | 15 |

Comments disabled

Tue, 04 Dec 2018

I figured out that context manager bug!

A couple of days ago I described a strange bug in my “Greenlight” project that was causing Git to fail unpredictably, saying:

fatal: this operation must be run in a work tree

The problem seemed to go away when I changed

with env_var("GIT_DIR", self.repo_dir):

with env_var("GIT_WORK_TREE", self.work_dir):

result = subprocess.run(command, ...)

to

with env_var("GIT_DIR", self.repo_dir, "GIT_WORK_TREE", self.work_dir):

result = subprocess.run(command, ...)

but I didn't understand why. I said:

This was so unexpected that I wondered if the real problem was nondeterministic and if some of the debugging messages had somehow perturbed it. But I removed everything but the context manager change and ran another test, which succeeded. By then I was five and half hours into the debugging and I didn't have any energy left to actually understand what the problem had been. I still don't know.

The problem re-manifested again today, and this time I was able to track it down and fix it. The context manager code I mentioned above was not the issue.

That subprocess.run call is made inside a git_util object which,

as you can see in the tiny excerpt above, has a self.work_dir

attribute that tells it where to find the working tree. Just before

running a Git command, the git_util object installs self.work_dir

into the environment to tell Git where the working tree is.

The git_util object is originally manufactured by Greenlight itself,

which sets the work_dir attribute to a path that contains the

current process ID number. Just before the process exits, Greenlight

destroys the working tree. This way, concurrent processes never try

to use the same working tree, which would be a mess.

When Greenlight needs to operate on the repository, it uses its

git_util object directly. It also creates a submission object to

represent the submitted branch, and it installs the git_util object

into the submission object, so that the submission object can also

operate on the repository. For example, the submission object may ask

its git_util object if it needs to be rebased onto some other

branch, and if so to please do it. So:

- Greenlight has a

submission. submission.gitis thegit_utilobject that deals with Git.submission.git.work_diris the path to the per-process temporary working tree.

Greenlight's main purpose is to track these submission objects, and it has a database of them. To save time when writing the initial implementation, instead of using a real database, I had Greenlight use Python's “pickle” feature to pickle the list of submissions.

Someone would submit a branch, and Greenlight would pickle the

submission. The submission contained its git_util object, and that

got pickled along with the rest. Then Greenlight would exit and, just

before doing so, it would destroy its temporary working tree.

Then later, when someone else wanted to approve the submission for

publication, Greenlight would set up a different working tree with its

new process ID, and unpickle the submission. But the submission's

git.work_dir had been pickled with the old path, which no longer

existed.

The context manager was working just fine. It was setting

GIT_WORK_TREE to the work_dir value in the git_util object. But

the object was obsolete and its work_dir value pointed to a

directory that had been destroyed!

Adding to the confusion:

Greenlight's own

git_utilobject was always fresh and had the right path in it, so Git commands run directly by Greenlight all worked properly.Any new

submissionobjects created by Greenlight would have the right path, so Git commands run by fresh submissions also worked properly.Greenlight doesn't always destroy the working tree when it exits. If it exits abnormally, it leaves the working tree intact, for a later autopsy. And the unpickled submission would work perfectly if the working tree still existed, and it would be impossible to reproduce the problem!

Toward the end of the previous article, I said:

I suspect I'm being sabotaged somewhere by Python's weird implicit ideas of scope and variable duration, but I don't know. Yet.

For the record, then: The issue was indeed one of variable duration. But Python's weird implicit ideas were, in this instance, completely blameless. Instead the issue was caused by a software component even more complex and more poorly understood: “Dominus”.

This computer stuff is amazingly complicated. I don't know how anyone gets anything done.

[Other articles in category /prog/bug] permanent link

Sun, 02 Dec 2018There are combinatorial objects called necklaces and bracelets and I can never remember which is which.

Both are finite sequences of things (typically symbols) where the

start point is not important. So the bracelet ABCDE is considered

to be the same as the bracelets BCDEA, CDEAB, DEABC, and

EABCD.

One of the two also disregards the direction you go, so that

ABCDE is also considered the same as EDCBA (and so also DCBAE,

etc.). But which? I have to look it up every time.

I have finally thought of a mnemonic. In a necklace, the direction is important, because to reverse an actual necklace you have to pull it off over the wearer's head, turn it over, and put it back on. But in a bracelet the direction is not important, because it could be on either wrist, and a bracelet on the left wrist is the same as the reversed bracelet on the right wrist.

Okay, silly, maybe, but I think it's going to work.

[Other articles in category /math] permanent link

Another day, another bug. No, four bugs.

I'm working on a large and wonderful project called “Greenlight”. It's a Git branch merging service that implements the following workflow:

- Submitter submits a branch to Greenlight (

greenlight submit my-topic-branch) - Greenlight analyzes the branch to decide if it changes anything that requires review and signoff

- If so, it contacts the authorized reviewers, who then inform

Greenlight that they approve the changes (

greenlight approve 03a46dc1) - Greenlight merges the branch to

masterand publishes the result to the central repository

Of course, there are many details elided here.

Multiple instances of Greenlight share a local repository, but to avoid

confusion each has its own working tree. In Git you can configure

these by setting GIT_DIR and GIT_WORK_TREE environment variables,

respectively. When Greenlight needs to run a Git command, it does so

like this:

with env_var("GIT_DIR", self.repo_dir):

with env_var("GIT_WORK_TREE", self.work_dir):

result = subprocess.run(command, ...)

The env_var here is a Python context manager that saves the old

environment, sets the new environment variable, and then when the body

of the block is complete, it restores the environment to the way it

was. This worked in testing every time.

But the first time a beta tester ran the approve command, Greenlight

threw a fatal exception. It was trying to run git checkout --quiet

--detach, and this was failing, with Git saying

fatal: this operation must be run in a work tree

Where was the GIT_WORK_TREE setting going? I still don't know. But

in the course of trying to track the problem down, I changed the code

above to:

with env_var("GIT_DIR", self.repo_dir, "GIT_WORK_TREE", self.work_dir):

result = subprocess.run(command, ...)

and the problem, whatever it was, no longer manifested.

But this revealed a second bug: Greenlight no longer failed in the

approval phase. It went ahead and merged the branch, and then tried

to publish the merge with git push origin .... But the push was

rejected.

This is because the origin repository had an update hook that ran

on every push, which performed the same review analysis that Greenlight

was performing; one of Greenlight's main purposes is to be a

replacement for this hook. To avoid tying up the main repository for

too long, this hook had a two-minute timeout, after which it would die

and reject the push. This had only happened very rarely in the past,

usually when someone was inadvertently trying to push a malformed

branch. For example, they might have rebased all of master onto

their topic branch. In this case, however, the branch really was

legitimately enormous; it contained over 2900 commits.

“Oh, right,” I said. “I forgot to add the exception to the hook that tells it that it can immediately approve anything pushed by Greenlight.” The hook can assume that if the push comes from Greenlight, it has already been checked and authorized.

Pushes are happening via SSH, and Greenlight has its own SSH identity,

which is passed to the hook itself in the GL_USERNAME variable.

Modifying the hook was easy: I just added:

if environ["GL_USERNAME"] == 'greenlight':

exit(0)

This didn't work. My first idea was that Greenlight's public SSH key

had not been installed in the authorized_keys file in the right

place. When I grepped for greenlight in the authorized_keys file,

there were no matches. The key was actually there, but in Gitlab the

authorized_keys file doesn't have actual usernames in it. It has

internal userids, which are then mapped to GL_USERNAME variables by

some other entity. So I chased that wild goose for a while.

Eventually I determined that the key was in the right place, but

that the name of the Greenlight identity on the receiving side was not

greenlight but bot-greenlight, which I had forgotten.

So I changed the exception to say:

if environ["GL_USERNAME"] == 'bot-greenlight':

exit(0)

and it still didn't work. I eventually discovered that when

Greenlight did the push, the GL_USERNAME was actually set to mjd.

“Oh, right,” I said. “I forgot to have Greenlight use its own

SSH credentials in the ssh connection.”

The way you do this is to write a little wrapper program that obtains

the correct credentials and runs ssh, and then you set GIT_SSH to

point to the wrapper. It looks like this:

#!/usr/bin/env bash

export -n SSH_CLIENT SSH_TTY SSH_AUTH_SOCK SSH_CONNECTION

exec /usr/bin/ssh -i $HOME/.ssh/identity "$@"

But wait, why hadn't I noticed this before? Because, apparently,

every single person who had alpha-tested Greenlight had had their own

credentials stored in ssh-agent, and every single one had had

agent-forwarding enabled, so that when Greenlight tried to use ssh

to connect to the Git repository, SSH duly forwarded their credentials

along and the pushes succeeded. Amazing.

With these changes, the publication went through. I committed the changes to the SSH credential stuff, and some other unrelated changes, and I looked at what was left to see what had actually fixed the original bug. Every change but one was to add diagnostic messages and logging. The fix for the original bug had been to replace the nested context managers with a single context manager. This was so unexpected that I wondered if the real problem was nondeterministic and if some of the debugging messages had somehow perturbed it. But I removed everything but the context manager change and ran another test, which succeeded. By then I was five and half hours into the debugging and I didn't have any energy left to actually understand what the problem had been. I still don't know.

If you'd like to play along at home, the context manager looks like this, and did not change during the debugging process:

from contextlib import contextmanager

@contextmanager

def env_var(*args):

# Save old values of environment variables in `old`

# A saved value of `None` means that the variable was not there before

old = {}

for i in range(len(args)//2):

(key, value) = (args[2*i : 2*i+2])

old[key] = None

if key in os.environ:

old[key] = os.environ[str(key)]

if value is None: os.environ.pop(str(key), "dummy")

else:

os.environ[str(key)] = str(value)

yield

# Undo changes from versions saved in `old`

for (key, value) in old.items():

if value is None: os.environ.pop(str(key), "dummy")

else: os.environ[str(key)] = value

I suspect I'm being sabotaged somewhere by Python's weird implicit ideas of scope and variable duration, but I don't know. Yet.

This computer stuff is amazingly complicated. I don't know how anyone gets anything done.

[ Addendum 20181204: I figured it out. ]

[Other articles in category /prog/bug] permanent link

Thu, 29 Nov 2018

How many kinds of polygonal loops? (part 2)

And I said I thought there were nine analogous figures with six points.

Rahul Narain referred me to a recent discussion of almost this exact question on Math Stackexchange. (Note that the discussion there considers two figures different if they are reflections of one another; I consider them the same.) The answer turns out to be OEIS A000940. I had said:

… for !!N=6!!, I found it hard to enumerate. I think there are nine shapes but I might have missed one, because I know I kept making mistakes in the enumeration …

I missed three. The nine I got were:

And the three I missed are:

I had tried to break them down by the arrangement of the outside ring of edges, which can be described by a composition. The first two of these have type !!1+1+1+1+2!! (which I missed completely; I thought there were none of this type) and the other has type !!1+2+1+2!!, the same as the !!015342!! one in the lower right of the previous diagram.

I had ended by saying:

I would certainly not trust myself to hand-enumerate the !!N=7!! shapes.

Good call, Mr. Dominus! I considered filing this under “oops” but I decided that although I had gotten the wrong answer, my confidence in it had been adequately low. On one level it was a mistake, but on a higher and more important level, I did better.

I am going to try the (Cauchy-Frobenius-)Burnside-(Redfield-)Pólya lemma on it next and see if I can get the right answer.

Thanks again to Rahul Narain for bringing this to my attention.

[Other articles in category /math] permanent link

How many kinds of polygonal loops?

Take !!N!! equally-spaced points on a circle. Now connect them in a loop: each point should be connected to exactly two others, and each point should be reachable from the others. How many geometrically distinct shapes can be formed?

For example, when !!N=5!!, these four shapes can be formed:

(I phrased this like a geometry problem, but it should be clear it's actually a combinatorics problem. But it's much easier to express as a geometry problem; to talk about the combinatorics I have to ask you to consider a permutation !!P!! where !!P(i±1)≠P(i)±1!! blah blah blah…)

For !!N<5!! it's easy. When !!N=3!! it is always a triangle. When !!N=4!! there are only two shapes: a square and a bow tie.

But for !!N=6!!, I found it hard to enumerate. I think there are nine shapes but I might have missed one, because I know I kept making mistakes in the enumeration, and I am not sure they are all corrected:

It seems like it ought not to be hard to generate and count these, but so far I haven't gotten a good handle on it. I produced the !!N=6!! display above by considering the compositions of the number !!6!!:

| Composition | How many loops? |

|---|---|

| 6 | 1 |

| 1+5 | — |

| 2+4 | 1 |

| 3+3 | 1 |

| 1+1+4 | — |

| 1+2+3 | 1 |

| 2+2+2 | 2 |

| 1+1+1+3 | — |

| 1+1+2+2 | 1 |

| 1+2+1+1 | 1 |

| 1+1+1+1+2 | — |

| 1+1+1+1+1+1 | 1 |

| Total | 9 (?) |

(Actually it's the compositions, modulo bracelet symmetries — that is, modulo the action of the dihedral group.)

But this is fraught with opportunities for mistakes in both directions. I would certainly not trust myself to hand-enumerate the !!N=7!! shapes.

[ Addendum: For !!N=6!! there are 12 figures, not 9. For !!N=7!!, there are 39. Further details. ]

[Other articles in category /math] permanent link

Sun, 25 Nov 2018A couple of years back I was thinking about how to draw a good approximation to an equilateral triangle on a piece of graph paper. There are no lattice points that are exactly the vertices of an equilateral triangle, but you can come close, and one way to do it is to find integers !!a!! and !!b!! with !!\frac ba\approx \sqrt 3!!, and then !!\langle 0, 0\rangle, \langle 2a, 0\rangle,!! and !!\langle a, b\rangle!! are almost an equilateral triangle.

But today I came back to it for some reason and I wondered if it would be possible to get an angle closer to 60°, or numbers that were simpler, or both, by not making one of the sides of the triangle perfectly horizontal as in that example.

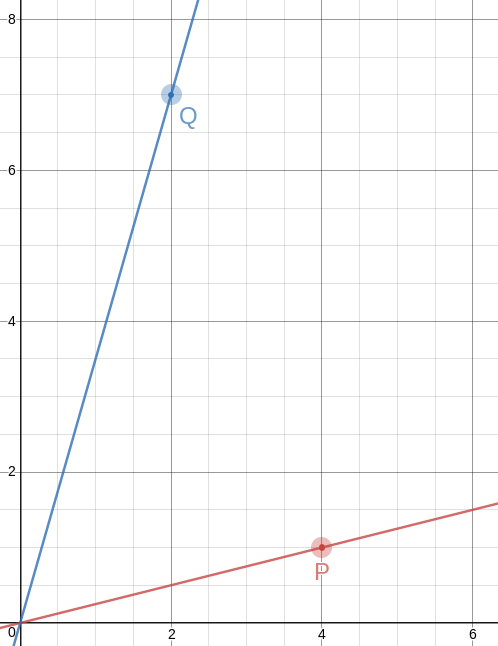

So okay, we want to find !!P = \langle a, b\rangle!! and !!Q = \langle c,d\rangle!! so that the angle !!\alpha!! between the rays !!\overrightarrow{OP}!! and !!\overrightarrow{OQ}!! is as close as possible to !!\frac\pi 3!!.

The first thing I thought of was that the dot product !!P\cdot Q = |P||Q|\cos\alpha!!, and !!P\cdot Q!! is super-easy to calculate, it's just !!ac+bd!!. So we want $$\frac{ad+bc}{|P||Q|} = \cos\alpha \approx \frac12,$$ and everything is simple, except that !!|P||Q| = \sqrt{a^2+b^2}\sqrt{c^2+d^2}!!, which is not so great.

Then I tried something else, using high-school trigonometry. Let !!\alpha_P!! and !!\alpha_Q!! be the angles that the rays make with the !!x!!-axis. Then !!\alpha = \alpha_Q - \alpha_P = \tan^{-1} \frac dc - \tan^{-1} \frac ba!!, which we want close to !!\frac\pi3!!.

Taking the tangent of both sides and applying the formula $$\tan(q-p) = \frac{\tan q - \tan p}{1 + \tan q \tan p}$$ we get $$ \frac{\frac dc - \frac ba}{1 + \frac dc\frac ba} \approx \sqrt3.$$ Or simplifying a bit, the super-simple $$\frac{ad-bc}{ac+bd} \approx \sqrt3.$$

After I got there I realized that my dot product idea had almost worked. To get rid of the troublesome !!|P||Q|!! you should consider the cross product also. Observe that the magnitude of !!P\times Q!! is !!|P||Q|\sin\alpha!!, and is also $$\begin{vmatrix} a & b & 0 \\ c & d & 0 \\ 1 & 1 & 1 \end{vmatrix} = ad - bc$$ so that !!\sin\alpha = \frac{ad-bc}{|P||Q|}!!. Then if we divide, the !!|P||Q|!! things cancel out nicely: $$\tan\alpha = \frac{\sin\alpha}{\cos\alpha} = \frac{ad-bc}{ac+bd}$$ which we want to be as close as possible to !!\sqrt 3!!.

Okay, that's fine. Now we need to find some integers !!a,b,c,d!! that do what we want. The usual trick, “see what happens if !!a=0!!”, is already used up, since that's what the previous article was about. So let's look under the next-closest lamppost, and let !!a=1!!. Actually we'll let !!b=1!! instead to keep things more horizonal. Then, taking !!\frac74!! as our approximation for !!\sqrt3!!, we want

$$\frac{ad-c}{ac+d} = \frac74$$

or equivalently $$\frac dc = \frac{7a+4}{4a-7}.$$

Now we just tabulate !!7a+4!! and !!4a-7!! looking for nice fractions:

| !!a!! | !!d =!! !!7a+4!! | !!c=!! !!4a-7!! |

|---|---|---|

| 2 | 18 | 1 |

| 3 | 25 | 5 |

| 4 | 32 | 9 |

| 5 | 39 | 13 |

| 6 | 46 | 17 |

| 7 | 53 | 21 |

| 8 | 60 | 25 |

| 9 | 67 | 29 |

| 10 | 74 | 33 |

| 11 | 81 | 37 |

| 12 | 88 | 41 |

| 13 | 95 | 45 |

| 14 | 102 | 49 |

| 15 | 109 | 53 |

| 16 | 116 | 57 |

| 17 | 123 | 61 |

| 18 | 130 | 65 |

| 19 | 137 | 69 |

| 20 | 144 | 73 |

Each of these gives us a !!\langle c,d\rangle!! point, but some are much better than others. For example, in line 3, we have take !!\langle 5,25\rangle!! but we can use !!\langle 1,5\rangle!! which gives the same !!\frac dc!! but is simpler. We still get !!\frac{ad-bc}{ac+bd} = \frac 74!! as we want.

Doing this gives us the two points !!P=\langle 3,1\rangle!! and !!Q=\langle 1, 5\rangle!!. The angle between !!\overrightarrow{OP}!! and !!\overrightarrow{OQ}!! is then !!60.255°!!. This is exactly the same as in the approximately equilateral !!\langle 0, 0\rangle, \langle 8, 0\rangle,!! and !!\langle 4, 7\rangle!! triangle I mentioned before, but the numbers could not possibly be easier to remember. So the method is a success: I wanted simpler numbers or a better approximation, and I got the same approximation with simpler numbers.

To draw a 60° angle on graph paper, mark !!P=\langle 3,1\rangle!! and !!Q=\langle 1, 5\rangle!!, draw lines to them from the origin with a straightedge, and there is your 60° angle, to better than a half a percent.

There are some other items in the table (for example row 18 gives !!P=\langle 18,1\rangle!! and !!Q=\langle 1, 2\rangle!!) but because of the way we constructed the table, every row is going to give us the same angle of !!60.225°!!, because we approximated !!\sqrt3\approx\frac74!! and !!60.225° = \tan^{-1}\frac74!!. And the chance of finding numbers better than !!\langle 3,1\rangle!! and !!\langle 1, 5\rangle!! seems slim. So now let's see if we can get the angle closer to exactly !!60°!! by using a better approximation to !!\sqrt3!! than !!\frac 74!!.

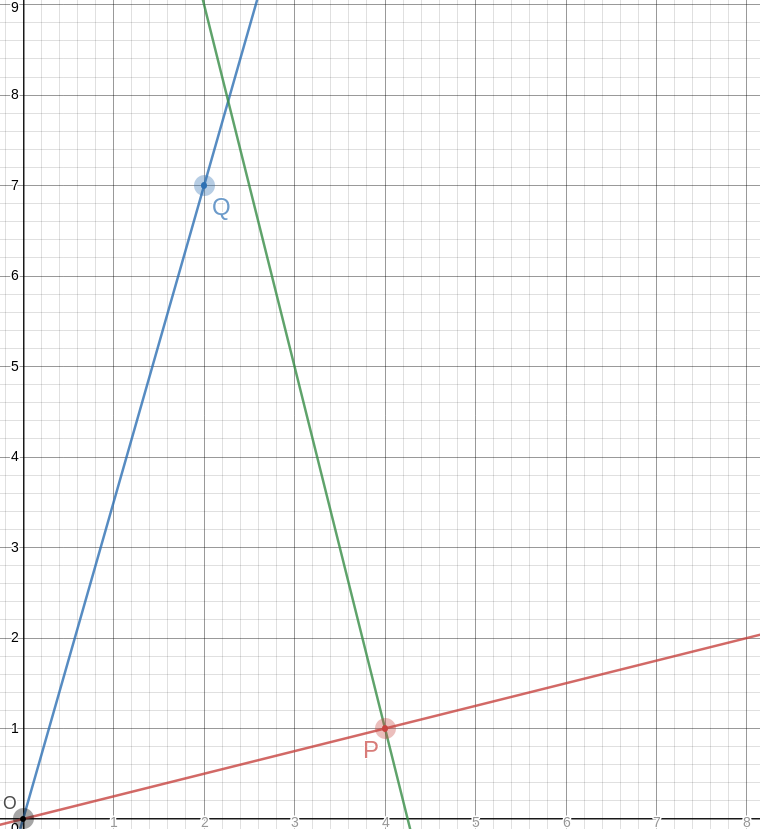

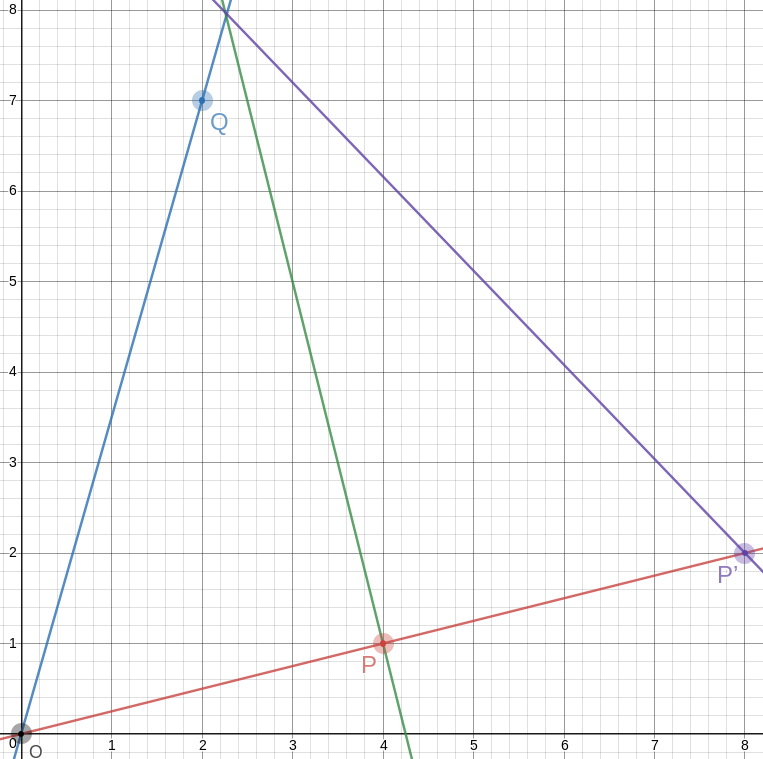

The next convergents to !!\sqrt 3!! are !!\frac{19}{11}!! and !!\frac{26}{15}!!. I tried the same procedure for !!\frac{19}{11}!! and it was a bust. But !!\frac{26}{15}!! hit the jackpot: !!a=4!! gives us !!15a-26 = 34!! and !!26a-15=119!!, both of which are multiples of 17. So the points are !!P=\langle 4,1\rangle!! and !!Q=\langle 2, 7\rangle!!, and this time the angle between the rays is !!\tan^{-1}\frac{26}{15} = 60.018°!!. This is as accurate as anyone drawing on graph paper could possibly need; on a circle with a one-mile radius it is an error of 20 inches.

Of course, the triangles you get are no longer equilateral, not even close. That first one has sides of !!\sqrt{10}, \sqrt{20}, !! and !!\sqrt{26}!!, and the second one has sides of !!\sqrt{17}, \sqrt{40}, !! and !!\sqrt{53}!!. But! The slopes of the lines are so simple, it's easy to construct equilateral triangles with a straightedge and a bit of easy measuring. Let's do it on the !!60.018°!! angle and see how it looks.

!!\overrightarrow{OP}!! has slope !!\frac14!!, so the perpendicular to it has slope !!-4!!, which means that you can draw the perpendicular by placing the straightedge between !!P!! and some point !!P+x\langle -1, 4\rangle!!, say !!\langle 2, 9\rangle!! as in the picture. The straightedge should have slope !!-4!!, which is very easy to arrange: just imagine the little squares grouped into stacks of four, and have the straightedge go through opposite corners of each stack. The line won't necessarily intersect !!\overrightarrow{OQ}!! anywhere great, but it doesn't need to, because we can just mark the intersection, wherever it is:

Let's call that intersection !!A!! for “apex”.

The point opposite to !!O!! on the other side of !!P!! is even easier; it's just !!P'=2P =\langle 8, 2\rangle!!. And the segment !!P'A!! is the third side of our equilateral triangle:

This triangle is geometrically similar to a triangle with vertices at !!\langle 0, 0\rangle, \langle 30, 0\rangle,!! and !!\langle 15, 26\rangle!!, and the angles are just close to 60°, but it is much smaller.

Woot!

[Other articles in category /math] permanent link

Mon, 19 Nov 2018I think I forgot to mention that I was looking recently at hamiltonian cycles on a dodecahedron. The dodecahedron has 30 edges and 20 vertices, so a hamiltonian path contains 20 edges and omits 10. It turns out that it is possible to color the edges of the dodecahedron in three colors so that:

- Every vertex is incident to one edge of each color

- The edges of any two of the three colors form a hamiltonian cycle

Marvelous!

(In this presentation, I have taken one of the vertices and sent it away to infinity. The three edges with arrowheads are all attached to that vertex, off at infinity, and the three faces incident to it have been stretched out to cover the rest of the plane.)

Every face has five edges and there are only three colors, so the colors can't be distributed evenly around a face. Each face is surrounded by two edges of one color, two of a second color, and only one of the last color. This naturally divides the 12 faces into three classes, depending on which color is assigned to only one edge of that face.

Each class contains two pairs of two adjacent pentagons, and each adjacent pair is adjacent to the four pairs in the other classes but not to the other pair in its own class.

Each pair shares a single edge, which we might call its “hinge”. Each pair has 8 vertices, of which two are on its hinge, four are adjacent to the hinge, and two are not near of the hinge. These last two vertices are always part of the hinges of the pairs of a different class.

I could think about this for a long time, and probably will. There is plenty more to be seen, but I think there is something else I was supposed to be doing today, let me think…. Oh yes! My “job”! So I will leave you to go on from here on your own.

[ Addendum 20181203: David Eppstein has written a much longer and more detailed article about triply-Hamiltonian edge colorings, using this example as a jumping-off point. ]

[Other articles in category /math] permanent link

Sat, 17 Nov 2018

How do you make a stella octangula?

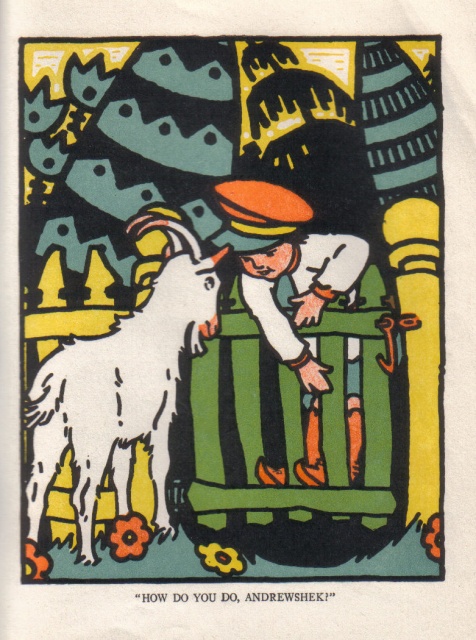

Yesterday Katara asked me out of nowhere “When you make a stella octangula, do you build it up from an octahedron or a tetrahedron?” Stella octangula was her favorite polyhedron eight years ago.

“Uh,” I said. “Both?”

Then she had to make one to see what I meant. You can start with a regular octahedron:

Then you erect spikes onto four of the octahedron's faces; this produces a regular tetrahedron:

Then you erect spikes onto the other four of the octahedron's faces. Now you have a stella octangula.

So yeah, both. Or instead of starting with a unit octahedron and erecting eight spikes of size 1, you can start with a unit tetrahedron and erect four spikes of size ½. It's both at once.

[Other articles in category /math] permanent link

Tue, 13 Nov 2018

Counting paths through polyhedra

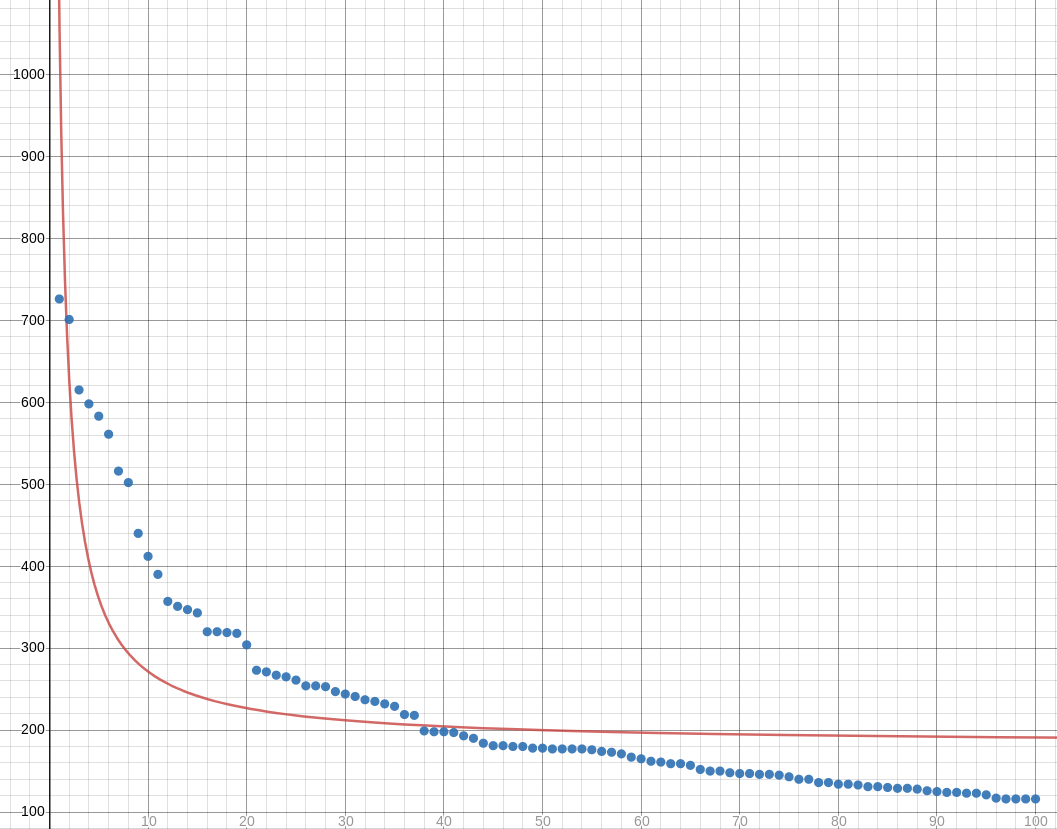

A while back someone asked on math stack exchange how many paths there were of length !!N!! from one vertex of a dodecahedron to the opposite vertex. The vertices are distance 5 apart, so for !!N<5!! the answer is zero, but the paths need not be simple, so the number grows rapidly with !!N!!; there are 58 million paths of length 19.

This is the kind of thing that the computer answers easily, so that's where I started, and unfortunately that's also where I finished, saying:

I'm still working out a combinatorial method of calculating the answer, and I may not be successful.

Another user reminded me of this and I took another whack at it. I couldn't remember what my idea had been last year, but my idea this time around was to think of the dodecahedron as the Cayley graph for a group, and then the paths are expressions that multiply out to a particular group element.

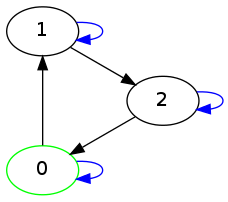

I started by looking at a tetrahedron instead of at a dodecahedron, to see how it would work out. Here's a tetrahedron.

Let's say we're counting paths from the center vertex to one of the others, say the one at the top. (Tetrahedra don't have opposite vertices, but that's not an important part of the problem.) A path is just a list of edges, and each edge is labeled with a letter !!a!!, !!b!!, or !!c!!. Since each vertex has exactly one edge with each label, every sequence of !!a!!'s, !!b!!'s, and !!c!!'s represents a distinct path from the center to somewhere else, although not necessarily to the place we want to go. Which of these paths end at the bottom vertex?

The edge labeling I chose here lets us play a wonderful trick. First, since any edge has the same label at both ends, the path !!x!! always ends at the same place as !!xaa!!, because the first !!a!! goes somewhere else and then the second !!a!! comes back again, and similarly !!xbb!! and !!xcc!! also go to the same place. So if we have a path that ends where we want, we can insert any number of pairs !!aa, bb, !! or !!cc!! and the new path will end at the same place.

But there's an even better trick available. For any starting point, and any letters !!x!! and !!y!!, the path !!xy!! always ends at the same place as !!yx!!. For example, if we start at the middle and follow edge !!b!!, then !!c!!, we end at the lower left; similarly if we follow edge !!c!! and then !!b!! we end at the same place, although by a different path.

Now suppose we want to find all the paths of length 7 from the middle to the top. Such a path is a sequence of a's, b's, and c's of length 7. Every such sequence specifies a different path out of the middle vertex, but how can we recognize which sequences end at the top vertex?

Since !!xy!! always goes to the same place as !!yx!!, the order of the

seven letters doesn't matter.

A complicated-seeming path like abacbcb must go to the same place

as !!aabbbcc!!, the same path with the letters in alphabetical order.

And since !!xx!! always goes back to

where it came from, the path !!aabbbcc!! goes to the same place as !!b!!

Since the paths we want are those that go to the same place as the trivial path !!c!!, we want paths that have an even number of !!a!!s and !!b!!s and an odd number of !!c!!s. Any path fitting that description will go to same place as !!c!!, which is the top vertex. It's easy to enumerate such paths:

| Prototypical path | How many? |

|---|---|

| ccccccc | 1 |

| cccccaa | 21 |

| cccccbb | 21 |

| cccaaaa | 35 |

| cccaabb | 210 |

| cccbbbb | 35 |

| caaaaaa | 7 |

| caaaabb | 105 |

| caabbbb | 105 |

| cbbbbbb | 7 |

| Total | 547 |

Here something like “cccbbbb” stands for all the paths that have three c's and four b's, in some order; there are !!\frac{7!}{4!3!} = 35!! possible orders, so 35 paths of this type. If we wanted to consider paths of arbitrary length, we could use Burnside's lemma, but I consider the tetrahedron to have been sufficiently well solved by the observations above (we counted 547 paths by hand in under 60 seconds) and I don't want to belabor the point.

Okay! Easy-peasy!

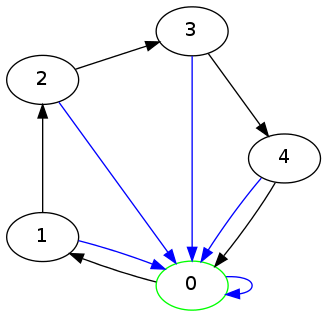

Now let's try cubes:

Here we'll consider paths between two antipodal vertices in the upper left and the lower right, which I've colored much darker gray than the other six vertices.

The same magic happens as in the tetrahedron. No matter where we

start, and no matter what !!x!! and !!y!! are, the path !!xy!! always

gets us to the same place as !!yx!!. So again, if some complicated

path gets us where we want to go, we can permute its components into

any order and get a different path of the same length to the same

place. For example, starting from the upper left, bcba, abcb, and

abbc all go to the same place.

And again, because !!xx!! always make a trip along one edge and then

back along the same edge, it never goes anywhere. So the three paths

in the previous paragraph also go to the same place as ac and ca

and also aa bcba bb aa aa aa aa bb cc cc cc bb.

We want to count paths from one dark vertex to the other. Obviously

abc is one such, and so too must be bac, cba, acb, and so

forth. There are six paths of length 3.

To get paths of length 5, we must insert a pair of matching letters

into one of the paths of length 3. Without loss of generality we can

assume that we are inserting aa. There are 20 possible orders for

aaabc, and three choices about which pair to insert, for a total of

60 paths.

To get paths of length 7, we must insert two pairs. If the two pairs are the same, there are !!\frac{7!}{5!} = 42!! possible orders and 3 choices about which letters to insert, for a total of 126. If the two pairs are different, there are !!\frac{7!}{3!3!} = 140!! possible orders and again 3 choices about which pairs to insert, for a total of 420, and a grand total of !!420+126 = 546!! paths of length 7. Counting the paths of length 9 is almost as easy. For the general case, again we could use Burnside's lemma, or at this point we could look up the unusual sequence !!6, 60, 546!! in OEIS and find that the number of paths of length !!2n+1!! is already known to be !!\frac34(9^n-1)!!.

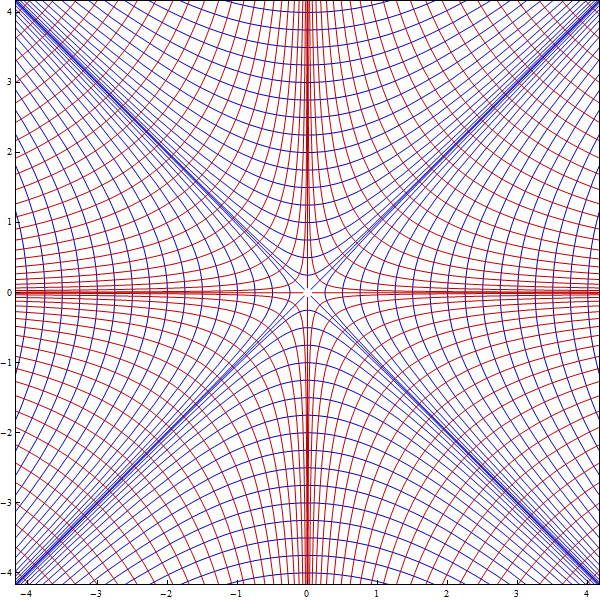

So far this technique has worked undeservedly well. The original problem wanted to use it to study paths on a dodecahedron. Where, unfortunately, the magic property !!xy=yx!! doesn't hold. It is possible to label the edges of the dodecahedron so that every sequence of labels determines a unique path:

but there's nothing like !!xy=yx!!. Well, nothing exactly like it. !!xy=yx!! is equivalent to !!(xy)^2=1!!, and here instead we have !!(xy)^{10}=1!!. I'm not sure that helps. I will probably need another idea.

The method fails similarly for the octahedron — which is good, because I can use the octahedron as a test platform to try to figure out a new idea. On an octahedron we need to use four kinds of labels because each vertex has four edges emerging from it:

Here again we don't have !!(xy)^2=1!! but we do have !!(xy)^3 = 1!!. So it's possible that if I figure out a good way to enumerate paths on the octahedron I may be able to adapt the technique to the dodecahedron. But the octahedron will be !!\frac{10}3!! times easier.

Viewed as groups, by the way, these path groups are all examples of Coxeter groups. I'm not sure this is actually a useful observation, but I've been wanting to learn about Coxeter groups for a long time and this might be a good enough excuse.

[Other articles in category /math] permanent link

A puzzle about representing numbers as a sum of 3-smooth numbers

I think this would be fun for a suitably-minded bright kid of maybe 12–15 years old.

Consider the following table of numbers of the form !!2^i3^j!!:

| 1 | 3 | 9 | 27 | 81 | 243 |

| 2 | 6 | 18 | 54 | 162 | … |

| 4 | 12 | 36 | 108 | … | … |

| 8 | 24 | 72 | 216 | … | … |

| 16 | 48 | 144 | … | … | … |

| 32 | 96 | … | … | … | … |

| 64 | 192 | … | … | … | … |

| 128 | … | … | … | … | … |

Given a number !!n!!, it is possible to represent !!n!! as a sum of entries from the table, with the following constraints:

- No more than one entry from any column

- An entry may only be used if it is in a strictly higher row than any entry to its left.

For example, one may not represent !!23 = 2 + 12 + 9!!, because the !!12!! is in a lower row than the !!2!! to its left.

| 1 | 3 | 9 | 27 |

| 2 | 6 | 18 | 54 |

| 4 | 12 | 36 | 108 |

But !!23 = 8 + 6 + 9!! is acceptable, because 6 is higher than 8, and 9 is higher than 6.

| 1 | 3 | 9 | 27 |

| 2 | 6 | 18 | 54 |

| 4 | 12 | 36 | 108 |

| 8 | 24 | 72 | 216 |

Or, put another way: can we represent any number !!n!! in the form $$n = \sum_i 2^{a_i}3^{b_i}$$ where the !!a_i!! are strictly decreasing and the !!b_i!! are strictly increasing?

Spoiler:

maxpow3 1 = 1

maxpow3 2 = 1

maxpow3 n = 3 * maxpow3 (n `div` 3)

rep :: Integer -> [Integer]

rep 0 = []

rep n = if even n then map (* 2) (rep (n `div` 2))

else (rep (n - mp3)) ++ [mp3] where mp3 = maxpow3 n

Sadly, the representation is not unique. For example, !!8+3 = 2+9!!, and !!32+24+9 = 32+6+27 = 8+12=18+27!!.

[Other articles in category /math] permanent link

Fri, 09 Nov 2018

Why I never finish my Haskell programs (part 3 of ∞)

I'm doing more work on matrix functions. A matrix represents a

relation, and I am representing a matrix as a [[Integer]]. Then

matrix addition is simply liftA2 (liftA2 (+)). Except no, that's

not right, and this is not a complaint, it's certainly my mistake.

The overloading for liftA2 for lists does not do what I want, which

is to apply the operation to each pair of correponding elements. I want

liftA2 (+) [1,2,3] [10,20,30] to be [11,22,33] but it is not.

Instead liftA2 lifts an operation to apply to each possible pair of

elements, producing [11,21,31,12,22,32,13,23,33].

And the twice-lifted version is

similarly not what I want:

$$ \require{enclose} \begin{pmatrix}1&2\\3&4\end{pmatrix}\enclose{circle}{\oplus} \begin{pmatrix}10&20\\30&40\end{pmatrix}= \begin{pmatrix} 11 & 21 & 12 & 22 \\ 31 & 41 & 32 & 42 \\ 13 & 23 & 14 & 24 \\ 33 & 43 & 34 & 44 \end{pmatrix} $$

No problem, this is what ZipList is for. ZipLists are just regular

lists that have a label on them that advises liftA2 to lift an

operation to the element-by-element version I want instead of the

each-one-by-every-other-one version that is the default. For instance

liftA2 (+) (ZipList [1,2,3]) (ZipList [10,20,30])

gives ZipList [11,22,33], as desired. The getZipList function

turns a ZipList back into a regular list.

But my matrices are nested lists, so I need to apply the ZipList

marker twice, once to the outer list, and once to each of the inner

lists, because I want the element-by-element behavior at both

levels. That's easy enough:

matrix :: [[a]] -> ZipList (ZipList a)

matrix m = ZipList (fmap ZipList m)

(The fmap here is actually being specialized to map, but that's

okay.)

Now

(liftA2 . liftA2) (+) (matrix [[1,2],[3,4]]) (matrix [[10,20],[30, 40]])

does indeed produce the result I want, except that the type markers are still in there: instead of

[[11,22],[33,44]]

I get

ZipList [ ZipList [11, 22], ZipList [33, 44] ]

No problem, I'll just use getZipList to turn them back again:

unmatrix :: ZipList (ZipList a) -> [[a]]

unmatrix m = getZipList (fmap getZipList m)

And now matrix addition is finished:

matrixplus :: [[a]] -> [[a]] -> [[a]]

matrixplus m n = unmatrix $ (liftA2 . liftA2) (+) (matrix m) (matrix n)

This works perfectly.

But the matrix and unmatrix pair bugs me a little. This business

of changing labels at both levels has happened twice already and

I am likely to need it again. So I will turn the two functions

into a single higher-order function by abstracting over ZipList.

This turns this

matrix m = ZipList (fmap ZipList m)

into this:

twice zl m = zl (fmap zl m)

with the idea that I will now have matrix = twice ZipList and

unmatrix = twice getZipList.

The first sign that something is going wrong is that twice does not

have the type I wanted. It is:

twice :: Functor f => (f a -> a) -> f (f a) -> a

where I was hoping for something more like this:

twice :: (Functor f, Functor g) => (f a -> g a) -> f (f a) -> g (g a)

which is not reasonable to expect: how can Haskell be expected to

figure out I wanted two diferent functors in there when there is only one

fmap? And indeed twice does not work; my desired matrix = twice

ZipList does not even type-check:

<interactive>:19:7: error:

• Occurs check: cannot construct the infinite type: a ~ ZipList a

Expected type: [ZipList a] -> ZipList a

Actual type: [a] -> ZipList a

• In the first argument of ‘twice’, namely ‘ZipList’

In the expression: twice ZipList

In an equation for ‘matrix’: matrix = twice ZipList

• Relevant bindings include

matrix :: [[ZipList a]] -> ZipList a (bound at <interactive>:20:5)

Telling GHC explicitly what type I want for twice doesn't work

either, so I decide it's time to go to lunch. I take paper with me,

and while I am eating my roast pork hoagie with sharp provolone and

spinach (a popular local delicacy) I work out the results of the type

unification algorithm on paper for both cases to see what goes wrong.

I get the same answers that Haskell got, but I can't see where the difference was coming from.

So now, instead of defining matrix operations, I am looking into the

type unification algorithm and trying to figure out why twice

doesn't work.

And that is yet another reason why I never finish my Haskell programs. (“What do you mean, λ-abstraction didn't work?”)

[Other articles in category /prog/haskell] permanent link

Thu, 08 Nov 2018

Haskell type checker complaint 184 of 698

I want to build an adjacency matrix for the vertices of a cube; this

is a matrix that has m[a][b] = 1 exactly when vertices a and b

share an edge. We can enumerate the vertices arbitrarily but a

convenient way to do it is to assign them the numbers 0 through 7 and

then say that vertices !!a!! and !!b!! are adjacent if, regarded as

binary numerals, they differ in exactly one bit, so:

import Data.Bits

a `adj` b = if (elem (xor a b) [1, 2, 4]) then 1 else 0

This compiles and GHC infers the type

adj :: (Bits a, Num a, Num t) => a -> a -> t

Fine.

Now I want to build the adjacency matrix, which is completely straightforward:

cube = [ [a `adj` b | b <- [0 .. 7] ] | a <- [0 .. 7] ] where

a `adj` b = if (elem (xor a b) [1, 2, 4]) then 1 else 0

Ha ha, no it isn't; in Haskell nothing is straightforward. This

produces 106 lines of type whining, followed by a failed compilation.

Apparently this is because because 0 and 7 are overloaded, and

could mean some weird values in some freakish instance of Num, and

then 0 .. 7 might generate an infinite list of 1-graded torsion

rings or something.

To fix this I have to say explicitly what I mean by 0. “Oh, yeah,

by the way, that there zero is intended to denote the integer zero,

and not the 1-graded torsion ring with no elements.”

cube = [ [a `adj` b | b <- [0 :: Integer .. 7] ] | a <- [0 .. 7] ] where

a `adj` b = if (elem (xor a b) [1, 2, 4]) then 1 else 0

Here's another way I could accomplish this:

zero_i_really_mean_it = 0 :: Integer

cube = [ [a `adj` b | b <- [zero_i_really_mean_it .. 7] ] | a <- [0 .. 7] ] where

a `adj` b = if (elem (xor a b) [1, 2, 4]) then 1 else 0

Or how about this?

cube = [ [a `adj` b | b <- numbers_dammit [0 .. 7] ] | a <- [0 .. 7] ] where

p `adj` q = if (elem (xor p q) [1, 2, 4]) then 1 else 0

numbers_dammit = id :: [Integer] -> [Integer]

I think there must be something really wrong with the language design here. I don't know exactly what it is, but I think someone must have made the wrong tradeoff at some point.

[Other articles in category /prog/haskell] permanent link

How not to reconfigure your sshd

Yesterday I wanted to reconfigure the sshd on a remote machine.

Although I'd never done sshd itself, I've done this kind of thing

a zillion times before. It looks like this: there is a configuration

file (in this case /etc/ssh/sshd-config) that you modify. But this

doesn't change the running server; you have to notify the server that

it should reread the file. One way would be by killing the server and

starting a new one. This would interrupt service, so instead you can

send the server a different signal (in this case SIGHUP) that tells

it to reload its configuration without exiting. Simple enough.

Except, it didn't work. I added:

Match User mjd

ForceCommand echo "I like pie!"

and signalled the server, then made a new connection to see if it

would print I like pie! instead of starting a shell. It started a

shell. Okay, I've never used Match or ForceCommand before, maybe

I don't understand how they work, I'll try something simpler. I

added:

PrintMotd yes

which seemed straightforward enough, and I put some text into

/etc/motd, but when I connected it didn't print the motd.

I tried a couple of other things but none of them seemed to work.

Okay, maybe the sshd is not getting the signal, or something? I

hunted up the logs, but there was a report like what I expected:

sshd[1210]: Received SIGHUP; restarting.

This was a head-scratcher. Was I modifying the wrong file? It semed

hardly possible, but I don't administer this machine so who knows? I

tried lsof -p 1210 to see if maybe sshd had some other config file

open, but it doesn't keep the file open after it reads it, so that was

no help.

Eventually I hit upon the answer, and I wish I had some useful piece of advice here for my future self about how to figure this out. But I don't because the answer just struck me all of a sudden.

(It's nice when that happens, but I feel a bit cheated afterward: I solved the problem this time, but I didn't learn anything, so how does it help me for next time? I put in the toil, but I didn't get the full payoff.)

“Aha,” I said. “I bet it's because my connection is multiplexed.”

Normally when you make an ssh connection to a remote machine, it

calls up the server, exchanges credentials, each side authenticates

the other, and they negotiate an encryption key. Then the server

forks, the child starts up a login shell and mediates between the

shell and the network, encrypting in one direction and decrypting in

the other. All that negotiation and authentication takes time.

There is a “multiplexing” option you can use instead. The handshaking

process still occurs as usual for the first connection. But once the

connection succeeds, there's no need to start all over again to make a

second connection. You can tell ssh to multiplex several virtual

connections over its one real connection. To make a new virtual

connection, you run ssh in the same way, but instead of contacting

the remote server as before, it contacts the local ssh client that's

already running and requests a new virtual connection. The client,

already connected to the remote server, tells the server to allocate a

new virtual connection and to start up a new shell session for it.

The server doesn't even have to fork; it just has to allocate another

pseudo-tty and run a shell in it. This is a lot faster.

I had my local ssh client configured to use a virtual connection if

that was possible. So my subsequent ssh commands weren't going

through the reconfigured parent server. They were all going through

the child server that had been forked hours before when I started my

first connection. It wasn't affected by reconfiguration of the parent

server, from which it was now separate.

I verified this by telling ssh to make a new connection without

trying to reuse the existing virtual connection:

ssh -o ControlPath=none -o ControlMaster=no ...

This time I saw the MOTD and when I reinstated that Match command I

got I like pie! instead of a shell.

(It occurs to me now that I could have tried to SIGHUP the child server process that my connections were going through, and that would probably have reconfigured any future virtual connections through that process, but I didn't think of it at the time.)

Then I went home for the day, feeling pretty darn clever, right up

until I discovered, partway through writing this article, that I can't

log in because all I get is I like pie! instead of a shell.

[Other articles in category /Unix] permanent link

Fri, 02 Nov 2018

Another trivial utility: git-q

One of my favorite programs is a super simple Git utility called

git-vee

that I just love, and I use fifty times a day. It displays a very

simple graph that shows where two branches diverged. For example, my

push of master was refused because it was not a

fast-forward. So I

used git-vee to investigate, and saw:

* a41d493 (HEAD -> master) new article: Migraine

* 2825a71 message headers are now beyond parody

| * fa2ae34 (origin/master) message headers are now beyond parody

|/

o 142c68a a bit more information

The current head (master) and its upstream (origin/master) are

displayed by default. Here the nearest common ancestor is 142c68a,

and I can see the two commits after that on master that are

different from the commit on origin/master. The command is called

git-vee because the graph is (usually) V-shaped, and I want to find

out where the point of the V is and what is on its two arms.

From this V, it appears that what happened was: I pushed fa2ae34,

then amended it to produce 2825a71, but I have not yet force-pushed

the amendment. Okay! I should simply do the force-push now…

Except wait, what if that's not what happened? What if what

happened was, 2825a71 was the original commit, and I pushed it, then

fetched it on a different machine, amended it to produce fa2ae34,

and force-pushed that? If so, then force-pushing 2825a71 now would

overwrite the amendments. How can I tell what I should do?

Formerly I would have used diff and studied the differences, but now

I have an easier way to find the answer. I run:

git q HEAD^ origin/master

and it produces the dates on which each commit was created:

2825a71 Fri Nov 2 02:30:06 2018 +0000

fa2ae34 Fri Nov 2 02:25:29 2018 +0000

Aha, it was as I originally thought: 2825a71 is five minutes newer.

The force-push is the right thing to do this time.

Although the commit date is the default output, the git-q

command can

produce any of the information known to git-log, using the usual

escape sequences.

For example, git q %s ... produces subject lines:

% git q %s HEAD origin/master 142c68a

a41d493 new article: Migraine

fa2ae34 message headers are now beyond parody

142c68a a bit more information

and git q '%an <%ae>' tells you who made the commits:

a41d493 Mark Jason Dominus (陶敏修) <mjd@plover.com>

fa2ae34 Mark Jason Dominus (陶敏修) <mjd@plover.com>

142c68a Mark Jason Dominus (陶敏修) <mjd@plover.com>

The program is in my personal git-util repository but it's totally simple and should be easy to customize the way you want:

#!/usr/bin/python3

from sys import argv, stderr

import subprocess

if len(argv) < 3: usage()

if argv[1].startswith('%'):

item = argv[1]

ids = argv[2:]

else:

item='%cd'

ids = argv[1:]

for id in ids:

subprocess.run([ "git", "--no-pager",

"log", "-1", "--format=%h " + item, id])

[Other articles in category /prog] permanent link

Mon, 29 Oct 2018Warning: Long and possibly dull.

I spent a big chunk of today fixing a bug that should have been easy but that just went deeper and deeper. If you look over in the left sidebar there you'll se a sub-menu titled “subtopics” with a per-category count of the number of articles in each section of this blog. (Unless you're using a small display, where the whole sidebar is suppressed.) That menu was at least a year out of date. I wanted to fix it.

The blog software I use is the wonderfully terrible

Blosxom. It has a plugin system,

and the topic menu was generated by a plugin that I wrote some time

ago. When the topic plugin starts up it opens two Berkeley

DB files. Each is a simple

key-value mapping. One maps topic names to article counts. The other

is just a set of article IDs for the articles that have already been

counted. These key-value mappings are exposed in Perl as hash

variables.

When I regenerate the static site, the topic plugin has a

subroutine, story, that is called for each article in each generated

page. The business end of the subroutine looks something like this:

sub story {

# ... acquire arguments ..

if ( $Seen{ $article_id } ) {

return;

} else {

$topic_count{ $article_topic }++;

$Seen{ $article_id } = 1;

}

}

The reason the menu wasn't being updated is that at some point in the

past, I changed the way story plugins were called. Out of the box,

Blosxom passes story a list of five arguments, like this:

my ($pkg, $path, $filename, $story_ref, $title_ref) = @_;

Over the years I had extended this to eight or nine, and I felt it was getting unwieldy, so at some point I changed it to pass a hash, like this:

my %args = (

category => $path, # directory of this story

filename => $fn, # filename of story, without suffix

...

)

$entries = $plugin->story(\%args);

When I made this conversion, I had to convert all the plugins. I

missed converting topic. So instead of getting the eight or nine

arguments it expected, it got two: the plugin itself, and the hash.

Then it used the hash as the key into the databases, which by now

were full of thousands of entries for things like HASH(0x436c1d)

because that is what Perl silently and uselessly does if you try to

use a hash as if it were a string.

Anyway, this was easily fixed, or should have been easily fixed. All I needed to do was convert the plugin to use the new calling convention. Ha!

One thing all my plugins do when they start up is write a diagnostic log, something like this:

sub start {

open F, ">", "/tmp/topic.$>";

print F "Writing to $blosxom::plugin_state_dir/topics\n";

}

Then whenever the plugin has something to announce it just does

print F. For example, when the plugin increments the count for a

topic, it inserts a message like this:

print F "'$article_id' is item $topic_count{$article_topic} in topic $article_topic.\n";

If the article has already been seen, it remains silent.

Later I can look in /tmp/topic.119 or whatever to see what it said.

When I'm debugging a plugin, I can open an Emacs buffer on this file

and put it in auto-revert mode so that Emacs always displays the

current contents of the file.

Blosxom has an option to generate pages on demand for a web browser,

and I use this for testing. https://blog.plover.com/PATH is the

static version of the article, served from a pre-generated static

file. But https://blog.plover.com/test/PATH calls Blosxom as a CGI

script to generate the article on the fly and send it to the browser.

So I visited https://blog.plover.com/test/2018/, which should

generate a page with all the articles from 2018, to see what the

plugin put in the file. I should have seen it inserting a lot of

HASH(0x436c1d) garbage:

'lang/etym/Arabic-2' is article 1 in topic HASH(0x22c501b)

'addenda/200801' is article 1 in topic HASH(0x5300aa2)

'games/poker-24' is article 1 in topic HASH(0x4634a79)

'brain/pills' is article 1 in topic HASH(0x1a9f6ab)

'lang/long-s' is article 1 in topic HASH(0x29489be)

'google-roundup/200602' is article 1 in topic HASH(0x360e6f5)

'prog/van-der-waerden-1' is article 1 in topic HASH(0x3f2a6dd)

'math/math-se-gods' is article 1 in topic HASH(0x412b105)

'math/pow-sqrt-2' is article 1 in topic HASH(0x23ebfe4)

'aliens/dd/p22' is article 1 in topic HASH(0x878748)

I didn't see this. I saw the startup message and nothing else. I did

a bunch of very typical debugging, such as having the plugin print a

message every time story was called:

sub story {

print F "Calling 'story' (@_)\n";

...

}

Nothing. But I knew that story was being called. Was I maybe

editing the wrong file on disk? No, because I could introduce a

syntax error and the browser would happily report the resulting 500

Server Error. Fortunately, somewhere along the way I changed

open F, ">", "/tmp/topic.$>";

to

open F, ">>", "/tmp/topic.$>";

and discovered that each time I loaded the page, the plugin was run

exactly twice. When I had had >, the second run would immediately

overwrite the diagnostics from the first run.

But why was the plugin being run twice? This took quite a while to track down. At first I suspected that Blosxom was doing it, either on purpose or by accident. My instance of Blosxom is a hideous Frankenstein monster that has been cut up and reassembled and hacked and patched dozens of times since 2006 and it is full of unpleasant surprises. But the problem turned out to be quite different. Looking at the Apache server logs I saw that the browser was actually making two requests, not one:

100.14.199.174 - mjd [28/Oct/2018:18:00:49 +0000] "GET /test/2018/ HTTP/1.1" 200 213417 "-" ...

100.14.199.174 - mjd [28/Oct/2018:18:00:57 +0000] "GET /test/2018/BLOGIMGREF/horseshoe-curve-small.mp4 HTTP/1.1" 200 623 ...

Since the second request was for a nonexistent article, the story

callback wasn't invoked in the second run. So I would see the startup

message, but I didn't see any messages from the story callback.

They had been there in the first run for the first request, but that

output was immediately overwritten on the second request.

BLOGIMGREF is a tag that I include in image URLs, that expands to

whatever is the appropriate URL for the images for the particular

article it's in. This expansion is done by a different plugin, called

path2, and apparently in this case it wasn't being expanded. The

place it was being used was easy enough to find; it looked like this:

<video width="480" height="270" controls>

<source src="BLOGIMGREF/horseshoe-curve-small.mp4" type="video/mp4">

</video>

So I dug down into the path2 plugin to find out why BLOGIMGREF

wasn't being replaced by the correct URL prefix, which should have

been in a different domain entirely.

This took a very long time to track down, and I think it was totally

not my fault. When I first wrote path2 I just had it do a straight

text substitution. But at some point I had improved this to use a real

HTML parser, supplied by the Perl HTML::TreeBuilder module. This

would parse the article body and return a tree of HTML::Element

objects, which the plugin would then filter, looking for img and a

elements. The plugin would look for the magic tags and replace them

with the right URLs.

This magic tag was not in an img or an a element, so the plugin

wasn't finding it. I needed to tell the plugin to look in source

elements also. Easy fix! Except it didn't work.

Then began a tedious ten-year odyssey through the HTML::TreeBuilder

and HTML::Element modules to find out why it hadn't worked. It took

a long time because I'm good at debugging. When you lose your wallet,

you look in the most likely places first, and I know from many years

of experience what the most likely places are — usually in my

misunderstanding of the calling convention of some library I didn't

write, or my misunderstanding of what it was supposed to do; sometimes

in my own code. The downside of this is that when the wallet is in

an unlikely place it takes a really long time to find it.

The end result this time was that it wasn't in any of the usual

places. It was 100% not my fault: HTML::TreeBuilder has a bug in

its parser. For

some reason it completely ignores source elements:

perl -MHTML::TreeBuilder -e '$z = q{<source src="/media/horseshoe-curve-small.mp4" type="video/mp4"/>}; HTML::TreeBuilder->new->parse($z)->eof->elementify()->dump(\*STDERR)'

The output is:

<html> @0 (IMPLICIT)

<head> @0.0 (IMPLICIT)

<body> @0.1 (IMPLICIT)

No trace of the source element. I reported the bug, commented out

the source element in the article, and moved on. (The article was

unpublished, in part because I could never get the video to play

properly in the browser. I had been tearing my hair about over it,

but now I knew why! The BLOGIMGREF in the URL was not being

replaced! Because of a bug in the HTML parser!)

With that fixed I went back to finish the work on the topic plugin.

Now that the diagnostics were no longer being overwritten by the bogus

request for /test/2018/BLOGIMGREF/horseshoe-curve-small.mp4, I

expected to see the HASH(0x436c1d) garbage. I did, and I fixed

that. Then I expected the 'article' is article 17 in topic prog

lines to go away. They were only printed for new articles that hadn't

been seen before, and by this time every article should have been in

the %Seen database.

But no, every article on the page, every article from 2018, was being processed every time I rebuilt the page. And the topic counts were going up, up, up.

This also took a long time to track down, because again the cause was so unlikely. I must have been desperate because I finally found it by doing something like this:

if ( $Seen{ $article_id } ) {

return;

} else {

$topic_count{ $article_topic }++;

$Seen{ $article_id } = 1;

die "WTF!!" unless $Seen{ $article_id };

}

Yep, it died. Either Berkeley DB, or Perl's BerkeleyDB module, was

just flat-out not working. Both of them are ancient, and this kind of

shocking bug should have been shaken out 20 years go. WTF, indeed,

I fixed this by discarding the entire database and rebuilding it. I

needed to clean out the HASH(0x436c1d) crap anyway.

I am sick of DB files. I am never using them again. I have been bitten too many times. From now on I am doing the smart thing, by which I mean the dumb thing, the worse-is-better thing: I will read a plain text file into memory, modify it, and write out the modified version whem I am done. It will be simple to debug the code and simple to modify the database.

Well, that sucked. Usually this sort of thing is all my fault, but this time I was only maybe 10% responsible.

At least it's working again.

[ Addendum: I learned that discarding the source element is a

⸢feature⸣ of HTML::Parser. It has a list of valid HTML4 tags and by

default it ignores any element that isn't one. The maintainer won't

change the default to HTML5 because that might break backward

compatibility for people who are depending on this behavior. ]

[Other articles in category /prog/bug] permanent link

Sun, 28 Oct 2018

More about auto-generated switch-cases

Yesterday I described what I thought was a cool hack I had seen in

rsync, to try several

possible methods and then remember which one worked so as to skip the

others on future attempts. This was abetted by a different hack, for

automatically generating the case labels for the switch, which I

thought was less cool.

Simon Tatham wrote to me with a technique for compile-time generation

of case labels that I liked better. Recall that the context is:

int set_the_mtime(...) {

static int switch_step = 0;

switch (switch_step) {

#ifdef METHOD_1_MIGHT_WORK

case ???:

if (method_1_works(...))

break;

switch_step++;

/* FALLTHROUGH */

#endif

#ifdef METHOD_2_MIGHT_WORK

case ???:

if (method_2_works(...))

break;

switch_step++;

/* FALLTHROUGH */

#endif

... etc. ...

}

return 1;

}

M. Tatham suggested this:

#define NEXT_CASE switch_step = __LINE__; case __LINE__

You use it like this:

int set_the_mtime(...) {

static int switch_step = 0;

switch (switch_step) {

default:

#ifdef METHOD_1_MIGHT_WORK

NEXT_CASE:

if (method_1_works(...))

break;

/* FALLTHROUGH */

#endif

#ifdef METHOD_2_MIGHT_WORK

NEXT_CASE:

if (method_2_works(...))

break;

/* FALLTHROUGH */

#endif

... etc. ...

}

return 1;

}

The case labels are no longer consecutive, but that doesn't matter;

all that is needed is for them to be distinct. Nobody is ever going

to see them except the compiler. M. Tatham called this

“the case __LINE__ trick”, which suggested to me that it was

generally known. But it was new to me.

One possible drawback of this method is that if the file contains more

than 255 lines, the case labels will not fit in a single byte. The

ultimate effect of this depends on how the compiler handles switch.

It might be compiled into a jump table with !!2^{16}!! entries, which

would only be a problem if you had to run your program in 1986. Or it

might be compiled to an if-else tree, or something else we don't want.

Still, it seems like a reasonable bet.

You could use case 0: at the beginning instead of default:, but

that's not as much fun. M. Tatham observes that it's one of very few

situations in which it makes sense not to put default: last. He

says this is the only other one he knows:

switch (month) {

case SEPTEMBER:

case APRIL:

case JUNE:

case NOVEMBER:

days = 30;

break;

default:

days = 31;

break;

case FEBRUARY:

days = 28;

if (leap_year)

days = 29;

break;

}

Addendum 20181029: Several people have asked for an explanation of why

the default is in the middle of the last switch. It follows the

pattern of a very well-known mnemonic

poem that goes

Thirty days has September,

April, June and November.

All the rest have thirty-one

Except February, it's a different one:

It has 28 days clear,

and 29 each leap year.

Wikipedia says:

[The poem has] been called “one of the most popular and oft-repeated verses in the English language” and “probably the only sixteenth-century poem most ordinary citizens know by heart”.

[Other articles in category /prog] permanent link

Sat, 27 Oct 2018

A fun optimization trick from rsync

I was looking at the rsync source code today and I saw a neat trick

I'd never seen before. It wants to try to set the mtime on a file,

and there are several methods that might work, but it doesn't know

which. So it tries them in sequence, and then it remembers which one

worked and uses that method on subsequent calls:

int set_the_mtime(...) {

static int switch_step = 0;

switch (switch_step) {

case 0:

if (method_0_works(...))

break;

switch_step++;

/* FALLTHROUGH */

case 1:

if (method_1_works(...))

break;

switch_step++;

/* FALLTHROUGH */

case 2:

...

case 17:

if (method_17_works(...))

break;

return -1; /* ultimate failure */

}

return 0; /* success */

}

The key item here is the static switch_step variable. The first

time the function is called, its value is 0 and the switch starts at

case 0. If methods 0 through 7 all fail and method 8 succeeds,

switch_step will have been set to 8, and on subsequent calls to the

function the switch will jump immediately to case 8.

The actual code is a little more sophisticated than this. The list of

cases is built depending on the setting of several compile-time config

flags, so that the code that is compiled only includes the methods

that are actually callable. Calling one of the methods can produce

three distinguishable results: success, real failure (because of

permission problems or some such), or a sort of fake failure

(ENOSYS) that only means that the underlying syscall is

unimplemented. This third type of result is the one where it makes

sense to try another method. So the cases actually look like this:

case 7:

if (method_7_works(...))

break;

if (errno != ENOSYS)

return -1; /* real failure */

switch_step++;

/* FALLTHROUGH */

On top of this there's another trick: since the various cases are

conditionally compiled depending on the config flags, we don't know

ahead of time which ones will be included. So the case labels

themselves are generated at compile time this way:

#include "case_N.h"

if (method_7_works(...))

break;

...

#include "case_N.h"

if (method_8_works(...))

break;

...

The first time we #include "case_N.h", it turns into case 0:; the

second time, it turns into case 1:, and so on:

#if !defined CASE_N_STATE_0

#define CASE_N_STATE_0

case 0:

#elif !defined CASE_N_STATE_1

#define CASE_N_STATE_1

case 1:

...

#else

#error Need to add more case statements!

#endif

Unfortunately you can only use this trick one switch per file.

Although I suppose if you really wanted to reuse it you could make a

reset_case_N.h file which would contain

#undef CASE_N_STATE_0

#undef CASE_N_STATE_1

...

[ Addendum 20181028: Simon Tatham brought up a technique for

generating the case labels that we agree is

better than what rsync did. ]

[Other articles in category /prog] permanent link

Fri, 26 Oct 2018

A snide addendum about implicit typeclass instances

In an earlier article I demanded:

Maybe someone can explain to me why this is a useful behavior, and then explain why it is so useful that it should happen automatically …

“This” being that instead of raising a type error, Haskell quietly accepts this nonsense:

fmap ("super"++) (++"weasel")

but it clutches its pearls and faints in horror when confronted with this expression:

fmap ("super"++) "weasel"

Nobody did explain this.

But I imagined

someone earnestly explaining: “Okay, but in the first case, the

(++"weasel") is interpreted as a value in the environment functor,

so fmap is resolved to its the environment instance, which is (.).

That doesn't happen in the second example.”

Yeah, yeah, I know that. Hey, you know what else is a functor? The

identity functor. If fmap can be quietly demoted to its (->) e

instance, why can't it also be quietly demoted to its Id instance,

which is ($), so that fmap ("super"++) "weasel" can quietly

produce "superweasel"?

I understand this is a terrible idea. To be clear, what I want is for it to collapse on the divan for both expressions. Pearl-clutching is Haskell's finest feature and greatest strength, and it should do it whenever possible.

[Other articles in category /prog/haskell] permanent link

Tue, 23 Oct 2018A few years back I asked on history stackexchange:

Wikipedia's article on the Halifax Gibbet says, with several citations:

…ancient custom and law gave the Lord of the Manor the authority to execute summarily by decapitation any thief caught with stolen goods to the value of 13½d or more…

My question being: why 13½ pence?

This immediately attracted an answer that was no good at all. The author began by giving up:

This question is historically unanswerable.

I've met this guy and probably you have too: he knows everything worth knowing, and therefore what he doesn't know must be completely beyond the reach of mortal ken. But that doesn't mean he will shrug and leave it at that, oh no. Having said nobody could possibly know, he will nevertheless ramble for six or seven decreasingly relevant paragraphs, as he did here.

45 months later, however, a concise and pertinent answer was given by Aaron Brick:

13½d is a historical value called a loonslate. According to William Hone, it has a Scottish origin, being two-thirds of the Scottish pound, as the mark was two-thirds of the English pound. The same value was proposed as coinage for South Carolina in 1700.

This answer makes me happy in several ways, most of them positive. I'm glad to have a lead for where the 13½ pence comes from. I'm glad to learn the odd word “loonslate”. And I'm glad to be introduced to the bizarre world of pre-union Scottish currency, which, in addition to the loonslate, includes the bawbee, the unicorn, the hardhead, the bodle, and the plack.

My pleasure has a bit of evil spice in it too. That fatuous claim that the question was “historically unanswerable” had been bothering me for years, and M. Brick's slam-dunk put it right where it deserved.

I'm still not completely satisfied. The Scottish mark was worth ⅔ of a pound Scots, and the pound Scots, like the English one, was divided not into 12 pence but into 20 shillings of 12 pence each, so that a Scottish mark was worth 160d, not 13½d. Brick cites William Hone, who claims that the pound Scots was divided into twelve pence, rather than twenty shillings, so that a mark was worth 13⅔ pence, but I can't find any other source that agrees with him. Confusing the issue is that starting under the reign of James VI and I in 1606, the Scottish pound was converted to the English at a rate of twelve-to-one, so that a Scottish mark would indeed have been convertible to 13⅔ English pence, except that the English didn't denominate pence in thirds, so perhaps it was legally rounded down to 13½ pence. But this would all have been long after the establishment of the 13½d in the Halifax gibbet law and so unrelated to it.

Or would it? Maybe the 13½d entered popular consciousness in the 17th century, acquired the evocative slang name “hangman’s wages”, and then an urban legend arose about it being the cutoff amount for the Halifax gibbet, long after the gibbet itself was dismantled arond 1650. I haven't found any really convincing connection between the 13½d and the gibbet that dates earlier than 1712. The appearance of the 13½d in the gibbet law could be entirely the invention of Samuel Midgley.

I may dig into this some more. The 1771 Encyclopædia Britannica has a 16-page article on “Money” that I can look at. I may not find out what I want to know, but I will probably find out something.

[Other articles in category /history] permanent link

Getting Applicatives from Monads and “>>=” from “join”

I complained recently about GHC not being able to infer an

Applicative instance from a type that already has a Monad

instance, and there is a related complaint that the Monad instance

must define >>=. In some type classes, you get a choice about

what to define, and then the rest of the functions are built from the

ones you provided. To take a particular simple example, with Eq

you have the choice of defining == or /=, and if you omit one

Haskell will construct the other for you. It could do this with >>=

and join, but it doesn't, for technical reasons I don't

understand

[1]

[2]

[3].

But both of these problems can be worked around. If I have a Monad instance, it seems to work just fine if I say:

instance Applicative Tree where

pure = return

fs <*> xs = do

f <- fs

x <- xs

return (f x)

Where this code is completely canned, the same for every Monad.

And if I know join but not >>=, it seems to work just fine if I say:

instance Monad Tree where

return = ...

x >>= f = join (fmap f x) where

join tt = ...

I suppose these might faul foul of whatever problem is being described in the documents I linked above. But I'll either find out, or I won't, and either way is a good outcome.

[ Addendum: Vaibhav Sagar points out that my definition of <*> above

is identical to that of Control.Monad.ap, so that instead of

defining <*> from scratch, I could have imported ap and then

written <*> = ap. ]

[ Addendum 20221021: There are actually two definitions of <*> that will work. [1] [2] ]

[Other articles in category /prog/haskell] permanent link

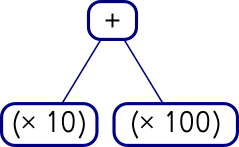

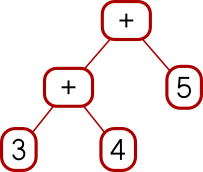

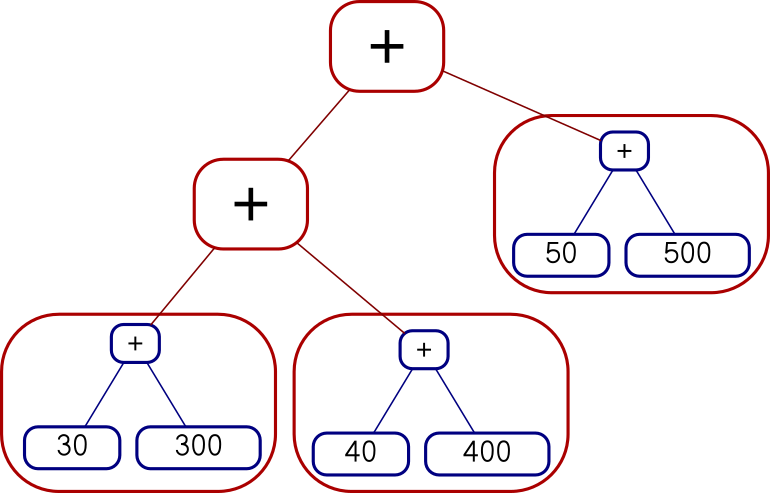

Mon, 22 Oct 2018While I was writing up last week's long article about Traversable, I wrote this stuff about Applicative also. It's part of the story but I wasn't sure how to work it into the other narrative, so I took it out and left a remark that “maybe I'll publish a writeup of that later”. This is a disorganized collection of loosely-related paragraphs on that topic.

It concerns my attempts to create various class instance definitions for the following type:

data Tree a = Con a | Add (Tree a) (Tree a)

deriving (Eq, Show)

which notionally represents a type of very simple expression tree over values of type a.

I need some function for making Trees that isn't too

simple or too complicated, and I went with:

h n | n < 2 = Con n

h n = if even n then Add (h (n `div` 2)) (h (n `div` 2))

else Add (Con 1) (h (n - 1))

which builds trees like these:

2 = 1 + 1

3 = 1 + (1 + 1)

4 = (1 + 1) + (1 + 1)