Mark Dominus (陶敏修)

mjd@pobox.com


Wed, 30 Dec 2020

Benjamin Franklin and the Exercises of Ignatius

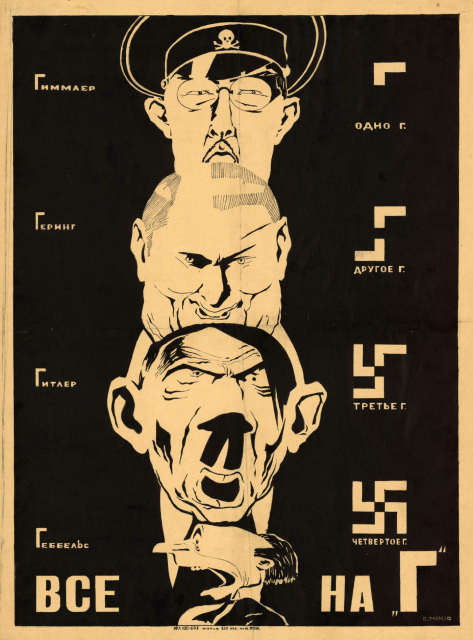

Recently I learned of the Spiritual Exercises of St. Ignatius. Wikipedia says (or quotes, it's not clear):

Morning, afternoon, and evening will be times of the examinations. The morning is to guard against a particular sin or fault, the afternoon is a fuller examination of the same sin or defect. There will be a visual record with a tally of the frequency of sins or defects during each day. In it, the letter 'g' will indicate days, with 'G' for Sunday. Three kinds of thoughts: "my own" and two from outside, one from the "good spirit" and the other from the "bad spirit".

This reminded me very strongly of Chapter 9 of Benjamin Franklin's Autobiography, in which he presents “A Plan for Attaining Moral Perfection”:

My intention being to acquire the habitude of all these virtues, I judg'd it would be well not to distract my attention by attempting the whole at once, but to fix it on one of them at a time… Conceiving then, that, agreeably to the advice of Pythagoras in his Golden Verses, daily examination would be necessary, I contrived the following method for conducting that examination.

I made a little book, in which I allotted a page for each of the virtues. I rul'd each page with red ink, so as to have seven columns, one for each day of the week, marking each column with a letter for the day. I cross'd these columns with thirteen red lines, marking the beginning of each line with the first letter of one of the virtues, on which line, and in its proper column, I might mark, by a little black spot, every fault I found upon examination to have been committed respecting that virtue upon that day.

I determined to give a week's strict attention to each of the virtues successively. Thus, in the first week, my great guard was to avoid every the least offense against Temperance, leaving the other virtues to their ordinary chance, only marking every evening the faults of the day.

So I wondered: was Franklin influenced by the Exercises? I don't know, but it's possible. Wondering about this I consulted the Mighty Internet, and found two items in the Woodstock Letters, a 19th-century Jesuit periodical, wondering the same thing:

The following extract from Franklin’s Autobiography will prove of interest to students of the Exercises: … Did Franklin learn of our method of Particular Examen from some of the old members of the Suppressed Society?

(“Woodstock Letters” Volume XXXIV #2 (Sep 1905) p.311–313)

I can't guess at the main question, but I can correct one small detail: although this part of the Autobiography was written around 1784, the time of which Franklin was writing, when he actually made his little book, was around 1730, well before the suppression of the Society.

The following issue takes up the matter again:

Another proof that Franklin was acquainted with the Exercises is shown from a letter he wrote to Joseph Priestley from London in 1772, where he gives the method of election of the Exercises. …

(“Woodstock Letters” Volume XXXIV #3 (Dec 1905) p.459–461)

Franklin describes making a decision by listing, on a divided sheet of paper, the reasons for and against the proposed action. And then a variation I hadn't seen: balance arguments for and arguments against, and cross out equally-balanced sets of arguments. Franklin even suggests evaluations as fine as matching two arguments for with three slightly weaker arguments against and crossing out all five together.

I don't know what this resembles in the Exercises but it certainly was striking.

[Other articles in category /book] permanent link

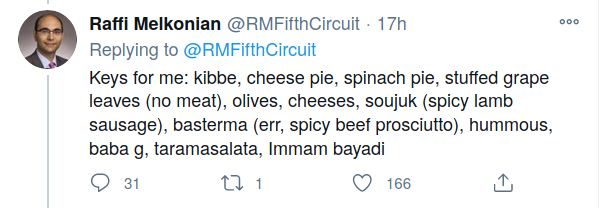

Sat, 26 Dec 2020

This tweet from Raffi Melkonian describes the appetizer plate at his house on Christmas. One item jumped out at me:

basterma (err, spicy beef prosciutto)

I wondered what that was like, and then I realized I do have some idea, because I recognized the word. Basterma is not an originally Armenian word, it's a Turkish loanword, I think canonically spelled pastırma. And from Turkish it made a long journey through Romanian and Yiddish to arrive in English as… pastrami…

For which “spicy beef prosciutto” isn't a bad description at all.

[Other articles in category /lang/etym] permanent link

Tue, 15 Dec 2020

The world is so complicated! It has so many things in it that I could not even have imagined.

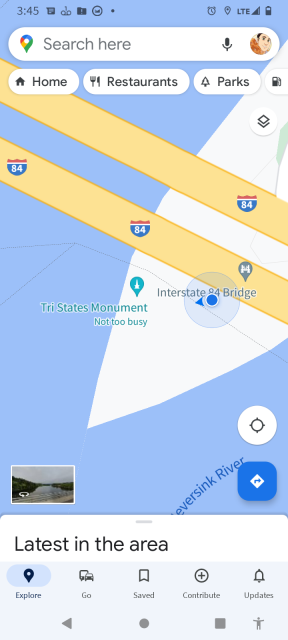

Yesterday I learned that since 1949 there has been a compact between New Mexico and Texas about how to divide up the water in the Pecos River, which flows from New Mexico to Texas, and then into the Rio Grande.

New Mexico is not allowed to use all the water before it gets to Texas. Texas is entitled to receive a certain amount.

There have been disputes about this in the past (the Supreme Court case has been active since 1974), so in 1988 the Supreme Court appointed Neil S. Grigg, a hydraulic engineer and water management expert from Colorado, to be “River Master of the Pecos River”, to mediate the disputes and account for the water. The River Master has a rulebook, which you can read online. I don't know how much Dr. Grigg is paid for this.

In 2014, Tropical Storm Odile dumped a lot of rain on the U.S. Southwest. The Pecos River was flooding, so Texas asked NM to hold onto the Texas share of the water until later. (The rulebook says they can do this.) New Mexico arranged for the water that was owed to Texas to be stored in the Brantley Reservoir.

A few months later Texas wanted their water. "OK," said New Mexico. “But while we were holding it for you in our reservoir, some of it evaporated. We will give you what is left.”

“No,” said Texas, “we are entitled to a certain amount of water from you. We want it all.”

But the rule book says that even though the water was in New Mexico's reservoir, it was Texas's water that evaporated. (Section C5, “Texas Water Stored in New Mexico Reservoirs”.)

[ Addendum 20230528: To my amazement this case has come to my attention again, because legal blogger Adam Unikowsky cited it as one of “the [ten] least significant cases of the decade”. I wrote a brief followup about why I enjoy Unikowsky's Legal Newsletter. ]

[Other articles in category /law] permanent link

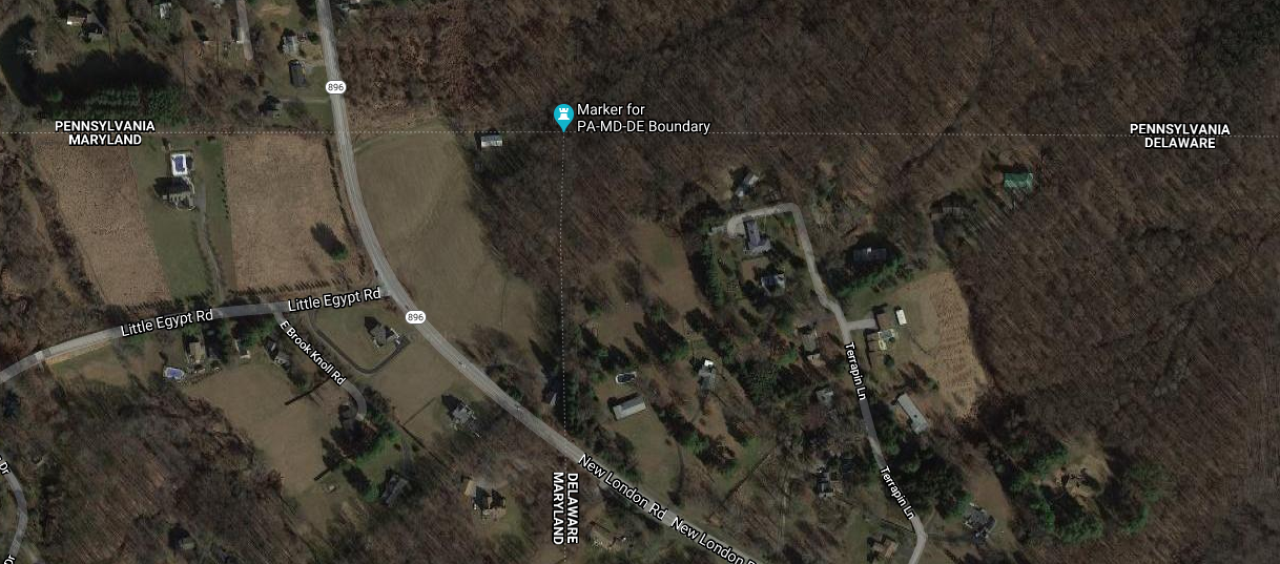

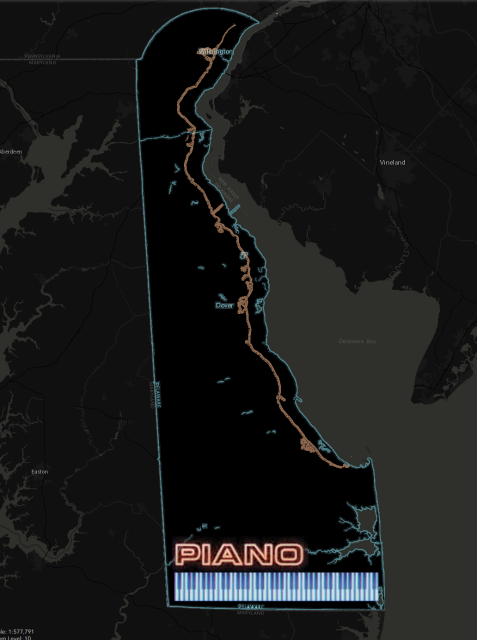

Thu, 10 Dec 2020

I see that the Pennsylvania-Delaware-Maryland triple border is near White Clay Creek State Park, outside of Newark, DE. That sounds nice, so perhaps I will stop by and take a look, and see if there really is white clay in the creek.

I had some free time yesterday, so that is what I did. The creek is pretty. I did not see anything that appeared to be white clay. Of course I did not investigate extensively, or even closely, because the weather was too cold for wading. But the park was beautiful.

There is a walking trail in the park that reaches the tripoint itself. I didn't walk the whole trail. The park entrance is at the other end of the park from the tripoint. After wandering around in the park for a while, I went back to the car, drove to the Maryland end of the park, and left the car on the side of Maryland Route 896 (or maybe Pennsylvania Route 896, it's hard to be sure). Then I cut across private property to the marker.

The marker itself looks like this:

As you see, the Pennsylvania sides of the monument are marked with ‘P’ and the Maryland side with ‘M’. The other ‘M’ is actually in Delaware. This Newark Post article explains why there is no ‘D’:

The marker lists only Maryland and Pennsylvania, not Delaware, because in 1765, Delaware was part of Pennsylvania.

This does not explain the whole thing. The point was first marked in 1765 by Mason and Dixon and at that time Delaware was indeed part of Pennsylvania. But as you see the stone marker was placed in 1849, by which time Delaware had been there for some time. Perhaps the people who installed the new marker were trying to pretend that Delaware did not exist.

[ Addendum 20201218: Daniel Wagner points out that even if the 1849 people were trying to depict things as they were in 1765, the marker is still wrong; it should have three ‘P’ and one ‘M’, not two of each. I did read that the surveyors who originally placed the 1849 marker put it in the wrong spot, and it had to be moved later, so perhaps they were just not careful people. ]

Theron Stanford notes that this point is also the northwestern corner of the Wedge. This sliver of land was east of the Maryland border, but outside the Twelve-Mile Circle and so formed an odd prodtrusion from Pennsylvania. Pennsylvania only relinquished claims to it in 1921 and it is now agreed to be part of Delaware. Were the Wedge still part of Pennsylvania, the tripoint would have been at its southernmost point.

Looking at the map now I see that to get to the marker, I must have driven within a hundred yards of the westmost point of the Twelve-Mile Circle itself, and there is a (somewhat more impressive) marker there. Had I realized at the time I probably would have tried to stop off.

I have some other pictures of the marker if you are not tired of this yet.

[ Addendum 20201211: Tim Heany asks “Is there no sign at the border on 896?” There probably is, and this observation is a strong argument that I parked the car in Maryland. ]

[ Addendum 20201211: Yes, ‘prodtrusion’ was a typo, but it is a gift from the Gods of Dada and should be treasured, not thrown in the trash. ]

[Other articles in category /misc] permanent link

Sat, 21 Nov 2020

Testing for divisibility by 19

[ Previously, Testing for divisibility by 7. ]

A couple of nights ago I was keeping Katara company while she revised an essay on The Scarlet Letter (ugh) and to pass the time one of the things I did was tinker with the divisibility tests for 9 and 11. In the course of this I discovered the following method for divisibility by 19:

Double the last digit and add the next-to-last.

Double that and add the next digit over.

Repeat until you've added the leftmost digit.

The result will be a smaller number which is a multiple of 19 if and only if the original number was.

For example, let's consider, oh, I don't know, 2337. We calculate:

- 7·2+3 = 17

- 17·2 + 3 = 37

- 37·2 + 2 = 76

76 is a multiple of 19, so 2337 was also. But if you're not sure about 76 you can compute 2·6+7 = 19 and if you're not sure about that you need more help than I can provide.

I don't claim this is especially practical, but it is fun, not completely unworkable, and I hadn't seen anything like it before. You can save a lot of trouble by reducing the intermediate values mod 19 when needed. In the example above, after the first step you get to 17, which you can reduce mod 19 to -2, and then the next step is -2·2+3 = -1, and the final step is -1·2+2 = 0.
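The double-and-add rule can be sketched in a few lines of Python. This is only an illustration: the function names `double_and_add` and `divisible_by_19` are made up for the example, and it assumes a non-negative integer input. The second function reduces mod 19 after every step; Python's `%` always returns a value between 0 and 18, so where the example above gets -2 and -1, the code gets 17 and 18.

```python
def double_and_add(n: int) -> int:
    """One pass of the double-and-add rule over the decimal digits of n."""
    digits = [int(c) for c in str(n)]
    acc = digits[-1]                   # start from the last digit
    for d in reversed(digits[:-1]):    # then work leftward
        acc = 2 * acc + d              # double, add the next digit over
    return acc


def divisible_by_19(n: int) -> bool:
    """Same pass, reducing mod 19 after each step to keep the numbers small."""
    acc = 0
    for c in reversed(str(n)):
        acc = (2 * acc + int(c)) % 19
    return acc == 0


print(double_and_add(2337))    # 7·2+3 = 17, 17·2+3 = 37, 37·2+2 = 76
print(divisible_by_19(2337))   # True
```

The reason it works is that 2·10 = 20 leaves remainder 1 on division by 19, so each doubling cancels one factor of 10 in the place value of the digits already consumed.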

Last time I wrote about this Eric Roode sent me a whole compendium of divisibility tests, including one for divisibility by 19. It's a little like mine, but in reverse: group the digits in pairs, left to right; multiply each pair by 5 and then add the next pair. Here's 2337 again:

- 23·5 + 37 = 152

- 1·5 + 52 = 57

Again you can save a lot of trouble by reducing mod 19 before the multiplication. So instead of the first step being 23·5 + 37 you can reduce the 23·5 to 4·5 = 20 and then add the 37 to get 57 right away.
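Here is a similar sketch of the pairs method, again in Python with a made-up function name (`pairs_step`) and assuming a non-negative integer. It forms the pairs from the right-hand end, which is what the worked example does when it splits 152 into 1 and 52. Since 100 = 5·19 + 5, multiplying by 5 stands in for multiplying by 100, and this step does preserve the mod-19 residue.

```python
def pairs_step(n: int) -> int:
    """Multiply each two-digit group by 5 and add the next group."""
    pairs = []
    while n > 0:
        n, pair = divmod(n, 100)   # peel off two digits at a time
        pairs.append(pair)
    acc = 0
    for pair in reversed(pairs):   # most significant group first
        acc = acc * 5 + pair
    return acc


print(pairs_step(2337))   # 23·5 + 37 = 152
print(pairs_step(152))    # 1·5 + 52 = 57
```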

[ Addendum: Of course this was discovered long ago, and in fact Wikipedia mentions it. ]

[ Addendum 20201123: An earlier version of this article claimed that the double-and-add step I described preserves the mod-19 residue. It does not, of course; the doubling step doubles it. It is, however, true that it is zero afterward if and only if it was zero before. ]

[Other articles in category /math] permanent link

[Other articles in category /misc] permanent link

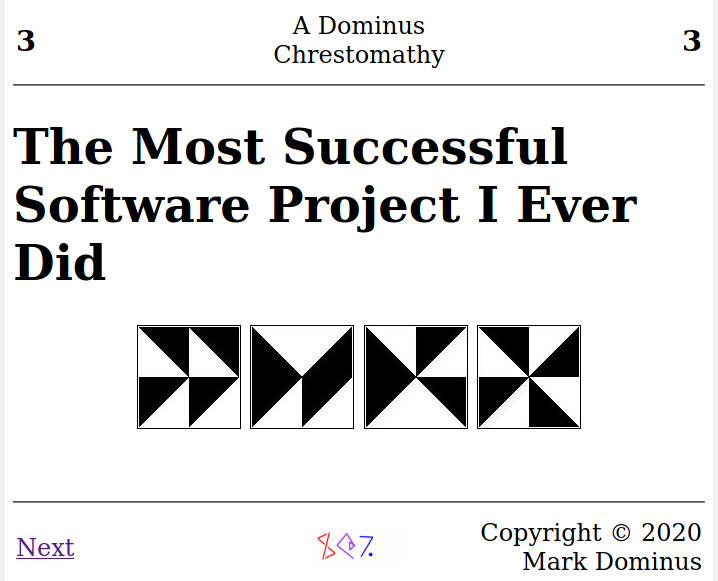

Mon, 02 Nov 2020

A better way to do remote presentations

A few months ago I wrote an article about a strategy I had tried when giving a talk via videochat. Typically:

The slides are presented by displaying them on the speaker's screen, and then sharing the screen image to the audience.

I thought I had done it a better way:

I published the slides on my website ahead of time, and sent the link to the attendees. They had the option to follow along on the web site, or to download a copy and follow along in their own local copy.

This, I thought, had several advantages:

Each audience person can adjust the monitor size, font size, colors to suit their own viewing preferences.

The audience can see the speaker. Instead of using my outgoing video feed to share the slides, I could share my face as I spoke.

With the slides under their control, audience members can go back to refer to earlier material, or skip ahead if they want.

When I brought this up with my co-workers, some of them had a very good objection:

I am too lazy to keep clicking through slides as the talk progresses. I just want to sit back and have it do all the work.

Fair enough! I have done this.

If you package your slides with page-turner, one instance becomes the “leader” and the rest are “followers”. Whenever the leader moves from one slide to the next, a very simple backend server is notified. The followers periodically contact the server to find out what slide they are supposed to be showing, and update themselves accordingly. The person watching the show can sit back and let it do all the work.

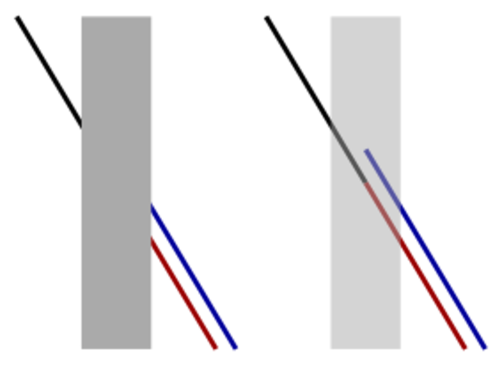

But! If an audience member wants to skip ahead, or go back, that works too. They can use the arrow keys on their keyboard. Their follower instance will stop synchronizing with the leader's slide. Instead, it will display a box in the corner of the page, next to the current slide's page number, that says what slide the leader is looking at. The number in this box updates dynamically, so the audience person always knows how far ahead or behind they are.

| Synchronized | Unsynchronized |

At left, the leader is displaying slide 3, and the follower is there also. When the leader moves on to slide 4, the follower instance will switch automatically.

At right, the follower is still looking at slide 3, but is detached from the leader, who has moved on to slide 007, as you can see in the gray box.

When the audience member has finished their excursion, they can click the gray box and their own instance will immediately resynchronize with the leader and follow along until the next time they want to depart.
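The follower behavior described above amounts to a small state machine. The following Python model is a hypothetical sketch of that logic only; the real front end is JavaScript, and the class and method names here (`Follower`, `poll`, `navigate`, `resync`, `gray_box`) are invented for illustration.

```python
class Follower:
    """Hypothetical model of one follower instance; not page-turner's own code."""

    def __init__(self, page: str):
        self.page = page            # slide this viewer is showing
        self.leader_page = page     # leader position last reported by the server
        self.synchronized = True

    def poll(self, leader_page: str) -> None:
        """Periodic check with the server: follow only while synchronized."""
        self.leader_page = leader_page
        if self.synchronized:
            self.page = leader_page

    def navigate(self, page: str) -> None:
        """Viewer used an arrow key: detach and move independently."""
        self.synchronized = False
        self.page = page

    def resync(self) -> None:
        """Viewer clicked the gray box: rejoin the leader at once."""
        self.synchronized = True
        self.page = self.leader_page

    def gray_box(self):
        """Contents of the corner box, or None while synchronized."""
        return None if self.synchronized else self.leader_page


viewer = Follower("slide003.html")
viewer.poll("slide004.html")        # follows the leader automatically
viewer.navigate("slide003.html")    # goes back; now detached
viewer.poll("slide007.html")        # stays put, but the gray box updates
print(viewer.page, viewer.gray_box())
viewer.resync()
print(viewer.page, viewer.gray_box())
```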

I used this to give a talk to the Charlotte Perl Mongers last week and it worked. Responses were generally positive even though the UI is a little bit rough-looking.

Technical details

The back end is a tiny server, written in Python 3 with Flask. The server is really tiny, only about 60 lines of code. It has only two endpoints: for getting the leader's current page, and for setting it. Setting requires a password.

    @app.route('/get-page')
    def get_page():
        return { "page": app.server.get_pageName() }

    @app.route('/set-page', methods=['POST'])
    def set_page():
        …
        password = request.data["password"]
        page = request.data["page"]
        try:
            app.server.update_pageName(page, password)
        except WrongPassword:
            return failure("Incorrect password"), status.HTTP_401_UNAUTHORIZED
        return { "success": True }
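The excerpt doesn't show the object behind `app.server`. A minimal sketch of what such an object might look like follows; only the names `get_pageName`, `update_pageName`, and `WrongPassword` come from the excerpt, while the class name `PageServer`, its constructor, and its internals are assumptions made for illustration.

```python
class WrongPassword(Exception):
    """Raised when a set-page request carries the wrong password."""


class PageServer:
    """Hypothetical holder for the leader's current page (an assumed design)."""

    def __init__(self, password: str, first_page: str):
        self._password = password
        self._page = first_page

    def get_pageName(self) -> str:
        return self._page

    def update_pageName(self, page: str, password: str) -> None:
        if password != self._password:
            raise WrongPassword()
        self._page = page


server = PageServer(password="swordfish", first_page="slide001.html")
server.update_pageName("slide002.html", "swordfish")
print(server.get_pageName())
```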

The front end runs in the browser. The user downloads the front-end script, pageturner.js, from the same place they are getting the slides. Each slide contains, in its head element:

    <LINK REL='next' HREF='slide003.html' TYPE='text/html; charset=utf-8'>
    <LINK REL='previous' HREF='slide001.html' TYPE='text/html; charset=utf-8'>
    <LINK REL='this' HREF='slide002.html' TYPE='text/html; charset=utf-8'>
    <script language="javascript" src="pageturner.js" >
    </script>

The link elements tell page-turner where to go when someone uses the arrow keys. (This could, of course, be a simple counter, if your slides are simply numbered, but my slide decks often have slide002a.html and the like.) Most of page-turner's code is in pageturner.js, which is a couple hundred lines of JavaScript.

On page switching, a tiny amount of information is stored in the browser window's sessionStorage object. This is so that after the new page is loaded, the program can remember whether it is supposed to be synchronized.

If you want page-turner to display the leader's slide number when the follower is desynchronized, as in the example above, you include an element with class phantom_number. The phantom_click handler resynchronizes the follower:

    <b onclick="phantom_click()" class="phantom_number"></b>

The password for the set-page endpoint is embedded in the pageturner.js file. Normally, this is null, which means that the instance is a follower. If the password is null, page-turner won't try to update set-page. If you want to be the leader, you change

    "password": null,

to

    "password": "swordfish",

or whatever.

Many improvements are certainly possible. It could definitely look a lot better, but I leave that to someone who has interest and aptitude in design.

I know of one serious bug: at present the server doesn't handle SSL, so must be run at an http://… address; if the slides reside at an https://… location, the browser will refuse to make the AJAX requests. This shouldn't be hard to fix.

Source code download

Patches welcome.

License

The software is licensed under the Creative Commons Attribution 4.0 license (CC BY 4.0).

You are free to share (copy and redistribute the software in any medium or format) and adapt (remix, transform, and build upon the material) for any purpose, even commercially, so long as you give appropriate credit, provide a link to the license, and indicate if changes were made. You may do so in any reasonable manner, but not in any way that suggests the licensor endorses you or your use.

Share and enjoy.

[Other articles in category /talk] permanent link

Sun, 18 Oct 2020

Newton's Method and its instability

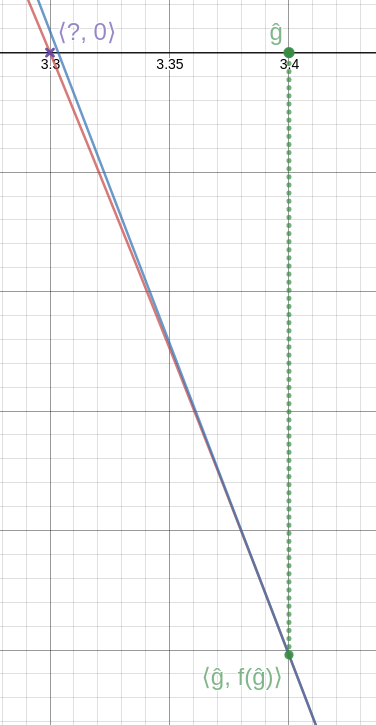

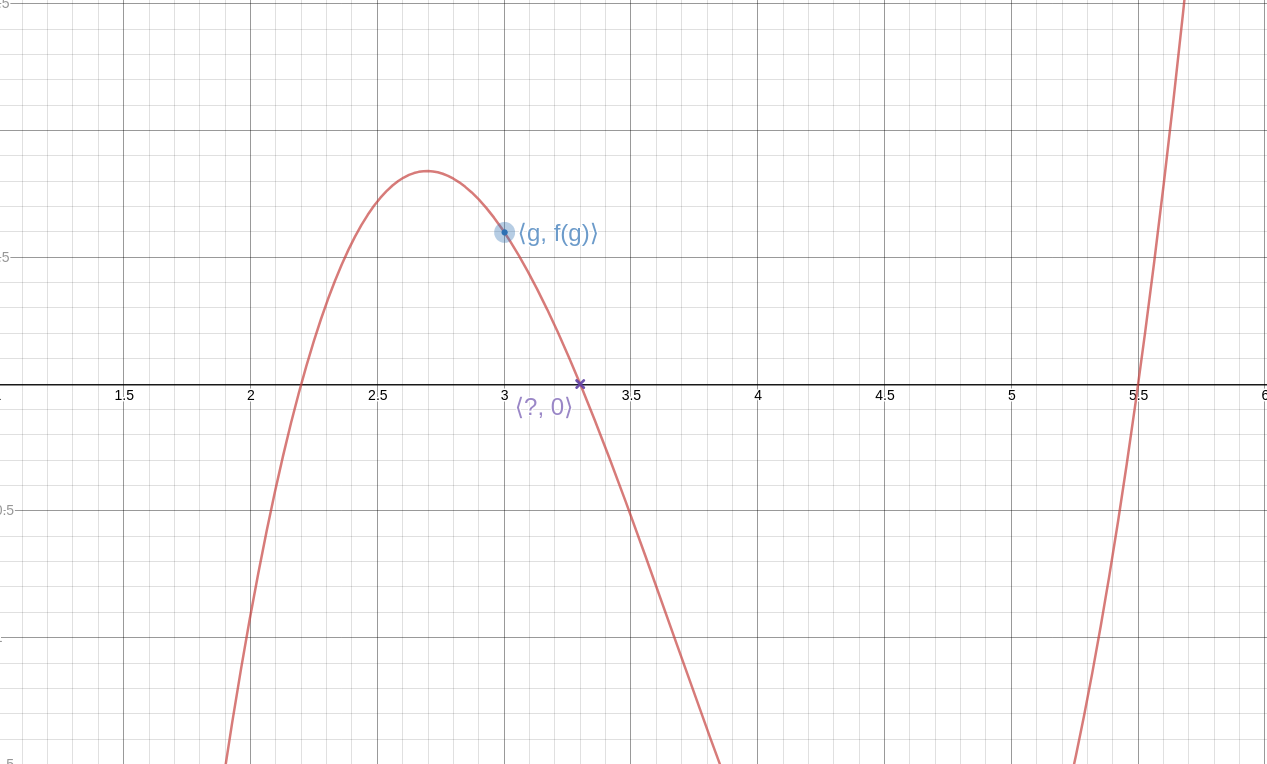

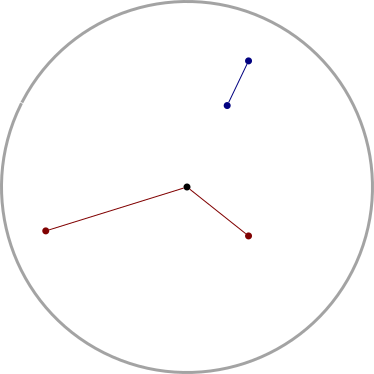

While messing around with Newton's method for last week's article, I built this Desmos thingy:

The red point represents the initial guess; grab it and drag it around, and watch how the later iterations change. Or, better, visit the Desmos site and play with the slider yourself.

(The curve here is !!y = (x-2.2)(x-3.3)(x-5.5)!!; it has a local maximum at around !!2.7!!, and a local minimum at around !!4.64!!.)

Watching the attractor point jump around I realized I was arriving at a much better understanding of the instability of the convergence. Clearly, if your initial guess happens to be near an extremum of !!f!!, the next guess could be arbitrarily far away, rather than a small refinement of the original guess. But even if the original guess is pretty good, the refinement might be near an extremum, and then the following guess will be somewhere random. For example, although !!f!! is quite well-behaved in the interval !![4.3, 4.35]!!, as the initial guess !!g!! increases across this interval, the refined guess !!\hat g!! decreases from !!2.74!! to !!2.52!!, and in between these there is a local maximum that kicks the ball into the weeds. The result is that at !!g=4.3!! the method converges to the largest of the three roots, and at !!g=4.35!!, it converges to the smallest.
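The jump is easy to reproduce numerically. Here is a short Python sketch using the same cubic; the derivative is written out by hand, and the function names and the fixed count of 100 iterations are arbitrary choices for the illustration.

```python
def f(x: float) -> float:
    return (x - 2.2) * (x - 3.3) * (x - 5.5)


def df(x: float) -> float:
    # Expanding f gives x^3 - 11x^2 + 37.51x - 39.93, so f' is:
    return 3 * x**2 - 22 * x + 37.51


def refine(g: float) -> float:
    """One Newton step: follow the tangent at g down to the x-axis."""
    return g - f(g) / df(g)


def newton(g: float, steps: int = 100) -> float:
    for _ in range(steps):
        g = refine(g)
    return g


for guess in (4.3, 4.35):
    print(guess, "-> first refinement", round(refine(guess), 2),
          "-> converges to", round(newton(guess), 6))
```

The two first refinements land on opposite sides of the local maximum near 2.7, which is why the two runs end up at different roots.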

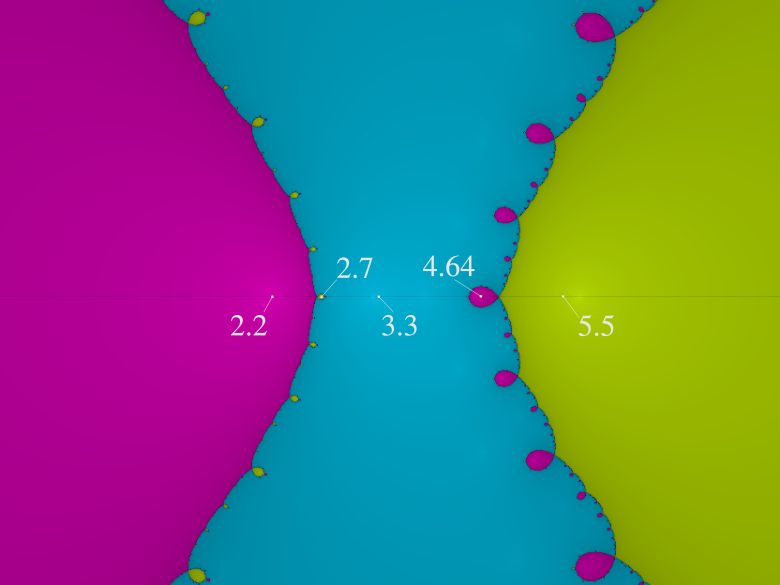

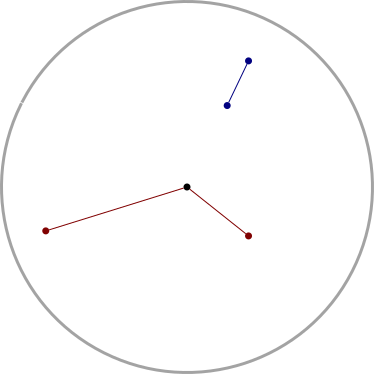

This is where the Newton basins come from:

Here we are considering the function !!f:z\mapsto z^3 -1!! in the complex plane. Zero is at the center, and the obvious root, !!z=1!! is to its right, deep in the large red region. The other two roots are at the corresponding positions in the green and blue regions.

Starting at any red point converges to the !!z=1!! root. Usually, if you start near this root, you will converge to it, which is why all the points near it are red. But some nearish starting points are near an extremum, so that the next guess goes wild, and then the iteration ends up at the green or the blue root instead; these areas are the rows of green and blue leaves along the boundary of the large red region. And some starting points on the boundaries of those leaves kick the ball into one of the other leaves…

Here's the corresponding basin diagram for the polynomial !!y = (x-2.2)(x-3.3)(x-5.5)!! from earlier:

The real axis is the horizontal hairline along the middle of the diagram. The three large regions are the main basins of attraction to the three roots (!!x=2.2, 3.3!!, and !!5.5!!) that lie within them.

But along the boundaries of each region are smaller intrusive bubbles where the iteration converges to a surprising value. A point moving from left to right along the real axis passes through the large pink !!2.2!! region, and then through a very small yellow bubble, corresponding to the values right around the local maximum near !!x=2.7!! where the process unexpectedly converges to the !!5.5!! root. Then things settle down for a while in the blue region, converging to the !!3.3!! root as one would expect, until the value gets close to the local minimum at !!4.64!! where there is a pink bubble because the iteration converges to the !!2.2!! root instead. Then as !!x!! increases from !!4.64!! to !!5.5!!, it leaves the pink bubble and enters the main basin of attraction to !!5.5!! and stays there.
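A rough way to see these bubbles without drawing the picture is to walk along the real axis and record which root each starting point reaches. The sketch below does that on a grid of spacing 0.005; the grid spacing, iteration count, tolerance, and the name `basin` are all arbitrary choices for the illustration, and bubbles narrower than the grid will be missed.

```python
ROOTS = (2.2, 3.3, 5.5)


def f(x: float) -> float:
    return (x - 2.2) * (x - 3.3) * (x - 5.5)


def df(x: float) -> float:
    return 3 * x**2 - 22 * x + 37.51


def basin(x: float, steps: int = 200, tol: float = 1e-9):
    """Return the root Newton's method reaches from x, or None if unclear."""
    for _ in range(steps):
        slope = df(x)
        if slope == 0:      # exactly on an extremum: the tangent never meets the axis
            return None
        x -= f(x) / slope
    return next((r for r in ROOTS if abs(x - r) < tol), None)


# Walk left to right from 2.0 to 6.0 and report each change of basin.
previous = None
for i in range(400, 1201):
    x = i * 0.005
    current = basin(x)
    if current != previous:
        print(f"from x = {x:.3f}: converges to {current}")
        previous = current
```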

If the picture were higher resolution, you would be able to see that the pink bubbles all have tiny yellow bubbles growing out of them (one is at 4.39), and the tiny yellow bubbles have even tinier pink bubbles, and so on forever.

(This was generated by the Online Fractal Generator at usefuljs.net; the labels were added later by me. The labels’ positions are only approximate.)

[ Addendum: Regarding complex points and !!f : z\mapsto z^3-1!! I said “some nearish starting points are near an extremum”. But this isn't right; !!f!! has no extrema. It has an inflection point at !!z=0!! but this doesn't explain the instability along the lines !!\theta = \frac{2k+1}{3}\pi!!. So there's something going on here with the complex derivative that I don't understand yet. ]

[Other articles in category /math] permanent link

Fixed points and attractors, part 3

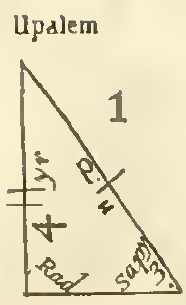

Last week I wrote about a super-lightweight variation on Newton's method, in which one takes this function: $$f_n : \frac ab \mapsto \frac{a+nb}{a+b}$$

or equivalently

$$f_n : x \mapsto \frac{x+n}{x+1}$$

Iterating !!f_n!! for a suitable initial value (say, !!1!!) converges to !!\sqrt n!!:

$$ \begin{array}{rr} x & f_3(x) \\ \hline 1.0 & 2.0 \\ 2.0 & 1.667 \\ 1.667 & 1.75 \\ 1.75 & 1.727 \\ 1.727 & 1.733 \\ 1.733 & 1.732 \\ 1.732 & 1.732 \end{array} $$
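The table can be regenerated with a few lines of Python. This is just a sketch; the helper name `iterate` and its default arguments are choices made for the example.

```python
import math


def f_n(x: float, n: float) -> float:
    return (x + n) / (x + 1)


def iterate(n: float, x: float = 1.0, steps: int = 7) -> list:
    """Return the starting value followed by `steps` iterates of f_n."""
    values = [x]
    for _ in range(steps):
        x = f_n(x, n)
        values.append(x)
    return values


print([round(v, 3) for v in iterate(3)])
print(math.sqrt(3))
```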

Later I remembered that a few months back I wrote a couple of articles about a more general method that includes this as a special case:

The general idea was:

Suppose we were to pick a function !!f!! that had !!\sqrt 2!! as a fixed point. Then !!\sqrt 2!! might be an attractor, in which case iterating !!f!! will get us increasingly accurate approximations to !!\sqrt 2!!.

We can see that !!\sqrt n!! is a fixed point of !!f_n!!:

$$ \begin{align} f_n(\sqrt n) & = \frac{\sqrt n + n}{\sqrt n + 1} \\ & = \frac{\sqrt n(1 + \sqrt n)}{1 + \sqrt n} \\ & = \sqrt n \end{align} $$

And in fact, it is an attracting fixed point, because if !!x = \sqrt n + \epsilon!! then

$$\begin{align} f_n(\sqrt n + \epsilon) & = \frac{\sqrt n + \epsilon + n}{\sqrt n + \epsilon + 1} \\ & = \frac{(\sqrt n + \sqrt n\epsilon + n) - (\sqrt n -1)\epsilon}{\sqrt n + \epsilon + 1} \\ & = \sqrt n - \frac{(\sqrt n -1)\epsilon}{\sqrt n + \epsilon + 1} \end{align}$$

Disregarding the !!\epsilon!! in the denominator we obtain $$f_n(\sqrt n + \epsilon) \approx \sqrt n - \frac{\sqrt n - 1}{\sqrt n + 1} \epsilon $$

The error term !!-\frac{\sqrt n - 1}{\sqrt n + 1} \epsilon!! is strictly smaller in magnitude than the original error !!\epsilon!!, because !!0 < \frac{x-1}{x+1} < 1!! whenever !!x>1!! (here !!x = \sqrt n!!, so this requires !!n > 1!!). This shows that the fixed point !!\sqrt n!! is attractive.
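As a sanity check on the approximation, one can compare the measured shrinkage of a small error against the predicted factor !!-\frac{\sqrt n - 1}{\sqrt n + 1}!!. The Python sketch below does this; the perturbation size `1e-6` and the function names are arbitrary choices for the illustration.

```python
import math


def f_n(x: float, n: float) -> float:
    return (x + n) / (x + 1)


def measured_ratio(n: float, eps: float = 1e-6) -> float:
    """(new error) / (old error) for a starting point eps away from sqrt(n)."""
    root = math.sqrt(n)
    return (f_n(root + eps, n) - root) / eps


def predicted_ratio(n: float) -> float:
    root = math.sqrt(n)
    return -(root - 1) / (root + 1)


for n in (2, 3, 10, 100):
    print(n, measured_ratio(n), predicted_ratio(n))
```

The negative sign means the iterates alternate between overshooting and undershooting, which matches the table for !!f_3!!.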

In the previous articles I considered several different simple functions that had fixed points at !!\sqrt n!!, but I didn't think to consider this unusually simple one. I said at the time:

I had meant to write about Möbius transformations, but that will have to wait until next week, I think.

but I never did get around to the Möbius transformations, and I have long since forgotten what I planned to say. !!f_n!! is an example of a Möbius transformation, and I wonder if my idea was to systematically find all the Möbius transformations that have !!\sqrt n!! as a fixed point, and see what they look like. It is probably possible to automate the analysis of whether the fixed point is attractive, and if not to apply one of the transformations from the previous article to make it attractive.

[Other articles in category /math] permanent link

Tue, 13 Oct 2020

Newton's Method but without calculus — or multiplication

Newton's method goes like this: We have a function !!f!! and we want to solve the equation !!f(x) = 0.!! We guess an approximate solution, !!g!!, and it doesn't have to be a very good guess.

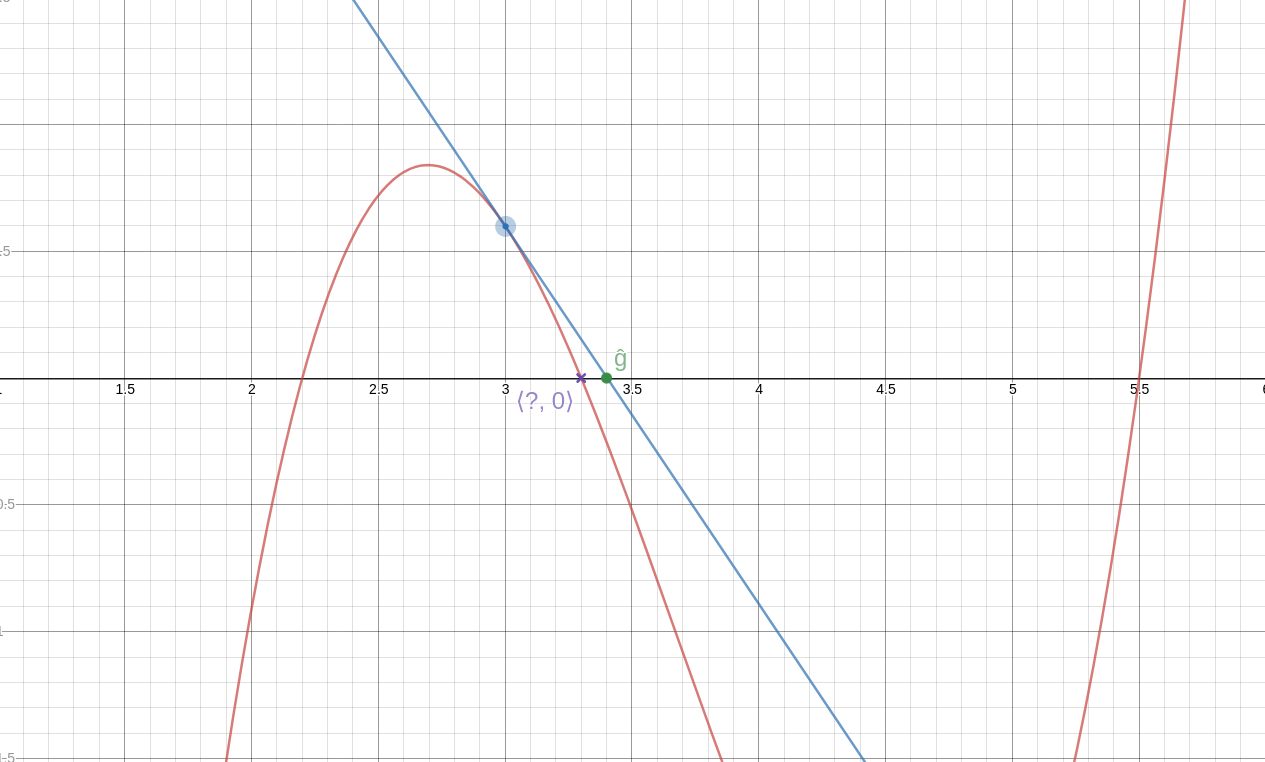

Then we calculate the line !!T!! tangent to !!f!! through the point !!\langle g, f(g)\rangle!!. This line intersects the !!x!!-axis at some new point !!\langle \hat g, 0\rangle!!, and this new value, !!\hat g!!, is a better approximation to the value we're seeking.

Analytically, we have:

$$\hat g = g - \frac{f(g)}{f'(g)}$$

where !!f'(g)!! is the derivative of !!f!! at !!g!!.

We can repeat the process if we like, getting better and better approximations to the solution. (See detail at left; click to enlarge. Again, the blue line is the tangent, this time at !!\langle \hat g, f(\hat g)\rangle!!. As you can see, it intersects the axis very close to the actual solution.)

In general, this requires calculus or something like it, but in any particular case you can avoid the calculus. Suppose we would like to find the square root of 2. This amounts to solving the equation $$x^2-2 = 0.$$ The function !!f!! here is !!x^2-2!!, and !!f'!! is !!2x!!. Once we know (or guess) !!f'!!, no further calculus is needed. The method then becomes: Guess !!g!!, then calculate $$\hat g = g - \frac{g^2-2}{2g}.$$ For example, if our initial guess is !!g = 1.5!!, then the formula above tells us that a better guess is !!\hat g = 1.5 - \frac{2.25 - 2}{3} = 1.4166\ldots!!, and repeating the process with !!\hat g!! produces !!1.41421\mathbf{5686}!!, which is very close to the correct result !!1.41421\mathbf{3562}!!. If we want the square root of a different number !!n!! we just substitute it for the !!2!! in the numerator.
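The arithmetic in this example is easy to check in Python. The function name `newton_sqrt_step` is made up for this sketch.

```python
def newton_sqrt_step(g: float, n: float = 2.0) -> float:
    """One step of Newton's method applied to f(x) = x^2 - n."""
    return g - (g * g - n) / (2 * g)


g = 1.5
for _ in range(2):
    g = newton_sqrt_step(g)
    print(g)    # roughly 1.4166..., then 1.41421568...
```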

This method for extracting square roots works well and requires no calculus. It's called the Babylonian method and while there's no evidence that it was actually known to the Babylonians, it is quite ancient; it was first recorded by Hero of Alexandria about 2000 years ago.

How might this have been discovered if you didn't have calculus? It's actually quite easy. Here's a picture of the number line. Zero is at one end, !!n!! is at the other, and somewhere in between is !!\sqrt n!!, which we want to find.

Also somewhere in between is our guess !!g!!. Say we guessed too low, so !!0 \lt g < \sqrt n!!. Now consider !!\frac ng!!. Since !!g!! is too small to be !!\sqrt n!! exactly, !!\frac ng!! must be too large. (If !!g!! and !!\frac ng!! were both smaller than !!\sqrt n!!, then their product would be smaller than !!n!!, and it isn't.)

Similarly, if the guess !!g!! is too large, so that !!\sqrt n < g!!, then !!\frac ng!! must be less than !!\sqrt n!!. The important point is that !!\sqrt n!! is between !!g!! and !!\frac ng!!. We have narrowed down the interval in which !!\sqrt n!! lies, just by guessing.

Since !!\sqrt n!! lies in the interval between !!g!! and !!\frac ng!! our next guess should be somewhere in this smaller interval. The most obvious thing we can do is to pick the point halfway between !!g!! and !!\frac ng!!. So if we guess the average, $$\frac12\left(g + \frac ng\right),$$ this will probably be much closer to !!\sqrt n!! than !!g!! was.

This average is exactly what Newton's method would have calculated, because $$\frac12\left(g + \frac ng\right) = g - \frac{g^2-n}{2g}.$$

But we were able to arrive at the same computation with no calculus at all — which is why this method could have been, and was, discovered 1700 years before Newton's method itself.

If we're dealing with rational numbers then we might write !!g=\frac ab!!, and then instead of replacing our guess !!g!! with a better guess !!\frac12\left(g + \frac ng\right)!!, we could think of it as replacing our guess !!\frac ab!! with a better guess !!\frac12\left(\frac ab + \frac n{\frac ab}\right)!!. This simplifies to

$$\frac ab \Rightarrow \frac{a^2 + nb^2}{2ab}$$

so that for example, if we are calculating !!\sqrt 2!!, and we start with the guess !!g=\frac32!!, the next guess is $$\frac{3^2 + 2\cdot2^2}{2\cdot3\cdot 2} = \frac{17}{12} = 1.4166\ldots$$ as we saw before. The approximation after that is !!\frac{289+288}{2\cdot17\cdot12} = \frac{577}{408} = 1.41421568\ldots!!. Used this way, the method requires only integer calculations, and converges very quickly.
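In integer form the step is tiny. Here is a Python sketch that keeps the numerator and denominator as a pair of integers; the name `babylonian_step` is invented for the example, and the result is deliberately not reduced to lowest terms.

```python
def babylonian_step(a: int, b: int, n: int):
    """Replace the guess a/b with (a^2 + n*b^2) / (2*a*b), using integers only."""
    return a * a + n * b * b, 2 * a * b


a, b = 3, 2
for _ in range(3):
    a, b = babylonian_step(a, b, 2)
    print(f"{a}/{b} = {a / b}")
```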

But the numerators and denominators increase rapidly, which is good in one sense (it means you get to the accurate approximations quickly) but can also be troublesome because the numbers get big and also because you have to multiply, and multiplication is hard.

But remember how we figured out how to do this calculation in the first place: all we're really trying to do is find a number in between !!g!! and !!\frac ng!!. We did that the first way that came to mind, by averaging. But perhaps there's a simpler operation that we could use instead, something even easier to compute?

Indeed there is! We can calculate the mediant. The mediant of !!\frac ab!! and !!\frac cd!! is simply $$\frac{a+c}{b+d}$$ and it is very easy to show that it lies between !!\frac ab!! and !!\frac cd!!, as we want.

So instead of the relatively complicated $$\frac ab \Rightarrow \frac{a^2 + nb^2}{2ab}$$ operation, we can try the very simple and quick $$\frac ab \Rightarrow \operatorname{mediant}\left(\frac ab, \frac{nb}{a}\right) = \frac{a+nb}{b+a}$$ operation.

Taking !!n=2!! as before, and starting with !!\frac 32!!, this produces:

$$ \frac 32 \Rightarrow\frac{ 7 }{ 5 } \Rightarrow\frac{ 17 }{ 12 } \Rightarrow\frac{ 41 }{ 29 } \Rightarrow\frac{ 99 }{ 70 } \Rightarrow\frac{ 239 }{ 169 } \Rightarrow\frac{ 577 }{ 408 } \Rightarrow\cdots$$

which you may recognize as the convergents of !!\sqrt2!!. These are actually the rational approximations of !!\sqrt 2!! that are optimally accurate relative to the sizes of their denominators. Notice that !!\frac{17}{12}!! and !!\frac{577}{408}!! are in there as they were before, although it takes longer to get to them.
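The mediant step needs only addition and one multiplication by !!n!!. A minimal Python sketch, with the made-up name `mediant_step` and the fraction again held as an integer pair:

```python
def mediant_step(a: int, b: int, n: int):
    """Replace a/b with the mediant of a/b and nb/a, namely (a + n*b) / (a + b)."""
    return a + n * b, a + b


a, b = 3, 2
sequence = []
for _ in range(6):
    a, b = mediant_step(a, b, 2)
    sequence.append((a, b))
print(sequence)
```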

I think it's cool that you can view it as a highly-simplified version of Newton's method.

[ Addendum: An earlier version of the last paragraph claimed:

None of this is a big surprise, because it's well-known that you can get the convergents of !!\sqrt n!! by applying the transformation !!\frac ab\Rightarrow \frac{a+nb}{a+b}!!, starting with !!\frac11!!.

Simon Tatham pointed out that this was mistaken. It's true when !!n=2!!, but not in general. The sequence of fractions that you get does indeed converge to !!\sqrt n!!, but it's not usually the convergents, or even in lowest terms. When !!n=3!!, for example, the numerators and denominators are all even. ]
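The !!n=3!! observation is easy to check by machine. This is a small Python sketch (the function name is hypothetical) that applies !!\frac ab\Rightarrow \frac{a+nb}{a+b}!! starting from !!\frac11!! and keeps the pairs unreduced:

```python
def unreduced_sequence(n, count):
    """Apply a/b -> (a + n*b)/(a + b) `count` times, starting from 1/1.

    The pairs are deliberately not reduced to lowest terms.
    """
    a, b = 1, 1
    pairs = []
    for _ in range(count):
        a, b = a + n * b, a + b
        pairs.append((a, b))
    return pairs


pairs = unreduced_sequence(3, 5)
print(pairs)  # [(4, 2), (10, 6), (28, 16), (76, 44), (208, 120)]
```

Every pair after the initial !!\frac11!! has an even numerator and an even denominator, and the quotients approach !!\sqrt 3 = 1.732\ldots!!.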

[ Addendum: Newton's method as I described it, with the crucial !!g → g - \frac{f(g)}{f'(g)}!! transformation, was actually invented in 1740 by Thomas Simpson. Both Isaac Newton and Joseph Raphson had earlier described only special cases, as had several Asian mathematicians, including Seki Kōwa. ]

[ Previous discussion of convergents: Archimedes and the square root of 3; 60-degree angles on a lattice. A different variation on the Babylonian method. ]

[ Note to self: Take a look at what the AM-GM inequality has to say about the behavior of !!\hat g!!. ]

[ Addendum 20201018: A while back I discussed the general method of picking a function !!f!! that has !!\sqrt 2!! as a fixed point, and iterating !!f!!. This is yet another example of such a function. ]

[Other articles in category /math] permanent link

Wed, 23 Sep 2020

The mystery of the malformed command-line flags

Today a user came to tell me that their command

greenlight submit branch-name --require-review-by skordokott

failed, saying:

**

** unexpected extra argument 'branch-name' to 'submit' command

**

This is surprising. The command looks correct. The branch name is required. The --require-review-by option can be supplied any number of times (including none) and each must have a value provided. Here it is given once and the provided value appears to be skordokott.

The greenlight command is a crappy shell script that pre-validates the arguments before sending them over the network to the real server. I guessed that the crappy shell script parser wanted the branch name last, even though the server itself would have been happy to take the arguments in either order. I suggested that the user try:

greenlight submit --require-review-by skordokott branch-name

But it still didn't work:

**

** unexpected extra argument '--require-review-by' to 'submit' command

**

I dug in to the script and discovered the problem, which was not actually a programming error. The crappy shell script was behaving correctly!

I had written up release notes for the --require-review-by feature. The user had clipboard-copied the option string out of the release notes and pasted it into the shell. So why didn't it work?

In an earlier draft of the release notes, when they were displayed as an HTML page, there would be bad line breaks:

blah blah blah be sure to use the -

-require-review-by option…

or:

blah blah blah the new --

require-review-by feature is…

No problem, I can fix it! I just changed the pair of hyphens (- U+002D) at the beginning of --require-review-by to Unicode nonbreaking hyphens (‑ U+2011). Bad line breaks begone!

But then this hapless user clipboard-copied the option string out of the release notes, including its U+2011 characters. The parser in the script was (correctly) looking for U+002D characters, and didn't recognize --require-review-by as an option flag.

One lesson learned: people will copy-paste stuff out of documentation, and I should be prepared for that.

There are several places to address this. I made the error message more transparent; formerly it would complain only about the first argument, which was confusing because it was the one argument that wasn't superfluous. Now it will say something like

**

** extra branch name '--require-review-by' in 'submit' command

**

**

** extra branch name 'skordokott' in 'submit' command

**

which is more descriptive of what it actually doesn't like.

I could change the nonbreaking hyphens in the release notes back to regular hyphens and just accept the bad line breaks. But I don't want to. Typography is important.

One idea I'm toying with is to have the shell script silently replace all nonbreaking hyphens with regular ones before any further processing. It's a hack, but it seems like it might be a harmless one.
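The real greenlight client is a shell script that I am not reproducing here, so the following is only a Python sketch of the idea, with hypothetical names throughout: replace every U+2011 with U+002D in each argument before any option parsing happens.

```python
NONBREAKING_HYPHEN = "\u2011"  # the character pasted from the release notes
HYPHEN_MINUS = "\u002d"        # the ordinary ASCII hyphen the parser expects


def normalize_hyphens(args):
    """Return a copy of `args` with every U+2011 replaced by U+002D."""
    return [arg.replace(NONBREAKING_HYPHEN, HYPHEN_MINUS) for arg in args]


# Simulated argument list as it would arrive after a copy-paste.
pasted = ["submit", "branch-name", "\u2011\u2011require-review-by", "skordokott"]
cleaned = normalize_hyphens(pasted)
print(cleaned)
```

The replacement is applied to every argument, not only to ones that look like option flags. A branch name or reviewer name that legitimately contained U+2011 would be altered too, which is the sense in which this is a hack.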

So many weird things can go wrong. This computer stuff is really complicated. I don't know how anyone gets anything done.

[ Addendum: A reader suggests that I could have fixed the line breaks with CSS. But the release notes were being presented as a Slack “Post”, which is essentially a WYSIWYG editor for creating shared documents. It presents the document in a canned HTML style, and as far as I know there's no way to change the CSS it uses. Similarly, there's no way to insert raw HTML elements, so no way to change the style per-element. ]

[Other articles in category /prog/bug] permanent link

Sun, 13 Sep 2020

The front page of NPR.org today has this headline:

It contains this annoying phrase:

The race for Joni Ernst's seat could help determine control of the Senate.

Someone has really committed to hedging.

I would have said that the race would certainly help determine control of the Senate, or that it could determine control of the Senate. The statement as written makes an extremely weak claim.

The article itself doesn't include this phrase. This is why reporters hate headline-writers.

[Other articles in category /lang] permanent link

Fri, 11 Sep 2020

Historical diffusion of words for “eggplant”

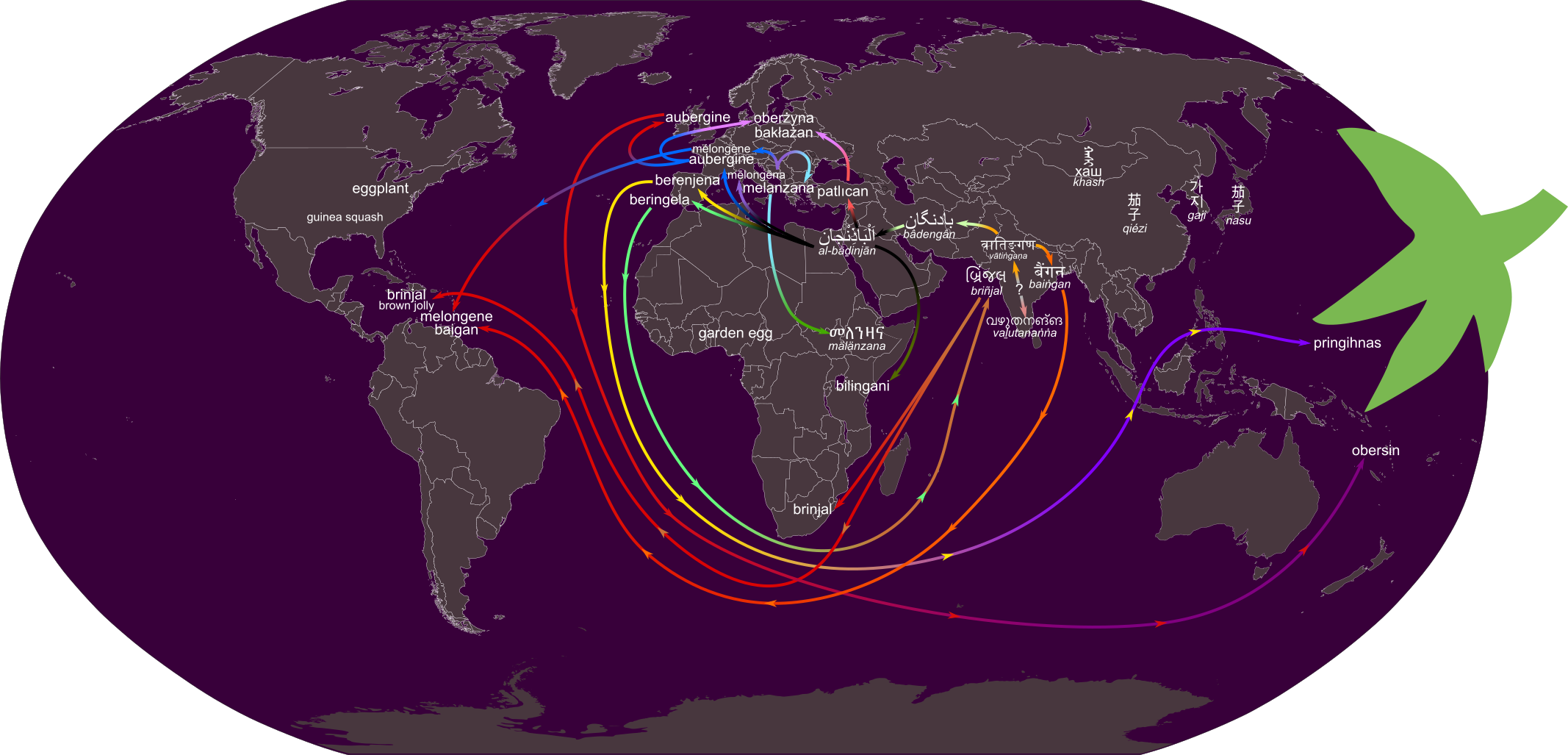

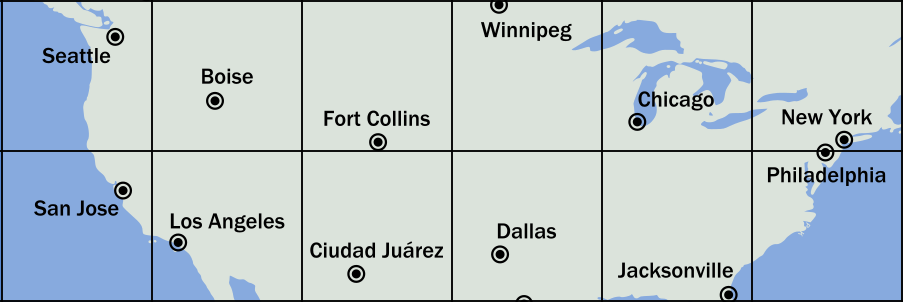

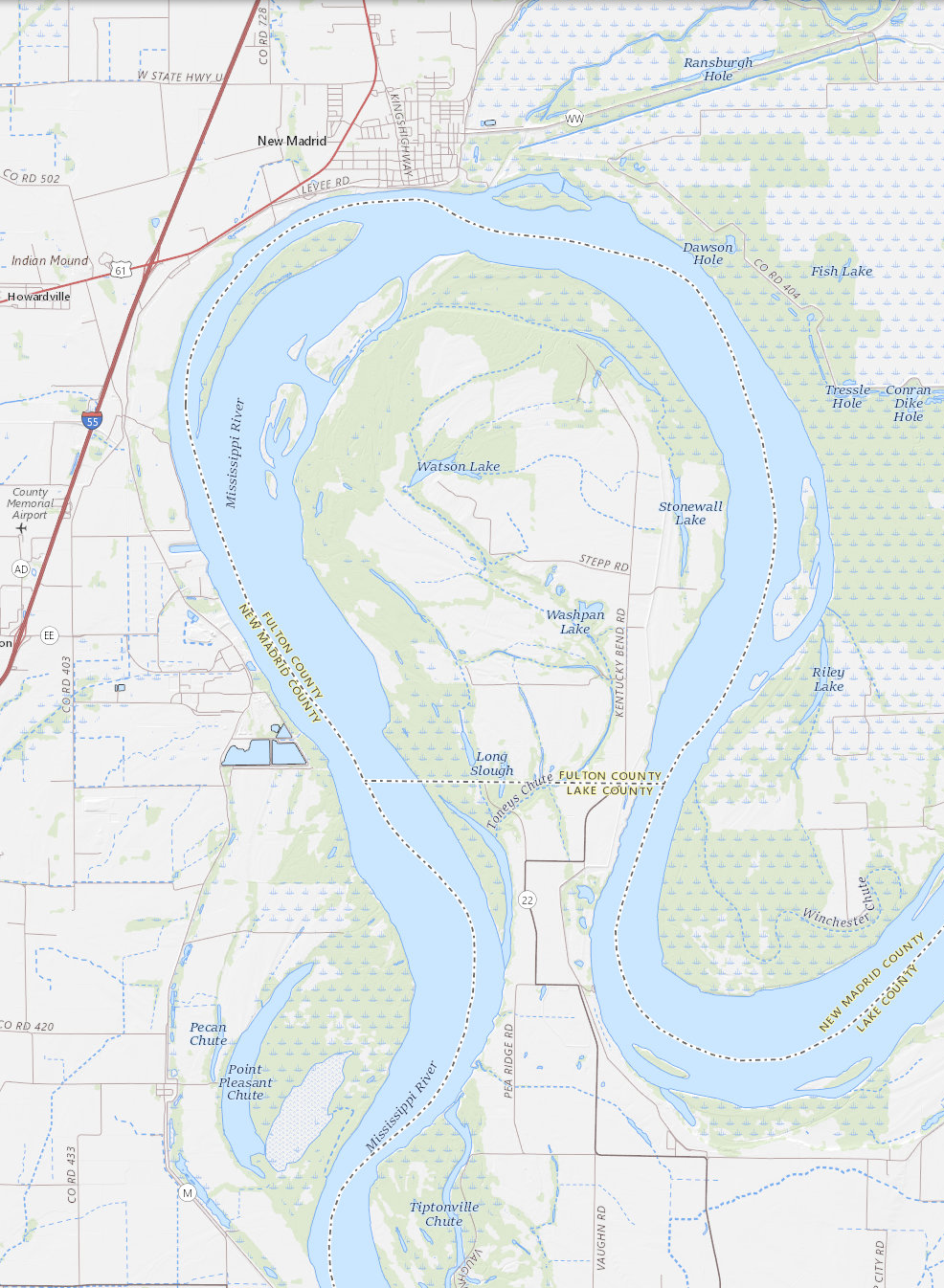

In reply to my recent article about the history of words for “eggplant”, a reader, Lydia, sent me this incredible map they had made that depicts the history and the diffusion of the terms:

Lydia kindly gave me permission to share their map with you. You can see the early Dravidian term vaḻutanaṅṅa in India, and then the arrows show it travelling westward across Persia and Arabia, from there to East Africa and Europe, and from there to the rest of the world, eventually making its way back to India as brinjal before setting out again on yet more voyages.

Thank you very much, Lydia! And Happy Diada Nacional de Catalunya, everyone!

[Other articles in category /lang/etym] permanent link

A maxim for conference speakers

The only thing worse than re-writing your talk the night before is writing your talk the night before.

[Other articles in category /talk] permanent link

Fri, 28 Aug 2020

This morning Katara asked me why we call these vegetables “zucchini” and “eggplant” but the British call them “courgette” and “aubergine”.

I have only partial answers, and the more I look, the more complicated they get.

Zucchini

The zucchini is a kind of squash, which means that in Europe it is a post-Columbian import from the Americas.

“Squash” itself is from Narragansett, and is not related to the verb “to squash”. So I speculate that what happened here was:

American colonists had some name for the zucchini, perhaps derived from Narragansett or another Algonquian language, or perhaps just “green squash” or “little gourd” or something like that. A squash is not exactly a gourd, but it's not exactly not a gourd either, and the Europeans seem to have accepted it as a gourd (see below).

When the vegetable arrived in France, the French named it courgette, which means “little gourd”. (Courge = “gourd”.) Then the Brits borrowed “courgette” from the French.

Sometime much later, the Americans changed the name to “zucchini”, which also means “little gourd”, this time in Italian. (Zucca = “gourd”.)

The Big Dictionary has citations for “zucchini” only back to 1929, and “courgette” to 1931. What was this vegetable called before that? Why did the Americans start calling it “zucchini” instead of whatever they called it before, and why “zucchini” and not “courgette”? If it was brought in by Italian immigrants, one might expect the word to have appeared earlier; the mass immigration of Italians into the U.S. was over by 1920.

Following up on this thought, I found a mention of it in Cuniberti, J. Lovejoy., Herndon, J. B. (1918). Practical Italian recipes for American kitchens, p. 18: “Zucchini are a kind of small squash for sale in groceries and markets of the Italian neighborhoods of our large cities.” Note that Cuniberti explains what a zucchini is, rather than saying something like “the zucchini is sometimes known as a green summer squash” or whatever, which suggests that she thinks it will not already be familiar to the readers. It looks as though the story is: Colonial Europeans in North America stopped eating the zucchini at some point, and forgot about it, until it was re-introduced in the early 20th century by Italian immigrants.

When did the French start calling it courgette? When did the Italians start calling it zucchini? Is the Italian term a calque of the French, or vice versa? Or neither? And since courge (and gourd) are evidently descended from Latin cucurbita, where did the Italians get zucca?

So many mysteries.

Eggplant

Here I was able to get better answers. Unlike squash, the eggplant is native to Eurasia and has been cultivated in western Asia for thousands of years.

The puzzling name “eggplant” is because the fruit, in some varieties, is round, white, and egg-sized.

The term “eggplant” was then adopted for other varieties of the same plant where the fruit is entirely un-egglike.

“Eggplant” in English goes back only to 1767. What was it called before that? Here the OED was more help. It gives this quotation, from 1785:

When this [sc. its fruit] is white, it has the name of Egg-Plant.

I inferred that the preceding text described it under a better-known name, so, thanks to the Wonders of the Internet, I looked up the original source:

Melongena or Mad Apple is also of this genus [solanum]; it is cultivated as a curiosity for the largeness and shape of its fruit; and when this is white, it has the name of Egg Plant; and indeed it then perfectly resembles a hen's egg in size, shape, and colour.

(Jean-Jacques Rousseau, Letters on the Elements of Botany, tr. Thos. Martyn 1785. Page 202. (Wikipedia))

The most common term I've found that was used before “egg-plant” itself is “mad apple”. The OED has cites from the late 1500s that also refer to it as a “rage apple”, which is a calque of French pomme de rage. I don't know how long it was called that in French. I also found “Malum Insanam” in the 1736 Lexicon technicum of John Harris, entry “Bacciferous Plants”.

Melongena was used as a scientific genus name around 1700 and later adopted by Linnaeus in 1753. I can't find any sign that it was used in English colloquial, non-scientific writing. Its etymology is a whirlwind trip across the globe. Here's what the OED says about it:

The neo-Latin scientific term is from medieval Latin melongena

Latin melongena is from medieval Greek μελιντζάνα (/melintzána/), a variant of Byzantine Greek ματιζάνιον (/matizánion/) probably inspired by the common Greek prefix μελανο- (/melano-/) “dark-colored”. (Akin to “melanin” for example.)

Greek ματιζάνιον is from Arabic bāḏinjān (بَاذِنْجَان). (The -ιον suffix is a diminutive.)

Arabic bāḏinjān is from Persian bādingān (بادنگان)

Persian bādingān is from Sanskrit and Pali vātiṅgaṇa (भण्टाकी)

Sanskrit vātiṅgaṇa is from Dravidian (for example, Malayalam is vaḻutana (വഴുതന); the OED says “compare… Tamil vaṟutuṇai”, which I could not verify.)

Wowzers.

Okay, now how do we get to “aubergine”? The list above includes Arabic bāḏinjān, and this, like many Arabic words, was borrowed into Spanish, as berengena or alberingena. (The “al-” prefix is Arabic for “the” and is attached to many such borrowings, for example “alcohol” and “alcove”.)

From alberingena it's a short step to French aubergine. The OED entry for aubergine doesn't mention this. It claims that aubergine is from “Spanish alberchigo, alverchiga, ‘an apricocke’”. I think it's clear that the OED blew it here, and I think this must be the first time I've ever been confident enough to say that. Even the OED itself supports me on this: the note at the entry for brinjal says: “cognate with the Spanish alberengena is the French aubergine”. Okay then. (Brinjal, of course, is a contraction of berengena, via Portuguese bringella.)

Sanskrit vātiṅgaṇa is also the ultimate source of modern Hindi baingan, as in baingan bharta.

(Wasn't there a classical Latin word for eggplant? If so, what was it? Didn't the Romans eat eggplant? How do you conquer the world without any eggplants?)

[ Addendum: My search for antedatings of “zucchini” turned up some surprises. For example, I found what seemed to be many mentions in an 1896 history of Sicily. These turned out not to be about zucchini at all, but rather the computer's pathetic attempts at recognizing the word Σικελίαν. ]

[ Addendum 20200831: Another surprise: Google Books and Hathi Trust report that “zucchini” appears in the 1905 Collier Modern Eclectic Dictionary of the English Language, but it's an incredible OCR failure for the word “acclamation”. ]

[ Addendum 20200911: A reader, Lydia, sent me a beautiful map showing the evolution of the many words for ‘eggplant’. Check it out. ]

[ Addendum 20231021: The Japanese kabocha squash (カボチャ) is probably so-called because it was brought by the Portuguese from Camboja, Cambodia. ]

[ Addendum 20231127: A while back I looked into the question of whether the Romans had eggplants, and it seems that the consensus was that they did not! Incredible. How much longer would their empire have lasted if they had been able to draw on the power of the eggplant? This probably goes some way to explaining why the Byzantine Empire lasted so much longer than the Western Empire. ]

[Other articles in category /lang/etym] permanent link

Mon, 24 Aug 2020

Ripta Pasay brought to my attention the English cookbook Liber Cure Cocorum, published sometime between 1420 and 1440. The recipes are conveyed as poems:

Conyngus in gravé.

Sethe welle þy conyngus in water clere,

After, in water colde þou wasshe hom sere,

Take mylke of almondes, lay hit anone

With myed bred or amydone;

Fors hit with cloves or gode gyngere;

Boyle hit over þo fyre,

Hew þo conyngus, do hom þer to,

Seson hit with wyn or sugur þo.

(Original plus translation by Cindy Renfrow)

“Conyngus” is a rabbit; English has the cognate “coney”.

If you have read my article on how to read Middle English you won't have much trouble with this. There are a few obsolete words: sere means “separately”; myed bred is bread crumbs, and amydone is starch.

I translate it (very freely) as follows:

Rabbit in gravy.

Boil well your rabbits in clear water,

then wash them separately in cold water.

Take almond milk, put it on them

with grated bread or starch;

stuff them with cloves or good ginger;

boil them over the fire,

cut them up,

and season with wine or sugar.

Thanks, Ripta!

[Other articles in category /food] permanent link

Fri, 21 Aug 2020

Mixed-radix fractions in Bengali

[ Previously, Base-4 fractions in Telugu. ]

I was really not expecting to revisit this topic, but a couple of weeks ago, looking for something else, I happened upon the following curiously-named Unicode characters:

U+09F4 (e0 a7 b4): BENGALI CURRENCY NUMERATOR ONE [৴]

U+09F5 (e0 a7 b5): BENGALI CURRENCY NUMERATOR TWO [৵]

U+09F6 (e0 a7 b6): BENGALI CURRENCY NUMERATOR THREE [৶]

U+09F7 (e0 a7 b7): BENGALI CURRENCY NUMERATOR FOUR [৷]

U+09F8 (e0 a7 b8): BENGALI CURRENCY NUMERATOR ONE LESS THAN THE DENOMINATOR [৸]

U+09F9 (e0 a7 b9): BENGALI CURRENCY DENOMINATOR SIXTEEN [৹]

Oh boy, more base-four fractions! What on earth does “NUMERATOR ONE LESS THAN THE DENOMINATOR” mean and how is it used?

An explanation appears in the Unicode proposal to add the related “ganda” sign:

U+09FB (e0 a7 bb): BENGALI GANDA MARK [৻]

(Anshuman Pandey, “Proposal to Encode the Ganda Currency Mark for Bengali in the BMP of the UCS”, 2007.)

Pandey explains: prior to decimalization, the Bengali rupee (rupayā) was divided into sixteen ānā. Standard Bengali numerals were used to write rupee amounts, but there was a special notation for ānā. The sign ৹ always appears, and means sixteenths. Prefixed to this is a numerator symbol, which goes ৴, ৵, ৶, ৷ for 1, 2, 3, 4. So for example, 3 ānā is written ৶৹, which means !!\frac3{16}!!.

The larger fractions are made by adding the numerators, grouping by 4's:

| | ৴ | ৵ | ৶ | 1, 2, 3 |

| ৷ | ৷৴ | ৷৵ | ৷৶ | 4, 5, 6, 7 |

| ৷৷ | ৷৷৴ | ৷৷৵ | ৷৷৶ | 8, 9, 10, 11 |

| ৸ | ৸৴ | ৸৵ | ৸৶ | 12, 13, 14, 15 |

except that three fours (৷৷৷) is too many, and is abbreviated by the intriguing NUMERATOR ONE LESS THAN THE DENOMINATOR sign ৸ when more than 11 ānā are being written.

Historically, the ānā was divided into 20 gaṇḍā; the gaṇḍā amounts are written with standard (Bengali decimal) numerals instead of the special-purpose base-4 numerals just described. The gaṇḍā sign ৻ precedes the numeral, so 4 gaṇḍā (!!\frac15!! ānā) is written as ৻৪. (The ৪ is not an 8, it is a four.)

What if you want to write 17 rupees plus !!9\frac15!! ānā? That is 17 rupees plus 9 ānā plus 4 gaṇḍā. If I am reading this report correctly, you write it this way:

১৭৷৷৴৻৪

This breaks down into three parts as ১৭ ৷৷৴ ৻৪. The ১৭ is a 17, for 17 rupees; the ৷৷৴ means 9 ānā (the denominator ৹ is left implicit) and the ৻৪ means 4 gaṇḍā, as before. There is no separator between the rupees and the ānā. But there doesn't need to be, because different numerals are used! An analogous imaginary system in Latin script would be to write the amount as

17dda¢4

where the ‘17’ means 17 rupees, the ‘dda’ means 4+4+1=9 ānā, and the ¢4 means 4 gaṇḍā. There is no trouble seeing where the ‘17’ ends and the ‘dda’ begins.

Pandey says there was an even smaller unit, the kaṛi. It was worth ¼ of a gaṇḍā and was again written with the special base-4 numerals, but as if the gaṇḍā had been divided into 16. A complete amount might be written with decimal numerals for the rupees, base-4 numerals for the ānā, decimal numerals again for the gaṇḍā, and base-4 numerals again for the kaṛi. No separators are needed, because each section is written with symbols that are different from the ones in the adjoining sections.
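The rupee / ānā / gaṇḍā part of the notation can be sketched in Python as follows. This reflects only my reading of the scheme as described above. The function names are hypothetical, the kaṛi is left out, and I assume between 1 and 15 ānā and between 1 and 19 gaṇḍā, because the text above does not say how a zero in either position is written.

```python
BENGALI_DIGITS = "\u09e6\u09e7\u09e8\u09e9\u09ea\u09eb\u09ec\u09ed\u09ee\u09ef"  # digits 0-9
UNITS = ["", "\u09f4", "\u09f5", "\u09f6"]        # 0, 1, 2, 3 odd ana: (none), ৴, ৵, ৶
FOURS = ["", "\u09f7", "\u09f7\u09f7", "\u09f8"]  # 0, 4, 8, 12 ana: (none), ৷, ৷৷, ৸
GANDA_MARK = "\u09fb"                              # ৻


def bengali_decimal(n):
    """Write a non-negative integer with Bengali decimal digits."""
    return "".join(BENGALI_DIGITS[int(d)] for d in str(n))


def ana_numeral(ana):
    """Base-4 style numerator for 1..15 ana; the denominator sign is left implicit."""
    if not 1 <= ana <= 15:
        raise ValueError("this sketch only handles 1..15 ana")
    fours, units = divmod(ana, 4)
    return FOURS[fours] + UNITS[units]


def format_amount(rupees, ana, ganda):
    """Concatenate rupees, ana and ganda with no separators (assumes 1..19 ganda)."""
    if not 1 <= ganda <= 19:
        raise ValueError("this sketch only handles 1..19 ganda")
    return bengali_decimal(rupees) + ana_numeral(ana) + GANDA_MARK + bengali_decimal(ganda)


amount = format_amount(17, 9, 4)
print(amount)
```

With 17 rupees, 9 ānā and 4 gaṇḍā this reproduces the string ১৭৷৷৴৻৪ from the example above.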

[Other articles in category /math] permanent link

Thu, 06 Aug 2020

Recommended reading: Matt Levine’s Money Stuff

Lately my favorite read has been Matt Levine’s Money Stuff articles from Bloomberg News. Bloomberg's web site requires a subscription but you can also get the Money Stuff articles as an occasional email. It arrives at most once per day.

Almost every issue teaches me something interesting I didn't know, and almost every issue makes me laugh.

Example of something interesting: a while back it was all over the news that oil prices were negative. Levine was there to explain what was really going on and why. Some people manage index funds. They are not trying to beat the market, they are trying to match the index. So they buy derivatives that give them the right to buy oil futures contracts at whatever the day's closing price is. But say they already own a bunch of oil contracts. If they can get the close-of-day price to dip, then their buy-at-the-end-of-the-day contracts will all be worth more because the counterparties have contracted to buy at the dip price. How can you get the price to dip by the end of the day? Easy, unload 20% of your contracts at a bizarre low price, to make the value of the other 80% spike… it makes my head swim.

But there are weird second- and third-order effects too. Normally if you invest fifty million dollars in oil futures speculation, there is a worst case: the price of oil goes to zero and you lose your fifty million dollars. But for these derivative futures, the price could in theory become negative, and for a short time in April, it did:

If the ETF’s oil futures go to -$37.63 a barrel, as some futures did recently, the ETF investors lose $20—their entire investment—and, uh, oops? The ETF runs out of money when the futures hit zero; someone else has to come up with the other $37.63 per barrel.

One article I particularly remember discussed the kerfuffle a while back concerning whether Kelly Loeffler improperly traded stocks on classified coronavirus-related intelligence that she received in her capacity as a U.S. senator. I found Levine's argument persuasive:

“I didn’t dump stocks, I am a well-advised rich person, someone else manages my stocks, and they dumped stocks without any input from me” … is a good defense! It’s not insider trading if you don’t trade; if your investment manager sold your stocks without input from you then you’re fine. Of course they could be lying, but in context the defense seems pretty plausible. (Kelly Loeffler, for instance, controversially dumped about 0.6% of her portfolio at around the same time, which sure seems like the sort of thing an investment adviser would do without any input from her? You could call your adviser and say “a disaster is coming, sell everything!,” but calling them to say “a disaster is coming, sell a tiny bit!” seems pointless.)

He contrasted this case with that of Richard Burr, who, unlike Loeffler, remains under investigation. The discussion was factual and informative, unlike what you would get from, say, Twitter, or even Metafilter, where the response was mostly limited to variations on “string them up” and “eat the rich”.

Money Stuff is also very funny. Today’s letter discusses a disclosure filed recently by Nikola Corporation:

More impressive is that Nikola’s revenue for the second quarter was very small, just $36,000. Most impressive, though, is how they earned that revenue:

During the three months ended June 30, 2020 and 2019 the Company recorded solar revenues of $0.03 million and $0.04 million, respectively, for the provision of solar installation services to the Executive Chairman, which are billed on time and materials basis. …

“Solar installation projects are not related to our primary operations and are expected to be discontinued,” says Nikola, but I guess they are doing one last job, specifically installing solar panels at founder and executive chairman Trevor Milton’s house? It is a $13 billion company whose only business so far is doing odd jobs around its founder’s house.

A couple of recent articles that cracked me up discussed clueless day-traders pushing up the price of Hertz stock after Hertz had declared bankruptcy, and how Hertz diffidently attempted to get the SEC to approve a new stock issue to cater to these idiots. (The SEC said no.)

One recurring theme in the newsletter is “Everything is Securities Fraud”. This week, Levine asks:

Is it securities fraud for a public company to pay bribes to public officials in exchange for lucrative public benefits?

Of course you'd expect that the executives would be criminally charged, as they have been. But is there a cause for the company’s shareholders to sue? If you follow the newsletter, you know what the answer will be:

Oh absolutely…

because Everything is Securities Fraud.

Still it is a little weird. Paying bribes to get public benefits is, you might think, the sort of activity that benefits shareholders. Sure they were deceived, and sure the stock price was too high because investors thought the company’s good performance was more legitimate and sustainable than it was, etc., but the shareholders are strange victims. In effect, executives broke the law in order to steal money for the shareholders, and when the shareholders found out they sued? It seems a little ungrateful?

I recommend it.

Levine also has a Twitter account but it is mostly just links to his newsletter articles.

[ Addendum 20200821: Unfortunately, just a few days after I posted this, Matt Levine announced that his newsletter would be on hiatus for a few months, as he would be on paternity leave. Sorry! ]

[ Addendum 20210207: Money Stuff is back. ]

[ Addendum 20221204: This article about the balance sheet circulated by FTX in the hours before its bankruptcy may be my favorite of all time. ]

[Other articles in category /ref] permanent link

Wed, 05 Aug 2020

A maybe-interesting number trick?

I'm not sure if this is interesting, trivial, or both. You decide.

Let's divide the numbers from 1 to 30 into the following six groups:

| A | 1 | 2 | 4 | 8 | 16 |

| B | 3 | 6 | 12 | 17 | 24 |

| C | 5 | 9 | 10 | 18 | 20 |

| D | 7 | 14 | 19 | 25 | 28 |

| E | 11 | 13 | 21 | 22 | 26 |

| F | 15 | 23 | 27 | 29 | 30 |

Choose any two rows. Choose a number from each row, and multiply them mod 31. (That is, multiply them, and if the product is 31 or larger, divide it by 31 and keep the remainder.)

Regardless of which two numbers you chose, the result will always be in the same row. For example, any two numbers chosen from rows B and D will multiply to yield a number in row E. If both numbers are chosen from row F, their product will always appear in row A.
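If you would rather not check all the products by hand, here is a brute-force Python sketch. The row data is copied from the table above; the helper names are my own.

```python
ROWS = {
    "A": [1, 2, 4, 8, 16],
    "B": [3, 6, 12, 17, 24],
    "C": [5, 9, 10, 18, 20],
    "D": [7, 14, 19, 25, 28],
    "E": [11, 13, 21, 22, 26],
    "F": [15, 23, 27, 29, 30],
}


def row_of(x):
    """Return the label of the row that contains x."""
    for label, members in ROWS.items():
        if x in members:
            return label
    raise ValueError(f"{x} is not in any row")


def product_rows(r, s):
    """Set of rows reached by multiplying one number from row r by one from row s, mod 31."""
    return {row_of(x * y % 31) for x in ROWS[r] for y in ROWS[s]}


# Print the row-by-row "multiplication table"; each cell should hold a single label.
for r in ROWS:
    print(r, " ".join("".join(sorted(product_rows(r, s))) for s in ROWS))
```

Each cell of the printed table contains exactly one letter, which is the claim above.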

[Other articles in category /math] permanent link

Sun, 02 Aug 2020

Gulliver's Travels (1726), Part III, chapter 2:

I observed, here and there, many in the habit of servants, with a blown bladder, fastened like a flail to the end of a stick, which they carried in their hands. In each bladder was a small quantity of dried peas, or little pebbles, as I was afterwards informed. With these bladders, they now and then flapped the mouths and ears of those who stood near them, of which practice I could not then conceive the meaning. It seems the minds of these people are so taken up with intense speculations, that they neither can speak, nor attend to the discourses of others, without being roused by some external action upon the organs of speech and hearing… . This flapper is likewise employed diligently to attend his master in his walks, and upon occasion to give him a soft flap on his eyes; because he is always so wrapped up in cogitation, that he is in manifest danger of falling down every precipice, and bouncing his head against every post; and in the streets, of justling others, or being justled himself into the kennel.

When I first told Katara about this, several years ago, instead of “the minds of these people are so taken up with intense speculations” I said they were obsessed with their phones.

Now the phones themselves have become the flappers:

Y. Tung and K. G. Shin, "Use of Phone Sensors to Enhance Distracted Pedestrians’ Safety," in IEEE Transactions on Mobile Computing, vol. 17, no. 6, pp. 1469–1482, 1 June 2018, doi: 10.1109/TMC.2017.2764909.

Our minds are not even taken up with intense speculations, but with Instagram. Dean Swift would no doubt be disgusted.

[Other articles in category /book] permanent link

Sat, 01 Aug 2020

How are finite fields constructed?

Here's another recent Math Stack Exchange answer I'm pleased with.

I know this question has been asked many times and there is good information out there which has clarified a lot for me but I still do not understand how the addition and multiplication tables for !!GF(4)!! is constructed?

I've seen [links] but none explicity explain the construction and I'm too new to be told "its an extension of !!GF(2)!!"

The only “reasonable” answer here is “get an undergraduate abstract algebra text and read the chapter on finite fields”. Because come on, you can't expect some random stranger to appear and write up a detailed but short explanation at your exact level of knowledge.

But sometimes Internet Magic Lightning strikes and that's what you do get! And OP set themselves up to be struck by magic lightning, because you can't get a detailed but short explanation at your exact level of knowledge if you don't provide a detailed but short explanation of your exact level of knowledge — and this person did just that. They understand finite fields of prime order, but not how to construct the extension fields. No problem, I can explain that!

I had special fun writing this answer because I just love constructing extensions of finite fields. (Previously: [1] [2])

For any given !!n!!, there is at most one field with !!n!! elements: only one, if !!n!! is a power of a prime number (!!2, 3, 2^2, 5, 7, 2^3, 3^2, 11, 13, \ldots!!) and none otherwise (!!6, 10, 12, 14\ldots!!). This field with !!n!! elements is written as !!\Bbb F_n!! or as !!GF(n)!!.

Suppose we want to construct !!\Bbb F_n!! where !!n=p^k!!. When !!k=1!!, this is easy-peasy: take the !!n!! elements to be the integers !!0, 1, 2\ldots p-1!!, and the addition and multiplication are done modulo !!n!!.

When !!k>1!! it is more interesting. One possible construction goes like this:

The elements of !!\Bbb F_{p^k}!! are the polynomials $$a_{k-1}x^{k-1} + a_{k-2}x^{k-2} + \ldots + a_1x+a_0$$ where the coefficients !!a_i!! are elements of !!\Bbb F_p!!. That is, the coefficients are just integers in !!\{0, 1, \ldots, p-1\}!!, but with the understanding that the addition and multiplication will be done modulo !!p!!. Note that there are !!p^k!! of these polynomials in total.

Addition of polynomials is done exactly as usual: combine like terms, but remember that the coefficients are added modulo !!p!! because they are elements of !!\Bbb F_p!!.

Multiplication is more interesting:

a. Pick an irreducible polynomial !!P!! of degree !!k!!. “Irreducible” means that it does not factor into a product of smaller polynomials. How to actually locate an irreducible polynomial is an interesting question; here we will mostly ignore it.

b. To multiply two elements, multiply them normally, remembering that the coefficients are in !!\Bbb F_p!!. Divide the product by !!P!! and keep the remainder. Since !!P!! has degree !!k!!, the remainder must have degree at most !!k-1!!, and this is your answer.

Now we will see an example: we will construct !!\Bbb F_{2^2}!!. Here !!k=2!! and !!p=2!!. The elements will be polynomials of degree at most 1, with coefficients in !!\Bbb F_2!!. There are four elements: !!0x+0, 0x+1, 1x+0, !! and !!1x+1!!. As usual we will write these as !!0, 1, x, x+1!!. This will not be misleading.

Addition is straightforward: combine like terms, remembering that !!1+1=0!! because the coefficients are in !!\Bbb F_2!!:

$$\begin{array}{c|cccc} + & 0 & 1 & x & x+1 \\ \hline 0 & 0 & 1 & x & x+1 \\ 1 & 1 & 0 & x+1 & x \\ x & x & x+1 & 0 & 1 \\ x+1 & x+1 & x & 1 & 0 \end{array} $$

The multiplication as always is more interesting. We need to find an irreducible polynomial !!P!!. It so happens that !!P=x^2+x+1!! is the only one that works. (If you didn't know this, you could find out easily: a reducible polynomial of degree 2 factors into two linear factors. So the reducible polynomials are !!x^2, x·(x+1) = x^2+x!!, and !!(x+1)^2 = x^2+2x+1 = x^2+1!!. That leaves only !!x^2+x+1!!.)

To multiply two polynomials, we multiply them normally, then divide by !!x^2+x+1!! and keep the remainder. For example, what is !!(x+1)(x+1)!!? It's !!x^2+2x+1 = x^2 + 1!!. There is a theorem from elementary algebra (the “division theorem”) that we can find a unique quotient !!Q!! and remainder !!R!!, with the degree of !!R!! less than 2, such that !!PQ+R = x^2+1!!. In this case, !!Q=1, R=x!! works. (You should check this.) Since !!R=x!! this is our answer: !!(x+1)(x+1) = x!!.

Let's try !!x·x = x^2!!. We want !!PQ+R = x^2!!, and it happens that !!Q=1, R=x+1!! works. So !!x·x = x+1!!.

I strongly recommend that you calculate the multiplication table yourself. But here it is if you want to check:

$$\begin{array}{c|cccc} · & 0 & 1 & x & x+1 \\ \hline 0 & 0 & 0 & 0 & 0 \\ 1 & 0 & 1 & x & x+1 \\ x & 0 & x & x+1 & 1 \\ x+1 & 0 & x+1 & 1 & x \end{array} $$
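The multiply-then-reduce recipe can also be carried out mechanically. Below is a minimal Python sketch, assuming polynomials are stored as coefficient lists with the lowest degree first and that !!P!! is monic; the function names are my own and nothing here is tuned for speed.

```python
def poly_mul(f, g, p):
    """Multiply two coefficient lists (lowest degree first), coefficients mod p."""
    out = [0] * (len(f) + len(g) - 1)
    for i, a in enumerate(f):
        for j, b in enumerate(g):
            out[i + j] = (out[i + j] + a * b) % p
    return out


def poly_rem(f, P, p):
    """Remainder of f divided by the monic polynomial P, coefficients mod p."""
    f = f[:]
    k = len(P) - 1  # degree of P
    for d in range(len(f) - 1, k - 1, -1):
        c = f[d]
        if c:
            # Subtract c * x^(d-k) * P, which cancels the x^d term of f.
            for i, b in enumerate(P):
                f[d - k + i] = (f[d - k + i] - c * b) % p
    return (f + [0] * k)[:k]


def field_mul(f, g, P, p):
    """Multiply f and g normally, then divide by P and keep the remainder."""
    return poly_rem(poly_mul(f, g, p), P, p)


P = [1, 1, 1]  # x^2 + x + 1, lowest degree first
NAMES = {(0, 0): "0", (1, 0): "1", (0, 1): "x", (1, 1): "x+1"}
for f in NAMES:
    print([NAMES[tuple(field_mul(list(f), list(g), P, 2))] for g in NAMES])
```

The four printed rows match the rows of the multiplication table above.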

To calculate the unique field !!\Bbb F_{2^3}!! of order 8, you let the elements be the 8 polynomials of degree at most 2, !!0, 1, x, \ldots, x^2+x, x^2+x+1!!, and instead of reducing by !!x^2+x+1!!, you reduce by !!x^3+x+1!!. (Not by !!x^3+x^2+x+1!!, because that factors as !!(x^2+1)(x+1)!!.) To calculate the unique field !!\Bbb F_{3^3}!! of order 27, you start with the 27 polynomials of degree at most 2 with coefficients in !!\{0,1,2\}!!, and you reduce by !!x^3+2x+1!! (I think).
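The choice of reducing polynomial can be checked by brute force. A polynomial of degree 2 or 3 is reducible exactly when it has a linear factor, that is, a root in !!\Bbb F_p!!, so for these small cases it is enough to try every element. The Python sketch below relies on that shortcut, which does not work for degree 4 and up; the function names are hypothetical.

```python
def evaluate(coeffs, x, p):
    """Evaluate a polynomial (coefficients lowest degree first) at x, modulo p."""
    result = 0
    for c in reversed(coeffs):  # Horner's rule
        result = (result * x + c) % p
    return result


def is_irreducible_small(coeffs, p):
    """Irreducibility test for degree 2 or 3 only: irreducible iff no root in F_p."""
    degree = len(coeffs) - 1
    if degree not in (2, 3):
        raise ValueError("the root test is only valid for degree 2 or 3")
    return all(evaluate(coeffs, x, p) != 0 for x in range(p))


print(is_irreducible_small([1, 1, 0, 1], 2))  # x^3 + x + 1 over F_2
print(is_irreducible_small([1, 1, 1, 1], 2))  # x^3 + x^2 + x + 1 over F_2
print(is_irreducible_small([1, 2, 0, 1], 3))  # x^3 + 2x + 1 over F_3
```

By this test !!x^3+x+1!! is irreducible over !!\Bbb F_2!!, !!x^3+x^2+x+1!! is not (it has the root 1), and !!x^3+2x+1!! is irreducible over !!\Bbb F_3!!.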

The special notation !!\Bbb F_p[x]!! means the ring of all polynomials with coefficients from !!\Bbb F_p!!. !!\langle P \rangle!! means the ring of all multiples of polynomial !!P!!. (A ring is a set with an addition, subtraction, and multiplication defined.)

When we write !!\Bbb F_p[x] / \langle P\rangle!! we are constructing a thing called a “quotient” structure. This is a generalization of the process that turns the ordinary integers !!\Bbb Z!! into the modular-arithmetic integers we have been calling !!\Bbb F_p!!. To construct !!\Bbb F_p!!, we start with !!\Bbb Z!! and then agree that two elements of !!\Bbb Z!! will be considered equivalent if they differ by a multiple of !!p!!.

To get !!\Bbb F_p[x] / \langle P \rangle!! we start with !!\Bbb F_p[x]!!, and then agree that elements of !!\Bbb F_p[x]!! will be considered equivalent if they differ by a multiple of !!P!!. The division theorem guarantees that of all the equivalent polynomials in a class, exactly one of them will have degree less than that of !!P!!, and that is the one we choose as a representative of its class and write into the multiplication table. This is what we are doing when we “divide by !!P!! and keep the remainder”.

A particularly important example of this construction is !!\Bbb R[x] / \langle x^2 + 1\rangle!!. That is, we take the set of polynomials with real coefficients, but we consider two polynomials equivalent if they differ by a multiple of !!x^2 + 1!!. By the division theorem, each polynomial is then equivalent to some first-degree polynomial !!ax+b!!.

Let's multiply $$(ax+b)(cx+d).$$ As usual we obtain $$acx^2 + (ad+bc)x + bd.$$ From this we can subtract !!ac(x^2 + 1)!! to obtain the equivalent first-degree polynomial $$(ad+bc) x + (bd-ac).$$

Now recall that in the complex numbers, !!(b+ai)(d + ci) = (bd-ac) + (ad+bc)i!!. We have just constructed the complex numbers, with the polynomial !!x!! playing the role of !!i!!.
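Here is a small Python sketch of the same calculation, comparing the reduced polynomial product with Python's built-in complex multiplication. The pair encoding `(a, b)` for !!ax+b!! and the function name are just my choices for illustration.

```python
def mul_mod_x2_plus_1(p, q):
    """p = (a, b) stands for a*x + b. Return the product reduced modulo x^2 + 1."""
    a, b = p
    c, d = q
    # (ax+b)(cx+d) = ac x^2 + (ad+bc) x + bd, and subtracting ac(x^2 + 1)
    # leaves (ad+bc) x + (bd-ac).
    return (a * d + b * c, b * d - a * c)

p, q = (2.0, 3.0), (-1.0, 4.0)               # 2x + 3 and -x + 4
x_coeff, const = mul_mod_x2_plus_1(p, q)
z = complex(3.0, 2.0) * complex(4.0, -1.0)   # (3 + 2i)(4 - i)

print((x_coeff, const))   # (5.0, 14.0), that is, 5x + 14
print(z)                  # (14+5j)
```

In particular `mul_mod_x2_plus_1((1.0, 0.0), (1.0, 0.0))` is `(0.0, -1.0)`: the polynomial !!x!! squared is equivalent to !!-1!!.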

[ Note to self: maybe write a separate article about what makes this a good answer, and how it is structured. ]

[Other articles in category /math/se] permanent link

Fri, 31 Jul 2020

What does it mean to expand a function “in powers of x-1”?

The author of a recent Math Stack Exchange post was asked to expand the function !!e^{2x}!! in powers of !!(x-1)!! and was confused about what that meant, and what the point of it was. I wrote an answer I liked, which I am reproducing here.

You asked:

I don't understand what are we doing in this whole process

which is a fair question. I didn't understand this either when I first learned it. But it's important for practical engineering reasons as well as for theoretical mathematical ones.

Before we go on, let's see that your proposal is the wrong answer to this question, because it is the correct answer, but to a different question. You suggested: $$e^{2x}\approx1+2\left(x-1\right)+2\left(x-1\right)^2+\frac{4}{3}\left(x-1\right)^3$$

Taking !!x=1!! we get !!e^2 \approx 1!!, which is just wrong, since actually !!e^2\approx 7.39!!. As a comment pointed out, the series you have above is for !!e^{2(x-1)}!!. But we wanted a series that adds up to !!e^{2x}!!.

As you know, the Maclaurin series works here:

$$e^{2x} \approx 1+2x+2x^2+\frac{4}{3}x^3$$

so why don't we just use it? Let's try !!x=1!!. We get $$e^2\approx 1 + 2 + 2 + \frac43$$

This adds to !!6+\frac13!!, but the correct answer is actually around !!7.39!! as we saw before. That is not a very accurate approximation. Maybe we need more terms? Let's try ten:

$$e^{2x} \approx 1+2x+2x^2+\frac{4}{3}x^3 + \ldots + \frac{4}{2835}x^9$$

If we do this we get !!7.3887!!, which isn't too far off. But it was a lot of work! And we find that as !!x!! gets farther away from zero, the series above gets less and less accurate. For example, take !!x=3.1!!: the formula with four terms gives us !!66.14!!, which is dead wrong. Even if we use ten terms, we get !!444.3!!, which is still way off. The right answer is actually !!492.7!!.
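If you want to reproduce these numbers, here is a minimal Python sketch. It uses the fact that the !!k!!th Maclaurin term of !!e^{2x}!! is !!(2x)^k/k!!!; the function name `maclaurin_exp2x` is made up for this example.

```python
from math import exp, factorial

def maclaurin_exp2x(x, n_terms):
    """Sum the first n_terms terms of the Maclaurin series of e^(2x)."""
    return sum((2 * x) ** k / factorial(k) for k in range(n_terms))

for x in (1.0, 3.1):
    four = maclaurin_exp2x(x, 4)
    ten = maclaurin_exp2x(x, 10)
    print(f"x={x}: 4 terms {four:.4f}, 10 terms {ten:.4f}, exact {exp(2 * x):.4f}")
```

At !!x=1!! the ten-term sum is already within about !!0.001!! of the true value, but at !!x=3.1!! it is still short by nearly !!50!!.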

What do we do about this? Just add more terms? That could be a lot of work and it might not get us where we need to go. (Some Maclaurin series just stop working at all too far from zero, and no amount of terms will make them work.) Instead we use a different technique.

Expanding the Taylor series “around !!x=a!!” gets us a different series, one that works best when !!x!! is close to !!a!! instead of when !!x!! is close to zero. Your homework is to expand it around !!x=1!!, and I don't want to give away the answer, so I'll do a different example. We'll expand !!e^{2x}!! around !!x=3!!. The general formula is $$e^{2x} \approx \sum \frac{f^{(i)}(3)}{i!} (x-3)^i\tag{$\star$}\ \qquad \text{(when $x$ is close to $3$)}$$

The !!f^{(i)}(x)!! is the !!i!!'th derivative of !! e^{2x}!! , which is !!2^ie^{2x}!!, so the first few terms of the series above are:

$$\begin{eqnarray} e^{2x} & \approx& e^6 + \frac{2e^6}1 (x-3) + \frac{4e^6}{2}(x-3)^2 + \frac{8e^6}{6}(x-3)^3\\ & = & e^6\left(1+ 2(x-3) + 2(x-3)^2 + \frac43(x-3)^3\right)\\ & & \qquad \text{(when $x$ is close to $3$)} \end{eqnarray} $$

The first thing to notice here is that when !!x!! is exactly !!3!!, this series is perfectly correct; we get !!e^6 = e^6!! exactly, even when we add up only the first term, and ignore the rest. That's a kind of useless answer because we already knew that !!e^6 = e^6!!. But that's not what this series is for. The whole point of this series is to tell us how different !!e^{2x}!! is from !!e^6!! when !!x!! is close to, but not equal to !!3!!.

Let's see what it does at !!x=3.1!!. With only four terms we get $$\begin{eqnarray} e^{6.2} & \approx& e^6(1 + 2(0.1) + 2(0.1)^2 + \frac43(0.1)^3)\\ & \approx & e^6 \cdot 1.22133 \\ & \approx & 492.72 \end{eqnarray}$$

which is very close to the correct answer, which is !!492.7!!. And that's with only four terms. Even if we didn't know an exact value for !!e^6!!, we could find out that !!e^{6.2}!! is about !!22.13\%!! larger, with hardly any calculation.

Why did this work so well? If you look at the expression !!(\star)!! you can see: The terms of the series all have factors of the form !!(x-3)^i!!. When !!x=3.1!!, these are !!(0.1)^i!!, which becomes very small very quickly as !!i!! increases. Because the later terms of the series are very small, they don't affect the final sum, and if we leave them out, we won't mess up the answer too much. So the series works well, producing accurate results from only a few terms, when !!x!! is close to !!3!!.
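To experiment with different centers, here is a short Python sketch that builds the partial sums directly from !!(\star)!!, using !!f^{(i)}(a) = 2^ie^{2a}!!. The function name `taylor_exp2x` and its parameters are assumptions of mine for illustration.

```python
from math import exp, factorial

def taylor_exp2x(x, a, n_terms):
    """Partial sum of the Taylor series of e^(2x) around x = a.

    The i-th derivative of e^(2x) at a is 2^i * e^(2a), so the i-th term
    is 2^i * e^(2a) / i! * (x - a)^i.
    """
    return sum(
        2 ** i * exp(2 * a) / factorial(i) * (x - a) ** i
        for i in range(n_terms)
    )

x = 3.1
print("around 3:", taylor_exp2x(x, 3, 4))   # four terms, centered near x
print("around 0:", taylor_exp2x(x, 0, 4))   # four terms of the Maclaurin series
print("exact:   ", exp(2 * x))
```

The same four terms do far better centered at !!3!! than at !!0!!, because the powers of !!(x-a)!! are powers of !!0.1!! in one case and of !!3.1!! in the other.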

But in the Maclaurin series, which is around !!x=0!!, those !!(x-3)^i!! terms are !!x^i!! terms instead, and when !!x=3.1!!, they are not small, they're very large! They get bigger as !!i!! increases, and very quickly. (The !! i! !! in the denominator wins, eventually, but that doesn't happen for many terms.) If we leave out these many large terms, we get the wrong results.

The short answer to your question is:

Maclaurin series are only good for calculating functions when !!x!! is close to !!0!!, and become inaccurate as !!x!! moves away from zero. But a Taylor series around !!a!! has its “center” near !!a!! and is most accurate when !!x!! is close to !!a!!.

[Other articles in category /math/se] permanent link

Wed, 29 Jul 2020

Toph left the cap off one of her fancy art markers and it dried out, so I went to get her a replacement. The marker costs $5.85, plus tax, and the web site wanted a $5.95 shipping fee. Disgusted, I resolved to take my business elsewhere.

On Wednesday I drove over to a local art-supply store to get the marker. After taxes the marker was somehow around $8.50, but I also had to pay $1.90 for parking. So if there was a win there, it was a very small one.

But also, I messed up the parking payment app, which has maybe the worst UI of any phone app I've ever used. The result was a $36 parking ticket.

Lesson learned. I hope.

[Other articles in category /oops] permanent link

Today I learned:

There is a genus of ankylosaurs named Zuul after “demon and demi-god Zuul, the Gatekeeper of Gozer, featured in the 1984 film Ghostbusters”.

The type species of Zuul is Zuul crurivastator, which means “Zuul, destroyer of shins”. Wikipedia says:

The epithet … refers to a presumed defensive tactic of ankylosaurids, smashing the lower legs of attacking predatory theropods with their tail clubs.

My eight-year-old self is gratified that the ankylosaurids are believed to attack their enemies’ ankles.

The original specimen of Z. crurivastator, unusually well preserved, was nicknamed “Sherman”.

Here is a video of Dan Aykroyd discussing the name, with Sherman.

[Other articles in category /bio] permanent link

Wed, 15 Jul 2020

More trivia about megafauna and poisonous plants

A couple of people expressed disappointment with yesterday's article, which asked were giant ground sloths immune to poison ivy?, but then failed to deliver on the implied promise. I hope today's article will make up for that.

Elephants

I said:

Mangoes are tropical fruit and I haven't been able to find any examples of Pleistocene megafauna that lived in the tropics…

David Formosa points out what should have been obvious: elephants are megafauna, elephants live where mangoes grow (both in Africa and in India), elephants love eating mangoes [1] [2] [3], and, not obvious at all…

Elephants are immune to poison ivy!

Captive elephants have been known to eat poison ivy, not just a little bite, but devouring entire vines, leaves and even digging up the roots. To most people this would have cause a horrific rash … To the elephants, there was no rash and no ill effect at all…

It's sad that we no longer have megatherium. But we do have elephants, which is pretty awesome.

Idiot fruit

The idiot fruit is just another one of those legendarily awful creatures that seem to infest every corner of Australia (see also: box jellyfish, stonefish, gympie gympie, etc.); Wikipedia says:

The seeds are so toxic that most animals cannot eat them without being severely poisoned.

At present the seeds are mostly dispersed by gravity. The plant is believed to be an evolutionary anachronism. What Pleistocene megafauna formerly dispersed the poisonous seeds of the idiot fruit?

A wombat. A six-foot-tall wombat.

I am speechless with delight.

[Other articles in category /bio] permanent link

Tue, 14 Jul 2020

Were giant ground sloths immune to poison ivy?

The skin of the mango fruit contains urushiol, the same irritating chemical that is found in poison ivy. But why? From the mango's point of view, the whole point of the mango fruit is to get someone to come along and eat it, so that they will leave the seed somewhere else. Poisoning the skin seems counterproductive.

An analogous case is the chili pepper, which contains an irritating chemical, capsaicin. I think the answer here is believed to be that while capsaicin irritates mammals, birds are unaffected. The chili's intended target is birds; you can tell from the small seeds, which are the right size to be pooped out by birds. So chilis have a chemical that encourages mammals to leave the fruit in place for birds.

What's the intended target for the mango fruit? Who's going to poop out a seed the size of a mango pit? You'd need a very large animal, large enough to swallow a whole mango. There aren't many of these now, but that's because they became extinct at the end of the Pleistocene epoch: woolly mammoths and rhinoceroses, huge crocodiles, giant ground sloths, and so on. We may have eaten the animals themselves, but we seem to have quite a lot of fruits around that evolved to have their seeds dispersed by Pleistocene megafauna that are now extinct. So my first thought was, maybe the mango is expecting to be gobbled up by a giant ground sloth, and have its giant seed pooped out elsewhere. And perhaps its urushiol-laden skin makes it unpalatable to smaller animals that might not disperse the seeds as widely, but the giant ground sloth is immune. (Similarly, I'm told that goats are immune to urushiol, and devour poison ivy as they do everything else.)

Well, maybe this theory is partly correct, but even if so, the animal definitely wasn't a giant ground sloth, because those lived only in South America, whereas the mango is native to South Asia. Ground sloths and avocados, yes; mangoes, no.

Still, the theory seems reasonable, except that mangoes are tropical fruit and I haven't been able to find any examples of Pleistocene megafauna that lived in the tropics. Then again, I didn't look very hard.

Wikipedia has an article on evolutionary anachronisms that lists a great many plants, but not the mango.

[ Addendum: I've eaten many mangoes but never noticed any irritation from the peel. I speculate that cultivated mangoes are varieties that have been bred to contain little or no urushiol, or that there is a post-harvest process that removes or inactivates the urushiol, or both. ]

[ Addendum 20200715: I know this article was a little disappointing and that it does not resolve the question in the title. Sorry. But I wrote a followup that you might enjoy anyway. ]

[Other articles in category /bio] permanent link