Mark Dominus (陶敏修)

mjd@pobox.com

Archive:

| 2025: | JFMAM |

| 2024: | JFMAMJ |

| JASOND | |

| 2023: | JFMAMJ |

| JASOND | |

| 2022: | JFMAMJ |

| JASOND | |

| 2021: | JFMAMJ |

| JASOND | |

| 2020: | JFMAMJ |

| JASOND | |

| 2019: | JFMAMJ |

| JASOND | |

| 2018: | JFMAMJ |

| JASOND | |

| 2017: | JFMAMJ |

| JASOND | |

| 2016: | JFMAMJ |

| JASOND | |

| 2015: | JFMAMJ |

| JASOND | |

| 2014: | JFMAMJ |

| JASOND | |

| 2013: | JFMAMJ |

| JASOND | |

| 2012: | JFMAMJ |

| JASOND | |

| 2011: | JFMAMJ |

| JASOND | |

| 2010: | JFMAMJ |

| JASOND | |

| 2009: | JFMAMJ |

| JASOND | |

| 2008: | JFMAMJ |

| JASOND | |

| 2007: | JFMAMJ |

| JASOND | |

| 2006: | JFMAMJ |

| JASOND | |

| 2005: | OND |

In this section:

| A monad for probability and provenance |

| Another short explanation of Gödel's theorem |

| Monads are like burritos |

| On failing open |

| World's shortest explanation of Gödel's theorem |

Subtopics:

| Mathematics | 245 |

| Programming | 99 |

| Language | 95 |

| Miscellaneous | 75 |

| Book | 50 |

| Tech | 49 |

| Etymology | 35 |

| Haskell | 33 |

| Oops | 30 |

| Unix | 27 |

| Cosmic Call | 25 |

| Math SE | 25 |

| Law | 22 |

| Physics | 21 |

| Perl | 17 |

| Biology | 15 |

| Brain | 15 |

| Calendar | 15 |

| Food | 15 |

Comments disabled

Thu, 31 Dec 2009

A monad for probability and provenance

I don't quite remember how I arrived at this, but it occurred to me

last week that probability distributions form a monad. This is the

first time I've invented a new monad that I hadn't seen before; then I

implemented it and it behaved pretty much the way I thought it would.

So I feel like I've finally arrived, monadwise.

Suppose a monad value represents all the possible outcomes of an event, each with a probability of occurrence. For concreteness, let's suppose all our probability distributions are discrete. Then we might have:

data ProbDist p a = ProbDist [(a,p)] deriving (Eq, Show) unpd (ProbDist ps) = psEach a is an outcome, and each p is the probability of that outcome occurring. For example, biased and unbiased coins:

unbiasedCoin = ProbDist [ ("heads", 0.5),

("tails", 0.5) ];

biasedCoin = ProbDist [ ("heads", 0.6),

("tails", 0.4) ];

Or a couple of simple functions for making dice:

import Data.Ratio

d sides = ProbDist [(i, 1 % sides) | i <- [1 .. sides]]

die = d 6

d n is an n-sided die.

The Functor instance is straightforward:

instance Functor (ProbDist p) where

fmap f (ProbDist pas) = ProbDist $ map (\(a,p) -> (f a, p)) pas

The Monad instance requires return and

>>=. The return function merely takes an event and

turns it into a distribution where that event occurs with probability

1. I find join easier to think about than >>=.

The join function takes a nested distribution, where each

outcome of the outer distribution specifies an inner distribution for

the actual events, and collapses it into a regular, overall

distribution. For example, suppose you put a biased coin and an

unbiased coin in a bag, then pull one out and flip it:

bag :: ProbDist Double (ProbDist Double String)

bag = ProbDist [ (biasedCoin, 0.5),

(unbiasedCoin, 0.5) ]

The join operator collapses this into a single ProbDist

Double String:

ProbDist [("heads",0.3),

("tails",0.2),

("heads",0.25),

("tails",0.25)]

It would be nice if join could combine the duplicate

heads into a single ("heads", 0.55) entry. But that

would force an Eq a constraint on the event type, which isn't

allowed, because (>>=) must work for all data types, not

just for instances of Eq. This is a problem with Haskell,

not with the monad itself. It's the same problem that prevents one

from making a good set monad in Haskell, even though categorially sets

are a perfectly good monad. (The return function constructs

singletons, and the join function is simply set union.)

Maybe in the next language.Perhaps someone else will find the >>= operator easier to understand than join? I don't know. Anyway, it's simple enough to derive once you understand join; here's the code:

instance (Num p) => Monad (ProbDist p) where return a = ProbDist [(a, 1)] (ProbDist pas) >>= f = ProbDist $ do (a, p) <- pas let (ProbDist pbs) = f a (b, q) <- pbs return (b, p*q)So now we can do some straightforward experiments:

liftM2 (+) (d 6) (d 6) ProbDist [(2,1 % 36),(3,1 % 36),(4,1 % 36),(5,1 % 36),(6,1 % 36),(7,1 % 36),(3,1 % 36),(4,1 % 36),(5,1 % 36),(6,1 % 36),(7,1 % 36),(8,1 % 36),(4,1 % 36),(5,1 % 36),(6,1 % 36),(7,1 % 36),(8,1 % 36),(9,1 % 36),(5,1 % 36),(6,1 % 36),(7,1 % 36),(8,1 % 36),(9,1 % 36),(10,1 % 36),(6,1 % 36),(7,1 % 36),(8,1 % 36),(9,1 % 36),(10,1 % 36),(11,1 % 36),(7,1 % 36),(8,1 % 36),(9,1 % 36),(10,1 % 36),(11,1 % 36),(12,1 % 36)]This is nasty-looking; we really need to merge the multiple listings of the same event. Here is a function to do that:

agglomerate :: (Num p, Eq b) => (a -> b) -> ProbDist p a -> ProbDist p b

agglomerate f pd = ProbDist $ foldr insert [] (unpd (fmap f pd)) where

insert (k, p) [] = [(k, p)]

insert (k, p) ((k', p'):kps) | k == k' = (k, p+p'):kps

| otherwise = (k', p'):(insert (k,p) kps)

agg :: (Num p, Eq a) => ProbDist p a -> ProbDist p a

agg = agglomerate id

Then agg $ liftM2 (+) (d 6) (d 6) produces:

ProbDist [(12,1 % 36),(11,1 % 18),(10,1 % 12),(9,1 % 9),

(8,5 % 36),(7,1 % 6),(6,5 % 36),(5,1 % 9),

(4,1 % 12),(3,1 % 18),(2,1 % 36)]

Hey, that's correct.There must be a shorter way to write insert. It really bothers me, because it looks look it should be possible to do it as a fold. But I couldn't make it look any better.

You are not limited to calculating probabilities. The monad actually will count things. For example, let us throw three dice and count how many ways there are to throw various numbers of sixes:

eq6 n = if n == 6 then 1 else 0

agg $ liftM3 (\a b c -> eq6 a + eq6 b + eq6 c) die die die

ProbDist [(3,1),(2,15),(1,75),(0,125)]

There is one way to throw three sixes, 15 ways to throw two sixes, 75

ways to throw one six, and 125 ways to throw no sixes. So

ProbDist is a misnomer. It's easy to convert counts to probabilities:

probMap :: (p -> q) -> ProbDist p a -> ProbDist q a probMap f (ProbDist pds) = ProbDist $ (map (\(a,p) -> (a, f p))) pds normalize :: (Fractional p) => ProbDist p a -> ProbDist p a normalize pd@(ProbDist pas) = probMap (/ total) pd where total = sum . (map snd) $ pas normalize $ agg $ probMap toRational $ liftM3 (\a b c -> eq6 a + eq6 b + eq6 c) die die die ProbDist [(3,1 % 216),(2,5 % 72),(1,25 % 72),(0,125 % 216)]I think this is the first time I've gotten to write die die die in a computer program.

The do notation is very nice. Here we calculate the distribution where we roll four dice and discard the smallest:

stat = do

a <- d 6

b <- d 6

c <- d 6

d <- d 6

return (a+b+c+d - minimum [a,b,c,d])

probMap fromRational $ agg stat

ProbDist [(18,1.6203703703703703e-2),

(17,4.1666666666666664e-2), (16,7.253086419753087e-2),

(15,0.10108024691358025), (14,0.12345679012345678),

(13,0.13271604938271606), (12,0.12885802469135801),

(11,0.11419753086419752), (10,9.41358024691358e-2),

(9,7.021604938271606e-2), (8,4.7839506172839504e-2),

(7,2.9320987654320986e-2), (6,1.6203703703703703e-2),

(5,7.716049382716049e-3), (4,3.0864197530864196e-3),

(3,7.716049382716049e-4)]

One thing I was hoping to get didn't work out. I had this idea that

I'd be able to calculate the outcome of a game of craps like this:

dice = liftM2 (+) (d 6) (d 6) point n = do roll <- dice case roll of 7 -> return "lose" _ | roll == n = "win" _ | otherwise = point n craps = do roll <- dice case roll of 2 -> return "lose" 3 -> return "lose" 4 -> point 4 5 -> point 5 6 -> point 6 7 -> return "win" 8 -> point 8 9 -> point 9 10 -> point 10 11 -> return "win" 12 -> return "lose"This doesn't work at all; point is an infinite loop because the first value of dice, namely 2, causes a recursive call. I might be able to do something about this, but I'll have to think about it more.

It also occurred to me that the use of * in the definition of >>= / join could be generalized. A couple of years back I mentioned a paper of Green, Karvounarakis, and Tannen that discusses "provenance semirings". The idea is that each item in a database is annotated with some "provenance" information about why it is there, and you want to calculate the provenance for items in tables that are computed from table joins. My earlier explanation is here.

One special case of provenance information is that the provenances are probabilities that the database information is correct, and then the probabilities are calculated correctly for the joins, by multiplication and addition of probabilities. But in the general case the provenances are opaque symbols, and the multiplication and addition construct regular expressions over these symbols. One could generalize ProbDist similarly, and the ProbDist monad (even more of a misnomer this time) would calculate the provenance automatically. It occurs to me now that there's probably a natural way to view a database table join as a sort of Kleisli composition, but this article has gone on too long already.

Happy new year, everyone.

[ Addendum 20100103: unsurprisingly, this is not a new idea. Several readers wrote in with references to previous discussion of this monad, and related monads. It turns out that the idea goes back at least to 1981. ]

[ Addendum 20220522: The article begins “I don't quite remember how I arrived at this”, but I just remembered how I arrived at it! I was thinking about how List can be interpreted as the monad that captures the idea of nondeterministic computation. A function that yields a list [a, b, c] represents a nondeterministic computation that might yield any of a, b, or c. (This idea goes back at least as far as Moggi's 1989 monads paper.) I was thinking about an extension to this idea: what if the outcomes were annotated with probabilities to indicate how often each was the result. ]

My thanks to Graham Hunter for his donation.

[Other articles in category /prog/haskell] permanent link

Tue, 15 Dec 2009

Monads are like burritos

A few months ago Brent

Yorgey complained about a certain class of tutorials which present

monads by explaining how monads are like burritos.

At first I thought the choice of burritos was only a facetious reference to the peculiar and sometimes strained analogies these tutorials make. But then I realized that monads are like burritos.

I will explain.

A monad is a special kind of a functor. A functor F takes each type T and maps it to a new type FT. A burrito is like a functor: it takes a type, like meat or beans, and turns it into a new type, like beef burrito or bean burrito.

A functor must also be equipped with a map function that lifts functions over the original type into functions over the new type. For example, you can add chopped jalapeños or shredded cheese to any type, like meat or beans; the lifted version of this function adds chopped jalapeños or shredded cheese to the corresponding burrito.

A monad must also possess a unit function that takes a regular value, such as a particular batch of meat, and turns it into a burrito. The unit function for burritos is obviously a tortilla.

Finally, a monad must possess a join function that takes a ridiculous burrito of burritos and turns them into a regular burrito. Here the obvious join function is to remove the outer tortilla, then unwrap the inner burritos and transfer their fillings into the outer tortilla, and throw away the inner wrappings.

The map, join, and unit functions must satisfy certain laws. For example, if B is already a burrito, and not merely a filling for a burrito, then join(unit(B)) must be the same as B. This means that if you have a burrito, and you wrap it in a second tortilla, and then unwrap the contents into the outer tortilla, the result is the same as what you started with.

This is true because tortillas are indistinguishable.

I know you are going to point out that some tortillas have the face of Jesus. But those have been toasted, and so are unsuitable for burrito-making, and do not concern us here.

So monads are indeed like burritos.

I asked Brent if this was actually what he had in mind when he first suggested the idea of tutorials explaining monads in terms of burritos, and if everyone else had understood this right away.

But he said no, I was the lone genius.

[ Addendum 20120106: Chris Done has presented this theory in cartoon form. ]

[ Addendum 20201025: Eugenia Cheng tweets this page! But the last word, “stupid”, is inexplicably misspelled. ]

[Other articles in category /prog] permanent link

Mon, 14 Dec 2009

Another short explanation of Gödel's theorem

In yesterday's article, I

said:

A while back I started writing up an article titled "World's shortest explanation of Gödel's theorem". But I didn't finish it...

I went and had a look to see what was wrong with it, and to my surprise, there seemed to be hardly anything wrong with it. Perhaps I just forgot to post it. So if you disliked yesterday's brief explanation of Gödel's theorem—and many people did—you'll probably dislike this one even more. Enjoy!

A reader wrote to question my characterization of Gödel's theorem in the previous article. But I think I characterized it correctly; I said:

The only systems of mathematical axioms strong enough to prove all true statements of arithmetic, are those that are so strong that they also prove all the false statements of arithmetic.I'm going to explain how this works.

You start by choosing some system of mathematics that has some machinery in it for making statements about things like numbers and for constructing proofs of theorems like 1+1=2. Many such systems exist. Let's call the one we have chosen M, for "mathematics".

Gödel shows that if M has enough mathematical machinery in it to actually do arithmetic, then it is possible to produce a statement S whose meaning is essentially "Statement S cannot be proved in system M."

It is not at all obvious that this is possible, or how it can be done, and I am not going to get into the details here. Gödel's contribution was seeing that it was possible to do this.

So here's S again:

S: Statement S cannot be proved in system M.

Now there are two possibilities. Either S is in fact provable in system M, or it is not. One of these must hold.

If S is provable in system M, then it is false, and so it is a false statement that can be proved in system M. M therefore proves some false statements of arithmetic.

If S is not provable in system M, then it is true, and so it is a true statement that cannot be proved in system M. M therefore fails to prove some true statements of arithmetic.

So something goes wrong with M: either it fails to prove some true statements, or else it succeeds in proving some false statements.

List of topics I deliberately omitted from this article, that mathematicians should not write to me about with corrections: Presburger arithmetic. Dialetheism. Inexhaustibility. ω-incompleteness. Non-RE sets of axioms.

| Buy Godel's Theorem: An Incomplete Guide to Its Use and Abuse  from Bookshop.org (with kickback) (without kickback) |

If you liked this post, you may enjoy Torkel Franzén's books Godel's Theorem: An Incomplete Guide to Its Use and Abuse and Inexhaustibility: A Non-Exhaustive Treatment. If you disliked this post, you are even more likely to enjoy them.

Many thanks to Robert Bond for his contribution.

[Other articles in category /math] permanent link

Sun, 13 Dec 2009

World's shortest explanation of Gödel's theorem

A while back I started writing up an article titled "World's shortest

explanation of Gödel's theorem". But I didn't finish it, and

later I encountered Raymond Smullyan's version, which is much shorter

anyway. So here, shamelessly stolen from Smullyan, is the World's

shortest explanation of Gödel's theorem.

We have some sort of machine that prints out statements in some sort of language. It needn't be a statement-printing machine exactly; it could be some sort of technique for taking statements and deciding if they are true. But let's think of it as a machine that prints out statements.

In particular, some of the statements that the machine might (or might not) print look like these:

| P*x | (which means that | the machine will print x) |

| NP*x | (which means that | the machine will never print x) |

| PR*x | (which means that | the machine will print xx) |

| NPR*x | (which means that | the machine will never print xx) |

For example, NPR*FOO means that the machine will never print FOOFOO. NP*FOOFOO means the same thing. So far, so good.

Now, let's consider the statement NPR*NPR*. This statement asserts that the machine will never print NPR*NPR*.

Either the machine prints NPR*NPR*, or it never prints NPR*NPR*.

If the machine prints NPR*NPR*, it has printed a false statement. But if the machine never prints NPR*NPR*, then NPR*NPR* is a true statement that the machine never prints.

So either the machine sometimes prints false statements, or there are true statements that it never prints.

So any machine that prints only true statements must fail to print some true statements.

Or conversely, any machine that prints every possible true statement must print some false statements too.

The proof of Gödel's theorem shows that there are statements of pure arithmetic that essentially express NPR*NPR*; the trick is to find some way to express NPR*NPR* as a statement about arithmetic, and most of the technical details (and cleverness!) of Gödel's theorem are concerned with this trick. But once the trick is done, the argument can be applied to any machine or other method for producing statements about arithmetic.

The conclusion then translates directly: any machine or method that produces statements about arithmetic either sometimes produces false statements, or else there are true statements about arithmetic that it never produces. Because if it produces something like NPR*NPR* then it is wrong, but if it fails to produce NPR*NPR*, then that is a true statement that it has failed to produce.

So any machine or other method that produces only true statements about arithmetic must fail to produce some true statements.

Hope this helps!

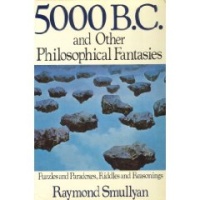

(This explanation appears in Smullyan's book 5000 BC and Other Philosophical Fantasies, chapter 3, section 65, which is where I saw it. He discusses it at considerable length in Chapter 16 of The Lady or the Tiger?, "Machines that Talk About Themselves". It also appears in The Mystery of Scheherezade.)

I gratefully acknowledge Charles Colht for his generous donation to this blog.

[ Addendum 20091214: Another article on the same topic. ]

[ Addendum 20150403: Reddit user cafe_anon has formalized this argument as a Coq proof. ]

[ Addendum 20150406: Reddit user TezlaKoil has formalized this argument as an Agda proof. (Unformatted version) ]

[Other articles in category /math] permanent link

Fri, 11 Dec 2009

On failing open

An axiom of security analysis is that nearly all security mechanisms

must fail closed. What this means is that if there is an uncertainty

about whether to grant or to deny access, the right choice is nearly

always to deny access.

For example, consider a login component that accepts a username and a password and then queries a remote authentication server to find out if the password is correct. If the connection to the authentication server fails, or if the authentication server is down, the login component must decide whether to grant or deny access, in the absence of correct information from the server. The correct design is almost certainly to "fail closed", and to deny access.

I used to teach security classes, and I would point out that programs sometimes have bugs, and do the wrong thing. If your program has failed closed, and if this is a bug, then you have an irate user. The user might call you up and chew you out, or might chew you out to your boss, and they might even miss a crucial deadline because your software denied them access when it should have granted access. But these are relatively small problems. If your program has failed open, and if this is a bug, then the user might abscond with the entire payroll and flee to Brazil.

(I was once teaching one of these classes in Lisbon, and I reached the "flee to Brazil" example without having realized ahead of time that this had greater potential to offend the Portuguese than many other people. So I apologized. But my hosts very kindly told me that they would have put it the same way, and that in fact the Mayor of Lisbon had done precisely what I described a few years before. The moral of the story is to read over the slides ahead of time before giving the talk.)

But I digress. One can find many examples in the history of security that failed the wrong way.

However, the issue is on my mind because I was at a job interview a few weeks ago with giant media corporation XYZ. At the interview, we spent about an hour talking about an architectural problem they were trying to solve. XYZ operates a web site where people can watch movies and TV programs online. Thy would like to extend the service so that people who subscribe to premium cable services, such as HBO, can authenticate themselves to the web site and watch HBO programs there; HBO non-subscribers should get only free TV content. The problem in this case was that the authentication data was held on an underpowered legacy system that could serve only a small fraction of the requests that came in.

The solution was to cache the authentication data on a better system, and gather and merge change information from the slow legacy system as possible.

I observed during the discussion that this was a striking example of the rare situation in which one wants the authentication system to fail open instead of closed. For suppose one grants access that should not be granted. Then someone on the Internet gets to watch a movie or an episode of The Sopranos for free, which is not worth getting excited about and which happens a zillion times a day anyhow.

But suppose the software denies access that should have been granted. Then there is a legitimate paying customer who has paid to watch The Sopranos, and we told them no. Now they are a legitimately irate customer, which is bad, and they may call the support desk, costing XYZ Corp a significant amount of money, which is also bad. So all other things being equal, one should err on the side of lenity: when in doubt, grant access.

I would like to thank Andrew Lenards for his gift.

[Other articles in category /tech] permanent link