Mark Dominus (陶敏修)

mjd@pobox.com


Thu, 30 Mar 2006

Blog Posts Escape from Lab

Yesterday I decided to put my blog posts into CVS. As part of doing that, I copied the blog articles tree. I must have made a mistake, because not all the files were copied in the way I wanted.

To prevent unfinished articles from getting out before they are ready, I have a Blosxom plugin that suppresses any article whose name is title.blog if it is accompanied by a title.notyet file. I can put the draft of an article in the .notyet file and then move it to .blog when it's ready to appear, or I can put the draft in .blog with an empty or symbolic-link .notyet alongside it, and then remove the .notyet file when the article is ready.
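The suppression rule is simple enough to state as code. The real plugin runs under Blosxom, which is written in Perl, and its source isn't shown here; what follows is only a hypothetical Python sketch of the same rule, working on a plain list of filenames. The function name `visible_articles` and the sample filenames are made up for illustration.

```python
from pathlib import PurePosixPath


def visible_articles(filenames):
    """Return the .blog files that have no matching .notyet file.

    Hypothetical sketch: title.blog is suppressed whenever title.notyet
    exists. Only the existence of the .notyet name matters, so it may be
    an empty file or a symbolic link.
    """
    names = set(filenames)
    visible = []
    for name in sorted(names):
        path = PurePosixPath(name)
        if path.suffix != ".blog":
            continue
        blocker = str(path.with_suffix(".notyet"))
        if blocker not in names:
            visible.append(name)
    return visible


print(visible_articles(["bless.blog", "bless.notyet", "speed.blog"]))  # prints ['speed.blog']
```

If a careless copy drops `bless.notyet`, the same call returns both articles, which is exactly how a draft escapes.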

I also use this for articles that will never be ready. For example, a while back I got the idea that it might be funny if I were to poſt ſome articles with long medial letter 'ſ' as one ſees in Baroque printing and writing. To try out what this would look like I copied one of my articles---the one on Baroque writing ſtyle ſeemed appropriate---and changed the filename from baroque-style.blog to baroque-ftyle.blog. Then I created baroque-ftyle.notyet ſo that nobody would ever ſee my ſtrange experiment.

But somehow in the big shakeup last night, some of the .notyet files got lost, and so this post and one other escaped from my laboratory into the outside world. As I explained in an earlier post, I can remove these from my web site, but aggregators like Bloglines.com will continue to display my error. It's tempting to blame this on Blosxom, or on CVS, or on the sysadmin, or something like that, but I think the most likely explanation for this one is that I screwed up all by myself.

The other escapee was an article about the optional second argument in Perl's built-in bless function, which in practice is never omitted. This article was so dull that I abandoned it in the middle, and instead addressed the issue in passing in an article on a different subject.

So if your aggregator is displaying an unfinished and boring article about one-argument bless, or a puzzling article that seems like a repeat of an earlier one, but with all the s's replaced with ſ's, that is why.

These errors might be interesting as meta-information about how I work. I had hoped not to discuss any such meta-issues here, but circumstances seem to have forced me to do it at least a little. One thing I think might be interesting is what my draft articles look like. Some people rough out an article first, then go back and fix it. I don't do that. I write one paragraph, and then when it's ready, I write another paragraph. My rough drafts look almost the same as my finished product, right up to the point at which they stop abruptly, sometimes in the middle of a sentence.

Another thing you might infer from these errors is that I have a lot of junk sitting around that is probably never going to be used for anything. Since I started the blog, I've written about 85,000 words of articles that were released, and about 20,000 words of .notyet. Some of the .notyet stuff will eventually see the light of day, of course. For example, I have about 2500 words of addenda to this month's posts that are scheduled for release tomorrow, 1000 words about this month's Google queries and the nature of "authority" on the Web, 1000 words about the structure of the real numbers, 1300 words about the Grelling-Nelson paradox, and so on.

But I alſo have that 1100-word experiment about what happens to an article when you use long medial s's everywhere. (Can you believe I actually conſidered doing this in every one of my poſts? It's tempting, but just a little too idioſyncratic, even for me.) I have 500 words about why to attend a colloquium, how to convince everyone there that you're a genius, and what's wrong with education in general. I have 350 words that were at the front of my article about the 20 most important tools that explained in detail why most criticism of Forbes' list would be unfair; it wasted a whole page at the beginning of that article, so I chopped it out, but I couldn't bear to throw it away. I have two thirds of a 3000-word article written about why my brain is so unusual and how I've coped with its peculiar limitations. That one won't come out unless I can convince myself that anyone else will find it more than about ten percent as interesting as I find it.

[Other articles in category /oops] permanent link

Tue, 28 Mar 2006

The speed of electricity

For some reason I have needed to know this several times in the past few years: what is the speed of electricity? And for some reason, good answers are hard to come by.

(Warning: as with all my articles on physics, readers are cautioned that I do not know what I am talking about, but that I can talk a good game and make up plenty of plausible-sounding bullshit that sounds so convincing that I believe it myself. Beware of bullshit.)

If you do a Google search for "speed of electricity", the top hit is Bill Beaty's long discourse on the subject. In this brilliantly obtuse article, Beaty manages to answer just about every question you might have about everything except the speed of electricity, and does so in a way that piles confusion on confusion.

Here's the funny thing about electricity. To have electricity, you need moving electrons in the wire, but the electrons are not themselves the electricity. It's the motion, not the electrons. It's like that joke about the two rabbinical students who are arguing about what makes tea sweet. "It's the sugar," says the first one. "No," disagrees the other, "it's the stirring." With electricity, it really is the stirring.

We can understand this a little better with an analogy. Actually, several analogies, each of which, I think, illuminates the others. They will get progressively closer to the real truth of the matter, but readers are cautioned that these are just analogies, and so may be misleading, particularly if overextended. Also, even the best one is not really very good. I am introducing them primarily to explain why I think M. Beaty's answer is obtuse.

- Consider a garden hose a hundred feet long. Suppose the hose is already full of water. You turn on the hose at one end, and water starts coming out the other end. Then you turn off the hose, and the water stops coming out. How long does it take for the water to stop coming out? It probably happens pretty darn fast, almost instantaneously.

This shows that the "signal" travels from one end of the hose to the other at a high speed—and here's the key idea—at a much higher speed than the speed of the water itself. If the hose is one square inch in cross-section, its total volume is about 5.2 gallons. So if you're getting two gallons per minute out of it, that means that water that enters the hose at the faucet end doesn't come out the nozzle end until 156 seconds later, which is pretty darn slow. But it certainly isn't the case that you have to wait 156 seconds for the water to stop coming out after you turn off the faucet. That's just how long it would take to empty the hose. And similarly, you don't have to wait that long for water to start coming out when you turn the faucet on, unless the hose was empty to begin with.

The water is like the electrons in the wire, and electricity is like that signal that travels from the faucet to the nozzle when you turn off the water. The electrons might be travelling pretty slowly, but the signal travels a lot faster.

- You're waiting in the check-in line at the airport. One of the clerks calls "Can I help who's next?" and the lady at the front of the line steps up to the counter. Then the next guy in line steps up to the front of the line. Then the next person steps up. Eventually, the last person in line steps up. You can imagine that there's a "hole" that opens up at the front of the line, and the hole travels backwards through the line to the back end.

How fast does the hole travel? Well, it depends. But one thing is sure: the speed at which the hole moves backward is not the same as the speed at which the people move forward. It might take the clerks another hour to process the sixty people in line. That does not mean that when they call "next", it will take an hour for the hole to move all the way to the back. In fact, the rate at which the hole moves is to a large extent independent of how fast the people in the line are moving forward.

The people in the line are like electrons. The place at which the people are actually moving—the hole—is the electricity itself.

- In the ocean, the waves start far out from shore, and then roll in toward the shore. But if you look at a cork bobbing on the waves, you see right away that even though the waves move toward the shore, the water is staying in pretty much the same place. The cork is not moving toward the shore; it's bobbing up and down, and it might well stay in the same place all day, bobbing up and down. It should be pretty clear that the speed with which the water and the cork are moving up and down is only distantly related to the speed with which the waves are coming in to shore. The water is like the electrons, and the wave is like the electricity.

- A bomb explodes on a hill, and some time later Ike on the next hill over hears the bang. This is because the exploding bomb compresses the air nearby, and then the compressed air expands, compressing the air a little way away, and that compressed air expands and compresses the air a little farther still, and so there's a wave of compression that spreads out from the bomb until eventually the air on the next hill is compressed and presses on Ike's eardrums. It's important to realize that no individual air molecule has traveled from hill A, where the bomb went off, to hill B, where Ike is standing. Each air molecule stays in pretty much the same place, moving back and forth a bit, like the water in the water waves or the people in the airport queue. Each person in the airport line stays in pretty much the same place, even though the "hole" moves all the way from the front of the line to the back. Similarly, the air molecules all stay in pretty much the same place even as the compression wave goes from hill A to hill B. When you speak to someone across the room, the sound travels to them at about 770 miles per hour, but they are not bowled over by hurricane-force winds.

(Thanks to Aristotle Pagaltzis for suggesting that I point this out.)

Here the air molecules are like the electrons in the wire, and the sound is like the electricity.
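The garden-hose arithmetic in the first analogy is easy to check. This is a small Python sketch; it assumes a U.S. liquid gallon of 231 cubic inches, and the function names are made up for illustration.

```python
CUBIC_INCHES_PER_GALLON = 231  # U.S. liquid gallon (assumption)


def hose_volume_gallons(length_ft, cross_section_sq_in):
    """Volume of water held by a full hose, in gallons."""
    return length_ft * 12 * cross_section_sq_in / CUBIC_INCHES_PER_GALLON


def transit_seconds(length_ft, cross_section_sq_in, gallons_per_minute):
    """Time for one particular bit of water to get from faucet to nozzle."""
    volume = hose_volume_gallons(length_ft, cross_section_sq_in)
    return volume / gallons_per_minute * 60


volume = hose_volume_gallons(100, 1)  # 100-foot hose, 1 square inch
delay = transit_seconds(100, 1, 2)    # at 2 gallons per minute
print(f"{volume:.1f} gallons, {delay:.0f} seconds")  # prints 5.2 gallons, 156 seconds
```

The 156 seconds measures how fast the water moves; the news that the faucet has been closed reaches the nozzle far sooner, which is the whole point of the analogy.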

I believe that when someone asks for the speed of electricity, what they are typically after is something like: When I flip the switch on the wall, how long before the light goes on? Or: the ALU in my computer emits some bits. How long before those bits get to the output bus? Or again: I send a telegraph message from Nova Scotia to Ireland on an undersea cable. How long before the message arrives in Ireland? Or again: computers A and B are on the same branch of an ethernet, 10 meters apart. How long before a packet emitted by A's ethernet hardware gets to B's ethernet hardware?

M. Beaty's answer about the speed of the electrons is totally useless as an answer to this kind of question. It's a really detailed, interesting answer to a question whose answer hardly anyone was interested in.

Here the analogy with the speed of sound really makes clear what is wrong with M. Beaty's answer. I set off a bomb on one hill. How long before Ike on the other hill a mile away hears the bang? Or, in short, "what is the speed of sound?" M. Beaty doesn't know what the speed of sound is, but he is glad to tell you about the speed at which the individual air molecules are moving back and forth, although this actually has very little to do with the speed of sound. He isn't going to tell you how long before the tsunami comes and sweeps away your village, but he has plenty to say about how fast the cork is bobbing up and down on the water.

That's all fine, but I don't think it's what people are looking for when they want the speed of electricity. So the individual charges in the wire are moving at 2.3 mm/s; who cares? As M. Beaty was at some pains to point out, the moving charges are not themselves the electricity, so why bring it up?

I wanted to end this article with a correct and pertinent answer to the question. For a while, I was afraid I was going to have to give up. At first, I just tried looking it up on the web. Many people said that the electricity travels at the speed of light, c. This seemed rather implausible to me, for various reasons. (That's another essay for another day.) And there was widespread disagreement about how fast it really was. For example:

But then I found this page on the characteristic impedance of coaxial cables and other wires, which seems rather more to the point than most of the pages I have found that purport to discuss the "speed of electricity" directly.

From this page, we learn that electrical engineers describe what I have been calling the "speed of electricity" by the "velocity factor" of the wire, which is the signal speed expressed as a fraction of c. And it is a simple function of the "dielectric constant", not of the wire material itself, but of the insulation between the two current-carrying parts of the wire! (In typical physics fashion, the dielectric "constant" is anything but; it depends on the material of which the insulation is made, the temperature, and who knows what other stuff they aren't telling me. Dielectric constants in the rest of the article are for substances at room temperature.) The function is simply:

$$V = {c\over\sqrt{\varepsilon_r}}$$

where V is the velocity of electricity in the wire, and $\varepsilon_r$ is the dielectric constant of the insulating material, relative to that of vacuum. Amazingly, the shape, material, and configuration of the wire don't come into it; for example, it doesn't matter if the wire is coaxial or twin parallel wires. (Remember the warning from the top of the page: I don't know what I am talking about.) Dielectric constants range from 1 up to infinity, so velocity ranges from c down to zero, as one would expect. This explains why we find so many inconsistent answers about the speed of electricity: it depends on a specific physical property of the wire. But we can consider some common examples.

Wikipedia says that the dielectric constant of rubber is about 7 (and this website specifies 6.7 for neoprene), so we would expect the speed of electricity in rubber-insulated wire to be about 1/√7 ≈ 0.38c. This is not quite accurate, because the wires are also insulated by air and by the rest of the universe. But it might be close to that. (Remember that warning!)
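The formula is a one-liner. Here is a hedged Python sketch applying it to the rubber and neoprene figures quoted above; c is the defined SI value, and the function name `signal_speed` is only illustrative. Like the rest of this section, it inherits the caveat that real insulation is never just one material.

```python
from math import sqrt

C_KM_PER_S = 299_792.458  # speed of light in vacuum, km/s


def signal_speed(eps_r):
    """V = c / sqrt(eps_r), in km/s, for insulation of relative permittivity eps_r."""
    return C_KM_PER_S / sqrt(eps_r)


for name, eps_r in [("rubber", 7.0), ("neoprene", 6.7)]:
    v = signal_speed(eps_r)
    print(f"{name}: {v / C_KM_PER_S:.2f}c, about {v:,.0f} km/s")
```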

The dielectric constant of air is very close to 1 (Wikipedia says 1.0005, and the other site gives 1.0548 for air at 100 atmospheres pressure), so if the wires are insulated only by air, the speed of electricity in the wires should be very close to the speed of light.

We can also work the calculation the other way: this web page says that signal propagation in an ethernet cable is about 0.66c, so we infer that the dielectric constant for the insulator is around 1/0.66² ≈ 2.3. We look up this number in a table of dielectric constants and guess from that that the insulator might be polyethylene or something like it. (This inference would be correct.)
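Working backwards is the same formula solved for the dielectric constant. The sketch below also answers the ethernet question posed earlier (two machines 10 meters apart), assuming the quoted 0.66c figure applies along the whole run; the helper names are hypothetical.

```python
C_M_PER_S = 299_792_458  # speed of light in vacuum, m/s


def dielectric_constant(velocity_factor):
    """Invert V = c / sqrt(eps_r): eps_r = 1 / (V/c)**2."""
    return 1 / velocity_factor ** 2


def propagation_delay_ns(distance_m, velocity_factor):
    """Time for a signal to cover distance_m, in nanoseconds."""
    return distance_m / (velocity_factor * C_M_PER_S) * 1e9


print(f"eps_r ~ {dielectric_constant(0.66):.2f}")  # about 2.3
print(f"10 m at 0.66c ~ {propagation_delay_ns(10, 0.66):.1f} ns")  # roughly 50 nanoseconds
```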

What's the lower limit on signal propagation in wires? I found a reference to a material with a dielectric constant of 2880. Such a material, used as an insulator between two wires, would result in a velocity of about 2% of c, which is still 5600 km/s. This page mentions cement pastes with "effective dielectric constants" up around 90,000, yielding an effective velocity of 1/300 c, or 1000 km/s.

Finally, I should add that the formula above only applies for direct currents. For varying currents, such as are typical in AC power lines, the dielectric constant apparently varies with frequency (some constant!) and the analysis is more complicated.

[ Addendum 20180904: Paul Martin suggests that I link to this useful page about dielectric constants. It includes an extensive table of the $\varepsilon_r$ for various polymers. Mostly they are between 2 and 3. ]

[Other articles in category /physics] permanent link

Sun, 26 Mar 2006

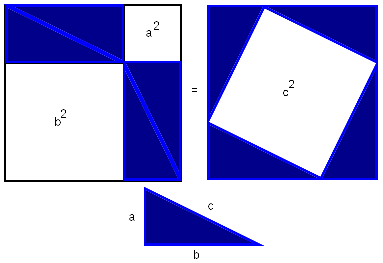

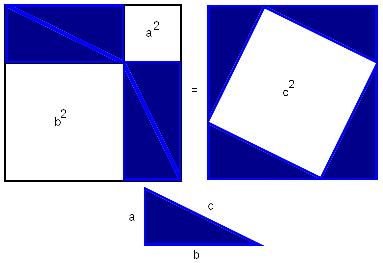

Approximations and the big hammer

In today's article about rational approximations to √3, I said that "basic algebra tells us that √(1-ε) ≈ 1 - ε/2 when ε is small".

A lot of people I know would be tempted to invoke calculus for this, or might even think that calculus was required. They see the phrase "when ε is small", or notice that the statement is one about limits, and immediately think of calculus.

Calculus is a powerful tool for producing all sorts of results like that one, but for that one in particular, it is a much bigger, heavier hammer than one needs. I think it's important to remember how much can be accomplished with more elementary methods.

The thing about √(1-ε) is simple. First-year algebra tells us that (1 - ε/2)² = 1 - ε + ε²/4. If ε is small, then ε²/4 is really small, so we won't lose much accuracy by disregarding it.

This gives us (1 - ε/2)² ≈ 1 - ε, or, equivalently, 1 - ε/2 ≈ √(1 - ε). Wasn't that simple?
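A quick numerical check, in Python, of how much accuracy the approximation actually loses. Since (1 - ε/2)² exceeds 1 - ε by exactly ε²/4, and the two square roots being compared are both close to 1 (so their sum is close to 2), the overshoot should come out near ε²/8. This sketch just prints the two side by side; the name `overshoot` is made up.

```python
from math import sqrt


def overshoot(eps):
    """How far 1 - eps/2 lies above the true value sqrt(1 - eps)."""
    return (1 - eps / 2) - sqrt(1 - eps)


for eps in (0.1, 0.01, 0.001):
    # (x - y)(x + y) = eps**2 / 4 with x + y close to 2, so expect about eps**2 / 8.
    print(f"eps={eps}: overshoot={overshoot(eps):.3e}, eps^2/8={eps * eps / 8:.3e}")
```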

[Other articles in category /math] permanent link

Sat, 25 Mar 2006

Archimedes and the square root of 3

In my recent discussion of why π might be about 3, I mentioned in passing that Archimedes, calculating the approximate value of π, used 265/153 as a rational approximation to √3. The sudden appearance of the fraction 265/153 is likely to make almost anyone say "huh?" Certainly it made me say that. And even Dr. Chuck Lindsey, who wrote up the detailed explanation of Archimedes' work from which I learned about the 265/153 in the first place, says:

> Throughout this proof, Archimedes uses several rational approximations to various square roots. Nowhere does he say how he got those approximations—they are simply stated without any explanation—so how he came up with some of these is anybody's guess.

It's a bit strange that Dr. Lindsey seems to find this mysterious, because I think there's only one way to do it, and it's really easy to find, so long as you ask the question "how would Archimedes go about calculating rational approximations to √3", rather than "where the heck did 265/153 come from?" It's like one of those pencil mazes they print in the Sunday kids' section of the newspaper: it looks complicated, but if you work it in the right direction, it's trivial.

Suppose you are a mathematician and you do not have a pocket calculator. You are sure to need some rational approximations to √3 somewhere along the line. So you should invest some time and effort into calculating some that you can store in the cupboard for when you need them. How can you do that?

You want to find pairs of integers a and b with a/b ≈ √3. Or, equivalently, you want a and b with a² ≈ 3b². But such pairs are easy to find: Simply make a list of perfect squares 1 4 9 16 25 36 49..., and their triples 3 12 27 48 75 108 147..., and look for numbers in one list that are close to numbers in the other list. 2² is close to 3·1², so √3 ≈ 2/1. 7² is close to 3·4², so √3 ≈ 7/4. 19² is close to 3·11², so √3 ≈ 19/11. 97² is close to 3·56², so √3 ≈ 97/56.
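The tabulation is mechanical enough to hand to a computer. This Python sketch does the same search, except that instead of writing out both lists it computes, for each b, the perfect squares just below and just above 3b², and keeps them if they are within 2. That tolerance is an assumption chosen to catch the pairs mentioned above, and `close_pairs` is a made-up name.

```python
from math import isqrt


def close_pairs(n, max_b, tolerance=2):
    """Pairs (a, b) with |a^2 - n*b^2| <= tolerance, for 1 <= b <= max_b.

    Meant for small tolerances: only the two squares nearest n*b^2 are checked.
    """
    pairs = []
    for b in range(1, max_b + 1):
        target = n * b * b
        below = isqrt(target)  # largest integer whose square is <= n*b^2
        for a in (below, below + 1):
            if a > 0 and abs(a * a - target) <= tolerance:
                pairs.append((a, b))
    return pairs


for a, b in close_pairs(3, 153):
    print(f"{a}^2 = {a * a}, 3*{b}^2 = {3 * b * b}, so sqrt(3) ~ {a}/{b}")
```

Carried out to b = 153, the last line printed is the pair 265 and 153.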

Even without the benefits of Hindu-Arabic numerals, this is not a very difficult or time-consuming calculation. You can carry out the tabulation to a couple of hundred entries in a few hours, and if you do you will find that 265² = 70225, and 3·153² is 70227, so that √3 ≈ 265/153.

Once you understand this, it's clear why Archimedes did not explain himself. By saying that √3 was approximately 265/153, he had exhausted the topic. By saying so, you are asserting no more and no less than that 3·153² ≈ 265²; if the reader is puzzled, all they have to do is spend a minute carrying out the multiplication to see that you are right. The only interesting point that remains is how you found those two integers in the first place, but that's not part of Archimedes' topic, and it's pretty obvious anyway.

[ Addendum 20090122: Dr. Lindsey was far from the only person to have been puzzled by this. More here. ]

In my article about the peculiarity of π, I briefly mentioned continued fractions, saying that if you truncate the continued fraction representation of a number, you get a rational number that is, in a certain sense, one of the best possible rational approximations to the original number. I'll eventually explain this in detail; in the meantime, I just want to point out that 265/153 is one of these best-possible approximations; the mathematics jargon is that 265/153 is one of the "convergents" of √3.
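For readers who want to see the convergents themselves, here is a Python sketch. It uses the standard integer recurrence for the continued fraction of a square root and the usual numerator/denominator recurrence for convergents; neither is described in this article (both are textbook material), and the function names are made up. For √3 the continued fraction is 1; 1, 2, 1, 2, ..., and the convergents are the same fractions that turned up above when tabulating squares.

```python
from fractions import Fraction
from math import isqrt


def sqrt_cf_terms(n, count):
    """First `count` continued-fraction terms of sqrt(n); n must not be a perfect square."""
    a0 = isqrt(n)
    m, d, a = 0, 1, a0
    terms = [a0]
    while len(terms) < count:
        # Standard recurrence for the continued fraction of a quadratic surd.
        m = d * a - m
        d = (n - m * m) // d
        a = (a0 + m) // d
        terms.append(a)
    return terms


def convergents(terms):
    """Successive truncations of the continued fraction [t0; t1, t2, ...]."""
    h_prev, h = 0, 1  # numerators h(-2), h(-1)
    k_prev, k = 1, 0  # denominators k(-2), k(-1)
    result = []
    for t in terms:
        h_prev, h = h, t * h + h_prev
        k_prev, k = k, t * k + k_prev
        result.append(Fraction(h, k))
    return result


terms = sqrt_cf_terms(3, 9)
print(terms)
print([str(c) for c in convergents(terms)])
```

The ninth convergent is 265/153.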

The approximation of √n by rationals leads one naturally to the so-called "Pell's equation", which asks for integer solutions to ax² - by² = ±1; these turn out to be closely related to the convergents of √(a/b). So even if you know nothing about continued fractions or convergents, you can find good approximations to surds.

Here's a method that I learned long ago from Patrick X. Gallagher of Columbia University. For concreteness, let's suppose we want an approximation to √3. We start by finding a solution of Pell's equation. As noted above, we can do this just by tabulating the squares. Deeper theory (involving the continued fractions again) guarantees that there is a solution. Pick one; let's say we have settled on 7 and 4, for which 7² ≈ 3·4².

Then write √3 = √(48/16) = √(49/16·48/49) = 7/4·√(48/49). 48/49 is close to 1, and basic algebra tells us that √(1-ε) ≈ 1 - ε/2 when ε is small. So √3 ≈ 7/4 · (1 - 1/98). 7/4 is 1.75, but since we are multiplying by (1 - 1/98), the true approximation is about 1% less than this: 7/4 · 97/98 = 679/392 ≈ 1.73214. That is very close, off by only about one part in 19,000. Considering the very small amount of work we put in, this is pretty darn good. For a better approximation, choose a larger solution to Pell's equation.

More generally, Gallagher's method for approximating √n is: Find integers a and b for which a² ± 1 = nb²; such integers are guaranteed to exist unless n is a perfect square. Then write

$$\sqrt n = \sqrt{\frac{nb^2}{b^2}} = \sqrt{\frac{a^2 \pm 1}{b^2}} = \sqrt{\frac{a^2}{b^2}\cdot\frac{a^2 \pm 1}{a^2}} = \frac ab\sqrt{\frac{a^2 \pm 1}{a^2}} = \frac ab\sqrt{1 \pm \frac1{a^2}} \approx \frac ab\left(1 \pm \frac1{2a^2}\right)$$
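Gallagher's recipe can be sketched with exact rational arithmetic. This is an illustrative Python version, not Gallagher's own presentation; the function name `gallagher` is hypothetical, and it simply checks that the inputs satisfy a² ± 1 = nb² before applying the last step of the derivation.

```python
from fractions import Fraction
from math import sqrt


def gallagher(n, a, b):
    """Approximate sqrt(n) from integers a, b satisfying a^2 +- 1 = n*b^2."""
    sign = n * b * b - a * a  # the "+-1" in a^2 +- 1 = n*b^2
    if sign not in (1, -1):
        raise ValueError("a and b must satisfy a^2 + 1 = n*b^2 or a^2 - 1 = n*b^2")
    # sqrt(n) = a/b * sqrt(1 +- 1/a^2), approximately a/b * (1 +- 1/(2a^2))
    return Fraction(a, b) * (1 + Fraction(sign, 2 * a * a))


approx = gallagher(3, 7, 4)  # 7/4 * (1 - 1/98) = 679/392, which reduces to 97/56
print(approx, float(approx), abs(float(approx) - sqrt(3)))
```

Feeding in the smaller solution a = 2, b = 1 gives 7/4, and feeding in a = 97, b = 56 gives an approximation good to about nine decimal places.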

Who was Pell? Pell was nobody in particular, and "Pell's equation" is a complete misnomer. The problem was (in Europe) first studied and solved by Lord William Brouncker, who, among other things, was the founder and the first president of the Royal Society. The name "Pell's equation" was attached to the problem by Leonhard Euler, who got Pell and Brouncker confused—Pell wrote up and published an account of the work of Brouncker and John Wallis on the problem.

G.H. Hardy says that even in mathematics, fame and history are sometimes capricious, and gives the example of Rolle, who "figures in the textbooks of elementary calculus as if he had been a mathematician like Newton." Other examples abound: Kuratowski published the theorem that is now known as Zorn's Lemma in 1923, and Zorn published a different (although related) theorem in 1935. Abel's theorem was published by Ruffini in 1799, and by Abel in 1824. Pell's equation itself was first solved by the Indian mathematician Brahmagupta around 628. But Zorn did discover, prove and publish Zorn's lemma, Abel did discover, prove and publish Abel's theorem, and Brouncker did discover, prove and publish his solution to Pell's equation. Their only failings are to have been independently anticipated in their work. Pell, in contrast, discovered nothing about the equation that carries his name. Hardy might have mentioned Brouncker, whose significant contribution to number theory was attributed to someone else, entirely in error. I know of no more striking mathematical example of the capriciousness of fame and history.

[Other articles in category /math] permanent link

Mon, 20 Mar 2006

The 20 most important tools

Forbes magazine recently ran an article on The 20 Most Important Tools. I always groan when I hear that some big magazine has done something like that, because I know what kind of dumbass mistake they are going to make: they are going to put Post-It notes at #14. The Forbes folks did not make this mistake. None of their 20 items were complete losers.

In fact, I think they did a pretty good job. They assembled a panel of experts, including Don Norman and Henry Petroski; they also polled their readers and their senior editors. The final list isn't the one I would have written, but I don't claim that it's worse than one I would have written.

Criticizing such a list is easy—too easy. To make the rules fair, it's not enough to identify items that I think should have been included. I must identify items that I think nearly everyone would agree should have been included.

Unfortunately, I think there are several of these.

First, to the good points of the list. It doesn't contain any major clinkers. And it does cover many vitally important tools. It provokes thought, which is never a bad thing. It was assembled thoughtfully, so one is not tempted to dismiss any item without first carefully considering why it is in there.

Here's the Forbes list:

- The Knife

- The Abacus

- The Compass

- The Pencil

- The Harness

- The Scythe

- The Rifle

- The Sword

- Eyeglasses

- The Saw

- The Watch

- The Lathe

- The Needle

- The Candle

- The Scale

- The Pot

- The Telescope

- The Level

- The Fish Hook

- The Chisel

Categories

The Forbes items are also allowed to stand for categories. For example, "the Rifle" really stands for portable firearms, including muskets and such. "The pencil" includes pens and writing brushes. (Why put "the pencil" and not "the pen"? I imagine Henry Petroski arguing about it until everyone else got tired and gave up.) The spoon, had they included it, would have stood for eating utensils in general.

But here is my first quibble: it's not really clear why some items stood for whole groups, and others didn't. The explanatory material points out that five other items on the list are special cases of the knife: the scythe, lathe, saw, chisel, and sword. The inclusion of the knife as #1 on the list is, I think, completely inarguable. The power and the antiquity of the knife would put it in the top twenty already.

Consider its unmatched versatility as well and you just push it up into first place, and beyond. Make a big knife, and you have a machete; bigger still, and you have a sword. Put a knife on the end of a stick and you have an axe; put it on a longer stick and you have a spear. Bend a knife into a circle and you have a sickle; make a bigger sickle and you have a scythe. Put two knives on a hinge or a spring and you have shears. Any of these could be argued to be in the top twenty. When you consider that all these tools are minor variations on the same device, you inevitably come to the conclusion that the knife is a tool that, like Babe Ruth among baseball players, is ridiculously overqualified even for inclusion with the greatest.

But the Forbes people gave the sword a separate listing (#8), and a sword is just a big knife. It serves the same function as a knife and it serves it in the same mechanical way. So it's hard to understand why the Forbes people listed them separately. If you're going to list the sword separately, how can you omit the axe or the spear? Grouping the items is a good idea, because otherwise the list starts to look like the twenty most important ways to use a knife. But I would have argued for listing the sword, axe, chisel, and scythe under the heading of "knife".

I find the other knifelike devices less objectionable. The saw is fundamentally different from a knife, because it is made and used differently, and operates in a different way: it is many tiny knives all working in the same direction. And the lathe is not a special case of the knife, because the essential feature of the lathe is not the sharp part but the spinning part. (I wouldn't consider the lathe a small, portable implement, but more about that below.)

Pounding

I said that I was required to identify items that everyone would agree are major omissions. I have two such criticisms. One is that the list has room for six cutting tools, but no pounding tools. Where is the club? Where is the hammer? I could write a whole article about the absurdity of omitting the hammer. It's like leaving Abraham Lincoln off of a list of the twenty greatest U.S. presidents. It's like leaving Albert Einstein off of a list of the twenty greatest scientists. It's like leaving Honus Wagner off of a list of the twenty greatest baseball players.

No, I take it back. It's not like any of those things. Those things should all be described as analogous to leaving the hammer off the list of the twenty most important tools, not the other way around.

Was the hammer omitted because it's not a simple, portable physical implement? Clearly not.

Was the hammer omitted because it's an abstract fundamental machine, like the lever? Is a hammer really just a lever? Not unless a knife is just a wedge.

Is the hammer subsumed in one of the other items? I can't see any candidates. None of the other items is for pounding.

Did the Forbes panel just forget about it? That would have been weird enough. Two thousand Forbes readers, ten editors, and Henry Petroski all forgot about the hammer? Impossible. If you stop someone on the street and ask them to name a tool, odds are that they will say "hammer". And how can you make a list of the twenty most important tools, include the chisel as #20, and omit the hammer, without which the chisel is completely useless?

The article says:

> We eventually came up with a list of more than 100 candidate tools. There was a great deal of overlap, so we collapsed similar items into a single category, and chose one tool to represent them. That left us with a final list of 33 items, each one a part of a particular class or style of tool; for instance, the spoon is representative of all eating utensils.

Perhaps the hammer was one of the 13 classes of tools that didn't make the cut? The writer of the article, David M. Ewalt, kindly provided me with a complete list of the 33 classes, including the also-rans. The hammer was not with the also-rans; I'm not sure if I find that more or less disturbing.

Also-rans

Well, enough about hammers. The 13 classes that did not make the cut were:

- spoon

- longbow

- broom

- paper clip

- computer mouse

- floppy disk

- syringe

- toothbrush

- barometer

- corkscrew

- gas chromatograph

- condom

- remote control

"Gas chromatograph" seems to be someone's attempt to steer the list away from ancient inventions and to include some modern tools on the list. This is a worthy purpose. But I wish that they had thought of a better representative than the gas chromatograph. It seems to me that most tools of modern invention serve only very specialized purposes. The gas chromatograph is not an exception. I've never used a gas chromatograph. I don't think I know anyone who has. I've never seen a gas chromatograph. I might well go to the grave without using one. How is it possible that the gas chromatograph is one of the 33 most important tools of all time, beating out the hammer?

With "syringe", I imagine the authors were thinking of the hypodermic needle, but maybe they really were thinking of the syringe in general, which would include the meat syringe, the vacuum pipette, and other similar devices. If the latter, I have no serious complaint; I just wanted to point out the possible misunderstanding.

"Paper clip" is just the kind of thing I was afraid would appear. The paper clip isn't one of the top hundred most important tools, perhaps not even one of the top thousand. If the hammer were annihilated, civilization would collapse within twenty-four hours. If the paper clip were annihilated, we would shrug, we would go back to using pins, staples, and ribbons to bind our papers, and life would go on. If the pin isn't qualified for the list, the paper clip isn't even close.

The inclusion of the corkscrew, in a list of essential tools that omits both bottles and corks, left me speechless, reduced to incoherent spluttering. The best I could do was mutter "insane".

I don't know exactly what was intended by "remote control", but it doesn't satisfy the criteria. The idea of remote control is certainly important, but this is not a list of important ideas or important functions but important tools. If there were a truly universal remote control that I could carry around with me everywhere and use to open doors, extinguish lights, summon vehicles, and so on, I might agree. But each particular remote control is too specialized to be of any major value.

Putting the computer mouse on the list of the twenty (or even 33) most important tools is like putting the pastrami on rye on the list of the twenty most important foods. Tasty, yes. Important? Surely not. In the same class as the soybean? Absurd.

The floppy disk is already obsolete.

Other comparisons

The telescope

Returning to the main list, eyeglasses and telescopes are both special cases of the lens, but their fundamentally different uses seem to me to clearly qualify them for separate listing; fair enough. I'm not sure I would have included the telescope, though. Is the telescope the most useful and important object of its type? Maybe I'm missing something, but it seems to me that most of the uses of the telescope are either scientific or military. The military value of the telescope is not in the same class as the value of the sword or the rifle. The scientific value of the telescope, however, is enormous. So it's on its scientific credentials that the telescope goes into the list, if at all.

But the telescope has a cousin, the microscope. Is the telescope's scientific value comparable to that of the microscope? I would argue that it is not. Certainly the microscope is much more widely used, in almost any branch of science you could name except astronomy. The telescope enabled the discovery that the earth is not the center of the universe, a discovery of vast philosophical importance. Did the microscope lead to fundamental discoveries of equal importance? I would argue that the discovery of microorganisms was at least as important in every way.

Arguing that "X is in the list, so Y should be too" is a slippery slope that leads to a really fat list in which each mistaken inclusion justifies a dozen more. I won't make that argument in this article. But the reverse argument, that "Y isn't in the list so X shouldn't be either", is much safer. If the microscope isn't important enough to make the list, then neither is the telescope.

The level

This is the only tool on the list that I thought was a serious mistake, not quite on the order of the Post-It note, but silly in the same way, if to a much lesser degree. It is another item of the type exemplified by the telescope, an item that is on the list, but whose more useful and important cousin is omitted. Why the level and not the plumb line? The plumb line does everything a level does, and more. The level tells you when things are horizontal; the plumb line tells you when they are horizontal or vertical, depending on what you need. The plumb line is simpler and older. The plumb line finds the point or surface B that is directly below point A; the level does nothing of the kind.

I'm boggled; I don't know what the level is doing there. But the fact that my most serious complaint about any particular item is with item #18 shows how well-done I think the list is overall.

Sewing

The needle made the list at #13, but thread did not. A lot of sewing things missed out. Most of these, I think, are not serious omissions. The spinning wheel, for example: hand-spinning works adequately, although more slowly. The thimble? Definitely not in the top twenty. The button, with frogs and other clasps included? Maybe, maybe not. But one omission is serious, and must be considered seriously: the loom. I suppose it was eliminated for being too big; there can be no other excuse. But the lathe is #12, and the lathe is not normally small or portable.

There are small, portable lathes. But there are also small, portable looms, hand looms, and so on. I think the loom has a better claim to being a tool in this sense than a lathe does. Cloth is surely one of the ten most important technological inventions of all time, up there with the knife, the gun, and the pot. Cloth does not belong on the Forbes list, because it is not a tool. But omission of the loom surprises me.

Grinding

Similarly, the omission of the windmill is quite understandable. But what about the quern? Flour is surely a technology of the first importance. Grain can be ground into flour without a windmill, and in many places was or still is. This morning I planned to write that it must have been omitted because it is hardly used any more, but then I thought a little harder and realized that I own not one but two devices that are essentially querns. (One for grinding coffee beans, the other for peppercorns.) I wouldn't want to argue that the quern is in the top twenty, but I think it's worth considering.


Male bias?

In fact, the list seems to omit a lot of important handicraft and home items that have fallen into disfavor. Male bias, perhaps? I briefly considered writing this article with the male-bias angle as the main point, but it's not my style. The authors might learn something from consideration of this question anyway.

The pot made the list, but not the potter's wheel. An important omission, perhaps? I think not; a good argument could be made that the potter's wheel was only an incremental improvement, not suitable for the top twenty.

I do wonder what happened to rope; here I could only imagine that they decided it wasn't a "tool". (M. Ewalt says that he is at a loss to explain the omission of rope.) And where's the basket? Here I can't imagine what the argument was.

Carrying

With the mention of baskets, I can't put off any longer my biggest grievance about the list: Where is the bag?

The bag! Where is the bag?

I will say it again: Where is the bag?

Is the bag a small, portable implement? Yes, almost by definition. "Stop for a minute and think about what you've done today--every job you've accomplished, every task you've completed." begins the Forbes article. Did I have my bag with me? I did indeed. I started the day by opening up a bag of grapes to eat for breakfast. Then I made my lunch and put it in a bag, which I put into another, larger bag with my pens and work papers. Then I carried it all to work on my bicycle. Without the bag, I couldn't have carried these things to work. Could I have gotten that stuff to work without a bag? No, I would not have had my hands free to steer the bicycle. What if I had walked, instead of riding? Still probably not; I would have dropped all the stuff.

The bag, guys! Which of you comes to work in the morning without a bag? I just polled the folks in my office; thirteen of fourteen brought bags to work today. Which of you carries your groceries home from the store without a bag? Paleolithic people carried their food in bags too. Did you use a lathe today? No? A telescope? No? A level? A fish hook? A candle? Did you use a bag today? I bet you did. Where is the bag?

The only container on the Forbes list is the pot. Could the bag be considered to be included under the pot? M. Ewalt says that it was, and it was omitted for that reason. I believe this is a serious error. The bag is fundamentally different from the pot. I can sum up the difference in one sentence: the pot is for storage; the bag is for transportation.

Each one has several advantages not possessed by the other. Unlike the pot, the bag is lightweight and easy to carry; pots are bulky. You can sling the bag over your shoulder. The bag is much more accommodating of funny-shaped objects: It's much easier to put a hacked-up animal or a heterogeneous bunch of random stuff into a bag than into a pot. My bag today contains some pads of paper, a package of crackers, another bag of pens, a toy octopus, and a bag of potato chips. None of this stuff would fit well into a pot. The bag collapses when it's empty; the pot doesn't.

The pot has several big advantages over the bag:

- The pot is rigid. It tends to protect its contents more than a bag would, both from thumping and banging, and from rodents, which can gnaw through bags but not through pots.

- The pot is impermeable. This means that it is easy to clean, which is an important health and safety issue. Solids, such as grain or beans, are protected from damp when stored in pots, but not in bags. And the pot, being impermeable, can be used to store liquids such as food and lighting oils; making a bag for storing liquids is possible but nontrivial. (Sometimes permeability is an advantage; we store dirty laundry in bags and baskets, never pots.)

- The pot is fireproof, and so can be used for cooking. Being both fireproof and impermeable, the pot enables the preparation of soup, which increases the supply of available food and the energy that can be extracted from the food.

I have no objection to Forbes' inclusion of the pot on their list, none at all. In fact, I think that it should be put higher than #16. But the bag needs to be listed too.

Other possible omissions

After the hammer, the bag, and rope, I have no more items that I think are so inarguable that they are sure substitutes for items in Forbes' list. There are items I think are probably better choices, but that is arguable, and, as I explained at the beginning of the article, I don't want to take cheap shots. Any list of the 20 most important tools will leave out a lot of important tools; switching around which tools are omitted is no guarantee of an objectively better list. For discussion purposes only, I'll mention tongs (including pliers), baskets, and shovels. The items on Forbes' near-miss list that I would want to consider are the bow, the broom, and the spoon.

Revised list

Here, then, is my revised list. It's still not the list I would have made up from scratch, but I wanted to try to retain as much of the Forbes list as I could, because I think the items at the bottom are judgement calls, and there is plenty of room for reasonable disagreement about any of them.

Linguists found a while ago that if you ask subjects to judge whether certain utterances are grammatically correct or not, they have some difficulty doing it, and their answers do not show a lot of agreement with other subjects'. But if you allow them an "I'm not sure" category, they have a lot less difficulty, and you do see a lot of agreement about which utterances people are unsure about. I think a similar method may be warranted here. Instead of the tools that are in or out of the list, I'm going to make two lists: tools that I'm sure are in the list, and tools that I'm not sure are out of the list.

The Big Eight, tools that I think you'd have to be crazy to omit, are:

- Knife (includes sword, axe, scythe, chisel, spear, shears, scissors)

- Hammer (includes club, mace, sledgehammer, mallet)

- Bag (includes wineskin, water skin, leather bottle, purse)

- Pot (includes plate, bowl, pitcher, rigid bottle, mortar)

- Rope (includes string and thread)

- Harness (includes collar and yoke)

- Pen (includes pencil, writing brush, etc.)

- Gun (includes rifle and musket, but not cannon)

The other twelve, to round out the twenty, are:

- Compass

- Plumb line (includes level)

- Sewing needle

- Candle (includes lamp, lantern, torch)

- Ladder

- Eyeglasses (includes contact lenses)

- Saw

- Balance

- Fishhook

- Lathe

- Abacus (includes counting board)

- Microscope

The other adjustments are minor: The pot got a big promotion, from #16 to #4. The pencil is represented by the pen, instead of the other way around. The rifle is teamed with the musket as "the gun". The telescope is replaced with the microscope. The level is replaced with the plumb line. The scale is replaced by the balance, which is more a terminological difference than anything else.

The omission of mine that worries me the most is the basket. I left it out because although it didn't seem very much like either the pot or the bag, it did seem too much like both of them. I worry about omitting the pin, but I'm not sure it qualifies as a "tool".

If I were to get another 13 slots, I might include:

- Basket

- Broom

- Horn

- Pry bar

- Quern

- Radio (Walkie-talkies)

- Scraper

- Shovel

- Spoon

- Tape

- Tongs

- Touchstone

- Welding torch

[ Addendum 20190610: Miles Gould points out that the bag may in fact have been essential to the evolution of human culture. This blog post by Scott Alexander, reviewing The Secret Of Our Success (Joseph Henrich, Princeton University Press, 2015) says, in part:

Humans are persistence hunters: they cannot run as fast as gazelles, but they can keep running for longer than gazelles (or almost anything else). Why did we evolve into that niche? The secret is our ability to carry water. Every hunter-gatherer culture has invented its own water-carrying techniques, usually some kind of waterskin. This allowed humans to switch to perspiration-based cooling systems, which allowed them to run as long as they want.]

[Other articles in category /tech] permanent link

Blosxom sucks

Several people were upset at my recent discussion of Blosxom. Specifically, I said that it sucked.

Strange to say, I meant this primarily as a compliment. I am going to explain myself here.

Before I start, I want to set your expectations appropriately. Bill James tells a story about how he answered the question "What is Sparky Anderson's strongest point as a [baseball team] manager?" with "His won-lost record". James was surprised to discover that when people read this with the preconceived idea that he disliked Anderson's management, they took "his record" as a disparagement, when he meant it as a compliment.

I hope that doesn't happen here. This article is a long compliment to Blosxom. It contains no disparagement of Blosxom whatsoever. I have technical criticisms of Blosxom; I left them out of this article to avoid confusion. So if I seem at any point to be saying something negative about Blosxom, please read it again, because that's not the way I meant it.

As I said in the original article, I made some effort to seek out the smallest, simplest, lightest-weight blogging software I could. I found Blosxom. It far surpassed my expectations. The manual is about three pages long. It was completely trivial to set up. Before I started looking, I hypothesized that someone must have written blog software where all you do is drop a text file in a directory somewhere and it magically shows up on the blog. That was exactly what Blosxom turned out to be. When I said it was a smashing success, I was not being sarcastic. It was a smashing success.

The success doesn't end there. As I anticipated, I wasn't satisfied for long with Blosxom's basic feature set. Its plugin interface let me add several features without tinkering with the base code. Most of these have to do with generating a staging area where I could view posts before they were generally available to the rest of the world. The first of these simply suppressed all articles whose filenames contained test. This required about six lines of code, and took about fifteen minutes to implement, most of which was time spent reading the plugin manual. My instance of Blosxom is now running nine plugins, of which I wrote eight; the ninth generates the atom-format syndication file.
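For the curious, here is roughly what such a filter plugin can look like. This is a minimal sketch, not my actual plugin: it assumes the Blosxom 2 convention, as I understand it, that a plugin's start method returns true to enable the plugin, and that its filter method receives a reference to a hash mapping each article's path to its modification time. The package name notest and the sample paths are made up; the main package at the bottom stands in for Blosxom so the sketch runs on its own.

```perl
use strict;
use warnings;

# Hypothetical plugin "notest": hides every article whose file name
# contains "test", so that drafts can be looked at privately.
package notest;

sub start { 1 }    # a true value enables the plugin

sub filter {
    my ($pkg, $files_ref) = @_;     # assumed shape: { path => mtime, ... }
    # keys() builds the whole list first, so deleting inside the loop is safe
    for my $path (keys %$files_ref) {
        (my $name = $path) =~ s{.*/}{};          # look at the file name only
        delete $files_ref->{$path} if $name =~ /test/;
    }
    return 1;
}

package main;

# Stand-in for the table Blosxom builds while scanning its data directory.
my %files = (
    '/blog/tech/blosxom-sucks.blog' => 1142000000,
    '/blog/tech/menu-test.blog'     => 1142100000,
);
notest->filter(\%files);
print "$_\n" for sort keys %files;   # prints /blog/tech/blosxom-sucks.blog
```

Because the plugin edits the hash in place, later plugins, and Blosxom itself, simply never see the suppressed articles.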

The success of Blosxom continued. As I anticipated, I eventually reached a point at which the plugin interface was insufficient for what I wanted, and I had to start hacking on the base code. The base code is under 300 lines. Hacking on something that small is easy and rewarding.

I fully expect that the success of Blosxom will continue. Back in January, when I set it up, I foresaw that I would start with the basic features, that later I would need to write some plugins, and still later I would need to start hacking on the core. I also foresaw that the next stage would be that I would need to throw the whole thing away and rewrite it from scratch to work the way I wanted to. I'm almost at that final stage. And when I get there, I won't have to throw away my plugins. Blosxom is so small and simple that I'll be able to write a Blosxom-compatible replacement for Blosxom. Even in death, the glory of Blosxom will live on.

So what did I mean by saying that Blosxom sucks? I will explain.

Following Richard Gabriel, I think there are essentially two ways to do a software design:

- You can try to do the Right Thing, where the Right Thing is very complex, subtle, and feature-complete.

- You can take the "Worse-is-Better" approach, and try to cover most of the main features, more or less, while keeping the implementation as simple as possible.

The trouble is that the Right Thing is very difficult to achieve, and very complex and subtle, so it is rarely achieved. Instead, you get a complex and subtle system that is complexly and subtly screwed up. Often, the designer shoots for something complex, subtle, and correct, and instead ends up with a big pile of crap. I could cite examples here, but I think I've offended enough people for one day.

In contrast, with the "Worse-is-Better" approach, you do not try to do the Right Thing. You try to do the Good Enough Thing. You bang out something short and simple that seems like it might do the job, run it up the flagpole, and see if it flies. If not, you bang on it some more.

Whereas the Right Thing approach is hard to get correct, the Worse-is-Better approach is impossible to get correct. But it is very often a win, because it is much easier to achieve "good enough" than it is to achieve the Right Thing. You expend less effort in doing so, and the resulting system is often simple and easy to manage.

If you screw up with the Worse-is-Better approach, the end user might be able to fix the problem, because the system you have built is small and simple. It is hard to screw it up so badly that you end with nothing but a pile of crap. But even if you do, it will be a much smaller pile of crap than if you had tried to construct the Right Thing. I would very much prefer to clean up a small pile of crap than a big one.

Richard Gabriel isn't sure whether Worse-is-Better is better or worse than The Right Thing. As a philosophical question, I'm not sure either. But when I write software, I nearly always go for Worse is Better, for the usual reasons, which you might infer from the preceding discussion. And when I go looking for other people's software, I look for Worse is Better, because so very few people can carry out the Right Thing, and when they try, they usually end up with a big pile of crap.

When I went looking for blog software back in January, I was conscious that I was looking for the Worse-is-Better software to an even greater degree than usual. In addition to all the reasons I have given above, I was acutely conscious of the fact that I didn't really know what I wanted the software to do. And if you don't know what you want, the Right Thing is the Wrong Thing, because you are not going to understand why it is the Right Thing. You need some experience to see the point of all the complexity and subtlety of the Right Thing, and that was experience I knew I did not have. If you are as ignorant as I was, your best bet is to get some experience with the simplest possible thing, and re-evaluate your requirements later on.

When I found Blosxom, I was delighted, because it seemed clear to me that it was Worse-is-Better through and through. And my experience has confirmed that. Blosxom is a triumph of Worse-is-Better. I think it could serve as a textbook example.

Here is an example of a way in which Blosxom subscribes to the Worse-is-Better philosophy. A program that handles plugins seems to need some way to let the plugins negotiate amongst themselves about which ones will run and in what order. Consider Blosxom's filter callback. What if one plugin wants to force Blosxom to run its filter callback before the other plugins'? What if one plugin wants to stop all the subsequent plugins from applying their filters? What if one plugin filters out an article, but a later plugin wants to rescue it from the trash heap? All these are interesting and complex questions. The Apache module interface is an interesting and complex answer to some of these questions, an attempt to do The Right Thing. (A largely successful attempt, I should add.)

Faced with these interesting and complex questions, Blosxom sticks its head in the sand, and I say that without any intent to disparage Blosxom. The Blosxom answer is:

- Plugins run in alphabetic order.

- Each one gets its crack at a data structure that contains the articles.

- When all the plugins have run, Blosxom displays whatever's left in the data structure.
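The whole protocol fits in a few lines. Here is a toy model of it, assuming nothing about Blosxom's real source beyond the three rules above; the plugin names and article keys are hypothetical. Note how the "rescue" question gets settled: not by negotiation, but by naming the rescuing plugin so that it sorts later.

```perl
use strict;
use warnings;

# Toy model of the three rules, not Blosxom's own code.
my %articles = map { $_ => 1 } qw(bag hammer draft-test quern);

# Each "plugin" is just a callback that gets the shared hash of articles.
my %plugins = (
    'b_rescue' => sub { $_[0]{'draft-test'} = 1 },   # puts an article back
    'a_notest' => sub { delete $_[0]{$_} for grep { /test/ } keys %{ $_[0] } },
);

# Rule 1: plugins run in alphabetical order of their names.
for my $name (sort keys %plugins) {
    # Rule 2: each one may add, delete, or alter entries; no negotiation.
    $plugins{$name}->(\%articles);
}

# Rule 3: whatever is left gets displayed.
my @shown = sort keys %articles;
print join(", ", @shown), "\n";   # bag, draft-test, hammer, quern
```

a_notest throws the draft out, and b_rescue, which sorts after it, drags the draft back in. Rename b_rescue to something that sorts first and the draft stays gone.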

So Blosxom is a masterpiece of Worse-is-Better. I am a Worse-is-Better kind of guy anyway, and Worse-is-Better was exactly what I was looking for in this case, so I was very pleased with Blosxom, and I still am. I got more and better Worse-is-Better for my software dollar than usual.

When I stuck a "BLOSXOM SUX" icon on my blog pages, I was trying to express (in 80×15 pixels) my evaluation of Blosxom as a tremendously successful Worse-is-Better design. It might be the Wrong Thing instead of the Right Thing, but it was the Right Wrong Thing, and I'd sure rather have the Right Wrong Thing than the Wrong Right Thing.

And when I said that "Blosxom really sucks... in the best possible way", that's what I meant. In a world full of bloated, grossly over-featurized software that still doesn't do quite what you want, Blosxom is a spectacular counterexample. It's a slim, compact piece of software that doesn't do quite what you want---but because it is slim and compact, you can scratch your head over it for a couple of minutes, take out the hammer and tongs, and get it adjusted the way you want.

Thanks, Rael. I think you did a hell of a job. I am truly sorry that was not clear from my earlier article.

[Other articles in category /oops] permanent link

Sat, 18 Mar 2006

Mysteries of color perception

Color perception is incredibly complicated, and almost the only generalization of it that can be made is "everything you think you know is probably wrong." The color wheel, for example, is totally going to flummox the aliens, when they arrive. "What the heck do you mean, 'violet is a mixture of red and blue'? Violet is nothing like red. Violet is less like red than any other color except ultraviolet! Red is even less like violet than it is like blue, for heaven's sake. What is wrong with you people?"

Well, what is wrong with us is that, because of an engineering oddity in our color sensation system, we think red and violet look somewhat similar, and more alike than red and green.

But anyway, my real point was to note that the colors in  look a lot different against a gray background than they do against the blue background in the bar on the left. People who read this blog through an aggregator are just going to have to click through the link for once.


[Other articles in category /aliens] permanent link

Who farted?

The software that generates these web pages from my blog entries is Blosxom. When I decided I wanted to try blogging, I did a web search for something like "simplest possible blogging software", and found a page that discussed Bryar. So I located the Bryar manual. The first sentence in the Bryar manual says "Bryar is a piece of blog production software, similar in style to (but considerably more complex than) Rael Dornfest's blosxom." So I dropped Bryar and got Blosxom. This was a smashing success: It was running within ten minutes. Now, two months later, I'm thinking about moving everything to Bryar, because Blosxom really sucks.

But for me it was a huge success, and I probably wouldn't have started a blog if I hadn't found it. It sucks in the best possible way: because it's drastically under-designed and excessively lightweight. That is much better than sucking because it is drastically over-designed and excessively heavyweight. It also sucks in some less ambivalent ways, but I'm not here (today) to criticize Blosxom, so let's move on.


Until the big move to Bryar, I've been writing plugins for Blosxom. When I was shopping for blog software, one of my requirements was that the thing be small and simple enough to be hackable, since I was sure I would inevitably want to do something that couldn't be accomplished any other way. With Blosxom, that happened a lot sooner than it should have (the plugin interface is inadequate), but the fallback position of hacking the base code worked quite well, because it is fairly small and fairly simple.

Most recently, I had to hack the Blosxom core to get the menu you can see on the left side of the page, with the titles of the recent posts. This should have been possible with a plugin. You need a callback (story) in the plugin that is invoked once per article, to accumulate a list of title-URL pairs, and then you need another callback (stories_done) that is invoked once after all the articles have been scanned, to generate the menu and set up a template variable for insertion into the template. Then, when Blosxom fills the template, it can insert the menu that was set up by the plugin.

With stock Blosxom, however, this is impossible. The first problem you encounter is that there is no stories_done callback. This is only a minor problem, because you can just have a global variable that holds the complete menu so far at all times; each time story is invoked, it can throw away the incomplete menu that it generated last time and replace it with a revised version. After the final call to story, the complete menu really is complete:

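```perl
sub story {
    my ($pkg, $path, $filename, $story_ref, $title_ref, $body_ref,
        $dir, $date) = @_;

    # Skip calls where $dir or $date is set, and non-HTML flavours.
    return unless $dir eq "" && $date eq "";
    return unless $blosxom::flavour eq "html";

    my $link = qq{<a href="$blosxom::url$path/$filename.$blosxom::flavour">} .
               qq{<span class=menuitem>$$title_ref</span></a>};

    # @menu and $menu are package globals. The menu string is rebuilt
    # from scratch on every call, so after the final call it is complete.
    push @menu, $link;
    $menu = join " / ", @menu;
}
```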
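For comparison, here is a sketch of the cleaner design described earlier, in which story only records a title-URL pair and a stories_done callback builds the menu once. Since stock Blosxom has no stories_done, the driver at the bottom stands in for a patched core. The package name recentmenu and the simplified story arguments are assumptions for this sketch, not Blosxom's real calling convention.

```perl
use strict;
use warnings;

# Hypothetical plugin "recentmenu". stories_done() is NOT part of stock
# Blosxom; it is the callback the core would have to be patched to call.
package recentmenu;

our @pairs;        # [url, title] pairs collected by story()
our $menu = "";    # the template variable the head template would use

sub start { @pairs = (); $menu = ""; 1 }

sub story {
    my ($pkg, $url, $title) = @_;   # simplified arguments for this sketch
    push @pairs, [$url, $title];    # cheap: nothing is joined per article
    return 1;
}

sub stories_done {
    # Build the menu exactly once, after every article has been seen.
    $menu = join " / ",
        map { qq{<a href="$_->[0]"><span class=menuitem>$_->[1]</span></a>} }
        @pairs;
    return 1;
}

package main;

# Toy driver standing in for a patched Blosxom core.
recentmenu->start;
recentmenu->story("/tech/bag.html",   "Where is the bag?");
recentmenu->story("/oops/sucks.html", "Blosxom sucks");
recentmenu->stories_done;
print "$recentmenu::menu\n";
```

The join happens once instead of once per article, which is the CPU time the global-variable trick throws away.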

That strategy is wasteful of CPU time, but not enough to notice. You could also fix it by hacking the base code to add a stories_done callback, but that strategy is wasteful of programmer time.

But it turns out that this doesn't work, and I don't think there is a reasonable way to get what I wanted without hacking the base code. (Blosxom being what it is, hacking the base code is a reasonable solution.) This is because of a really bad architecture decision inside of Blosxom. The page is made up of three independent components, called the "head", the "body", and the "foot". There are separate templates for each of these. And when Blosxom generates a page, it does so in this sequence:

- Fill "head" template and append result to output

- Process articles

- Fill "body" template and append result to output

- Fill "foot" template and append result to output

So I had to go in and hack the Blosxom core to make it fractionally less spiky:

- Process articles

- Fill "head" template and append result to output

- Fill "body" template and append result to output

- Fill "foot" template and append result to output


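Here is a toy model of why the order matters. The names fill, %template, and process_articles are hypothetical stand-ins, not Blosxom's code: scanning the articles is what produces the menu, so a head template filled before the scan can only see an empty menu. (In real Blosxom the article scan also appended straight to $output, which is where the trouble described below comes from.)

```perl
use strict;
use warnings;

# Hypothetical stand-ins: %var holds template variables, and fill()
# replaces each $name placeholder with $var{name} (or "" if unset).
my %var;

sub fill {
    my ($template) = @_;
    $template =~ s/\$(\w+)/exists $var{$1} ? $var{$1} : ""/ge;
    return $template;
}

my %template = (
    head => '<div class="menu">$menu</div>',
    body => '$stories',
    foot => '<p>end</p>',
);

# Scanning the articles is what produces the menu, as a side effect.
sub process_articles {
    my @titles = @_;
    $var{menu}    = join " / ", @titles;
    $var{stories} = join "", map { "<h2>$_</h2>" } @titles;
}

sub render {
    my ($articles_first, @titles) = @_;
    %var = ();
    my $output = "";
    process_articles(@titles) if $articles_first;      # patched order
    $output .= fill($template{head});
    process_articles(@titles) unless $articles_first;  # stock order
    $output .= fill($template{body});
    $output .= fill($template{foot});
    return $output;
}

print render(0, "Bag", "Hammer"), "\n";   # head filled too early: empty menu
print render(1, "Bag", "Hammer"), "\n";   # head sees the finished menu
```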

But if I were to finish the article with that discussion, then the first half of the article would have been relevant and to the point, and as regular readers know, We Don't Do That Here. No, there must come a point about a third of the way through each article at which I say "But anyway, the real point of this note is...".

Anyway, the real point of this note is to discuss the debugging technique I used to fix Blosxom after I made this core change, which broke the output. The menu was showing up where I wanted, but now all the date headers (like the "Sat, 18 Mar 2006" one just above) appeared at the very top of the page body, before all the articles.

The way Blosxom generates output is by appending it to a variable $output, which eventually accumulates all the output, and is printed once, right at the very end. I had found the code that generated the "head" part of the output; this code ended with $output .= $head to append the head part to the complete output. I moved this section down, past the (much more complicated) section that scanned the articles themselves, so that the "head" template, when filled, would have access to information (such as menus) accumulated while scanning the articles.

But apparently some other part of the program was inserting the date headers into the output while scanning the articles. I had to find this. The right thing to do would have been just to search the code for $output. This was not sure to work: there might have been dozens of appearances of $output, making it a difficult task to determine which one was the responsible appearance. Also, any of the plugins, not all of which were written by me, could have been modifying $output, so it would not have been enough just to search the base code.

Still, I should have started by searching for $output, under the look-under-the-lamppost principle. (If you lose your wallet in a dark street, start by looking under the lamppost. The wallet might not be there, but you will not waste much time on the search.) If I had looked under the lamppost, I would have found the lost wallet:

```perl
$curdate ne $date and $curdate = $date and $output .= $date;
```
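That one line packs an if statement into a chain of ands. Since and binds more loosely than ne, =, and .=, it parses as ($curdate ne $date) and ($curdate = $date) and ($output .= $date). The sketch below runs the one-liner and a spelled-out version side by side over some made-up dates, with a hypothetical [story] placeholder standing in for each article, and checks that they agree. The one subtlety is that the chain also skips the append when $date is a false value such as "" or "0", because the assignment then evaluates to false.

```perl
use strict;
use warnings;

my @dates = ("Sat, 18 Mar 2006", "Sat, 18 Mar 2006", "Thu, 30 Mar 2006");

# Version 1: the one-liner, emitting a date header only when the date changes.
my ($out1, $cur1) = ("", "");
for my $date (@dates) {
    $cur1 ne $date and $cur1 = $date and $out1 .= $date;
    $out1 .= "[story]";
}

# Version 2: the same logic spelled out, including the false-$date quirk.
my ($out2, $cur2) = ("", "");
for my $date (@dates) {
    if ($cur2 ne $date) {
        $cur2 = $date;
        $out2 .= $date if $date;
    }
    $out2 .= "[story]";
}

print "$out1\n";   # Sat, 18 Mar 2006[story][story]Thu, 30 Mar 2006[story]
```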

That's not what I did. Daunted by the theoretical difficulties, I got out a big hammer. The hammer is of some technical interest, and for many problems it is not too big, so there is some value in presenting it.

Perl has a feature called tie that allows a variable to be enchanted so that accesses to it are handled by programmer-defined methods instead of by Perl's usual internal processes. When a tied variable $foo is stored into, a STORE method is called, and passed the value to be stored; it is the responsibility of STORE to put this value somewhere that it can be accessed again later by its counterpart, the FETCH method.
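A small sketch makes FETCH and STORE concrete, and also illustrates the optimization problem discussed next. CountingScalar is a hypothetical class invented for this example; its FETCH quietly counts how often it is called, so a loop of assignments and a single assignment become observably different.

```perl
use strict;
use warnings;

# Hypothetical tied-scalar class whose FETCH has a side effect:
# it counts every read of the tied variable.
package CountingScalar;

sub TIESCALAR {
    my ($class, $initial) = @_;
    return bless { value => $initial, fetches => 0 }, $class;
}

sub FETCH {
    my $self = shift;
    $self->{fetches}++;              # side effect on every read
    return $self->{value};
}

sub STORE {
    my ($self, $val) = @_;
    $self->{value} = $val;
}

package main;

tie my $that, 'CountingScalar', 'pod bay doors';
my $this;

# The loop reads $that 1000 times, so FETCH runs 1000 times...
for (1 .. 1000) { $this = $that }
my $after_loop = tied($that)->{fetches};

# ...whereas the "optimized" single assignment runs FETCH only once.
$this = $that;
my $after_single = tied($that)->{fetches} - $after_loop;

print "loop: $after_loop fetches; single assignment: $after_single fetch\n";
```

tied() returns the underlying object without calling FETCH, which is why it can be used to read the counter.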

There are a lot of problems with this feature. It has an unusually strong risk of creating incomprehensible code by being misused, since when you see something like $this = $that you have no way to know whether $this and $that are tied, and so whether what looks like an assignment expression is actually STORE($this, FETCH($that)), invoking two hook functions that could have completely arbitrary effects. Another, related problem is that the compiler can't know that either, which tends to rule out just about any compile-time optimization you could possibly think of. Perhaps you would like to turn this:

for (1 .. 1000000) { $this = $that }

into just this:

$this = $that;

Too bad; they are not the same, because $that might be tied to a FETCH function that will open the pod bay doors the 142,857th time it is called. The unoptimized code opens the pod bay doors; the "optimized" code does not.

But tie is really useful for certain kinds of debugging. In this case the question is "who was responsible for inserting those date headers into $output?" We can answer the question by tying $output and supplying a STORE callback that issues a report whenever $output is modified. The code looks like this:

package who_farted;

sub TIESCALAR {
    my ($package, $fh) = @_;
    my $value = "";
    bless { value => \$value, fh => $fh };
}

This is the constructor; it manufactures an object to which the FETCH and STORE methods can be directed. It is the responsibility of this object to track the value of $output, because Perl won't be tracking the value in the usual way. So the object contains a "value" member, holding the value that is stored in $output. It also contains a filehandle for printing diagnostics. The FETCH method simply returns the current stored value:

sub FETCH {
    my $self = shift;
    ${$self->{value}};
}

The basic STORE method is simple:

sub STORE {
    my ($self, $val) = @_;
    my $fh = $self->{fh};
    print $fh "Someone stored '$val' into \$output\n";
    ${$self->{value}} = $val;
}

It issues a diagnostic message about the new value, and stores the new value into the object so that FETCH can get it later. But the diagnostic here is not useful; all it says is that "someone" stored the value; we need to know who, and where their code is. The function st() generates a stack trace:

sub st {
    my @stack;
    my $spack = __PACKAGE__;
    my $N = 1;
    while (my @c = caller($N)) {
        my (undef, $file, $line, $sub) = @c;
        next if $sub =~ /^\Q$spack\E::/o;
        push @stack, "$sub ($file:$line)";
    } continue { $N++ }
    @stack;
}

Perl's built-in caller() function returns information about the stack frame N levels up. For clarity, st() omits information about frames in the who_farted class itself. (That's the next if... line.)

The real STORE dumps the stack trace, and takes some pains to announce whether the value of $output was entirely overwritten or appended to:

sub STORE {
    my ($self, $val) = @_;
    my $old = ${$self->{value}};
    my $olen = length($old);
    my ($act, $what) = ("set to", $val);
    if (substr($val, 0, $olen) eq $old) {
        ($act, $what) = ("appended", substr($val, $olen));
    }
    $what =~ tr/\n/ /;
    $what =~ s/\s+$//;
    my $fh = $self->{fh};
    print $fh "var $act '$what'\n";
    print $fh " $_\n" for st();
    print $fh "\n";
    ${$self->{value}} = $val;
}

To use this, you just tie $output at the top of the base code:

open my($DIAGNOSTIC), ">", "/tmp/who-farted" or die $!;
tie $output, 'who_farted', $DIAGNOSTIC;
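For trying the technique outside Blosxom, the pieces above can be assembled into one self-contained script. This is a sketch under a few assumptions: generate() here is a hypothetical stand-in for Blosxom's function, the diagnostics go to an in-memory filehandle instead of /tmp/who-farted, and TIESCALAR uses the ordinary two-argument bless.

```perl
use strict;
use warnings;

package who_farted;

sub TIESCALAR {
    my ($package, $fh) = @_;
    my $value = "";
    return bless { value => \$value, fh => $fh }, $package;
}

sub FETCH {
    my $self = shift;
    return ${ $self->{value} };
}

# Stack trace of our callers, omitting frames inside who_farted itself.
sub st {
    my @stack;
    my $spack = __PACKAGE__;
    my $N = 1;
    while (my @c = caller($N)) {
        my (undef, $file, $line, $sub) = @c;
        next if $sub =~ /^\Q$spack\E::/;
        push @stack, "$sub ($file:$line)";
    } continue { $N++ }
    return @stack;
}

# Report whether the value was replaced or appended to, and by whom.
sub STORE {
    my ($self, $val) = @_;
    my $old = ${ $self->{value} };
    my ($act, $what) = ("set to", $val);
    if (substr($val, 0, length $old) eq $old) {
        ($act, $what) = ("appended", substr($val, length $old));
    }
    $what =~ tr/\n/ /;
    $what =~ s/\s+$//;
    my $fh = $self->{fh};
    print $fh "var $act '$what'\n";
    print $fh "  $_\n" for st();
    print $fh "\n";
    ${ $self->{value} } = $val;
}

package main;

# Hypothetical stand-in for the code that appends a date header.
sub generate {
    our $output;
    $output .= "Sat, 18 Mar 2006\n";
}

# Capture diagnostics in a string (an in-memory filehandle).
my $log = "";
open my $DIAGNOSTIC, ">", \$log or die $!;
our $output;
tie $output, 'who_farted', $DIAGNOSTIC;

$output .= "<html>\n";    # top-level append: no caller frames to report
generate();               # reported with a main::generate frame
close $DIAGNOSTIC;
print $log;
```

Because st() skips the who_farted::STORE frame, each reported entry names an enclosing subroutine together with the file and line from which that subroutine was called.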

This worked well enough. The stack trace informed me that the modification of interest was occurring in the blosxom::generate function at line 290 of blosxom.cgi. That was the precise location of the lost wallet. It was, as I said, a big hammer to use to squash a mosquito of a problem---but the mosquito is dead.

A somewhat more useful version of this technique comes in handy in situations where you have some program, say a CGI program, that is generating the wrong output; maybe there is a "1" somewhere in the middle of the output and you don't know what part of the program printed "1" to STDOUT. You can adapt the technique to watch STDOUT instead of a variable. It's simpler in some ways, because STDOUT is written to but never read from, so you can eliminate the FETCH method and the data store:

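package who_farted;

sub rig_fh {
    my ($handle, $diagnostic, $mode) = @_;
    $mode ||= "<";
    # Open a second, untied handle on the same file descriptor
    # ("&=" reuses the descriptor rather than dup()ing it), so that
    # real output still has somewhere to go once $handle is tied.
    open my($aux_handle), "$mode&=", $handle or die $!;
    tie *$handle, __PACKAGE__, $aux_handle, $diagnostic;
}

sub TIEHANDLE {
    my ($package, $aux_handle, $diagnostic) = @_;
    bless [$aux_handle, $diagnostic] => $package;
}

sub PRINT {
    my $self = shift;
    my ($aux_handle, $diagnostic) = @$self;
    print $aux_handle @_;                  # pass the output through
    my $str = join("", @_);
    print $diagnostic "$str:\n";           # report what was printed...
    print $diagnostic " $_\n" for st();    # ...and who printed it
    print $diagnostic "\n";
}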

To use this, you put something like rig_fh(\*STDOUT, $DIAGNOSTIC, ">") in the main code. The only tricky part is that some part of the code (rig_fh here) must manufacture a new, untied handle that points to the same place that STDOUT did before it was tied, so that the output can actually be printed.

Something it might be worth pointing out about the code I showed here is that it uses the very rare one-argument form of bless:

package who_farted;

sub TIESCALAR {
    my ($package, $fh) = @_;
    my $value = "";
    bless { value => \$value, fh => $fh };
}

Normally the second argument should be $package, so that the object is created in the appropriate package; the one-argument form blesses the object into the current package, which here is who_farted. Aren't these the same? Yes, unless someone has tried to subclass who_farted and inherit the TIESCALAR method. So the only thing I have really gained here is to render the TIESCALAR method uninheritable. <sarcasm>Gosh, what a tremendous benefit</sarcasm>. Why did I do it this way? In regular OO-style code, writing a method that cannot be inherited is completely idiotic. You very rarely have a chance to write a constructor method in a class that you are sure will never be inherited. This seemed like such a case. I decided to take advantage of this non-feature of Perl since I didn't know when the opportunity would come by again.

"Take advantage of" is the wrong phrase here, because, as I said, there is not actually any advantage to doing it this way. And although I was sure that who_farted would never be inherited, I might have been wrong; I have been wrong about such things before. A smart programmer requires only ten years to learn that you should not do things the wrong way, even if you are sure it will not matter. So I violated this rule and potentially sabotaged my future maintenance efforts (on the day when the class is subclassed) and I got nothing, absolutely nothing in return.

Yes. It is completely pointless. A little Dada to brighten your day. Anyone who cannot imagine a lobster-handed train conductor sleeping in a pile of celestial globes is an idiot.

[Other articles in category /oops] permanent link

Fri, 17 Mar 2006

More on Emotions

In yesterday's long article about emotions, I described the difficulty like this:

There's another kind of embarrassment that occurs when you see something you shouldn't. For example, you walk into a room and see your mother-in-law putting on her bra. You are likely to feel embarrassed. What's the connection with the embarrassment you feel when you fall off a ledge? I don't know; I'm not even sure they are the same. Perhaps we need a new word.

This morning I mentioned to Lorrie that the idea of "embarrassment" seemed to cover two essentially different situations. She told me that our old friend Robin Bernstein had noticed this also, and had suggested that the words "enza" and "zenza" be used for the two feelings: embarrassment for oneself and embarrassment for other people, respectively.

I also thought of another emotion that was not on my list of basic emotions, but seems different from the others. This emotion does not, so far as I know, have a word in English. It is the emotion felt (by most people) when regarding a happy baby, the one that evokes the "Awwww!" response.

This is a very powerful response in most people, for evolutionarily obvious reasons. It is so powerful that it is even activated by baby animals, dolls, koala bears, toy ducks, and, in general, anything small and round. Even, to a slight extent, ball bearings. (Don't you find ball bearings at least a little bit cute? I certainly do.)

The aliens might or might not have this emotion. If they are aliens who habitually protect and raise their young, I think it is inevitable. The aliens might be the type to eat their young, in which case they probably will not feel this way, although they might still have that response to their eggs, in which case expect them to feel warmly about ball bearings.

I also gave some more thought to Ashley, the Pacemate who claimed that her most embarrassing moment was crashing into the back of a trash truck and totaling her car. I tried to understand why I found this such a strange response. The conclusion I finally came to was that I had found it inappropriate because I would have expected fear, anger, or guilt to predominate. If Ashley is in a vehicle collision severe enough to ruin her car, I felt, she should experience fear for her own safety or that of others, anger at having wrecked her car, guilt at having carelessly damaged someone else's property or health. But embarrassment suggested to me that her primary concern was for her reputation: now the whole world thinks that Ashley is a bad driver.

If you don't see what I'm getting at here, the following situational change might make it clearer:

Most embarrassing moment:

Ashley (alternate universe version): Crashing into the back of a school bus full of kids and totaling my car!

You almost crippled seventy schoolkids? Gosh, that must have been embarrassing!

Having made the analysis explicit for myself, and pinned down what seemed strange to me about Ashley's embarrassment, it no longer seems so strange to me. Here's why: It wasn't a school bus, but a garbage truck. Garbage trucks are big and heavy. The occupants were much less likely to have been injured than was Ashley herself, partly because they were in a truck and also because Ashley struck the back of the truck and not the front. The truck was almost certainly less severely damaged than Ashley's car was, perhaps nearly unscathed. And of course it was impossible that the truck's cargo was damaged. So a large part of the motivation for fear and guilt is erased, simply because the other vehicle in the collision was a garbage truck. I would have been angry that my car was wrecked, but if Ashley isn't, who am I to judge? Probably she's just a better person than I am.

But I still find the reaction odd. I wonder if some of what Ashley takes to be embarrassment isn't actually disgust. But at least I no longer find it completely bizarre.

Finally, thinking about this led me to identify another emotion that I think might belong on the master list: relief.

[Other articles in category /aliens] permanent link

Thu, 16 Mar 2006

Emotions

I was planning to write another article about π, and its appearance in Coulomb's law, or perhaps an article about how to calculate the volume of a higher-dimensional analogue of a sphere.