Mark Dominus (陶敏修)

mjd@pobox.com

Mon, 03 Feb 2025

Here's a Math SE pathology that bugs me. OP will ask "I'm trying to prove that groups !!A!! and !!B!! are isomorphic, I constructed this bijection but I see that it's not a homomorphism. Is it sufficient, or do I need to find a bijective homomorphism?"

And respondent !!R!! will reply in the comments "How can a function which is not an homomorphism prove that the groups are isomorphic?"

Which is literally the exact question that OP was asking! "Do I need to find … a homomorphism?"

My preferred reply would be something like "Your function is not enough. You are correct that it needs to be a homomorphism."

Because what problem did OP really have? Clearly, their problem is that they are not sure what it means for two groups to be isomorphic. For the respondent to ask "How can a function which is not an homomorphism prove that the groups are isomorphic" is unhelpful because they know that OP doesn't know the answer to that question.

OP knows too, that's exactly what their question was! They're trying to find out the answer to that exact question! OP correctly identified the gap in their own understanding. Then they formulated a clear, direct question that would address the gap.

THEY ARE ASKING THE EXACT RIGHT QUESTION AND !!R!! DID NOT ANSWER IT

My advice to people answering questions on MSE:

It's all very well for !!R!! to imagine that they are going to be brilliant like Socrates, conducting a dialogue for the ages that draws from OP the realization that the knowledge they sought was within them all along. Except:

- !!R!! is not Socrates

- Nobody has time for this nonsense

- The knowledge was not within them all along

MSE is a site where people go to get answers to their questions. That is its sole and stated purpose. If !!R!! is not going to answer questions, what are they even doing there? In my opinion, just wasting everyone's time.

Important pedagogical note

It's sufficient to say "Your function is not enough", which answers the question.

But it is much better to say "Your function is not enough. You are correct that it needs to be a homomorphism". That acknowledges the student's contribution. It tells them that their analysis of the difficulty was correct!

They may not know what it means for two groups to be isomorphic, but they do know something almost as good: that they are unsure what it means for two groups to be isomorphic. This is valuable knowledge.

This wise student recognises that they don't know. Socrates said that he was the wisest of all men, because he at least “knew that he didn't know”. If you want to take a lesson from Socrates, take that one, not his stupid theory that all knowledge is already within us.

OP did what students are supposed to do: they reflected on their knowledge, they realized it was inadequate, and they set about rectifying it. This deserves positive reinforcement.

Addenda

This is a real example. I have not altered it, because I am afraid that if I did you would think I was exaggerating.

I have been banging this drum for decades, but I will cut the scroll here. Expect a followup article.

20250206

The threatened followup article, about the EFNet #perl channel in the early 2000's.

[Other articles in category /math/se] permanent link

Sat, 02 Dec 2023

Content warning: grumpy complaining. This was a frustrating month.

Need an intuitive example for how "P is necessary for Q" means "Q⇒P"?

This kind of thing comes up pretty often. Why are there so many ways that the logical expression !!Q\implies P!! can appear in natural language?

- If !!Q!!, then !!P!!

- !!Q!! implies !!P!!

- !!P!! if !!Q!!

- !!Q!! is sufficient for !!P!!

- !!P!! is necessary for !!Q!!

Strange, isn't it? !!Q\land P!! is much simpler: “Both !!Q!! and !!P!! are true” is pretty much it.

Anyway this person wanted an intuitive example of “!!P!! is necessary for !!Q!!”

I suggested:

Suppose that it is necessary to have a ticket (!!P!!) in order to board a certain train (!!Q!!). That is, if you board the train (!!Q!!), then you have a ticket (!!P!!).

Again this follows the principle that rule enforcement is a good thing when you are looking for intuitive examples. Keeping ticketless people off the train is something that the primate brain is wired up to do well.

My first draft had “board a train” in place of “board a certain train”. One commenter complained:

many people travel on trains without a ticket, worldwide

I was (and am) quite disgusted by this pettifogging.

I said “Suppose that…”. I was not claiming that the condition applies to every train in all of history.

OP had only asked for an example, not some universal principle.

Does ...999.999... = 0?

This person is asking one of those questions that often puts Math StackExchange into the mode of insisting that the idea is completely nonsensical, when it is actually very close to perfectly mundane mathematics. (Previously: [1] [2] [3] ) That didn't happen this time, which I found very gratifying.

Normally, decimal numerals have a finite integer part on the left of the decimal point, and an infinite fractional part on the right of the decimal point, as with (for example) !!\frac{13}{3} = 4.333\ldots!!. It turns out to work surprisingly well to reverse this, allowing an infinite integer part on the left and a finite fractional part on the right, for example !!\frac1{15} = \ldots 333.4!!. (Multiplying !!\ldots 333.4!! by !!15!! with the usual right-to-left algorithm gives !!\ldots 0001.0!!.) For technical reasons we usually do this in base !!p!! where !!p!! is prime; it doesn't work as well in base !!10!!. But it works well enough to use: If we have the base-10 numeral !!\ldots 9999.0!! and we add !!1!!, using the ordinary elementary-school right-to-left addition algorithm, the carry in the units place goes to the tens place as usual, then the next carry goes to the hundreds place and so on to infinity, leaving us with !!\ldots 0000.0!!, so that !!\ldots 9999.0!! can be considered a representation of the number !!-1!!, and that means we don't need negation signs.

In fact this system is fundamental to the way numbers are represented in computer arithmetic. Inside the computer the integer !!-1!! is literally represented as the base-2 numeral !!11111111\;11111111\;11111111\;11111111!!, and when we add !!1!! to it the carry bit wanders off toward infinity on the left. (In the computer the numeral is finite, so we simulate infinity by just discarding the carry bit when it gets too far away.)
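The wandering carry can be watched in a few lines of Python. This is only a sketch: the 32-bit width matches the numeral above, and the names `WIDTH`, `MASK`, `DIGITS`, and `add_wrapping` are invented for the illustration.

```python
WIDTH = 32                      # assumed word size, matching the numeral above
MASK = (1 << WIDTH) - 1         # thirty-two 1 bits

def add_wrapping(a: int, b: int) -> int:
    """Add two WIDTH-bit numerals, discarding any carry out of the top bit."""
    return (a + b) & MASK

minus_one = -1 & MASK           # the bit pattern used for -1
print(f"{minus_one:032b}")      # thirty-two 1s
print(add_wrapping(minus_one, 1))

# The base-10 analogue: keep only the last DIGITS digits of ...9999
DIGITS = 8                      # arbitrary truncation length
nines = 10**DIGITS - 1
print((nines + 1) % 10**DIGITS)
```

Both additions print `0`: in each case the carry runs off the left end and is thrown away.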

Once you've seen this a very reasonable next question is whether you can have numbers that have an infinite sequence of digits on both sides. I think something goes wrong here — for one thing it is no longer clear how to actually do arithmetic. For the infinite-to-the-left numerals arithmetic is straightforward (elementary-school algorithms go right-to-left anyway) and for the standard infinite-to-the-right numerals we can sort of fudge it. (Try multiplying the infinite decimal for !!\sqrt 2!! by itself and see what trouble you get into. Or simpler: What's !!4.666\ldots \times 3!!?)

OP's actual question was: If !!\ldots 9999.0 !! can be considered to represent !!-1!!, and if !!0.9999\ldots!! can be considered to represent !!1!!, can we add them and conclude that !!\ldots 9999.9999\ldots = 0!!?

This very deserving question got a good answer from someone who was not me. This was a relief, because my shameful answer was pure shitpostery. It should have been heavily downvoted, but wasn't. The gods of Math SE karma are capricious.

Why define addition with successor?

Ugh, so annoying. OP had read (Bertrand Russell's explanation of) the Peano definition of addition, and did not understand it. Several people tried hard to explain, but communication was not happening. Or, perhaps, OP was more interested in having an argument than in arriving at an understanding. I lost a bit of my temper when they claimed:

Russell's so-called definition of addition (as quoted in my question) is nothing but a tautology: ….

I didn't say:

If you think Bertrand Russell is stupid, it's because you're stupid.

although I wanted to at first. The reply I did make is still not as measured as I would like, and although it leaves this point implicit, the point is still there. I did at least shut up after that. I had answered OP's question as well as I was able, and carrying on a complex discussion in the comments is almost never of value.

Why is Ramanujan considered a great mathematician?

This was easily my best answer of the month, but the question was deleted, so you will only be able to see it if you have enough Math SE reputation.

OP asked a perfectly reasonable question: Ramanujan gets a lot of media hype for stuff like this:

$${\sqrt {\phi +2}}-\phi ={\cfrac {e^{{-2\pi /5}}}{1+{\cfrac {e^{{-2\pi }}}{1+{\cfrac {e^{{-4\pi }}}{1+{\cfrac {e^{{-6\pi }}}{1+\,\cdots }}}}}}}}$$

which is not of any obvious use, so “why is it given such high regard?”

OP appeared to be impugning a famous mathematician, and Math SE always responds badly to that; their heroes must not be questioned. And even worse, OP mentioned the notorious non-fact that $$1+2+3+\ldots =-\frac1{12}$$ which drives Math SE people into a frothing rage.

One commenter argued:

Mathematics is not inherently about its "usefulness". Even if you can't find practical use for those formulas, you still have to admit that they are by no means trivial

I think this is fatuous. OP is right here, and the commenter is wrong. Mathematicians are not considered great because they produce wacky and impractical equations. They are considered great because they solve problems, invent techniques that answer previously impossible questions, and because they contribute insights into deep and complex issues.

Some blockhead even said:

Most of the mathematical results are useless. Mathematics is more like an art.

Bullshit. Mathematics is about trying to understand stuff, not about taping a banana to the wall. I replied:

I don't think “mathematics is not inherently about its usefulness” is an apt answer here. Sometimes mathematical results have application to physics or engineering. But for many mathematical results the application is to other parts of mathematics, and mathematicians do judge the ‘usefulness’ of results on this basis. Consider for example Mochizuki's field of “inter-universal Teichmüller theory”. This was considered interesting only as long as it appeared that it might provide a way to prove the !!abc!! conjecture. When that hope collapsed, everyone lost interest in it.

My answer to OP elaborated on this point:

The point of these formulas wasn't that they were useful in themselves. It's that in order to find them he had to have a deep understanding of matters that were previously unknown. His contribution was the deep understanding.

I then discussed Hardy's book on the work he did with Ramanujan and Hardy's own estimation of Ramanujan's work:

The first chapter is somewhat negative, as it summarizes the parts of Ramanujan's work that he felt didn't have lasting value — because Hardy's next eleven chapters are about the work that he felt did have value.

So if OP wanted a substantive and detailed answer to their question, that would be the first place to look.

I also did an arXiv search for “Ramanujan” and found many recent references, including one with “applications to the Ramanujan !!τ!!-function”, and concluded:

The !!\tau!!-function is the subject of the entire chapter 10 of Hardy's book and appears to still be of interest as recently as last Monday.

The question was closed as “opinion-based” (a criticism that I think my answer completely demolishes) and then it was deleted. Now if someone else is trying to find out why Ramanujan is held in high regard, they will not be able to find my factual, substantive answer.

Screw you, Math SE. This month we both sucked.


Fri, 24 Nov 2023

Math SE report 2023-09: Sense and reference, Wason tasks, what is a sequence?

Proving there is only one proof?

OP asks:

In mathematics, is it possible to prove that there is only one (shortest) proof of a given theorem (say, in ZFC)?

This was actually from back in July, when there was a fairly substantive answer. But it left out what I thought was a simpler, non-substantive answer: For a given theorem !!T!! it's actually quite simple to prove that there is (or isn't) only one proof of !!T!!: just generate all possible proofs in order by length until you find the shortest proofs of !!T!!, and then stop before you generate anything longer than those. There are difficult and subtle issues in provability theory, but this isn't one of them.

I say “non-substantive” because it doesn't address any of the possibly interesting questions of why a theorem would have only one proof, or multiple proofs, or what those proofs would look like, or anything like that. It just answers the question given: is it possible to prove that there is only one shortest proof.

So depending on what OP was looking for, it might be very unsatisfying. Or it might be hugely enlightening, to discover that this seemingly complicated question actually has a simple answer, just because proofs can be systematically enumerated.
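Here is what "generate all possible proofs in order by length" looks like in miniature. ZFC is far too big for a sketch, so this substitutes Hofstadter's toy MIU system, where a "proof" is a chain of strings starting from the axiom `MI`. The substitution, the function names, and the `max_steps` cap are all assumptions made for the illustration.

```python
def successors(s: str) -> set[str]:
    """All strings derivable from s in one step of the toy MIU system."""
    out = set()
    if s.endswith("I"):                      # rule 1: xI -> xIU
        out.add(s + "U")
    out.add(s + s[1:])                       # rule 2: Mx -> Mxx
    for i in range(len(s) - 2):              # rule 3: replace III with U
        if s[i:i + 3] == "III":
            out.add(s[:i] + "U" + s[i + 3:])
    for i in range(len(s) - 1):              # rule 4: delete UU
        if s[i:i + 2] == "UU":
            out.add(s[:i] + s[i + 2:])
    return out

def shortest_proofs(target: str, axiom: str = "MI", max_steps: int = 6):
    """Enumerate proofs in order of length; return all of the shortest ones."""
    proofs = [[axiom]]                       # every proof with 0 steps
    for steps in range(max_steps + 1):
        found = [p for p in proofs if p[-1] == target]
        if found:
            return found                     # stop before generating longer proofs
        if steps < max_steps:
            proofs = [p + [t] for p in proofs
                      for t in sorted(successors(p[-1]))]
    return None                              # nothing found within the cap

print(shortest_proofs("MUI"))
print(len(shortest_proofs("MUIU")))
```

In this toy system `MUI` has exactly one shortest proof and `MUIU` has two. The cap is there only so the sketch halts on non-theorems; for an actual theorem the search always finishes.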

This comes in handy in more interesting contexts. Gödel showed that arithmetic contains a theorem whose shortest proof is at least one million steps long! He did it by constructing an arithmetic formula !!G!! which can be interpreted as saying:

!!G!! cannot be proved in less than one million steps.

If !!G!! is false, it can be proved (in less than one million steps) and our system is inconsistent. So assuming that our axioms are consistent, then !!G!! is true and either:

1. There is no proof at all of !!G!!, or

2. There are proofs of !!G!! but the shortest one is at least a million steps

Which is it? It can't be (1) because there is a proof of !!G!!: simply generate every single proof of one million steps or fewer, and check at the last line of each one to make sure that it is not !!G!!. So it must be (2).

What counts as a sequence, and how would we know that it isn't deceiving?

This is a philosophical question: What is a sequence, really? And:

if I write down random numbers with no pattern at all except for the fact that it gets larger, is it a viable sequence?

And several other related questions that are actually rather subtle: Is a sequence defined by its elements, or by some external rule? If the former, how can you know when a sequence is linear, when you can only hope to examine a finite prefix?

I think this is a great question because I think a sequence, properly construed, is both a rule and its elements. The definition says that a sequence of elements of !!S!! is simply a function !!f:\Bbb N\to S!!. This definition is a sort of spherical cow: it's a nice, simple model that captures many of the mathematical essentials of the thing being modeled. It works well for many purposes, but you get into trouble if you forget that it's just a model. It captures the denotation, but not the sense. I wouldn't yak so much about this if it wasn't so often forgotten. But the sense is the interesting part. If you forget about it, you lose the ability to ask questions like

Are sequences !!s_1!! and !!s_2!! the same sequence?

If all you have is the denotation, there's only one way to answer this question:

By definition, yes, if and only if !!s_1!! and !!s_2!! are the same function.

and there is nothing further to say about it. The question is pointless and the answer is useless. Sometimes the meaning is hidden a little deeper. Not this time. If we push down into the denotation, hoping for meaning, we find nothing but more emptiness:

Q: What does it mean to say that !!s_1!! and !!s_2!! are the same function?

A: It means that the sets $$S_1 = \{ \langle i, s_1(i) \rangle \mid i\in \Bbb N\}$$ and $$S_2 = \{ \langle i, s_2(i) \rangle \mid i\in \Bbb N\}$$ have exactly the same elements.

We could keep going down this road, but it goes nowhere and having gotten to the end we would have seen nothing worth seeing.

But we do ask and answer this kind of question all the time. For example:

- !!S_1(n)!! is the infinite sequence of odd numbers starting at !!1!!

- !!S_2(n)!! is the infinite sequence of numbers that are the difference between a square and its previous square, starting at !!1^2-0^2!!

Are sequences !!S_1!! and !!S_2!! the same sequence? Yes, yes, of course they are, don't focus on the answer. Focus on the question! What is this question actually asking?

The real essence of the question is not about the denotation, about just the elements. Rather: we're given descriptions of two possible computations, and the question is asking if these two computations will arrive at the same results in each case. That's the real question.
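The "two computations" reading can be made concrete. In this sketch the two descriptions become two separate Python generators (the names are made up for the illustration), and the only thing a program can do is compare a finite prefix:

```python
from itertools import count, islice

def odd_numbers():
    """S_1: the odd numbers, starting at 1."""
    return (2 * n + 1 for n in count(0))

def square_gaps():
    """S_2: differences between consecutive squares, starting at 1^2 - 0^2."""
    return ((n + 1) ** 2 - n ** 2 for n in count(0))

def agree_on_prefix(s1, s2, length):
    """Compare the first `length` terms of two (possibly infinite) iterables."""
    return list(islice(s1, length)) == list(islice(s2, length))

print(list(islice(square_gaps(), 5)))
print(agree_on_prefix(odd_numbers(), square_gaps(), 1000))
```

Agreement on a thousand terms is evidence, not proof. What settles it is the algebra, !!(n+1)^2 - n^2 = 2n+1!!, which is a statement about the two rules and not about any list of elements.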

Well, I started this blog article back in October and it's still not ready because I got stuck writing about this question. I think the answer I gave on SE is pretty good. OP asked what is essentially a philosophical question, and the backbone of my answer is on the level of philosophy rather than mathematics.

[ Addendum: On review, I am pleasantly surprised that this section of the blog post turned out both coherent and relevant. I really expected it to be neither. A Thanksgiving miracle! ]

Can inequalities be added the way that equations can be added?

OP says:

Suppose you have !!x + y > 6!! and !!x - y > 4!!. Adding the inequalities, the !!y!! terms cancel and you end up with … !!x > 5!!. It is not intuitively obvious to me that this holds true … I can see that you can't subtract inequalities, but is it always okay to add them?

I have a theory that if someone is having trouble with the intuitive meaning of some mathematical property, it's a good idea to turn it into a question about fair allocation of resources, or who has more of some commodity, because human brains are good at monkey tasks like seeing who got cheated when the bananas were shared out.

About ten years ago someone asked for an intuitive explanation of why you could add !!\frac a2!! to both sides of !!\frac a2 < \frac b2!! to get !!\frac a2+\frac a2 < \frac a2 + \frac b2!!. I said:

Say I have half a bag of cookies, that's !!\frac a2!! cookies, and you have half a carton of cookies, that's !!\frac b2!! cookies, and the carton is bigger than the bag, so you have more than me, so that !!\frac a2 < \frac b2!!.

Now a friendly djinn comes along and gives you another half a bag of cookies, !!\frac a2!!. And to be fair he gives me half a bag too, also !!\frac a2!!.

So you had more cookies before, and the djinn gave each of us an extra half a bag. Then who has more now?

I tried something similar this time around:

Say you have two bags of cookies, !!a!! and !!b!!. A friendly baker comes by and offers to trade with you: you will give the baker your bag !!a!! and in return you will get a larger bag !!c!! which contains more cookies. That is, !! a \lt c !!. You like cookies, so you agree.

Then the baker also trades your bag !!b!! for a bigger bag !!d!!.

Is it possible that you might not have more cookies than before you made the trades? … But that's what it would mean if !! a\lt c !! and !! b\lt d !! but not !! a+b \lt c+d !! too.
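For anyone who prefers checking to cookies, here is a brute-force sketch over a small, arbitrarily chosen range of integers. It confirms that adding never fails there, and it finds a case where subtracting does, which is the part OP already suspected. The variable names are invented for the illustration.

```python
from itertools import product

values = range(-5, 6)   # small search space, chosen arbitrarily

# Adding: whenever a < c and b < d, a + b < c + d should follow.
adding_ok = all(a + b < c + d
                for a, b, c, d in product(values, repeat=4)
                if a < c and b < d)

# Subtracting: look for a case where a < c and b < d but a - b < c - d fails.
counterexample = next((a, b, c, d)
                      for a, b, c, d in product(values, repeat=4)
                      if a < c and b < d and not (a - b < c - d))

print(adding_ok)
print(counterexample)
```

In OP's example the two inequalities add to !!2x > 10!!, which is where !!x > 5!! comes from.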

Someday I'll write up a whole blog article about this idea, that puzzles in arithmetic sometimes become intuitively obvious when you turn them into questions about money or commodities, and that puzzles in logic sometimes become intuitively obvious when you turn them into questions about contract and rule compliance.

I don't remember why I decided to replace the djinn with a baker this time around. The cookies stayed the same though. I like cookies. Here's another cookie example, this time to explain why !!1\div 0.5 = 2!!.

What is the difference between "for all" and "there exists" in set builder notation?

This is the same sort of thing again. OP was asking about

$$B = \{n \in \mathbb{N} : \forall x \in \mathbb{N} \text{ and } n=2^x\}$$

but attempting to understand this is trying to swallow two pills at once. One pill is the logic part (what role is the !!\forall!! playing) and the other pill is the arithmetic part having to do with powers of !!2!!. If you're trying to understand the logic part and you don't have an instantaneous understanding of powers of !!2!!, it can be helpful to simplify matters by replacing the arithmetic with something you understand intuitively. In place of the relation !!a = 2^b!! I like to use the relation “!!a!! is the mother of !!b!!”, which everyone already knows.
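The difference between the two quantifiers also shows up if you compute both sets over a finite stand-in for !!\Bbb N!!. This sketch assumes the intended reading of OP's formula is "for all !!x!!, !!n = 2^x!!"; the range and the set names are made up for the illustration.

```python
N = range(0, 20)   # finite stand-in for the natural numbers

# "there exists x with n = 2^x": the powers of 2
exists_set = {n for n in N if any(n == 2 ** x for x in N)}

# "for all x, n = 2^x": n would have to equal every power of 2 at once
forall_set = {n for n in N if all(n == 2 ** x for x in N)}

print(sorted(exists_set))
print(forall_set)
```

The first set is the powers of 2 below 20 and the second is empty. In the mother version, the first is the set of people who are the mother of someone, and the second is the set of people who are the mother of everyone.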

Are infinities included in the closure of the real set !!\overline{\mathbb{R}}!!

This is a good question by the Chip Buchholtz criterion: The answer is much longer than the question was. OP wants to know if the closure of !!\Bbb R!! is just !!\Bbb R!! or if it's some larger set like !![-\infty, \infty]!!. They are running up against the idea that topological closure is not an absolute notion; it only makes sense in the context of an enclosing space.

I tried to draw an analogy between the closure and the complement of a set: Does the complement of the real numbers include the number !!i!!? Well, it depends on the context.

OP preferred someone else's answer, and I did too, saying:

I thought your answer was better because it hit all the important issues more succinctly!

I try to make things very explicit, but the downside of that is that it makes my answers longer, and shorter is generally better than longer. Sometimes it works, and sometimes it doesn't.

Vacuous falsehood - does it exist, and are there examples?

I really liked this question because I learned something from it. It brought me up short: “Huh,” I said. “I never thought about that.” Three people downvoted the question, I have no idea why.

I didn't know what a vacuous falsity would be either but I decided that since the negation of a vacuous truth would be false it was probably the first thing to look at. I pulled out my stock example of vacuous truth, which is:

All my rubies are red.

This is true, because all rubies are red, but vacuously so because I don't own any rubies.

Since this is a vacuous truth, negating it ought to give us a vacuous falsity, if there is such a thing:

I have a ruby that isn't red.

This is indeed false. And not in the way one would expect! A more typical false claim of this type would be:

I have a belt that isn't leather.

This is also false, in rather a different way. It's false, but not vacuously so, because to disprove it you have to get my belts out of the closet and examine them.
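Python's `all` and `any` behave the same way on an empty collection, which makes the contrast easy to see. This is a sketch; the contents of the closet are hypothetical.

```python
rubies = []                        # I don't own any rubies
belts = ["leather", "leather"]     # hypothetical closet contents

all_rubies_red = all(r == "red" for r in rubies)            # vacuous truth
some_ruby_not_red = any(r != "red" for r in rubies)         # its negation
some_belt_not_leather = any(b != "leather" for b in belts)  # ordinary falsehood

print(all_rubies_red, some_ruby_not_red, some_belt_not_leather)
```

The last two are both false, but only the belt claim required looking at anything. The ruby claim is settled before the test `r != "red"` is ever evaluated.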

Now though I'm not sure I gave the right explanation in my answer. I said:

In the vacuously false case we don't even need to read the second half of the sentence:

there is a ruby in my vault that …

… The irrelevance of the “…is not red” part is mirrored exactly in the irrelevance of the “… are red” part in the vacuously true statement:

all the rubies in my vault are …

But is this the right analogy? I could have gone the other way:

In the vacuously false case we don't even need to read the first half of the sentence:

there is a ruby … that is not red

… The irrelevance of the “… in my vault …” part is mirrored exactly in the irrelevance of the “… are red” part in the vacuously true statement:

all the rubies in my vault are …

Ah well, this article has been drying out on the shelf for a month now, I'm making an editorial decision to publish it without thinking about it any more.


Tue, 05 Sep 2023

Math SE report 2023-06: funky-looking Hasse diagrams, and what is a polynomial anyway?

Is !!x^4-x^4 = 0!! a fourth-degree equation?

This is actually a really good question! (You can tell because it's quick to ask and complicated to answer.) It goes back to a very fundamental problem that beginners in mathematics have, which is that there is a difference between an object's true nature and the way it happens to be written down. And often these problems are compounded because there is no way to talk about the object except by referring to how it is written down.

OP says:

The best definition I could find for the degree of an equation is the following:

The highest power of the unknown term whose coefficient isn't zero in a given equation

And they are bothered by this, and rightly so. I was almost derailed at this point into writing an article about what an equation is, but I'm going to put it off for another day, because I think to get to this person's question what we really need to do is to say what a polynomial is.

One way is to describe it as an expression in a certain form, but this is a bit roundabout. It's like describing a rational number as an expression of the form !!\frac n d!! where !!n!! and !!d!! are relatively prime integers. Under this sort of definition, !!x^4-x^4!! isn't a polynomial at all, because it's not an expression of the correct form.

But I think the right way to define a polynomial is that it's an element of the free ring over some ring !!C!! of coefficients. This leaves completely open the question of how a polynomial is written, or what it looks like. It becomes a theorem that polynomials are in one-to-one correspondence with finite sequences of elements of !!C!!. Then we can say that the “degree” of a polynomial is one less than the length of the corresponding finite sequence, or something like that.

[ Sometimes we make an exception for the zero polynomial and say its degree is !!-\infty!!, to preserve the law !!\operatorname{deg}(pq) = \operatorname{deg}(p)+\operatorname{deg}(q)!!. ]

In this view the zero polynomial is simply the zero element of the ring. The polynomial called “!!x^4!!” is the fourth power of the free element !!x!!.

Since the polynomials are elements of a ring, addition, subtraction, and multiplication come along automatically, and we can discuss the value of the expression !!x^4-x^4!!, which by the usual properties of !!-!! is also the zero polynomial.

Anyway that all is pretty much what I said:

!!x^4-x^4!! is just a way to write the polynomial !!0!!, which is not a fourth-degree polynomial. Similarly !!x^5+x^4-x^5!! is not a fifth-degree polynomial.

There's an underlying reality here, the abstract elements of the ring !!R[x]!!. And then there's a representation theorem, which is that elements of !!R[x]!! are in one-to-one correspondence with finite sequences of elements of !!R!!. The ring laws give us ways to normalize arbitrary expressions involving polynomials. And then there's also the important functor that turns every polynomial ring into a ring of functions, turning the polynomial !!x^4!! into the function !!x\mapsto x^4!!.

This kind of abstract approach isn't usually explained in secondary or tertiary education, and I'm not sure it would be an improvement if it were. (You'd have to explain rings, for one thing.) But the main conceptual point is that there is a difference between the thing itself (say, !!x^4!!) and the way we happen to write the thing (say, !!x^5+x^4-x^5!!), and some properties are properties of the thing itself and others are properties of expressions, of the way the thing has been written. The degree of a polynomial is a property of the thing itself, not of the way it happens to be written, so both of those expressions are ways to write the same polynomial, which is fourth-degree, regardless of the fact that in one of them, “the highest power of the unknown term whose coefficient isn't zero” is five.
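The thing-versus-expression distinction can be sketched in code by storing a polynomial as its normalized coefficient sequence, so that the degree is computed from the thing and not from whatever expression produced it. The representation and the names `normalize`, `add`, `neg`, and `degree` are assumptions for the illustration, and `None` stands in for the degree of the zero polynomial.

```python
def normalize(coeffs):
    """Drop trailing zero coefficients; coeffs[i] is the coefficient of x^i."""
    coeffs = list(coeffs)
    while coeffs and coeffs[-1] == 0:
        coeffs.pop()
    return tuple(coeffs)

def add(p, q):
    """Sum of two polynomials, returned in normalized form."""
    n = max(len(p), len(q))
    p = tuple(p) + (0,) * (n - len(p))
    q = tuple(q) + (0,) * (n - len(q))
    return normalize(a + b for a, b in zip(p, q))

def neg(p):
    """Additive inverse of a polynomial."""
    return normalize(-a for a in p)

def degree(p):
    """Degree of a normalized polynomial; None stands in for the zero polynomial."""
    return len(p) - 1 if p else None

x4 = (0, 0, 0, 0, 1)
x5 = (0, 0, 0, 0, 0, 1)

print(degree(add(add(x5, x4), neg(x5))))   # the expression x^5 + x^4 - x^5
print(degree(add(x4, neg(x4))))            # the expression x^4 - x^4
```

The first expression evaluates to the same tuple as `x4`, so its degree is 4 even though a fifth power appears in it. The second evaluates to the empty tuple, the zero polynomial.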

There is one example of this abstraction that everyone learns in childhood, rational numbers. I lean hard on this example, because most people know it pretty well, even if they don't realize it yet. !!\frac15!! and !!\frac6{30}!! are the same thing, written in two different ways. Mathematicians will, without even thinking about it, speak of the numerator of a rational number, and without batting an eyelash will say that the numerator of the rational number !!\frac{6}{30}!! is !!1!!. The fraction !!\frac6{30}!! is a mere notation that represents a rational number, in this case the rational number !!\frac15!!, and this rational number has a numerator of !!1!!.
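Python's standard `fractions` module takes the same view: the object is the rational number, and the numerator belongs to the number, not to the notation it was built from.

```python
from fractions import Fraction

q = Fraction(6, 30)
print(q)                      # prints 1/5
print(q.numerator)            # prints 1
print(q == Fraction(1, 5))    # prints True
```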

Beginning (and intermediate) computer programmers also have this issue, that the thing itself, usually some embedding of data in the computer's memory, may be serialized into a string in multiple ways. There's a string that represents the thing, and then there's the thing itself, but it's hard to talk about the thing itself because anything you can write is a string. I wish this were made explicit in CS education. Computer programmers who don't pick up on this crucial point, at least on an intuitive level, do not progress past intermediate.

What are the names given to statements that can be true or false?

I think I totally flubbed this one. OP is really concerned with open and closed formulas. For example, “!!x > 2!!” is true, or false, depending on the value of !!x!!. And OP astutely noted that while “!!x>4 \to x> 2!!” is always true, its meaning still depends on the value of !!x!!.

I did get around to explaining that part of the issue, eventually. The crucial point, which is that there are formulas which may have free variables and then there are statements which do not, is buried at the end after a lot of barely-relevant blather about Quinian quasiquotation. What was I thinking? Perhaps I should go back and shorten the answer to just the relevant part.

How does one identify the weakest preconditions in Hoare triples?

I wrote a detailed explanation of how one identifies weakest preconditions in Hoare triples, before realizing that actually OP understood this perfectly, and their confusion was because their book wrote “{x≠1}” where it should have had “{x≠-1}”.

Sheesh.

Artifacts of mathematical logic

This was fun. OP wants actual physical artifacts that embody concepts from mathematical logic, the way models of the platonic solids embody concepts from solid geometry.

I couldn't think of anything good, but then Michael Weiss brought up Lewis Carroll's Game of Logic. This reminded me that Martin Gardner had written a book about embodiments of simple logic, including the Carroll one, so I mentioned that. It's a fun book. Check out the account of Ramon Llull, who missed being canonized because his martyrdom looked a bit too much like FAFO.

I find this answer a little unsatisfying though. The logic machines in Gardner's book do only a little boolean algebra, or maybe at best a tiny bit of predicate logic. But I'd love to see a physical system that somehow encapsulated sequent calculus or natural deduction or something like that. Wouldn't it be cool to have blocks stuck together with magnets or physical couplings, so that you could physically detach the !!B!! from !!A\to B!! only if you already had an assemblage that matched !!A!! exactly? I have no idea how you'd do it. Maybe a linear logic model would be more feasible: once you used !!A!! with !!A\to B!! to get !!B!!, you wouldn't be able to use either one again.

We need some genius to come and invent some astonishing mechanism that formerly seemed impossible. I wonder if Ernő Rubik is available?

Joachim Breitner's Incredible Proof Machine is a fun thing along these lines, but it's not at all an artifact.

Is there a name for this refinement of the subset ordering?

This was my question. I've never seen this ordering elsewhere, but it has a simple description. We start with a totally ordered finite set !!S!!. Then if !!A!! and !!B!! are subsets of !!S!!, we deem !!A \preceq B!! if there is an injective mapping !!f:A\to B!! where !!a \le f(a)!! for each !!a\in A!!.

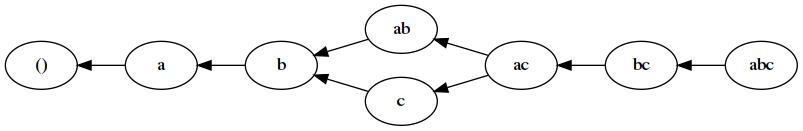

So for example, if !!S!! has three elements !!a\lt b\lt c!! then the ordering I want, on !!2^S!!, has this Hasse diagram:

!!\{b\}\prec\{a,b\}!! because we can match the !!b!!'s. And !!\{a,b\}\prec \{a, c\}!! because we can match !!a!! with !!a!! and !!b!! with !!c!!. But !!\{c\}\not\prec\{a, b\}!! because we can't match !!c!! with either !!a!! or with !!b!!, and !!\{a,b\}\not\prec\{c\}!! because, while we can match either of !!a!! or !!b!! with !!c!!, we aren't allowed to match both of them with !!c!!.
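The matching condition can be checked mechanically. Here is a minimal sketch, assuming the elements of !!S!! are represented by any Python values that compare with `<=`; the function names `precedes` and `precedes_brute` are made up for illustration. It relies on the observation that a suitable injection exists exactly when the largest element of !!A!! can be matched with the largest element of !!B!!, the second largest with the second largest, and so on.

```python
from itertools import permutations

def precedes(A, B):
    """Return True if A ⪯ B: some injection f: A -> B has a <= f(a) for all a."""
    xs = sorted(A, reverse=True)
    ys = sorted(B, reverse=True)
    # Pair the largest of A with the largest of B, and so on down.
    return len(xs) <= len(ys) and all(x <= y for x, y in zip(xs, ys))

def precedes_brute(A, B):
    """Same relation, checked by trying every injection explicitly."""
    xs = list(A)
    return any(all(x <= y for x, y in zip(xs, image))
               for image in permutations(B, len(xs)))

print(precedes({"b"}, {"a", "b"}))       # match the b's
print(precedes({"a", "b"}, {"a", "c"}))  # a with a, b with c
print(precedes({"c"}, {"a", "b"}))       # nothing can match c
print(precedes({"a", "b"}, {"c"}))       # c cannot be used twice
```

The brute-force version is only there as a cross-check on small sets; it tries every injection and so is far slower.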

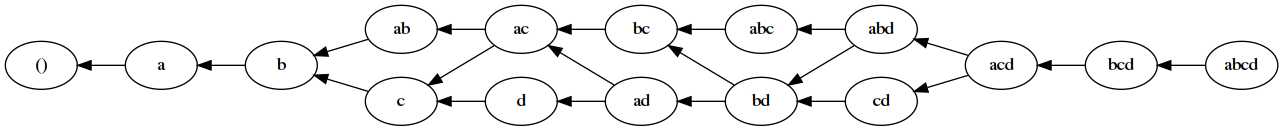

Here's the corresponding Hasse diagram for !!|S|=4!!:

Maybe a better way to describe this is: the bottom element is !!\varnothing!!. To go up the lattice one step, you either increment one of the elements of the current set, or you insert an !!a!! if there isn't one already. So from !!\{b,d\}!! you can either increment the !!b!! to move up to !!\{c, d\}!! or you can insert an !!a!! to move up to !!\{a, b, d\}!!.
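The step-up rule just described can be sketched in a few lines. This assumes !!S!! is represented as the integers !!1,\ldots,n!! (so !!a=1!!, !!b=2!!, and so on), and that an element can be incremented only when its successor is not already in the set; the name `steps_up` is hypothetical.

```python
def steps_up(current, n):
    """Sets one step above `current`, where S is represented as 1..n."""
    ups = []
    for x in sorted(current):
        # Increment x, provided x+1 exists in S and is not already present.
        if x < n and x + 1 not in current:
            ups.append(frozenset(current - {x} | {x + 1}))
    if 1 not in current:
        # Insert the smallest element if it is missing.
        ups.append(frozenset(current | {1}))
    return ups

# From {b, d} with |S| = 4: expect {c, d} and {a, b, d}.
print(steps_up(frozenset({2, 4}), 4))
```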

This ordering comes up in connection with a problem I've thought about a lot: Say you have a number !!N!! and you want to find !!AB=N!! with !!A!! and !!B!! as close together as possible. Even if you have the prime factorization of !!N!! available, it's not usually clear what the answer is. (In general it's NP-hard.)

If !!N!! is the product of two primes, !!N=p_1p_2!! the answer is obvious. And if !!N!! is a product of three primes !!N =p_1p_2p_3!! there is a definitive answer. Without loss of generality, !!p_1 ≤ p_2 ≤ p_3!!, and the answer is simply that !!A=p_1p_2, B=p_3!! is always optimal.

But if !!N =p_1p_2p_3p_4!! it can go two different ways. Assuming !!p_1 ≤ p_2 ≤ p_3 ≤ p_4!!, it usually turns out that the optimal solution is !!A=p_1p_4, B=p_2p_3!!. But sometimes the optimal solution is !!A=p_1p_2p_3, B=p_4!!. These are the only two possibilities.
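For small cases the two behaviors are easy to see by brute force. The following is only a sketch: it tries every subset of the prime factors, which takes exponential time, and the name `best_split` and the sample factor lists are chosen purely for illustration.

```python
from itertools import combinations
from math import prod

def best_split(primes):
    """Return (A, B) with A*B = prod(primes), A <= B, and B - A minimal."""
    n = prod(primes)
    best = (1, n)
    for r in range(len(primes) + 1):
        for chosen in combinations(primes, r):
            a = prod(chosen)
            b = n // a
            if a <= b and b - a < best[1] - best[0]:
                best = (a, b)
    return best

print(best_split([2, 3, 5, 7]))   # splits as p1*p4 and p2*p3
print(best_split([2, 2, 2, 7]))   # splits as p1*p2*p3 and p4
```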

Which ways of splitting the prime factors might be optimal relates to those Hasse diagrams above. The possibly-optimal splits between !!A!! and !!B!! correspond to nodes that are just at the boundary of the left and right halves of the diagram.

Nobody had an answer for what this order was called, so I could not look it up. This is OK, I will figure it all out eventually.

[Other articles in category /math/se] permanent link

Fri, 09 Jun 2023

Math SE report 2023-05: Arguments that don't work, why I am a potato, and set theory as a monastery

How to shift a power series to be centered at !!a!!?

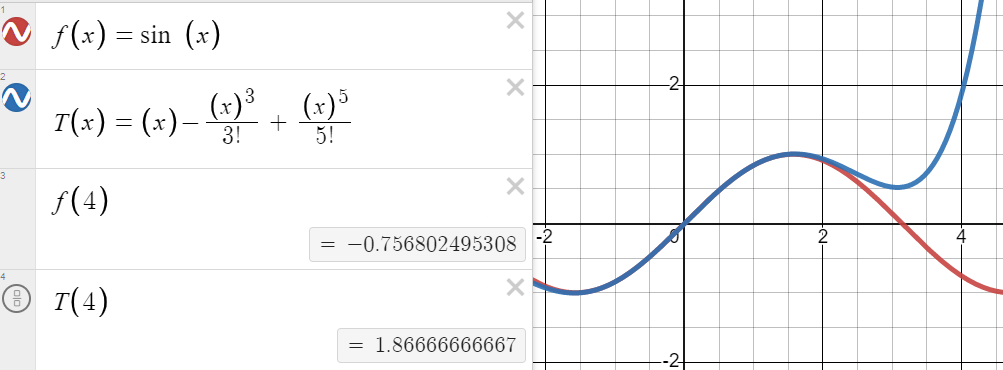

OP observed that while the Taylor series for !!\sin x!!, centered at zero, is a good approximation near !!x=0!!, it is quite inaccurate for computing !!\sin 4!!:

They wanted to know how to use it to compute a good approximation for !!\sin 4!!. But the Taylor series centered around !!4!! is no good for this, because it only tells you that when !!x!! is close to !!4!!, $$\sin x \approx \sin 4 + (x-4)\cos 4 + \ldots, $$ which is obviously useless: put !!x=4!! and you get !!\sin 4 = \sin 4!!.

I'd written about Taylor series centering at some length before, but that answer was too long and detailed to repeat this time. It was about theory (why do we do it at all) and not about computation.

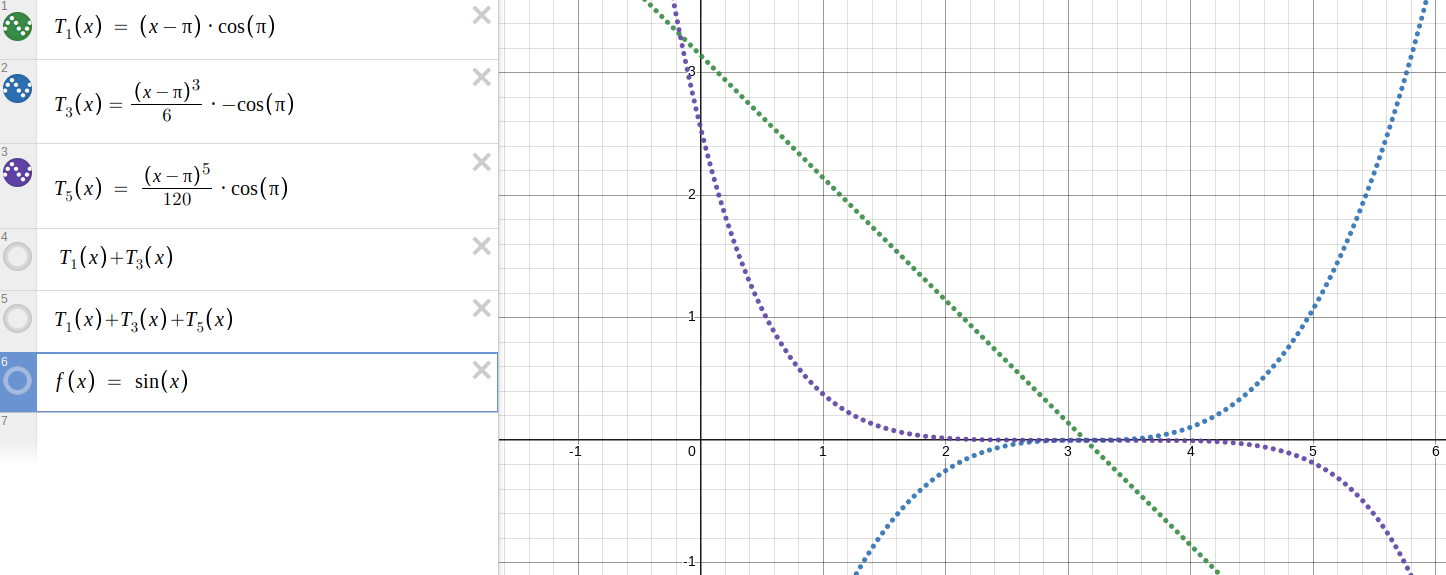

So I took a good suggestion from the comments, which is that if you want to compute !!\sin 4!! you should start with the Taylor series centered around !!π!!:

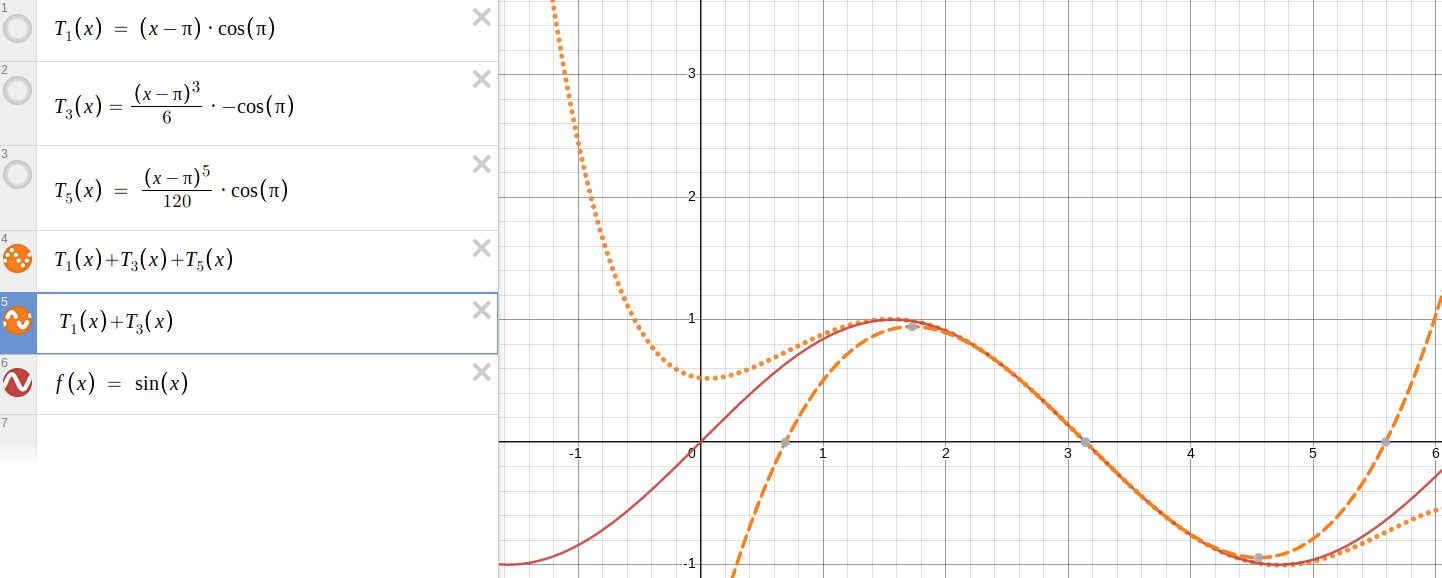

$$\begin{align} \sin x & \approx \sin \pi + (x-\pi)\cos \pi - \frac{(x-\pi)^2}{2}\sin \pi - \frac{(x-\pi)^3}{6}\cos \pi + \ldots \\ & = -(x-\pi) + \frac{(x-\pi)^3}{6} - \frac{(x-\pi)^5}{120} + \ldots \end{align} $$

because the !!\sin \pi!! terms vanish and !!\cos \pi = -1!!. I did some nice rainbow-colored graphs in Desmos.
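To see the difference numerically, here is a small sketch comparing three terms of each series at !!x=4!!. The function names are made up, and the series about !!\pi!! is computed using !!\sin x = -\sin(x-\pi)!!, which gives exactly the second line of the display above.

```python
import math

def sin_about_zero(x, terms=3):
    """Partial sum of the Maclaurin series x - x^3/3! + x^5/5! - ..."""
    return sum((-1) ** k * x ** (2 * k + 1) / math.factorial(2 * k + 1)
               for k in range(terms))

def sin_about_pi(x, terms=3):
    """Partial sum of the series about pi: -(x-pi) + (x-pi)^3/3! - ..."""
    return -sin_about_zero(x - math.pi, terms)

print(math.sin(4))        # reference value from the library
print(sin_about_zero(4))  # three terms centered at 0
print(sin_about_pi(4))    # three terms centered at pi
```

With the same number of terms, the series centered at !!\pi!! lands much closer to the library value, because !!4-\pi!! is less than !!1!! while !!4-0!! is not.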

I just realized I already wrote this up last month. And do you know why? It's because I copied this article from last month's, forgot to change the subject line from “2023-04” to “2023-05”, and because of that forgot that I was doing May and not April. Wheeee! This is what comes of writing blog articles at 3 AM.

Well anyway, continuing with May, we have…

Rational solutions for !!x^3+y^3=1!! where both x and y are non-negative

OP wanted solutions to $$x^3 + y^3 = 1,$$ and had done some research, finding a relevant blog post that they didn't understand, which observed that if !!x!! and !!y!! were solutions, so too would be certain functions of !!x!! and !!y!!, and this allows an infinite family of solutions to be developed if one knows a solution to begin with.

Unfortunately, there are no nontrivial rational solutions to !!x^3 + y^3 = 1!!, as has been known for some time. The blog post that OP found was discussing !!x^3 + y^3 = 9!!, for which !!\langle x, y\rangle = \langle 1, 2\rangle !! is an obvious starting point.

OP asked a rather odd question in the comments:

Why is !!(0, 1)!! not a start?

Had they actually tried this, they would have seen that if they started with !!\langle x, y\rangle = \langle 0, 1\rangle !!, when they computed the two functions that were supposed to give them another solution, they got !!\langle 0, 1\rangle !! back again. I told OP to try it and see what happened. (Surprising how often people forget this. Lower Mathematics!)
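Here is what "try it and see" might look like. This sketch uses a classical tangent-line formula for curves !!x^3+y^3=N!!; it is an assumption that this matches the functions in the blog post OP found, and the name `next_solution` is made up. Exact rational arithmetic avoids any roundoff.

```python
from fractions import Fraction

def next_solution(x, y):
    """Map a rational point on x^3 + y^3 = N to another point on the same curve."""
    x, y = Fraction(x), Fraction(y)
    a, b = x ** 3, y ** 3
    # Undefined when x^3 == y^3 (division by zero).
    return x * (a + 2 * b) / (a - b), -y * (2 * a + b) / (a - b)

print(next_solution(1, 2))   # a new point on x^3 + y^3 = 9
print(next_solution(0, 1))   # on x^3 + y^3 = 1: the same point again
```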

This reminds me a bit of a post I replied to long ago that asked why we can't use induction to prove the Goldbach conjecture. Well, what happens when you try? The base case is trivial, so far so good. The induction case says here you go, for every even number !!k < n!! I give you primes !!p_k!! and !!q_k!! with !!p_k+q_k = k!!. Your job is to use these to find primes !!p_{k+2}!! and !!q_{k+2}!! with !!p_{k+2}+q_{k+2} = k+2!!. Uhhh? What now?

Proving !!n(n^2+5)!! is always even

Mathematically this is elementary, but the pedagogy is interesting.

OP had already proved this by considering even and odd cases separately, but wanted to know if an induction proof was possible. They had started one, but gotten stuck.

Three people, apparently not reading the question, provided proofs by considering even and odd cases separately. One other provided a proof by induction that was “a bit hairy”. But I think a better answer engages with OP's attempt at an induction proof: Instead of “here's a way it could be done”, it's better to provide “here's how you could have made your way work”.

I used a trick, which is that instead of taking !!\Phi(x)!! to mean “!!f(x)!! is even”, and proving !!\Phi(x)!! for all !!x!! by induction, I took !!\Phi(x)!! to mean “!!f(x)!! is even and !!f(x+1)!! is also even”. You have to prove more, but you have more to work with. For a similar approach to a similar problem, see Proof that every third Fibonacci number is even.

The key feature that makes this a good answer is where it says:

For !!f(n+2)!! we will use your method. …. Subtracting !!n(n^2+5) = n^3 + 5n!! as you suggested ….

It's important to point out to the student when their idea would have worked. This is important in code reviews too. The object is not to make the junior programmer do it the same way you would have, it's to help them make their own idea work well. I ought to write an article about that.
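A sketch of the subtraction step mentioned in the quote, writing !!f(n) = n(n^2+5)!!. The expansion here is a reconstruction, not quoted from the answer:

$$\begin{align} f(n+2) - f(n) & = (n+2)\bigl((n+2)^2+5\bigr) - (n^3+5n) \\ & = (n^3 + 6n^2 + 17n + 18) - (n^3 + 5n) \\ & = 6n^2 + 12n + 18 \\ & = 2(3n^2+6n+9) \end{align} $$

So !!f(n+2)!! differs from !!f(n)!! by an even number, and is even whenever !!f(n)!! is. Together with the base cases !!f(0)=0!! and !!f(1)=6!!, this is enough to carry the strengthened induction through.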

Is an argument valid if assuming its premises and conclusion leads to no contradiction?

This was one of those questions where OP proposed some logical principle that was totally invalid and asked why it isn't allowed, something about why you can't assume the conclusion and show that it satisfies the required properties.

It's a curious question because there's such a failure of instruction here: OP has not grasped what it means to be a valid deduction, that the logic used in mathematics is the same logic that is used everywhere else, and that mathematical arguments are valid or invalid for the same reasons that those same arguments are valid or invalid when thinking about anything else: the invalid arguments lead you to the wrong conclusions!

Anyway, I don't want to quote my whole answer here, but you should check it out, it's amusing. OP didn't like it though.

Proving or disproving that if !!A^2X=λ^2X!! then !!AX=λX!!

OP did like this one, and so do I, it's hilarious. The question is apparently something about linear transformations and eigenvalues and stuff like that, which I never learned as well as I should have, owing to my undergraduate linear algebra class being very poor. (Ugh, so many characteristic polynomials.)

Someone else posted a linear algebra (dis)proof which was very reasonable and which got several upvotes. But I realized that this is not actually a question about eigenvalues! It is elementary algebra: If you have an example where !!AX=λX!! with !!λX\ne 0!!, then !!A^2X=λ^2X=(-λ)^2X!!, so !!-λ!! satisfies the hypothesis also, yet !!AX\ne -λX!!, and this is a counterexample to the claim. OP was pleased with this and accepted my answer instead of the smart one with the upvotes.
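A concrete instance of the sign trick, as a minimal sketch. The identity matrix, the vector, and the helper `matvec` are all chosen here for illustration and do not come from the original answer.

```python
def matvec(M, v):
    """Multiply a square matrix (list of rows) by a vector (list)."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

A = [[1, 0], [0, 1]]   # identity matrix, chosen for simplicity
X = [1, 0]
lam = -1               # note lam**2 == 1

A2X = matvec(A, matvec(A, X))
hypothesis = A2X == [lam ** 2 * x for x in X]      # A^2 X == lam^2 X
conclusion = matvec(A, X) == [lam * x for x in X]  # A X == lam X
print(hypothesis, conclusion)
```

The hypothesis holds and the conclusion fails, so the claim is disproved without any mention of eigenvalues.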

This kind of thing is why my Math SE avatar is a potato.

Can we treat two equal sets as being distinct mathematical objects?

There was an answer to this that I felt was subtly wrong. It said:

The axiom that answers your question is known as Extensionality: Sets are uniquely determined by their elements.

and then started talking about ZFC, which seems to me to be an irrelevant confusion.

The formal idea of sets comes from the axioms, but the axioms themselves come from a sort of preformal idea of sets. We want to study what happens when we have these things-that-have-elements, and when we ignore any other properties that they might have. The axiom is just a more formal statement of that. Do sets have properties, such as identities, other than their elements? It's tempting to say “no” as this other person did. But I think the more correct answer is “it doesn't matter”.

Think of a monastery where, to enter, you must renounce all your worldly possessions. Must you legally divest yourself of the possessions in order to enter the monastery? Will the monks refuse you entry if, in the view of the outside world, you still own a Lamborghini? No, they won't, because they don't care. The renunciation is what counts. If you are a monk and you ask another monk whether you still own the Lamborghini, they will just be puzzled. You have renounced your possessions, so why are you asking this? Monks are not concerned with Lamborghinis.

Set theory is a monastery where the one requirement for entry is that you must renounce your interest in properties of sets other than those that come from their elements. Whether a set owns a Lamborghini is of no consequence to set theorists.


Fri, 28 Apr 2023

Show how the student could have solved it

A few days ago I offered these maxims about pedagogy:

It's not enough to show the student the answer; you should try to show them how to find the answer.

It's not enough to show the student how you can find the answer; you should try to show them how they could have found the answer.

A nice illustration popped up on Math SE this morning. OP asks:

If all eigenvalues of a matrix are 0 or 1, does that imply the matrix is idempotent?

Shortly afterward a comment from PrincessEev said, opaquely:

The matrices $$\left[\begin{matrix} 0&x\\ 0&0 \end{matrix}\right]$$ are obvious counterexamples for !!x\ne 0!!.

Uh, they are? It wasn't obvious to me. I mean, I think I see why the eigenvalues must be zero, without doing the calculation. But where did this example come from?

But then later they redeemed themselves by adding another comment:

it was just my first instinct to try a few examples with what felt like a bold claim: matrices with enough well-placed zeroes tend to vanish when raising them to powers

I understand now! Yeah, I could have thought of that, but didn't. So the second comment actually taught me something, not what the answer is, which is not very useful, because who cares?, but how to find the answer, which contains knowledge that might be generally useful.
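For anyone who wants to check the counterexample by hand, here is a minimal sketch. The value !!x=5!! is arbitrary and the helper `matmul` is made up for the illustration.

```python
def matmul(P, Q):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(P[i][k] * Q[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

x = 5  # any nonzero value; 5 is arbitrary
M = [[0, x], [0, 0]]

trace = M[0][0] + M[1][1]
det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
# Characteristic polynomial is t^2 - trace*t + det; here both are 0,
# so the only eigenvalue is 0.
square = matmul(M, M)
is_idempotent = square == M
print(trace, det, square, is_idempotent)
```

The well-placed zeroes make the square vanish entirely, so the matrix cannot equal its own square unless !!x=0!!.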


Thu, 20 Apr 2023

Math SE report 2023-04: Simplest-possible examples, pointy regions, and nearly-orthogonal vectors

Polyhedra has more corners than facets

This one was a bit puzzling because it asked:

Is it true that [a polyhedron] has always more/as many corners than facets? I haven't found a counterexample…

(By ‘facets’ I assume OP meant ‘faces’.)

This is puzzling because there are so many counterexamples. For example, every dipyramid has more faces than corners. A dipyramid is what you get if you take two pyramids and glue their bases together. Maybe you want to say this is obscure, but an octahedron is a dipyramid and one might expect anyone asking about polyhedra to know about octahedra. I wonder what examples this person did consider?

In fact, for any polyhedron with !!F!! faces and !!V!! vertices, there is a corresponding “dual” polyhedron with !!V!! faces and !!F!! vertices, so for almost any polyhedron you can think of, if that polyhedron is not already a counterexample, then its dual is. A cube is not, but its dual is — this is the octahedron again.
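The counts are simple enough to tabulate. This is a sketch under the usual description of a dipyramid over an !!n!!-gon; the function names are hypothetical.

```python
def dipyramid_counts(n):
    """(vertices, edges, faces) of the dipyramid over an n-gon, n >= 3."""
    vertices = n + 2      # n around the shared base, plus two apexes
    edges = 3 * n         # n base edges, plus n to each apex
    faces = 2 * n         # n triangles on each side
    return vertices, edges, faces

def dual_counts(counts):
    """Dual polyhedron: vertices and faces trade places, edges stay."""
    v, e, f = counts
    return f, e, v

cube = (8, 12, 6)
print(dipyramid_counts(4))   # the octahedron
print(dual_counts(cube))     # the dual of the cube has the same counts
```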

Finding sets !!A!!, !!B!!, and !!C!! such that !!A\in B!!, !!B \in C!!, but !!A \notin C!!

I thought this one was pedagogically interesting. OP made a mistake in their approach that is quite common:

The problem tells us !!B = \{A,b_1,b_2,\ldots\}!! and !!C = \{B,c_1,c_2\}!!.

The mistake OP made here was to start by trying to find the most general possible example. Yes, if !!A\in B!! then in general !!B = \{A,b_1,b_2,\ldots\}!!. This might be a more helpful observation if the question had asked for some universal property of all such !!A, B, C!!. Then you could add constraints to the general case and see if you had anything left at the end. But this problem only asked for one example. So instead of considering the most general case of !!A\in B!!, and therefore the most complex form of the idea, the first thing one should try is the simplest possible example of !!A\in B!!, which is just !!B = \{A\}.!!

Then similarly one should try !!C = \{B\}!!. Obviously the required properties !!A\in B!! and !!B\in C!! are satisfied. What about !!A\notin C!!?

Since the only element of !!C!! is !!B!!, the answer is easy: !!A\notin C!! unless !!A=B = \{A\}!!.

So now we just have to avoid !!A=\{A\}!!. Again let's try the simplest thing that could possibly work: !!A=\emptyset!!. And then we win, because indeed !!\emptyset\ne \{\emptyset\}!!, since the left side is empty and the right side isn't.

Did we get lucky here? No! The axiom of foundation guarantees that literally any !!A!! will work. But you don't have to know that to find an example, because literally any !!A!! will work.
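The simplest-possible example is small enough to check directly. A sketch using Python's `frozenset` as a stand-in for sets whose elements are themselves sets:

```python
A = frozenset()        # the empty set
B = frozenset({A})     # {A}
C = frozenset({B})     # {B}

print(A in B, B in C, A in C)
```

A `frozenset` is built from elements that already exist, so the troublesome case !!A=\{A\}!! cannot even be constructed here.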

This is Lower Mathematics in action. The abstract approach is useful if you are trying to prove some theorem, but if all you want is to find an example, the abstract approach is overkill.

Volume obtained by rotating a region around two different lines

OP considered the region bounded by the curves !!y=x^2!! and !!y=\sqrt x!! for !!0\le x, y\le 1!!, and then the solids of revolution obtained by revolving this region around the lines !!y=0!! and !!y=1!!. They said:

I expected the volume obtained by rotating about !!y=1!! to be identical with the volume obtained by rotating about the !!x!!-axis. To my surprise, calculation shows different results.

Many people would have posted an answer to this that simply did the calculation, sometimes one with no words in it. But I think this misses the point of the question, which is about OP's intuition. Why were they wrong?

Something that has been on my mind lately is an elaboration of a certain principle of pedagogy. Everyone knows the principle:

It's not enough to show the student the answer; you should try to show them how to find the answer.

Not everyone follows this, but at least most people are aware of it.

But my decades of experience watching people teach math have led me to believe that this is insufficient. There's a higher-order version of this principle that is also important:

It's not enough to show the student how you can find the answer; you should try to show them how they could have found the answer.

And by ‘they’ I don't mean ‘a student’; I mean the student, the specific one sitting in front of you, who knows what they know and can do what they can do.

This is hard.

(I think the !!A\in B, B\in C, A\notin C!! thing above is another example of this. Three people answered that question by pulling solutions out of thin air, but how much does that help OP solve the next problem of this type?)

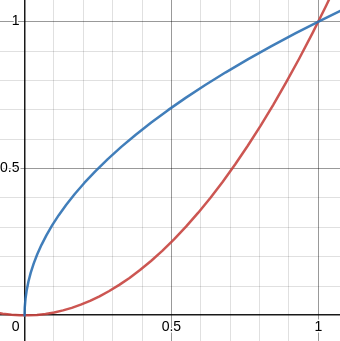

Anyway, I digress. The region in question looks like this:

I observed that the upper end is much narrower than the lower end. You could count boxes to verify this, but I think it's obvious, and said:

Which end would you rather be poked with?

Then I pointed out that if you revolve the region around !!y=1!!, the thick end travels a long way and sweeps out a large volume, whereas if you revolve it around !!y=0!! the thick end is closer to the axis of revolution, so does not sweep out so much volume. So just from looking at the picture, one might guess that the volume will be larger when revolved around !!y=1!!, which is what OP originally reported.

I did not actually do the calculation, so it's conceivable that I was utterly wrong, but I suspect not.
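For readers who want to check the guess, here is a rough numerical sketch using the washer method with a midpoint rule. The function name and the step count are arbitrary choices, and the method as written only applies when the axis does not pass through the interior of the region, which is the case for both !!y=0!! and !!y=1!!.

```python
import math

def revolve_volume(axis_y, steps=100_000):
    """Volume from revolving the region between y=x^2 and y=sqrt(x),
    0 <= x <= 1, around the horizontal line y = axis_y (washer method)."""
    h = 1.0 / steps
    total = 0.0
    for i in range(steps):
        x = (i + 0.5) * h                      # midpoint rule
        r1 = abs(x * x - axis_y)               # distance of lower curve from axis
        r2 = abs(math.sqrt(x) - axis_y)        # distance of upper curve from axis
        total += math.pi * abs(r1 * r1 - r2 * r2) * h
    return total

around_x_axis = revolve_volume(0.0)
around_y_1 = revolve_volume(1.0)
print(around_x_axis, around_y_1)
```

Doing the integrals by hand gives !!\frac{3\pi}{10}!! for the !!x!!-axis and !!\frac{11\pi}{30}!! for !!y=1!!, so the picture-based guess points the right way.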

Definition of Graph Isomorphism

This was not that interesting, but it is a demonstration of a couple of things:

Finding the simplest possible example

Because it's usually easier for someone to understand a simple example well enough to generalize it than it is for them to understand an abstract, general construction well enough to specialize it to an example.

Math SE will often ignore subtle answers to challenging questions, while giving many upvotes to trivialities

This post and the octahedron one were my most upvoted posts of the month and also the most trivial. This is why one should ignore upvotes: they are not correlated with anything of real importance.

Help understanding proof: classifying groups of order 21

I may have kinda blown this one. My answer was way too long. OP was asking about specific steps in some group theory proof, ultimately related to the formula $$(aba^{-1})^n = ab^na^{-1}.$$

Algebraically this is quite easy to show, and I did. But it also has deep and essential intuitive content, which I summarized like this:

It says that if you are going to repeat several times the operation of turning your head, then doing !!b!!, then turning your head back, you can skip some of the head-turning and just turn your head once, do operation !!b!! repeatedly, and turn your head back at the end.

This !!aba^{-1}!! thing, called “conjugation”, is incredibly important in group theory, and I have often felt that my group theory course did not make this clear. As I recall, the course observed that the mapping !!\varphi_a : x\mapsto axa^{-1}!! is always a group automorphism, and went on from there. Which indeed it is, but so what? Why do we care about that particular transformation, anyway?

But the intuitive content of the statement about the automorphism is that the symmetries of an object don't change when you turn your head. That's why it's important!

When are two rotations of a sphere conjugate? Exactly when they rotate by the same amount around their respective axes. (“Turn your head!”)

Why are two permutations conjugate if and only if they have the same cycle structure? Because this is exactly when they are equivalent under renaming of the objects being permuted; renaming the objects is analogous to "turning your head" for this kind of symmetry.
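Both the formula !!(aba^{-1})^n = ab^na^{-1}!! and the cycle-structure fact can be spot-checked on small permutations. This is a sketch only: permutations are represented as tuples mapping index to image, the two sample permutations are arbitrary, and all the helper names are made up.

```python
def compose(p, q):
    """Permutation 'p after q'; tuples map index -> image."""
    return tuple(p[q[i]] for i in range(len(q)))

def inverse(p):
    inv = [0] * len(p)
    for i, image in enumerate(p):
        inv[image] = i
    return tuple(inv)

def power(p, n):
    result = tuple(range(len(p)))
    for _ in range(n):
        result = compose(p, result)
    return result

def cycle_type(p):
    """Sorted list of cycle lengths."""
    seen, lengths = set(), []
    for start in range(len(p)):
        length, i = 0, start
        while i not in seen:
            seen.add(i)
            i = p[i]
            length += 1
        if length:
            lengths.append(length)
    return sorted(lengths)

a = (1, 2, 0, 4, 3)   # arbitrary example permutations of {0,...,4}
b = (0, 2, 1, 4, 3)
conj = compose(a, compose(b, inverse(a)))   # a b a^{-1}

print(power(conj, 3) == compose(a, compose(power(b, 3), inverse(a))))
print(cycle_type(b), cycle_type(conj))
```

Here `a` plays the role of the renaming: `conj` does to the renamed objects what `b` does to the original ones, which is why the cycle lengths agree.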

So whenever the topic of conjugation comes up, I am tempted to launch into a long explanation of the significance of conjugation and its intuitive understanding. Which might have been helpful in this case, but it might have been a completely unnecessary distraction, and I should probably have resisted.

What definition of "nearly orthogonal" would result in "In a 10,000-dimensional space there are millions of nearly orthogonal vectors"?

This was one of those cases where OP asked a very slightly under-baked question and several people jumped in to say it made no mathematical sense at all. (“It's a figure of speech” says one comment. No, it isn't. “I doubt that reference is to a precisely defined concept”, says another. There are more things in heaven and earth, Horatio… “I call bullshit, or imprecise speech,” says a third. Heavens, such foul language!)

I have complained about this at length in the past: I think Math SE persons are too quick to jump from “I have not heard of that” to “it does not exist” and then to “it cannot exist”, or from “I don't quite understand that” to “nobody can understand that” and then to “that is incomprehensible nonsense”.

Two vectors !!u!! and !!v!! are said to be orthogonal if their inner product !!\langle u,v\rangle!! is exactly zero. So if you don't know what “nearly orthogonal” means, you might guess that it means that the inner product is nearly zero: $$\left\lvert \langle u,v\rangle \right\rvert < \epsilon$$ for some small specified !!\epsilon!!. For unit vectors !!u!! and !!v!!, the angle between them would then be approximately between !!\frac\pi2 - \epsilon!! and !!\frac\pi2 + \epsilon!!, which is nearly a right angle; hence “nearly orthogonal”. This is not exactly subtle thinking.
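A quick experiment makes the guess plausible. This sketch draws a handful of random unit vectors in 10,000 dimensions and reports the largest inner product among them; the seed, the number of vectors, and the function names are arbitrary. It illustrates the definition only and does not establish the “millions” claim.

```python
import math
import random

def random_unit_vector(dim, rng):
    """A random direction: Gaussian coordinates, scaled to length 1."""
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def largest_inner_product(vectors):
    """Largest |<u, v>| over all pairs of distinct vectors."""
    return max(abs(sum(x * y for x, y in zip(u, v)))
               for i, u in enumerate(vectors)
               for v in vectors[i + 1:])

rng = random.Random(2023)   # fixed seed, arbitrary
vectors = [random_unit_vector(10_000, rng) for _ in range(10)]
worst = largest_inner_product(vectors)
print(worst)
```

Even the worst pair has an inner product close to zero, so every pair meets the !!\epsilon!! condition for quite a small !!\epsilon!!.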

Another user helpfully linked to a Math Overflow post that discussed essentially the same question, with the title “Almost orthogonal vectors”. So bullshit it isn't, and the question there was sufficiently clear that six people thought it was worth answering, including some guy named Tim Gowers.

I didn't know the answer (although I do now!), but if you don't know the answer, you can still sometimes be useful by writing up the answers of other people who are smarter than yourself, that is called “scholarship”.

In writing it up I almost made a horrible mistake. At one point my draft said something like:

The top answer there gives a bound and claims it is implied by the Johnson-Lindenstrauss lemma. I think the bound might not be quite correct, because the Johnson-Lindenstrauss lemma seems to apply to a somewhat different situation, and…

Fortunately, I realized before posting that the person who had written that answer was in fact William B. Johnson, after whom the Johnson-Lindenstrauss lemma was named, and there was quite a good chance that he did not misapply his own theorem. Heh. Yikes.

It's funny now, but if I had actually made that mistake I would have been mortified.

Also

I have an article with pretty diagrams about how to expand a Taylor series around !!x=\pi!!, with a nice Desmos demonstration that you might enjoy playing with. Press the little ▶️ button in the box that defines the parameter !!a!! and watch the cubic polynomials whip back and forth.

[ Addendum 20230421: Eric Roode says that the animation reminds him of “the robot from Lost in Space sliding back and forth, waving its arms wildly, and saying ‘Danger, Will Robinson! Danger!’”. Same. ]

I have already written a separate article about this post that asks how to compute the integral $$\int_0^{2000} e^{x/2-\lfloor x/2\rfloor}\; dx$$

which you might like to read if you didn't already.


Sat, 15 Apr 2023

I liked this simple calculus exercise

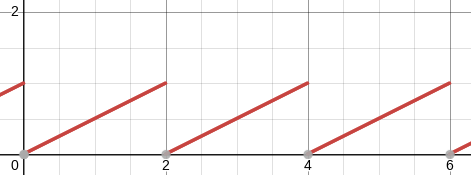

A recent Math SE question asked for help computing the value of $$\int_0^{2000} e^{x/2-\left\lfloor x/2\right\rfloor}\; dx.\tag{$\star$}$$

(!!\left\lfloor \frac x2 \right\rfloor!! means !!\frac x2!! rounded down to the nearest integer.)

Often when I see someone's homework problems I exclaim “what blockhead TA assigned this?” But I think this is a really good exercise. Here's why.

In a calculus class, some people will have learned to integrate common functions by rote manipulation of the expressions. They have learned a set of rules for converting $$\int_a^b x^k\; dx$$ to $$\left.\frac{x^{k+1}}{k+1}\right\rvert_a^b$$ and then to $$\frac{b^{k+1}}{k+1}- \frac{a^{k+1}}{k+1}$$ and such like, and they grind through the algebra. If this is all someone knows how to do, they are going to have a lot of trouble with !!(\star)!!. They might say “But nobody ever taught us how to integrate functions with !!\left\lfloor \frac x2\right\rfloor!!”.

A calculus tyro trying to deal with this analytically might also try rewriting $$e^{x/2-\left\lfloor x/2\right\rfloor}$$ as $$\frac{e^{x/2}}{e^{\left\lfloor x/2\right\rfloor}}$$ but that makes the problem harder, not easier.

To solve this, the student has to actually understand what the integral is computing, and if they don't they will have to learn something about it. The integral is computing the area under a curve. If you graph the function $$\frac x2-\left\lfloor \frac x2\right\rfloor$$

you find that it looks like this:

If the interval of integration in !!(\star)!! were only !!(0,2)!! instead of !!(0, 2000)!!, the problem would be very easy because, on this interval, the complicated exponent is identically equal to !!\frac x2!!:

$$\begin{align} \int_0^2 e^{x/2-\left\lfloor x/2\right\rfloor}\; dx & = \int_0^2 e^{x/2}\; dx \\ & = \left. 2e^{x/2} \right\rvert_0^2 \\ & = 2e-2 \end{align} $$

Since the function is completely periodic, integrating over any of the !!1000!! intervals of length !!2!! will produce the same value, so the final answer is simply $$1000\cdot (2e-2).$$
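The periodicity argument is easy to sanity-check numerically. A minimal sketch with a midpoint rule; the step counts are arbitrary and the names are made up.

```python
import math

def f(x):
    """The integrand e^(x/2 - floor(x/2))."""
    return math.exp(x / 2 - math.floor(x / 2))

def midpoint_integral(func, a, b, steps):
    h = (b - a) / steps
    return h * sum(func(a + (i + 0.5) * h) for i in range(steps))

one_period = midpoint_integral(f, 0, 2, 2_000)
whole_range = midpoint_integral(f, 0, 2000, 200_000)
closed_form = 1000 * (2 * math.e - 2)

print(one_period, 2 * math.e - 2)
print(whole_range, closed_form)
```

The estimate over the whole range agrees closely with a thousand copies of the single-period value, which is the point of the exercise.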

But just pushing around the symbols won't get you there, to solve this problem you have to actually know something about calculus.

The student who overcomes this problem might learn the following useful techniques:

If some expression looks complicated, try graphing it and see if you get any insight into how it behaves.

Some complicated functions can be understood by breaking them into simple parts and dealing with the parts separately.

Piecewise-continuous functions can be integrated by breaking them into continuous intervals and integrating the intervals separately.

You can exploit symmetry to reduce the amount of calculation required.

None of this is deep stuff, but it's all valuable technique. Also they might make the valuable observation that not every problem should be solved by pushing around the symbols.


Sun, 19 Feb 2023

I had an unusually interesting batch of Math Stack Exchange posts recently.

I think all of my answers to these questions are worth reading in full, and if you like the math posts on my blog, you will like reading these SE posts also. Well, most of them. Maybe.

Summaries follow.

Confusion about equality: mathematical objects versus the symbols that describe them

This one is from last September but I'm really happy with it because it thoroughly addresses a very common misconception about mathematical notation:

Based on my understanding of equality, the statement !!(1+1)+1=1!!, contains no mathematical content beyond !!1=1!!, since the group element !!(1+1)+1!! literally is the group element !!1!!. This bothers me...

My answer begins with

It should; it's wrong.

I'm frequently surprised by how often this fallacy shows up on Math SE, often asserted as an obvious truth by people I thought would know better. So it's worth explaining in detail. I expect I'll be able to refer people to this answer when it comes up in the future.

A brief summary of my answer is:

- Mathematical expressions denote computations, not values.

- !!A=B!! means that the two computations eventually produce the same value.

- This does not, in general, mean that the computations have the same meaning.

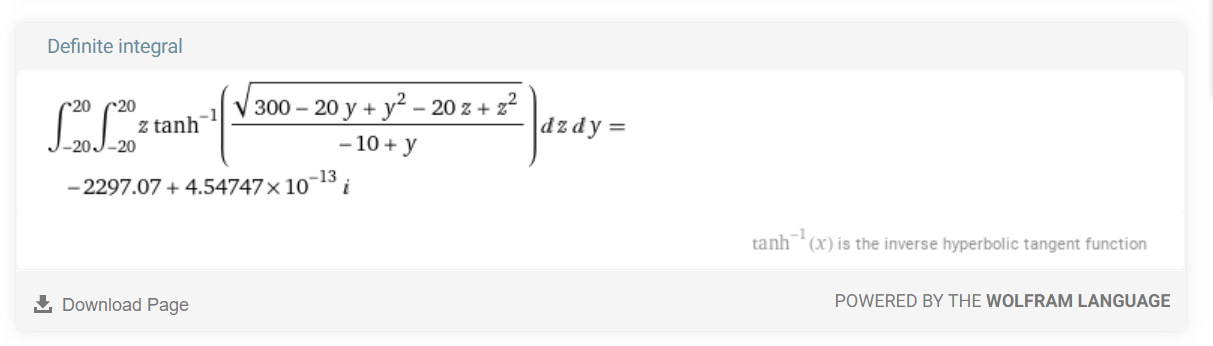

What does italic i mean in integral calculator?

The i means the imaginary unit, that !!i^2=-1!! thing. No surprise there. But the reason was a bit interesting. OP had Wolfram α compute some horrendous double integral:

and the answer should have been a real number, so what was !!i!! doing in there?

The answer: Floating-point roundoff error. Check out Claude Leibovici's detailed explanation of where the roundoff error comes from; it's much smarter than what I said, which was to mumble something about how Wolfram α “probably … used … some advanced technique …”, which sounds wise, but actually I had no idea what it might have done. Claude Leibovici actually has an explanation.
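A toy illustration of how roundoff can conjure an imaginary part out of a computation that should be real. This is not a reconstruction of whatever Wolfram α did; it only shows the general mechanism, using a difference that would be exactly zero in exact arithmetic.

```python
import cmath

exact_zero = 0.3 - (0.1 + 0.2)   # would be 0 in exact arithmetic
root = cmath.sqrt(exact_zero)    # complex square root
print(exact_zero, root)
```

The difference comes out as a tiny negative number, so its square root is a tiny multiple of !!i!! instead of zero.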

I was going to leave this out but I wanted to remind you all how much I despise floating-point arithmetic.

Can Peano's 9th axiom be expressed using a self-referential set definition?

This is one of those not-quite-baked questions where the initial answers act like it does not make sense (tier 4 or 5). But it does make sense and there is a good answer (tier 1).

The question asks if you can define the set of natural numbers by saying something like

If !!K = \{0\} \cup \{S(k)\mid k \in K\}!!, then !!K=\mathbb{N}!!.

The initial comments said no, it's self-referential. But so is:

$$ n! = \begin{cases} 1, & \text{if $n$ = 0} \\ n\cdot (n-1)!& \text{otherwise} \end{cases} $$

and nobody bats an eyelash at that. (The author of the comments later retracted his rejection.)

In fact it requires only a little bit of elaboration to make sense of such “circular” definitions. To interpret $$X = f(X)$$ you need to do two things. First, think of !!f!! as a mapping, and ask if it has any fixed points, any arguments !!x!! for which !!x=f(x)!! holds. And then, from the set of fixed points, find some unambiguous way to identify one of the fixed points as the one you want. If !!f!! is a mapping from sets to sets, it often happens that the family of fixed points is closed under intersections, and you can select the unique minimal fixed point that is a proper subset of all the others.
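The two steps can be tried out on a finite toy. This sketch swaps in a made-up mapping on subsets of !!\{0,\ldots,9\}!!, with successor taken to be “add 2 mod 10”, chosen so that there is more than one fixed point; none of the names come from the original question.

```python
from itertools import combinations

UNIVERSE = range(10)   # hypothetical finite stand-in for a universe of numbers

def f(X):
    """Toy analogue of K -> {0} ∪ {S(k) | k in K}, with S(k) = k + 2 mod 10."""
    return frozenset({0}) | frozenset((k + 2) % 10 for k in X)

def all_fixed_points():
    subsets = (frozenset(c) for r in range(11)
               for c in combinations(UNIVERSE, r))
    return [X for X in subsets if f(X) == X]

def least_fixed_point():
    """Iterate f from the empty set until nothing changes."""
    X = frozenset()
    while f(X) != X:
        X = f(X)
    return X

print([sorted(X) for X in all_fixed_points()])
print(sorted(least_fixed_point()))
```

In this toy the fixed points are the even numbers and the whole universe; their intersection is the even numbers, which is also what iterating from the empty set produces.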

This was all formalized by Dana Scott in the 1960s and it continues to underlie formal treatments of programming language semantics.

Is there a scenario for when changing the order of different quantifiers in a nested quantifier retain the original meaning?

This is interesting because some of the replies make the mistake of conflating the meaning of an expression with its value, a problem I discussed above in connection with something else. Two expressions of first-order logic may be logically equivalent, but this does not imply that they have the same meaning.

The question also looks superficially like “What is the difference between !!\forall x\exists y. R(x,y)!! and !!\exists y\forall x. R(x,y)!!?”, which is a FAQ. But it is not that question.

The question concerned expressions of the type !!\forall x.\exists y.P(x,y)!! and was further complicated by the implicit quantifier on the !!P!!. Are we asking if !!\forall x.\exists y.P(x,y)!! always has a different meaning from !!\forall y.\exists x.P(x,y)!! for all !!P!!? Or for a particular !!P!!? There are several similar-sounding questions that could be asked here, and my thinking about the variations is still not clear to me.

English (and standard mathematical terminology) is not well-equipped to discuss this sort of thing intelligibly. Or perhaps I just don't know how to do it. I had to work hard to write something I was satisfied with.

Cantor set - is it made of !![a,b]!! intervals or exclusively of singletons?

This question is a bit confused (every set is made of singletons) and I was worried that some know-it-all would jump in and tell this person that really the Cantor set is very simple. When actually the Cantor set is really weird and this is why it is such an important counterexample to so many plausible-seeming conjectures. As Von Neumann supposedly said, in mathematics one doesn't understand things, one just gets used to them. It can be hard for people who have gotten used to the Cantor set to remember what it is like for people who are grappling with it for the first time — or to remember that they themselves may not understand as well as they imagine they do.

When I write an answer to a question like this, in which I need to say “your idea is somewhat confused”, I like to place that remark in close proximity to “… because the situation is a confusing one” so that OP doesn't feel that they are the only person in the world who is puzzled by the Cantor set.

(Sometimes they are the only person in the world who is puzzled by whatever it is, and then it's okay for them to feel that way. I wouldn't lie and say that the situation was a confusing one when I thought it wasn't. If the matter is actually simple it's better to say so, because that can be valuable information. Beginners often overthink simple issues. But the Cantor set is not one of those situations!)

A valuable pedagogical strategy is finding a simpler example. The Cantor set does not have all the same properties as !!\Bbb Q!!. But !!\Bbb Q!! does seem to share with the Cantor set the specific properties that were troubling this person. Does !!\Bbb Q!! contain any intervals? Like the Cantor set, no. Is !!\Bbb Q!! a union of singletons? It's not clear what OP meant by this, but, uh, probably? And if not we can at least find out more about what OP thought they meant, by asking about !!\Bbb Q!!. So it's a good idea to take the focus off of the Cantor set, which is weird, complicated, and unfamiliar, and put it on !!\Bbb Q!!, which is much less weird, somewhat less complicated, and much more familiar. Then with that foundation laid, you are in a better position to climb up to !!\Bbb R\setminus\Bbb Q!! (similar to !!\Bbb Q!!, but uncountable) and then to the Cantor set itself.

Here I am talking about the Cantor set.

Deriving that a cube has six sides via a square and combinatorics

This is probably my favorite question of the month, because it seems quite half-baked, but there is an excellent answer available. As often happens with half-baked questions, the people who don't know the answer jump to the conclusion that no answer is possible, and say dumb stuff like:

What is the definition of a "cube" in your problem?

This is going the wrong direction. The point is to find the ‘right’ definition of the cube; if OP could define “cube” in the way they wanted, they wouldn't need to ask the question.

A better way to answer this question is to understand that what OP is looking for is actually a suitable definition of “cube”. A more mathematically sophisticated person might have asked:

How can we understand the cube as a combinatorial object, developed from the square?

The word “cube” in this question does not mean some specific mathematical object, but rather the informal intuitive cube. A correct answer will explain how to approach the informal idea of the cube in a mathematical way.

There is a nice (tier 1!) answer in this case: A segment is composed of an interior !!i!! and two endpoints, so we can represent it as !!S=i+2!!. Then !!S^3!! is a cube and its analogous combinatorial description is !!(i+2)^3 =i^3+6i^2+12i+8!!. Ta daa! The answer has a more detailed explanation.
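
The expansion can be checked mechanically. The following Python sketch multiplies out !!(i+2)^n!! with coefficient lists; the function names `poly_mul` and `segment_power` are invented for this illustration. The coefficient of !!i^k!! is read as the number of !!k!!-dimensional pieces.

```python
def poly_mul(p, q):
    """Multiply two polynomials given as coefficient lists, lowest degree first."""
    out = [0] * (len(p) + len(q) - 1)
    for a, pa in enumerate(p):
        for b, qb in enumerate(q):
            out[a + b] += pa * qb
    return out

SEGMENT = [2, 1]  # S = 2 + i: two endpoints and one interior

def segment_power(n):
    """Coefficients of (i + 2)**n, lowest degree first."""
    result = [1]
    for _ in range(n):
        result = poly_mul(result, SEGMENT)
    return result

square = segment_power(2)
cube = segment_power(3)
print(square)  # [4, 4, 1]     -> 4 corners, 4 sides, 1 interior
print(cube)    # [8, 12, 6, 1] -> 8 corners, 12 edges, 6 faces, 1 interior
```

The 6 in the !!i^2!! position is the six faces the question was after.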

There were a couple of followup comments that annoyed me, objecting that what I had presented was not a proof. That was a feature, not a bug. The question hadn't asked for a proof, and I had not tried to provide one.

One of the comments went further, and called it “a nice coincidence”. It's not, it's just generating functions.

I think the “coincidence” person has a profound misunderstanding of how mathematics operates. I wrote several hundred words explaining why but then realized that I had finally been able to articulate an idea I've been groping around to get hold of for decades. This is too precious to me to stick in at the tail end of an anthology article; it deserves its own article. So I am saving the next five paragraphs for next week. Or next year. Whenever I can do it justice.

Algebraic descriptions of the cube.

Thanks for reading.

[ Addendum 20230221: The original question also wanted to identify the faces of a cube with pairs of something there were four of, maybe the sides or the corners of a square. I did find a way to identify faces of a cube with pairs of something interesting. ]

[Other articles in category /math/se] permanent link

Mon, 11 Apr 2022

At last, an internet commenter I can agree with

Browsing around Math StackExchange today, I encountered this question, ‘Unique’ doesn't have a unique meaning, which pointed out that the phrase “Every boy has a unique shirt” is at least confusing. (Do all the boys share a single shirt?)

“Aha,” I said. “I know what's wrong there: it should be ‘every boy has a distinct shirt’.” I scrolled down to see if I should write that as an answer. But I noticed that the question had been posted in 2012, and guessed that probably someone had already said what I was going to say. Indeed, when I looked at the comments, I saw that the third one said:

If I meant that no shirt belongs to two boys, I would say "every boy has a distinct shirt".

Okay, that saves me the trouble of replying at least. I went to click the upvote button on the comment, but there was no button,

because

the comment had been posted, in August of 2012, by me.

[Other articles in category /math/se] permanent link

Wed, 09 Mar 2022

Bad but interesting mathematical notation idea

Zaz Brown showed up on Math SE yesterday with a proposal to make mathematical notation more uniform. It's been pointed out several times that the expressions

$$y^n = x \qquad n = \log_y x \qquad y=\sqrt[n]x $$

all mean the same thing, and yet look completely different. This has led to proposals to try to unify the three notations, although none has gone anywhere. (For example, this Math SE thread.)

!!\def\o{\overline}\def\u{\underline}!!

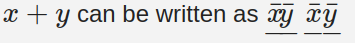

In this new thread, M. Brown has an interesting observation: exponentiation also unifies addition and multiplication. So write !!\o x!! to mean !!e^x!!, and !!\u x!! to mean !!\ln x!!, and leave multiplication as it is. Now !!x^y!! can be written as !!\o{\u x y}!! and !!x+y!! can be written as !!\u{\bar x \! \bar y}!!.
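
As a numerical sanity check on those two translations, here is a minimal Python sketch. The function names `over`, `under`, `power`, and `add` are invented for this illustration. It works in floating point, so results are compared approximately, and `power` only makes sense for positive !!x!! because it takes a logarithm.

```python
import math

def over(x):
    """The overbar: e**x."""
    return math.exp(x)

def under(x):
    """The underbar: natural logarithm of x (x must be positive)."""
    return math.log(x)

def power(x, y):
    """x**y written as over(under(x) * y); requires x > 0."""
    return over(under(x) * y)

def add(x, y):
    """x + y written as under(over(x) * over(y))."""
    return under(over(x) * over(y))

print(math.isclose(power(2.0, 10.0), 1024.0))  # True
print(math.isclose(add(2.0, 3.0), 5.0))        # True
```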

Well, this is a terrible idea, and I'll explain why I think so in some detail. But I really hope nobody will think I mean this as any sort of criticism of its author. I have a lot of ideas too, and most of them are amazingly bad, way worse than this one. Having bad ideas doesn't make someone a bad person. And just because an idea is bad, doesn't mean it wasn't worth considering; thinking about ideas is how you decide which ones are bad and which aren't. M. Brown's idea was interesting enough for me to think about it and write an article. That's a compliment, not a criticism.

I'm deeply interested in notation. I think mathematicians don't yet understand the power of mathematical notation and what it does. We use it, but we don't understand it. I've observed before that you can solve algebraic equations or calculus problems just by “pushing around the symbols”. But why can you do that? Where is the meaning, and how do the symbols capture the meaning? How does that work? The fact that symbols in general can somehow convey meaning is a deep philosophical mystery, not just in mathematics but in all communication, and nobody understands how it works. Mathematical symbols can be even more amazing: they don't just tell you what other people were thinking, they tell you things themselves. You rearrange them in a certain way and they smile and whisper secrets: “now you can see this function is everywhere zero”, “this is evidently unbounded” or “the result is undefined when !!\lvert x_1\rvert > \frac 23!!”. It's almost as if the symbols are doing some of the thinking for you.

Anyway this particular idea is not good, but maybe we can learn something from its failure modes?

Here's how you would write !!x^2+x!!: $$\u{\o{\o{2\u x}}{\o x}}$$

Zaz Brown suggested that this expression might be better written as !!x{\u{\o x \o 1}}!!, which is analogous to !!x(x+1)!!, but I think that reply misses a very important point: you need to be able to write both expressions so that you can equate them, or transform one into the other. The expression !!x(x+1)!! is useful because you can see at a glance that it is composite for all integer !!x!! larger than 1, and actually twice a composite for sufficiently large !!x!!. (This is the kind of thing I had in mind when I said the symbols whisper secrets to you.) !!x^2+x!! is useful in different ways: you can see that it's !!\Theta(x^2)!! and it's !!(x+1)^2 - (x+1)!! and so on. Both are useful and you need to be able to turn one into the other easily. Good notation facilitates that sort of conversion.
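
The claims in that paragraph are easy to spot-check over a finite range. This Python sketch is only a check for small !!x!!, not a proof; the bound of 200 and the helper name `is_composite` are arbitrary choices for the illustration.

```python
import math

def is_composite(n):
    """True if n has a divisor d with 1 < d < n."""
    return n >= 4 and any(n % d == 0 for d in range(2, math.isqrt(n) + 1))

xs = range(2, 200)

# The three ways of writing the same quantity agree.
forms_agree = all(x**2 + x == x * (x + 1) == (x + 1)**2 - (x + 1) for x in xs)

# x(x+1) is composite for every integer x larger than 1.
always_composite = all(is_composite(x * (x + 1)) for x in xs)

# x(x+1) is twice a composite once x is large enough; find where that starts.
half_is_composite = [is_composite(x * (x + 1) // 2) for x in xs]
first_x = next(x for x, ok in zip(xs, half_is_composite) if ok)

print(forms_agree, always_composite)  # True True
print(first_x)                        # 3
```

The one exception for “twice a composite” in this range is !!x=2!!, where !!x(x+1) = 6 = 2\cdot 3!!.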

M. Brown's proposal actually has at least two components. One component is its choice of multiplication, exponentials and logarithms as the only first-class citizens. The other is the specific way that was chosen to write these, with the over- and underbars. This second component is no good at all, for purely typographic reasons. These three expressions look almost identical but have completely different meanings: $$ \u{\o a\, \o c}\qquad \u{\o { ac}} \qquad \o{\u a\, \u c}.$$

In fact, the two on the right were almost indistinguishable until I told MathJax to put in some extra space. I'm sure you can imagine similar problems with !!\u{\o{\o{2\u x}}{\o x}}!! turning into !!\u{\o{\o{2\u x x}}}!! or !!\u{\o{\o{2\u x }x}}!! or whatever. Think of how easy it is to drop a minus sign; this is much worse.

[ Addendum 20220308: Earlier, I had said that !!x+y!! could be written as !!\u{\bar x\bar y}!!. A Gentle Reader pointed out that the bar on the bottom wasn't connected but should have been, as on the far right of this screenshot:

I meant it to be connected and what I wrote asked for it to be connected, but MathJax, which formats the math formulas on the blog, didn't connect it. To remove the gap, I had to explicitly subtract space between the !!x!! and the !!y!!. ]

But maybe the other component of the proposal has something to it and we will find out what it is if we fix the typographic problem with the bars. What's a good alternative?

Maybe !!\o x = x^\bullet!! and !!\u x = x_\bullet!!? On the one hand we get the nice property that !!x^\bullet_\bullet = x!!. But I think the dots would make my head swim. Perhaps !!\o x = x\top!! and !!\u x = x\bot!!? Let's try.

Good notation facilitates transformation of expressions into equal expressions. The !!\top\bot!! notation allows us to easily express the simple identities $$a\top\bot \quad = \quad a\bot\top \quad = \quad a.$$ That kind of thing is good, although the dots did it better. But I couldn't find anything else like it.

Let's see what the distributive law looks like. In standard notation it is $$a(b+c) = ab + ac.$$ In the original bar notation it was $$a\u{\o b\o c} = \u{\o{ab}\, \o{ac}}.$$ This looks uncouth but perhaps would not be worse once one got used to it.

With the !!\top\bot!! idea we have

$$ a(b\top c\top)\bot = ((ab)\top(ac)\top)\bot. $$

I had been hoping that by making the !!\top!! and !!\bot!! symbols postfix we'd be able to avoid parentheses. That didn't happen: without the parentheses you can't distinguish between !!(ab)\top!! and !!a(b\top)!!. Postfix notation is famous for allowing you to omit parentheses, but that's only if your operators all have fixed arity. Here the invisible variadic multiplication ruins that. And making it visible dyadic multiplication is not really an improvement:

$$ ab\top c\top\cdot\bot\cdot = ab\cdot\top ac\cdot \top\cdot \bot. $$
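
Once every operator has fixed arity, a stack machine can evaluate the postfix form with no parentheses at all. Here is a minimal Python sketch. The token names `T`, `B`, and `*` (standing for !!\top!!, !!\bot!!, and dyadic multiplication) are invented for this illustration, and the left-hand token sequence is one reading of !!a(b+c)!! as “push !!a!!, form !!b+c!!, then multiply”.

```python
import math

def eval_postfix(tokens, env):
    """Evaluate a postfix expression whose operators all have fixed arity.

    'T' is unary exp, 'B' is unary log, '*' is binary multiplication;
    any other token is a variable looked up in env.
    """
    stack = []
    for tok in tokens:
        if tok == "T":
            stack.append(math.exp(stack.pop()))
        elif tok == "B":
            stack.append(math.log(stack.pop()))
        elif tok == "*":
            right = stack.pop()
            left = stack.pop()
            stack.append(left * right)
        else:
            stack.append(env[tok])
    if len(stack) != 1:
        raise ValueError("malformed postfix expression")
    return stack[0]

env = {"a": 2.0, "b": 3.0, "c": 4.0}
lhs = eval_postfix("a b T c T * B *".split(), env)      # a * log(exp(b) * exp(c))
rhs = eval_postfix("a b * T a c * T * B".split(), env)  # log(exp(a*b) * exp(a*c))
print(math.isclose(lhs, 14.0), math.isclose(rhs, 14.0))  # True True
```

The evaluator needs no precedence rules, but the token strings are no easier on the eye than the parenthesized versions.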

You know what I think would happen if we actually tried to use this idea? Someone would very quickly invent an abbreviation for !!\u{\o {x_1}\, \o {x_2} \cdots \o{x_k}}!!, I don't know, something like “!!x_1 + x_2 + \ldots + x_k!!” maybe. (It looks crazy, I know, but it might just work.) Because people might like to discuss the fact that $$ \u{\o 2\, \o 3 } = 5$$ and without an addition sign there seems to be no way to explain why this should be.

Well, I have been turning away from the real issue for a while now, but !!a(b\top c\top)\bot = !! !!((ab)\top(ac)\top)\bot!! forces me to confront it. The standard expression of the distributive law equates a computation with two operations and another with three. The computations expressed by the new notation involve five and six operations respectively. Put this way, the distributive law is no longer simple!
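
The operation counts can be tallied mechanically. In this Python sketch, expressions are nested tuples, and `to_exp_log` rewrites each addition as a logarithm of a product of exponentials. The representation and the function names are assumptions made for the illustration.

```python
def count_ops(expr):
    """Count operator applications in a nested-tuple expression tree.

    Leaves are variable names (strings); internal nodes are (op, arg, ...).
    """
    if isinstance(expr, str):
        return 0
    return 1 + sum(count_ops(arg) for arg in expr[1:])

def to_exp_log(expr):
    """Rewrite every ('+', x, y) as ('log', ('*', ('exp', x), ('exp', y)))."""
    if isinstance(expr, str):
        return expr
    op, *args = expr
    args = [to_exp_log(arg) for arg in args]
    if op == "+":
        x, y = args
        return ("log", ("*", ("exp", x), ("exp", y)))
    return (op, *args)

lhs = ("*", "a", ("+", "b", "c"))              # a(b + c)
rhs = ("+", ("*", "a", "b"), ("*", "a", "c"))  # ab + ac

standard = (count_ops(lhs), count_ops(rhs))
rewritten = (count_ops(to_exp_log(lhs)), count_ops(to_exp_log(rhs)))
print(standard)   # (2, 3)
print(rewritten)  # (5, 6)
```

Each addition costs three extra operations after the rewrite, which is where the jump from two and three to five and six comes from.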

This reminds me of the earlier suggestion that if !!x^2+x!! is too complicated, one can write !!x(x+1)!! instead. But expressions don't only express a result, they express a way of arriving at that result. The purpose of an equation is to state that two different computations arrive at the same result. Yes, it's true that $$a+b = \ln e^ae^b,$$ but the two computations are not the same! If they were, the statement would be vacuous. Instead, it says that the simple computation on the left arrives at the same result as the complicated one on the right, an interesting thing to know. “!!2+3=5!!” might imply that !!e^2\cdot e^3=e^5!! but it doesn't say the same thing.

Here's my takeaway from consideration of the Zaz Brown proposal: