Mark Dominus (陶敏修)

mjd@pobox.com


Sun, 30 Jul 2023

The shell and its crappy handling of whitespace

I'm about thirty-five years into Unix shell programming now, and I continue to despise it. The shell's treatment of whitespace is a constant problem. The fact that

for i in *.jpg; do

cp $i /tmp

done

doesn't work is a constant pain. The problem here is that if one of the filenames is bite me.jpg, then the cp command will turn into

cp bite me.jpg /tmp

and fail, saying

cp: cannot stat 'bite': No such file or directory

cp: cannot stat 'me.jpg': No such file or directory

or, worse, if there is a file named bite, it is copied even though you did not want to copy it, maybe overwriting a /tmp/bite that you wanted to keep.

To make it work properly you have to say

for i in *.jpg; do

cp "$i" /tmp

done

with the quotes around the $i.
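To see both failure modes concretely, here is a small Python harness (my own illustration, not part of the original discussion) that builds a scratch directory containing bite me.jpg and an innocent file named bite, runs each version of the loop under /bin/sh, and reports what ended up in the destination. It assumes a POSIX sh and cp on the PATH; the function name run_copy_loop is hypothetical.

```python
import os
import subprocess
import tempfile


def run_copy_loop(quoted: bool) -> tuple[list[str], int]:
    """Run the cp loop from the text in a scratch directory.

    Returns (sorted names found in the destination dir, exit status of sh).
    """
    with tempfile.TemporaryDirectory() as src, tempfile.TemporaryDirectory() as dst:
        # One awkward filename, plus a file called "bite" that the
        # unquoted loop will copy by accident.
        for name in ("bite me.jpg", "bite"):
            with open(os.path.join(src, name), "w") as fh:
                fh.write("x")
        var = '"$i"' if quoted else "$i"
        # $1 inside the script is the destination directory.
        script = f'for i in *.jpg; do cp {var} "$1"; done'
        proc = subprocess.run(["sh", "-c", script, "sh", dst],
                              cwd=src, capture_output=True, text=True)
        return sorted(os.listdir(dst)), proc.returncode
```

With the unquoted $i, the glob still yields the single word bite me.jpg, but the expansion splits it, so cp copies the wrong file and then complains about me.jpg: run_copy_loop(quoted=False) gives (['bite'], 1), while run_copy_loop(quoted=True) gives (['bite me.jpg'], 0).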

Now suppose I have a command that strips off the suffix from a filename. For example,

suf foo.html

simply prints foo to standard output. Suppose I want to change the names of all the .jpeg files to the corresponding names with .jpg instead. I can do it like this:

for i in *.jpeg; do

mv $i $(suf $i).jpg

done

Ha ha, no, some of the files might have spaces in their names. I have to write:

for i in *.jpeg; do

mv "$i" $(suf "$i").jpg # two sets of quotes

done

Ha ha, no, fooled you, the output of suf will also have spaces. I have to write:

for i in *.jpeg; do

mv "$i" "$(suf "$i")".jpg # three sets of quotes

done

At this point it's almost worth breaking out a real language and using something like this:

ls *.jpeg | perl -nle '($z = $_) =~ s/\.jpeg$/.jpg/; rename $_ => $z'
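For comparison, the same rename in Python, as a sketch under my own assumptions: the function name jpeg_to_jpg is hypothetical, and unlike the Perl one-liner it skips any file whose .jpg counterpart already exists rather than overwriting it. Filenames never pass through a shell, so spaces need no special care.

```python
from pathlib import Path


def jpeg_to_jpg(directory: str = ".") -> list[tuple[str, str]]:
    """Rename every *.jpeg in directory to *.jpg; return the (old, new) pairs."""
    renamed = []
    for old in sorted(Path(directory).glob("*.jpeg")):
        new = old.with_suffix(".jpg")  # "bite me.jpeg" -> "bite me.jpg"
        if new.exists():
            continue  # refuse to clobber an existing file
        old.rename(new)
        renamed.append((old.name, new.name))
    return renamed
```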

I think what bugs me most about this problem in the shell is that it's so uncharacteristic of the Bell Labs people to have made such an unforced error. They got so many things right, why not this?

It's not even a hard choice! 99% of the time you don't want your strings implicitly split on spaces, why would you?

For example, they got the behavior of for i in *.jpeg right; if one of those files is bite me.jpeg, the loop still runs only once for that file.

And the shell doesn't have this behavior for any other sort of special character.

If you have a file named foo|bar and a variable z='foo|bar', then ls $z doesn't try to pipe the output of ls foo into the bar command; it just tries to list the file foo|bar like you wanted. But if z='foo bar', then ls $z wants to list files foo and bar.
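The asymmetry is easy to measure. This sketch (my own; word_count and the shell function nargs are hypothetical names) sets $z inside /bin/sh and reports how many arguments an expansion of it produces:

```python
import subprocess


def word_count(expansion: str, value: str) -> int:
    """Return how many arguments `nargs <expansion>` receives in sh,
    with the shell variable z set to value."""
    # The assignment z=$1 is not itself subject to field splitting.
    script = f'z=$1; nargs() {{ echo "$#"; }}; nargs {expansion}'
    out = subprocess.run(["sh", "-c", script, "sh", value],
                         capture_output=True, text=True, check=True)
    return int(out.stdout)
```

The | survives expansion as an ordinary character, so word_count("$z", "foo|bar") is 1, but the space triggers field splitting, so word_count("$z", "foo bar") is 2; quoting, as in word_count('"$z"', "foo bar"), brings it back to 1.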

How did the Bell Labs wizards get everything right except the spaces?

Even if it was a simple or reasonable choice to make in the beginning, at some point around 1979 Steve Bourne had a clear opportunity to realize he had made a mistake. He introduced $* and must shortly thereafter have discovered that it wasn't useful. This should have gotten him thinking.

$* is literally useless. It is the variable that is supposed to contain the arguments to the current shell. So you can write a shell script:

#!/bin/sh

# “yell”

echo "I am about to run '$*' now!!1!"

exec $*

and then run it:

$ yell date

I am about to run 'date' now!!1!

Wed Apr 2 15:10:54 EST 1980

except that doesn't work because $* is useless:

$ ls *.jpg

bite me.jpg

$ yell ls *.jpg

I am about to run 'ls bite me.jpg' now!!1!

ls: cannot access 'bite': No such file or directory

ls: cannot access 'me.jpg': No such file or directory

Oh, I see what went wrong: it thinks it got three arguments instead of two, because the elements of $* got auto-split. I needed to use quotes around $*. Let's fix it:

#!/bin/sh

echo "I am about to run '$*' now!!1!"

exec "$*"

$ yell ls *.jpg

yell: 3: exec: ls /tmp/bite me.jpg: not found

No, the quotes disabled all the splitting so that now I got one argument that happens to contain two spaces.

This cannot be made to work. You have to fix the shell itself.

Having realized that $* is useless, Bourne added a workaround to the shell, a unique special case with special handling. He added a $@ variable which is identical to $* in all ways but one: when it is in double quotes. Whereas $* expands to

$1 $2 $3 $4 …

and "$*" expands to

"$1 $2 $3 $4 …"

"$@" expands to

"$1" "$2" "$3" "$4" …

so that inside of yell ls *.jpg, an exec "$@" will turn into exec "ls" "bite me.jpg" and do what you wanted exec $* to do in the first place.
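The three expansions can be compared directly. In this sketch (my own illustration; args_seen and the shell function show are hypothetical names), show prints each argument it actually receives on its own line, and the positional parameters are set to ls and bite me.jpg, as in the yell example:

```python
import subprocess


def args_seen(expansion: str, *args: str) -> list[str]:
    """Run `show <expansion>` in sh with positional parameters args and
    return the list of arguments that show actually received."""
    script = ('show() { for a in "$@"; do printf "%s\\n" "$a"; done; }; '
              'show ' + expansion)
    out = subprocess.run(["sh", "-c", script, "sh", *args],
                         capture_output=True, text=True, check=True)
    return out.stdout.splitlines()
```

args_seen("$*", "ls", "bite me.jpg") returns ['ls', 'bite', 'me.jpg'], args_seen('"$*"', ...) returns ['ls bite me.jpg'], and only args_seen('"$@"', ...) returns ['ls', 'bite me.jpg'].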

I deeply regret that, at the moment that Steve Bourne coded up this weird special case, he didn't instead stop and think that maybe something deeper was wrong. But he didn't and here we are. Larry Wall once said something about how too many programmers have a problem, think of a simple solution, and implement the solution, and what they really need to be doing is thinking of three solutions and then choosing the best one. I sure wish that had happened here.

Anyway, having to use quotes everywhere is a pain, but usually it works around the whitespace problems, and it is not much worse than a million other things we have to do to make our programs work in this programming language hell of our own making. But sometimes this isn't an adequate solution.

One of my favorite trivial programs is called lastdl. All it does is produce the name of the file most recently written in $HOME/Downloads, something like this:

#!/bin/sh

cd $HOME/Downloads

echo $HOME/Downloads/"$(ls -t | head -1)"

Many programs stick files into that directory, often copied from the web or from my phone, and often with long and difficult names like e15c0366ecececa5770e6b798807c5cc.jpg or 2023_3_20230310_120000_PARTIALPAYMENT_3028707_01226.PDF or gov.uscourts.nysd.590045.212.0.pdf that I do not want to type or even autocomplete. No problem, I just do

rm $(lastdl)

or

okular $(lastdl)

or

mv $(lastdl) /tmp/receipt.pdf

except ha ha, no I don't, because none of those works reliably, they all fail if the difficult filename happens to contain spaces, as it often does. Instead I need to type

rm "$(lastdl)"

okular "$(lastdl)"

mv "$(lastdl)" /tmp/receipt.pdf

which in a command so short and throwaway is a noticeable cost, a cost extorted by the shell in return for nothing. And every time I do it I am angry with Steve Bourne all over again.

There is really no good way out in general. For lastdl there is a decent workaround, but it is somewhat fishy. After my lastdl command finds the filename, it renames it to a version with no spaces and then prints the new filename:

#!/bin/sh

# This is not the real code

# and I did not test it

cd "$HOME/Downloads" || exit 1

fns="$(ls -t | head -1)" # those stupid quotes again

fnd="$(printf '%s' "$fns" | tr ' \t\n' '___')" # two sets of stupid quotes this time

[ "$fns" = "$fnd" ] || mv "$fns" "$fnd" # and again

echo "$HOME/Downloads/$fnd"

The actual script is somewhat more reliable, and is written in Python, because shell programming sucks.
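For illustration only, here is one way such a Python lastdl might look. This is my own sketch, not the author's script: it assumes "most recently written" means latest modification time, considers only regular files, replaces every whitespace character with an underscore, and refuses to overwrite an existing file.

```python
import re
from pathlib import Path


def lastdl(directory: Path) -> Path:
    """Return the most recently modified file in directory, first renaming
    it so that its name contains no whitespace."""
    files = [p for p in directory.iterdir() if p.is_file()]
    if not files:
        raise FileNotFoundError(f"no files in {directory}")
    newest = max(files, key=lambda p: p.stat().st_mtime)
    clean = newest.with_name(re.sub(r"\s", "_", newest.name))
    if clean != newest:
        if clean.exists():
            raise FileExistsError(str(clean))  # don't clobber anything
        newest.rename(clean)  # rename keeps the modification time
    return clean


if __name__ == "__main__":
    print(lastdl(Path.home() / "Downloads"))
```

Because the printed name has no whitespace, rm $(lastdl) and friends work without the extra quotes.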

[ Addendum 20230731: Drew DeVault has written a reply article about how the rc shell does not have these problems. rc was designed in the late 1980s by Tom Duff of Bell Labs, and I was a satisfied user (of the Byron Rakitzis clone) for many years. Definitely give it a look. ]

[ Addendum 20230806: Chris Siebenmann also discusses rc. ]

[Other articles in category /Unix] permanent link

Mon, 14 Mar 2022

Yesterday I discussed /dev/full and asked why there wasn't a generalization of it, and laid out some very 1990s suggestions that I have had in the back of my mind since the 1990s. I ended by acknowledging that there was probably a more modern solution in user space:

Eh, probably the right solution these days is to LD_PRELOAD a complete mock filesystem library that has any hooks you want in it.

Carl Witty suggested that there is a more modern solution in userspace, FUSE, and Leah Neukirchen filled in the details:

UnreliableFS is a FUSE-based fault injection filesystem that allows to change fault-injections in runtime using simple configuration file.

Also, Dave Vasilevsky suggested that something like this could be done with the device mapper.

I think the real takeaway from this is that I had not accepted the hard truth that all Unix is Linux now, and non-Linux Unix is dead.

Thanks everyone who sent suggestions.

[ Addendum: Leah Neukirchen informs me that FUSE also runs on FreeBSD, OpenBSD and macOS, and reminds me that there are a great many macOS systems. I should face the hard truth that my knowledge of Unix systems capabilities is at least fifteen years out of date. ]


Sun, 13 Mar 2022

Suppose you're writing some program that does file I/O. You'd like to include a unit test to make sure it properly handles the error when the disk fills up and the write can't complete. This is tough to simulate. The test itself obviously can't (or at least shouldn't) actually fill the disk.

A while back some Unix systems introduced a device called /dev/full. Reading from /dev/full returns an endless supply of zero (NUL) bytes, just like /dev/zero. But all attempts to write to /dev/full fail with ENOSPC, the system error that indicates a full disk. You can set up your tests to try to write to /dev/full and make sure they fail gracefully.
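A minimal sketch of such a test in Python, assuming a system (such as Linux) that provides /dev/full. The function save is a hypothetical stand-in for the code under test; its contract, assumed here, is to return False on a full disk and re-raise any other error.

```python
import errno


def save(path: str, data: bytes) -> bool:
    """Hypothetical code under test: write data to path, returning False
    (instead of raising) if the device reports that it is full."""
    try:
        with open(path, "wb") as fh:
            fh.write(data)  # buffered; the error may surface at close
    except OSError as e:
        if e.errno == errno.ENOSPC:
            return False
        raise
    return True


def test_save_reports_full_disk():
    # Every write to /dev/full fails with ENOSPC.
    assert save("/dev/full", b"some data") is False


def test_dev_full_reads_zeros():
    with open("/dev/full", "rb") as fh:
        assert fh.read(4) == b"\0\0\0\0"
```

Note that with buffered I/O the ENOSPC may not appear until the buffer is flushed at close, which is exactly the kind of path such a test is meant to exercise.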

That's fun, but why not generalize it? Suppose there was a /dev/error device:

#include <sys/errdev.h>

error = open("/dev/error", O_RDWR);

ioctl(error, ERRDEV_SET, 23);

The device driver would remember the number 23 from this ioctl call, and the next time the process tried to read or write the error descriptor, the request would fail and set errno to 23, whatever that is. Of course you wouldn't hardwire the 23; you'd actually do

#include <sys/errno.h>

ioctl(error, ERRDEV_SET, EBUSY);

and then the next I/O attempt would fail with EBUSY.

Well, that's the way I always imagined it, but now that I think about it a little more, you don't need this to be a device driver. It would be better if instead of an ioctl it was an fcntl that you could do on any file descriptor at all.
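No such fcntl exists, but the behavior is easy to mimic in user space for code that accepts a file object. This is a hypothetical sketch: ErrorInjectingFile and set_error stand in for the imagined ERRDEV_SET call, and I have assumed the injected error fires once, on the next read or write, after which the stream behaves normally.

```python
import io
import os


class ErrorInjectingFile(io.RawIOBase):
    """Wrap a raw binary stream. After set_error(code), the next read or
    write raises OSError(code) once; later calls pass through normally."""

    def __init__(self, inner):
        super().__init__()
        self._inner = inner
        self._pending = None

    def set_error(self, code: int) -> None:
        self._pending = code  # analogous to the imagined ERRDEV_SET

    def _maybe_fail(self) -> None:
        if self._pending is not None:
            code, self._pending = self._pending, None
            raise OSError(code, os.strerror(code))

    def readable(self) -> bool:
        return True

    def writable(self) -> bool:
        return True

    def readinto(self, b):
        self._maybe_fail()
        return self._inner.readinto(b)

    def write(self, b):
        self._maybe_fail()
        return self._inner.write(b)
```

For example, after f = ErrorInjectingFile(io.BytesIO(b"hello")) and f.set_error(errno.EBUSY), the first f.read(2) raises OSError with errno EBUSY and the next one returns b"he".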

Big drawback: the most common I/O errors are probably EACCES and ENOENT, failures in the open, not in the actual I/O. This idea doesn't address that at all. But maybe some variation would work there. Maybe for those we go back to the original idea, have a /dev/openerror, and after you do ioctl(dev_openerror, ERRDEV_SET, EACCES), the next call to open fails with EACCES. That might be useful.

There are some security concerns with the fcntl version of the idea. Suppose I write a malicious program that opens some file descriptor, dups it to standard input, does fcntl(0, ERRDEV_SET, ESOMEWEIRDERROR), then execs the target program t. Hapless t tries to read standard input, gets ESOMEWEIRDERROR, and then does something unexpected that it wasn't supposed to do. This particular attack is easily foiled: exec should reset all the file descriptor saved-error states. But there might be something more subtle that I haven't thought of, and in OS security there usually is.

Eh, probably the right solution these days is to LD_PRELOAD a complete mock filesystem library that has any hooks you want in it. I don't know what the security implications of LD_PRELOAD are, but I have to believe that someone figured them all out by now.

[ Addendum 20220314: Better solutions exist. ]


Sun, 06 Feb 2022

A one-character omission caused my Python program to hang (not)

I just ran into a weird and annoying program behavior. I was writing a Python program, and when I ran it, it seemed to hang. Worried that it was stuck in some sort of resource-intensive loop, I interrupted it, and then I got what looked like an error message from the interpreter. I tried this several more times, with the same result; I tried putting exit(0) near the top of the program to figure out where the slowdown was, but the behavior didn't change.

The real problem was that the first line said:

#/usr/bin/env python3

when it should have been:

#!/usr/bin/env python3

Without that magic #! at the beginning, the file is processed not by Python but by the shell, and the first thing the shell saw was

import re

which tells it to run the import command.
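The mechanism: the kernel's execve only recognizes a script as such when its first two bytes are #!. Otherwise execve fails with ENOEXEC, and POSIX shells respond by running the file as a shell script. A tiny checker along these lines (my own sketch; interpreter_line is a hypothetical helper) would have flagged the typo:

```python
from typing import Optional


def interpreter_line(path: str) -> Optional[str]:
    """Return the interpreter named on a script's #! line, or None if the
    file does not start with the two magic bytes '#!'."""
    with open(path, "rb") as fh:
        first = fh.readline()
    if first.startswith(b"#!"):
        return first[2:].strip().decode()
    return None
```

A file beginning #!/usr/bin/env python3 yields "/usr/bin/env python3"; one beginning #/usr/bin/env python3 yields None.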

I didn't even know there was an import command. It runs an X client that waits for the user to click on a window, and then writes a dump of the window contents to a file. That's why my program seemed to hang; it was waiting for the click.

I might have picked up on this sooner if I had actually looked at the error messages:

./license-plate-game.py: line 9: dictionary: command not found

./license-plate-game.py: line 10: syntax error near unexpected token `('

./license-plate-game.py: line 10: `words = read_dictionary(dictionary)'

In particular, dictionary: command not found is the shell giving itself away. But I was so worried about the supposedly resource-bound program crashing my session that I didn't look at the actual output, and assumed the messages were Python syntax errors.

I don't remember making this mistake before but it seems like it would be an easy mistake to make. It might serve as a good example when explaining to nontechnical people how finicky and exacting programming can be. I think it wouldn't be hard to understand what happened.

This computer stuff is amazingly complicated. I don't know how anyone gets anything done.


Fri, 03 Jan 2020

Benchmarking shell pipelines and the Unix “tools” philosophy

Sometimes I look through the HTTP referrer logs to see if anyone is talking about my blog. I use the f 11 command to extract the referrer field from the log files, count up the number of occurrences of each referring URL, then discard the ones that are internal referrers from elsewhere on my blog. It looks like this:

f 11 access.2020-01-0* | count | grep -v plover

(I've discussed f before. The f 11 just prints the eleventh field of each line. It is essentially shorthand for awk '{print $11}' or perl -lane 'print $F[10]'. The count utility is even simpler; it counts the number of occurrences of each distinct line in its input, and emits a report sorted from least to most frequent, essentially a trivial wrapper around sort | uniq -c | sort -n. Civilization advances by extending the number of important operations which we can perform without thinking about them.)
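For readers without these utilities, here is a rough Python equivalent of f and count as described. These are my own hypothetical reimplementations, not the author's code; like awk, f yields an empty string for lines with too few fields.

```python
import sys
from collections import Counter
from typing import Iterable, Iterator


def f(n: int, lines: Iterable[str]) -> Iterator[str]:
    """Yield the nth whitespace-separated field (1-based) of each line,
    like awk '{print $n}'."""
    for line in lines:
        fields = line.split()
        yield fields[n - 1] if len(fields) >= n else ""


def count(lines: Iterable[str]) -> list[tuple[int, str]]:
    """Like sort | uniq -c | sort -n: (count, line) pairs, least frequent first."""
    tally = Counter(line.rstrip("\n") for line in lines)
    return sorted((c, line) for line, c in tally.items())


if __name__ == "__main__":
    # Roughly `f 11 | count`, reading a log from standard input.
    for c, line in count(f(11, sys.stdin)):
        print(f"{c:7d} {line}")
```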

This has obvious defects, but it works well enough. But every time I used it, I wondered: is it faster to do the grep before the count, or after? I didn't ever notice a difference. But I still wanted to know.

After years of idly wondering this, I have finally looked into it. The point of this article is that the investigation produced the following pipeline, which I think is a great example of the Unix “tools” philosophy:

for i in $(seq 20); do

TIME="%U+%S" time \

sh -c 'f 11 access.2020-01-0* | grep -v plover | count > /dev/null' \

2>&1 | bc -l ;

done | addup

I typed this on the command line, with no backslashes or newlines, so it actually looked like this:

for i in $(seq 20); do TIME="%U+%S" time sh -c 'f 11 access.2020-01-0* | grep -v plover |count > /dev/null' 2>&1 | bc -l ; done | addup

Okay, what's going on here? The pipeline I actually want to analyze, with f | grep | count, is there in the middle, and I've already explained it, so let's elide it:

for i in $(seq 20); do

TIME="%U+%S" time \

sh -c '¿SOMETHING? > /dev/null' 2>&1 | bc -l ;

done | addup

Continuing to work from inside to out, we're going to use time to actually do the timings. The time command is standard. It runs a program, asks the kernel how long the program took, then prints a report.

The time command will only time a single process (plus its subprocesses, a crucial fact that is inexplicably omitted from the man page). The ¿SOMETHING? includes a pipeline, which must be set up by the shell, so we're actually timing a shell command sh -c '...' which tells time to run the shell and instruct it to run the pipeline we're interested in. We tell the shell to throw away the output of the pipeline, with > /dev/null, so that the output doesn't get mixed up with time's own report.

The default format for the report printed by time is intended for human consumption. We can supply an alternative format in the $TIME variable. The format I'm using here is %U+%S, which comes out as something like 0.25+0.37, where 0.25 is the user CPU time and 0.37 is the system CPU time. I didn't see a format specifier that would emit the sum of these directly. So instead I had it emit them with a + in between, and then piped the result through the bc command, which performs the requested arithmetic and emits the result. We need the -l flag on bc because otherwise it stupidly does integer arithmetic. The time command emits its report to standard error, so I use 2>&1 to redirect the standard error into the pipe.

[ Addendum 20200108: We don't actually need -l here; I was mistaken. ]
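The same measurement can be made without the $TIME and bc detour by asking the kernel for resource usage directly. This is my own sketch (cpu_seconds is a hypothetical name): the RUSAGE_CHILDREN counters cover terminated, waited-for children, including the descendants they waited for, which is why timing sh -c captures the whole pipeline.

```python
import resource
import subprocess


def cpu_seconds(shell_command: str) -> float:
    """Run shell_command under sh -c with its output discarded, and return
    the user + system CPU time consumed by sh and everything it waited for."""
    before = resource.getrusage(resource.RUSAGE_CHILDREN)
    subprocess.run(["sh", "-c", shell_command],
                   stdout=subprocess.DEVNULL, check=True)
    after = resource.getrusage(resource.RUSAGE_CHILDREN)
    return ((after.ru_utime - before.ru_utime)
            + (after.ru_stime - before.ru_stime))
```

For instance, cpu_seconds("f 11 access.2020-01-0* | grep -v plover | count") would report one run's total, assuming f and count are on the PATH.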

Collapsing the details I just discussed, we have:

for i in $(seq 20); do

(run once and emit the total CPU time)

done | addup

seq is a utility I invented no later than 1993 which has since become standard in most Unix systems. (As with netcat, I am not claiming to be the first or only person to have invented this, only to have invented it independently.) There are many variations of seq, but the main use case is that seq 20 prints

1

2

3

…

19

20

Here we don't actually care about the output (we never actually use $i), but it's a convenient way to get the for loop to run twenty times. The output of the for loop is the twenty total CPU times that were emitted by the twenty invocations of bc. (Did you know that you can pipe the output of a loop?) These twenty lines of output are passed into addup, which I wrote no later than 2011. (Why did it take me so long to do this?) It reads a list of numbers and prints the sum.
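addup itself is nearly a one-liner. A hypothetical Python version (not the author's), skipping blank lines:

```python
import sys


def addup(lines) -> float:
    """Sum the numbers in lines, one per line, ignoring blank lines."""
    return sum(float(line) for line in lines if line.strip())


if __name__ == "__main__":
    print(addup(sys.stdin))
```

As a check, seq 20 | addup should print the sum of 1 through 20, and indeed addup(str(i) for i in range(1, 21)) returns 210.0.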

All together, the command runs and prints a single number like 5.17, indicating that the twenty runs of the pipeline took 5.17 CPU-seconds total. I can do this a few times for the original pipeline, with count before grep, get times between 4.77 and 5.78, and then try again with the grep before the count, producing times between 4.32 and 5.14. The difference is large enough to detect but too small to notice.

(To do this right we also need to test a null command, say

sh -c 'sleep 0.1 < /dev/null'

because we might learn that 95% of the reported time is spent in running the shell, so the actual difference between the two pipelines is twenty times as large as we thought. I did this; it turns out that the time spent to run the shell is insignificant.)

What to learn from all this? On the one hand, Unix wins: it's supposed to be quick and easy to assemble small tools to do whatever it is you're trying to do. When time wouldn't do the arithmetic I needed it to, I sent its output to a generic arithmetic-doing utility. When I needed to count to twenty, I had a utility for doing that; if I hadn't, there are any number of easy workarounds. The shell provided the I/O redirection and control flow I needed.

On the other hand, gosh, what a weird mishmash of stuff I had to remember or look up. The -l flag for bc. The fact that I needed bc at all because time won't report total CPU time. The $TIME variable that controls its report format. The bizarro 2>&1 syntax for redirecting standard error into a pipe. The sh -c trick to get time to execute a pipeline. The missing documentation of the core functionality of time.

Was it a win overall? What if Unix had less compositionality but I could use it with less memorized trivia? Would that be an improvement?

I don't know. I rather suspect that there's no way to actually reach that hypothetical universe. The bizarre mishmash of weirdness exists because so many different people invented so many tools over such a long period. And they wouldn't have done any of that inventing if the compositionality hadn't been there. I think we don't actually get to make a choice between an incoherent mess of composable paraphernalia and a coherent, well-designed but noncompositional system. Rather, we get a choice between an incoherent but useful mess and an incomplete, limited noncompositional system.

(Notes to self: (1) In connection with Parse::RecDescent, you once wrote about open versus closed systems. This is another point in that discussion. (2) Open systems tend to evolve into messes. But closed systems tend not to evolve at all, and die. (3) Closed systems are centralized and hierarchical; open systems, when they succeed, are decentralized and organic. (4) If you are looking for another example of a successful incoherent mess of composable paraphernalia, consider Git.)

[ Addendum: Add this to the list of “weird mishmash of trivia”: There are two time commands. One, which I discussed above, is a separate executable, usually in /usr/bin/time. The other is built into the shell. They are incompatible. Which was I actually using? I would have been pretty confused if I had accidentally gotten the built-in one, which ignores $TIME and uses a $TIMEFORMAT that is interpreted in a completely different way. I was fortunate, and got the one I intended to get. But it took me quite a while to understand why I had! The appearance of the TIME=… assignment at the start of the shell command disabled the shell's special builtin treatment of the keyword time, so it really did use /usr/bin/time. This computer stuff is amazingly complicated. I don't know how anyone gets anything done. ]

[ Addenda 20200104: (1) Perl's module ecosystem is another example of a successful incoherent mess of composable paraphernalia. (2) Of the seven trivia I included in my “weird mishmash”, five were related to the time command. Is this a reflection on time, or is it just because time was central to this particular example? ]

[ Addendum 20200104: And, of course, this is exactly what Richard Gabriel was thinking about in Worse is Better. Like Gabriel, I'm not sure. ]


Thu, 08 Nov 2018

How not to reconfigure your sshd

Yesterday I wanted to reconfigure the sshd on a remote machine. Although I'd never done sshd itself, I've done this kind of thing a zillion times before. It looks like this: there is a configuration file (in this case /etc/ssh/sshd_config) that you modify. But this doesn't change the running server; you have to notify the server that it should reread the file. One way would be by killing the server and starting a new one. This would interrupt service, so instead you can send the server a different signal (in this case SIGHUP) that tells it to reload its configuration without exiting. Simple enough.

Except, it didn't work. I added:

Match User mjd

ForceCommand echo "I like pie!"

and signalled the server, then made a new connection to see if it would print I like pie! instead of starting a shell. It started a shell. Okay, I've never used Match or ForceCommand before, maybe I don't understand how they work; I'll try something simpler. I added:

PrintMotd yes

which seemed straightforward enough, and I put some text into /etc/motd, but when I connected it didn't print the motd.

I tried a couple of other things but none of them seemed to work.

Okay, maybe the sshd is not getting the signal, or something? I hunted up the logs, but there was a report like what I expected:

sshd[1210]: Received SIGHUP; restarting.

This was a head-scratcher. Was I modifying the wrong file? It seemed hardly possible, but I don't administer this machine, so who knows? I tried lsof -p 1210 to see if maybe sshd had some other config file open, but it doesn't keep the file open after it reads it, so that was no help.

Eventually I hit upon the answer, and I wish I had some useful piece of advice here for my future self about how to figure this out. But I don't because the answer just struck me all of a sudden.

(It's nice when that happens, but I feel a bit cheated afterward: I solved the problem this time, but I didn't learn anything, so how does it help me for next time? I put in the toil, but I didn't get the full payoff.)

“Aha,” I said. “I bet it's because my connection is multiplexed.”

Normally when you make an ssh connection to a remote machine, it calls up the server, exchanges credentials, each side authenticates the other, and they negotiate an encryption key. Then the server forks, and the child starts up a login shell and mediates between the shell and the network, encrypting in one direction and decrypting in the other. All that negotiation and authentication takes time.

There is a “multiplexing” option you can use instead. The handshaking process still occurs as usual for the first connection. But once the connection succeeds, there's no need to start all over again to make a second connection. You can tell ssh to multiplex several virtual connections over its one real connection. To make a new virtual connection, you run ssh in the same way, but instead of contacting the remote server as before, it contacts the local ssh client that's already running and requests a new virtual connection. The client, already connected to the remote server, tells the server to allocate a new virtual connection and to start up a new shell session for it. The server doesn't even have to fork; it just has to allocate another pseudo-tty and run a shell in it. This is a lot faster.

I had my local ssh client configured to use a virtual connection if that was possible. So my subsequent ssh commands weren't going through the reconfigured parent server. They were all going through the child server that had been forked hours before when I started my first connection. It wasn't affected by reconfiguration of the parent server, from which it was now separate.

I verified this by telling ssh to make a new connection without trying to reuse the existing virtual connection:

ssh -o ControlPath=none -o ControlMaster=no ...

This time I saw the MOTD, and when I reinstated that Match command I got I like pie! instead of a shell.

(It occurs to me now that I could have tried to SIGHUP the child server process that my connections were going through, and that would probably have reconfigured any future virtual connections through that process, but I didn't think of it at the time.)

Then I went home for the day, feeling pretty darn clever, right up until I discovered, partway through writing this article, that I can't log in because all I get is I like pie! instead of a shell.


Mon, 21 May 2018

More about disabling standard I/O buffering

In yesterday's article I described a simple and useful feature that could have been added to the standard I/O library, to allow an environment variable to override the default buffering behavior. This would allow the invoker of a program to request that the program change its buffering behavior even if the program itself didn't provide an option specifically for doing that.

Simon Tatham directed me to the GNU Coreutils stdbuf command, which does something of this sort. It is rather like the pseudo-tty-pipe program I described, but instead of using the pseudo-tty hack I suggested, it works by forcing the child program to dynamically load a custom replacement for stdio.

There appears to be a very similar command in FreeBSD.

[ Addendum 20240820: This description is not accurate; see below. ]

Roderick Schertler pointed out that Dan Bernstein wrote a utility program, pty, in 1990, atop which my pseudo-tty-pipe program could easily be built; or maybe its ptybandage utility is exactly what I wanted. Jonathan de Boyne Pollard has a page explaining it in detail, and related packages.

A later version of pty is still available.

Here's M. Bernstein's blurb about it:

ptyget is a universal pseudo-terminal interface. It is designed to be used by any program that needs a pty.

ptyget can also serve as a wrapper to improve the behavior of existing programs. For example, ptybandage telnet is like telnet but can be put into a pipeline. nobuf grep is like grep but won't block-buffer if it's redirected.

Previous pty-allocating programs — rlogind, telnetd, sshd, xterm, screen, emacs, expect, etc. — have caused dozens of security problems. There are two fundamental reasons for this. First, these programs are installed setuid root so that they can allocate ptys; this turns every little bug in hundreds of thousands of lines of code into a potential security hole. Second, these programs are not careful enough to protect the pty from access by other users.

ptyget solves both of these problems. All the privileged code is in one tiny program. This program guarantees that one user can't touch another user's pty.

ptyget is a complete rewrite of pty 4.0, my previous pty-allocating package. pty 4.0's session management features have been split off into a separate package, sess.
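Bernstein's tools are the real thing. Purely to illustrate the pseudo-tty-pipe trick itself, here is a rough sketch using Python's standard pty module; pseudo_tty_pipe is a hypothetical name, it is Unix-only, and it does none of ptyget's privilege or security handling. Note that the terminal driver translates each newline into a carriage return plus newline on the way through.

```python
import os
import pty
import subprocess
import sys


def pseudo_tty_pipe(argv, out=None) -> int:
    """Run argv with its standard output attached to a pseudo-tty, copying
    whatever appears on the master side to out as soon as it arrives.
    Returns the child's exit status."""
    if out is None:
        out = sys.stdout.buffer
    master, slave = pty.openpty()
    proc = subprocess.Popen(argv, stdout=slave)  # child sees a terminal
    os.close(slave)  # only the child holds the slave end now
    try:
        while True:
            try:
                data = os.read(master, 4096)
            except OSError:  # Linux reports EIO once the slave is closed
                break
            if not data:  # other systems may report EOF instead
                break
            out.write(data)
            out.flush()
    finally:
        os.close(master)
    return proc.wait()
```

Used as pseudo_tty_pipe(["sh", "-c", "[ -t 1 ] && echo tty"]), the child's test for a terminal on standard output succeeds, so a stdio-based X would stay line-buffered.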

Leonardo Taccari informed me that NetBSD's stdio actually has the environment variable feature I was asking for! Christos Zoulas suggested adding stdbuf similar to the GNU and FreeBSD implementations, but the NetBSD people observed, as I did, that it would be simpler to just control stdio directly with an environment variable, and did it. Here's the relevant part of the NetBSD setbuf(3) man page:

The default buffer settings can be overwritten per descriptor (STDBUFn) where n is the numeric value of the file descriptor represented by the stream, or for all descriptors (STDBUF). The environment variable value is a letter followed by an optional numeric value indicating the size of the buffer. Valid sizes range from 0B to 1MB. Valid letters are:

U unbuffered

L line buffered

F fully buffered
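To make the format concrete, here is a parser sketch for these values. It is my reading of the excerpt, not NetBSD's code, and three details are assumptions the excerpt doesn't settle: STDBUFn takes precedence over STDBUF, the size is a plain decimal byte count, and a malformed or out-of-range value is simply ignored.

```python
import re
from typing import Mapping, Optional, Tuple

MODES = {"U": "unbuffered", "L": "line buffered", "F": "fully buffered"}
MAX_SIZE = 1024 * 1024  # "Valid sizes range from 0B to 1MB"


def stdbuf_setting(env: Mapping[str, str],
                   fd: int) -> Optional[Tuple[str, Optional[int]]]:
    """Return (mode, size) requested for file descriptor fd, or None if
    no valid setting applies. size is None when no number was given."""
    for name in (f"STDBUF{fd}", "STDBUF"):  # assumed: per-fd wins
        value = env.get(name)
        if value is None:
            continue
        m = re.fullmatch(r"([ULF])([0-9]*)", value)
        if not m:
            return None  # assumed: malformed value is ignored
        size = int(m.group(2)) if m.group(2) else None
        if size is not None and size > MAX_SIZE:
            return None
        return MODES[m.group(1)], size
    return None
```

For example, with STDBUF=L and STDBUF1=F4096, descriptor 1 gets ("fully buffered", 4096) while descriptor 2 gets ("line buffered", None).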

Here's the discussion from the NetBSD tech-userlevel mailing list.

The actual patch looks almost exactly the way I imagined it would.

Finally, Mariusz Ceier pointed out that there is an ancient bug report in glibc suggesting essentially the same environment variable mechanism that I suggested and that was adopted in NetBSD. The suggestion was firmly and summarily rejected. (“Hell, no … this is a terrible idea.”)

Interesting wrinkle: the bug report was submitted by Pádraig Brady, who subsequently wrote the stdbuf command I described above.

Thank you, Gentle Readers!

Addenda

20240820

nabijaczleweli has pointed out that my explanation of the GNU stdbuf command above is not accurate. I said "it works by forcing the child program to dynamically load a custom replacement for stdio". It's less heavyweight than that. Instead, it arranges to load a dynamic library that runs before the rest of the program starts, examines the environment, and simply calls setvbuf as needed on the three standard streams.


Sun, 20 May 2018

Proposal for turning off standard I/O buffering

Some Unix commands, such as grep, will have a command-line flag to say that you want to turn off the buffering that is normally done in the standard I/O library. Some just try to guess what you probably want. Every command is a little different and if the command you want doesn't have the flag you need, you are basically out of luck.

Maybe I should explain the putative use case here. You have some command (or pipeline) X that will produce dribbles of data at uncertain intervals. If you run it at the terminal, you see each dribble timely, as it appears. But if you put X into a pipeline, say with

X | tee ...

or

X | grep ...

then the dribbles are buffered and only come out of X when an entire block is ready to be written, and the dribbles could be very old before the downstream part of the pipeline, including yourself, sees them. Because this is happening in user space inside of X, there is not a damn thing anyone farther downstream can do about it. The only escape is if X has some mode in which it turns off standard I/O buffering. Since standard I/O buffering is on by default, there is a good chance that the author of X did not think to affirmatively add this feature.

Note that adding the --line-buffered flag to the downstream grep does not solve the problem; grep will produce its own output timely, but it's still getting its input from X after a long delay.

One could imagine a program which would interpose a pseudo-tty, and make X think it is writing to a terminal, and then the standard I/O library would stay in line-buffered mode by default. Instead of running

X | tee some-file | ...

or whatever, one would do

pseudo-tty-pipe -c X | tee some-file | ...

which allocates a pseudo-tty device, attaches standard output to it, and forks. The child runs X, which dribbles timely into the pseudo-tty while the parent runs a read loop to remove dribbles from the master end of the TTY and copy them timely into the pipe. This would work, although tee itself also has no --unbuffered flag, so you might even have to:

pseudo-tty-pipe -c X | pseudo-tty-pipe -c 'tee some-file' | ...

I don't think such a program exists, and anyway, this is all ridiculous, a ridiculous abuse of the standard I/O library's buffering behavior: we want line buffering, the library will only give it to us if the process is attached to a TTY device, so we fake up a TTY just to fool stdio into giving us what we want. And why? Simply because stdio has no way to explicitly say what we want.

But it could easily expose this behavior as a controllable feature. Currently there is a branch in the library that says how to set up a buffering mode when a stream is opened for the first time:

if the stream is for writing, and is attached to descriptor 2, it should be unbuffered; otherwise …

if the stream is for writing, and connects descriptor 1 to a terminal device, it should be line-buffered; otherwise …

if the moon is waxing …

…

otherwise, the stream should be block-buffered

To this, I propose a simple change, to be inserted right at the beginning:

If the environment variable STDIO_BUF is set to "line", streams default to line buffering. If it's set to "none", streams default to no buffering. If it's set to "block", streams default to block buffering. If it's anything else, or unset, it is ignored.

Now instead of this:

pseudo-tty-pipe -c X | tee some-file | ...

you write this:

STDIO_BUF=line X | tee some-file | ...

Problem solved.

Or maybe you would like to do this:

export STDIO_BUF=line

which then affects every program in every pipeline in the rest of the session:

X | tee some-file | ...

Control is global if you want it, and per-process if you want it.

This feature would cost around 20 lines of C code in the standard I/O library and would impose only an insignificant run-time cost. It would effectively add an --unbuffered flag to every program in the universe, retroactively, and the flag would be the same for every program. You would not have to remember that in mysql the magic option is -n and that in GNU grep it is --line-buffered and that for jq it is --unbuffered and that Python scripts can be unbuffered by supplying the -u flag and that in tee you are just SOL, etc. Setting STDIO_BUF=line would Just Work.

Programming languages would all get this for free also. Python already has PYTHONUNBUFFERED but in other languages you have to do something or other; in Perl you use some horrible Perl-4-ism like

{ my $ofh = select OUTPUT; $|++; select $ofh }

This proposal would fix every programming language everywhere. The Perl code would become:

$ENV{STDIO_BUF} = 'line';

and every other language would be similarly simple:

/* In C */

putenv("STDIO_BUF=line");

[ Addendum 20180521: Mariusz Ceier corrects me, pointing out that this will not work for the process’ own standard streams, as they are pre-opened before the process gets a chance to set the variable. ]

It's easy to think of elaborations on this: STDIO_BUF=1:line might mean that only standard output gets line-buffering by default, while everything else is left up to the library.

This is an easy thing to do. I have wanted this for twenty years. How is it possible that it hasn't been in the GNU/Linux standard library for that long?

[ Addendum 20180521: it turns out there is quite a lot to say about the state of the art here. In particular, NetBSD has the feature very much as I described it. ]

[Other articles in category /Unix] permanent link

Mon, 01 Jan 2018

Converting Google Docs to Markdown

I was on vacation last week and I didn't bring my computer, which has been a good choice in the past. But I did bring my phone, and I spent some quiet time writing various parts of around 20 blog posts on the phone. I composed these in my phone's Google Docs app, which seemed at the time like a reasonable choice.

But when I got back I found that it wasn't as easy as I had expected to get the documents back out. What I really wanted was Markdown. HTML would have been acceptable, since Blosxom accepts that also. I could download a single document in one of several formats, including HTML and ODF, but I had twenty and didn't want to do them one at a time. Google has a bulk download feature, to download a zip file of an entire folder, but upon unzipping I found that all twenty documents had been converted to Microsoft's docx format and I didn't know a good way to handle these. I could not find an option for a bulk download in any other format.

Several tools will compose in Markdown and then export to Google Docs, but the only option I found for translating from Google Docs to Markdown was Renato Mangini's Google Apps script. I would have had to add the script to each of the 20 files, then run it, and the output appears in email, so for this task, it was even less like what I wanted.

The right answer turned out to be: Accept Google's bulk download of docx files and then use Pandoc to convert the docx to Markdown:

for i in *.docx; do
  echo -n "$i ? ";
  read -r j; mv -i "$i" "$j.docx";
  pandoc --extract-media . -t markdown -o "$(suf "$j" mkdn)" "$j.docx";
done

The read is because I had given the files Unix-unfriendly names like Polyominoes as orthogonal polygons.docx and I wanted to give them shorter names like orthogonal-polyominoes.docx.

The suf command is a little utility that performs the very common task of removing or changing the suffix of a filename. The suf "$j" mkdn command means that if $j is something like foo.docx it should turn into foo.mkdn. Here's the tiny source code:

#!/usr/bin/perl
#
# Usage: suf FILENAME [suffix]
#
# If filename ends with a suffix, the suffix is replaced with the given suffix;
# otherwise, the given suffix is appended.
# Only a dot in the last path component starts a suffix.
#
# For example:
#   suf foo.bar baz   => foo.baz
#   suf foo baz       => foo.baz
#   suf foo.bar       => foo
#   suf foo           => foo
use strict;
use warnings;

@ARGV == 2 or @ARGV == 1 or usage();
my ($file, $suf) = @ARGV;
# Remove a trailing ".something" where "something" contains no dot or slash
$file =~ s/\.[^.\/]*$//;
if (defined $suf) {
  print "$file.$suf\n";
} else {
  print "$file\n";
}

sub usage {
  print STDERR "Usage: suf filename [newsuffix]\n";
  exit 1;
}

Often, I feel that I have written too much code, but not this time. Some people might be tempted to add bells and whistles to this: what if the suffix is not delimited by a dot character? What if I only want to change certain suffixes? What if my foot swells up? What if the moon falls out of the sky? Blah blah blah. No, for that we can break out sed.

Next time I go on vacation I will know better and I will not use Google Docs. I don't know yet what instead. StackEdit maybe.

[ Addendum 20180108: Eric Roode pointed out that the program above has a genuine bug: if given a filename like a.b/c.d it truncates the entire b/c.d instead of just the d. The current version fixes this. ]

[Other articles in category /Unix] permanent link

Sun, 02 Apr 2017

A Unix system administrator of my acquaintance once got curious about what people were putting into /dev/null. I think he also may have had some notion that it would contain secrets or other interesting material that people wanted thrown away. Both of these ideas are stupid, but what he did next was even more stupid: he decided to replace /dev/null with a plain file so that he could examine its contents.

The root filesystem quickly filled up and the admin had to be called back from dinner to fix it. But he found that he couldn't fix it: to create a Unix device file you use the mknod command, and its arguments include the major and minor device numbers of the device to create. Our friend didn't remember the correct minor device number. The ls -l command will tell you the numbers of a device file, but he had removed /dev/null, so he couldn't use that.

Having no other system of the same type with an intact device file to check, he was forced to restore /dev/null from the tape backups.

[Other articles in category /Unix] permanent link

Thu, 28 Jul 2016

Controlling the KDE screen locking works now

Yesterday I wrote about how I was trying to control the KDE screenlocker's timeout from a shell script and all the fun stuff I learned along the way. Then after I published the article I discovered that my solution didn't work. But today I fixed it and it does work.

What didn't work

I had written this script:

timeout=${1:-3600}

perl -i -lpe 's/^Enabled=.*/Enabled=False/' $HOME/.kde/share/config/kscreensaverrc

qdbus org.freedesktop.ScreenSaver /MainApplication reparseConfiguration

sleep $timeout

perl -i -lpe 's/^Enabled=.*/Enabled=True/' $HOME/.kde/share/config/kscreensaverrc

qdbus org.freedesktop.ScreenSaver /MainApplication reparseConfiguration

The strategy was: use perl to rewrite the screen locker's configuration file, and then use qdbus to send a D-Bus message to the screen locker to order it to load the updated configuration.

This didn't work. The System Settings app would see the changed configuration, and report what I expected, but the screen saver itself was still behaving according to the old configuration. Maybe the qdbus command was wrong or maybe the whole theory was bad.

More strace

For want of anything else to do (when all you have is a hammer…), I went back to using strace to see what else I could dig up, and tried

strace -ff -o /tmp/ss/s /usr/bin/systemsettings

which tells strace to write separate files for each process or thread.

I had a fantasy that by splitting the trace for each process into a separate file, I might solve the mysterious problem of the missing string data. This didn't come true, unfortunately.

I then ran tail -f on each of the output files, and used systemsettings to update the screen locker configuration, looking to see which of the trace files changed. I didn't get too much out of this. A great deal of the trace was concerned with X protocol traffic between the application and the display server. But I did notice this portion, which I found extremely suggestive, even with the filenames missing:

3106 open(0x2bb57a8, O_RDWR|O_CREAT|O_CLOEXEC, 0666) = 18

3106 fcntl(18, F_SETFD, FD_CLOEXEC) = 0

3106 chmod(0x2bb57a8, 0600) = 0

3106 fstat(18, {...}) = 0

3106 write(18, 0x2bb5838, 178) = 178

3106 fstat(18, {...}) = 0

3106 close(18) = 0

3106 rename(0x2bb5578, 0x2bb4e48) = 0

3106 unlink(0x2b82848) = 0

You may recall that my theory was that when I click the “Apply” button in System Settings, it writes out a new version of $HOME/.kde/share/config/kscreensaverrc and then orders the screen locker to reload the configuration. Even with no filenames, this part of the trace looked to me like the replacement of the configuration file: a new file is created, then written, then closed, and then the rename replaces the old file with the new one. If I had been thinking about it a little harder, I might have thought to check if the return value of the write call, 178 bytes, matched the length of the file. (It does.) The unlink at the end is deleting the semaphore file that System Settings created to prevent a second process from trying to update the same file at the same time.

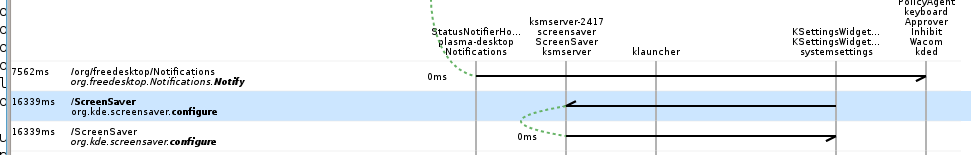

Supposing that this was the trace of the configuration update, the next section should be the secret sauce that tells the screen locker to look at the new configuration file. It looked like this:

3106 sendmsg(5, 0x7ffcf37e53b0, MSG_NOSIGNAL) = 168

3106 poll([?] 0x7ffcf37e5490, 1, 25000) = 1

3106 recvmsg(5, 0x7ffcf37e5390, MSG_CMSG_CLOEXEC) = 90

3106 recvmsg(5, 0x7ffcf37e5390, MSG_CMSG_CLOEXEC) = -1 EAGAIN (Resource temporarily unavailable)

3106 sendmsg(5, 0x7ffcf37e5770, MSG_NOSIGNAL) = 278

3106 sendmsg(5, 0x7ffcf37e5740, MSG_NOSIGNAL) = 128

There is very little to go on here, but none of it is inconsistent with the theory that this is the secret sauce, or even with the more advanced theory that it is the secret sauce and that the secret sauce is a D-Bus request. But without seeing the contents of the messages, I seemed to be at a dead end.

Thrashing

Browsing random pages about the KDE screen locker, I learned that the lock screen configuration component could be run separately from the rest of System Settings. You use

kcmshell4 --list

to get a list of available components, and then

kcmshell4 screensaver

to run the screensaver component. I started running strace on this command instead of on the entire System Settings app, with the idea that if nothing else, the trace would be smaller and perhaps simpler, and for some reason the missing strings appeared. That suggestive block of trace output above turned out to be updating the configuration file, just as I had suspected:

open("/home/mjd/.kde/share/config/kscreensaverrcQ13893.new", O_RDWR|O_CREAT|O_CLOEXEC, 0666) = 19

fcntl(19, F_SETFD, FD_CLOEXEC) = 0

chmod("/home/mjd/.kde/share/config/kscreensaverrcQ13893.new", 0600) = 0

fstat(19, {st_mode=S_IFREG|0600, st_size=0, ...}) = 0

write(19, "[ScreenSaver]\nActionBottomLeft=0\nActionBottomRight=0\nActionTopLeft=0\nActionTopRight=2\nEnabled=true\nLegacySaverEnabled=false\nPlasmaEnabled=false\nSaver=krandom.desktop\nTimeout=60\n", 177) = 177

fstat(19, {st_mode=S_IFREG|0600, st_size=177, ...}) = 0

close(19) = 0

rename("/home/mjd/.kde/share/config/kscreensaverrcQ13893.new", "/home/mjd/.kde/share/config/kscreensaverrc") = 0

unlink("/home/mjd/.kde/share/config/kscreensaverrc.lock") = 0

And the secret sauce was revealed:

sendmsg(7, {msg_name(0)=NULL, msg_iov(2)=[{"l\1\0\1\30\0\0\0\v\0\0\0\177\0\0\0\1\1o\0\25\0\0\0/org/freedesktop/DBus\0\0\0\6\1s\0\24\0\0\0org.freedesktop.DBus\0\0\0\0\2\1s\0\24\0\0\0org.freedesktop.DBus\0\0\0\0\3\1s\0\f\0\0\0GetNameOwner\0\0\0\0\10\1g\0\1s\0\0", 144}, {"\23\0\0\0org.kde.screensaver\0", 24}], msg_controllen=0, msg_flags=0}, MSG_NOSIGNAL) = 168

sendmsg(7, {msg_name(0)=NULL, msg_iov(2)=[{"l\1\1\1\206\0\0\0\f\0\0\0\177\0\0\0\1\1o\0\25\0\0\0/org/freedesktop/DBus\0\0\0\6\1s\0\24\0\0\0org.freedesktop.DBus\0\0\0\0\2\1s\0\24\0\0\0org.freedesktop.DBus\0\0\0\0\3\1s\0\10\0\0\0AddMatch\0\0\0\0\0\0\0\0\10\1g\0\1s\0\0", 144}, {"\201\0\0\0type='signal',sender='org.freedesktop.DBus',interface='org.freedesktop.DBus',member='NameOwnerChanged',arg0='org.kde.screensaver'\0", 134}], msg_controllen=0, msg_flags=0}, MSG_NOSIGNAL) = 278

sendmsg(7, {msg_name(0)=NULL, msg_iov(2)=[{"l\1\0\1\0\0\0\0\r\0\0\0j\0\0\0\1\1o\0\f\0\0\0/ScreenSaver\0\0\0\0\6\1s\0\23\0\0\0org.kde.screensaver\0\0\0\0\0\2\1s\0\23\0\0\0org.kde.screensaver\0\0\0\0\0\3\1s\0\t\0\0\0configure\0\0\0\0\0\0\0", 128}, {"", 0}], msg_controllen=0, msg_flags=0}, MSG_NOSIGNAL) = 128

sendmsg(7, {msg_name(0)=NULL,

msg_iov(2)=[{"l\1\1\1\206\0\0\0\16\0\0\0\177\0\0\0\1\1o\0\25\0\0\0/org/freedesktop/DBus\0\0\0\6\1s\0\24\0\0\0org.freedesktop.DBus\0\0\0\0\2\1s\0\24\0\0\0org.freedesktop.DBus\0\0\0\0\3\1s\0\v\0\0\0RemoveMatch\0\0\0\0\0\10\1g\0\1s\0\0",

144},

{"\201\0\0\0type='signal',sender='org.freedesktop.DBus',interface='org.freedesktop.DBus',member='NameOwnerChanged',arg0='org.kde.screensaver'\0",

134}]

(I had to give strace the -s 256 flag to tell it not to truncate the string data to 32 characters.)

Binary gibberish

A lot of this is illegible, but it is clear, from the frequent mentions of DBus, and from the names of D-Bus objects and methods, that these are D-Bus requests, as theorized. Much of it is binary gibberish that we can only read if we understand the D-Bus line protocol, but the object and method names are visible. For example, consider this long string:

interface='org.freedesktop.DBus',member='NameOwnerChanged',arg0='org.kde.screensaver'

With qdbus I could confirm that there was a service named org.freedesktop.DBus with an object named / that supported a NameOwnerChanged method which expected three QString arguments. Presumably the first of these was org.kde.screensaver and the others were hiding elsewhere in the 134 characters that strace didn't expand. So I may not understand the whole thing, but I could see that I was on the right track.

That third line was the key:

sendmsg(7, {msg_name(0)=NULL,

msg_iov(2)=[{"… /ScreenSaver … org.kde.screensaver … org.kde.screensaver … configure …", 128}, {"", 0}],

msg_controllen=0,

msg_flags=0},

MSG_NOSIGNAL) = 128

Huh, it seems to be asking the screensaver to configure itself. Just like I thought it should. But there was no configure method, so what does that configure refer to, and how can I do the same thing?

But org.kde.screensaver was not quite the same path I had been using to talk to the screen locker; I had been using org.freedesktop.ScreenSaver. So I had qdbus list the methods at this new path, and there was a configure method.

When I tested

qdbus org.kde.screensaver /ScreenSaver configure

I found that this made the screen locker take note of the updated configuration. So, problem solved!

(As far as I can tell, org.kde.screensaver and org.freedesktop.ScreenSaver are completely identical. They each have a configure method, but I had overlooked it, several times in a row, earlier when I had gone over the method catalog for org.freedesktop.ScreenSaver.)

The working script is almost identical to what I had yesterday:

timeout=${1:-3600}

perl -i -lpe 's/^Enabled=.*/Enabled=False/' $HOME/.kde/share/config/kscreensaverrc

qdbus org.freedesktop.ScreenSaver /ScreenSaver configure

sleep $timeout

perl -i -lpe 's/^Enabled=.*/Enabled=True/' $HOME/.kde/share/config/kscreensaverrc

qdbus org.freedesktop.ScreenSaver /ScreenSaver configure

That's not a bad way to fail, as failures go: I had a correct idea about what was going on, my plan about how to solve my problem would have worked, but I was tripped up by a trivium; I was calling MainApplication.reparseConfiguration when I should have been calling ScreenSaver.configure.

What if I hadn't been able to get strace to disgorge the internals of the D-Bus messages? I think I would have gotten the answer anyway. One way to have gotten there would have been to notice the configure method documented in the method catalog printed out by qdbus. I certainly looked at these catalogs enough times, and they are not very large. I don't know why I never noticed it on my own. But I might also have had the idea of spying on the network traffic through the D-Bus socket, which is under /tmp somewhere.

I was also starting to tinker with dbus-send, which is like qdbus but more powerful, and can post signals, which I think qdbus can't do, and with gdbus, another D-Bus introspector. I would have kept getting more familiar with these tools and this would have led somewhere useful.

Or had I taken just a little longer to solve this, I would have followed up on Sumana Harihareswara’s suggestion to look at Bustle, which is a utility that logs and traces D-Bus requests. It would certainly have solved my problem, because it makes perfectly clear that clicking that apply button invoked the configure method.

I still wish I knew why strace hadn't been able to print out those strings, though.

[Other articles in category /Unix] permanent link

Wed, 27 Jul 2016

Controlling KDE screen locking from a shell script

Lately I've started watching stuff on Netflix. Every time I do this, the screen locker kicks in sixty seconds in, and I have to unlock it, pause the video, and adjust the system settings to turn off the automatic screen locker. I can live with this.

But when the show is over, I often forget to re-enable the automatic screen locker, and that I can't live with. So I wanted to write a shell script:

#!/bin/sh

auto-screen-locker disable

sleep 3600

auto-screen-locker enable

Then I'll run the script in the background before I start watching, or at least after the first time I unlock the screen, and if I forget to re-enable the automatic locker, the script will do it for me.

The question is: how to write auto-screen-locker?

strace

My first idea was: maybe there is actually an auto-screen-locker command, or a system-settings command, or something like that, which was being run by the System Settings app when I adjusted the screen locker from System Settings, and all I needed to do was to find out what that command was and to run it myself.

So I tried running System Settings under strace -f and then looking at the trace to see if it was execing anything suggestive.

It wasn't, and the trace was 93,000 lines long and frightening. Halfway through, it stopped recording filenames and started recording their string addresses instead, which meant I could see a lot of calls to execve but not what was being execed. I got sidetracked trying to understand why this had happened, and I never did figure it out. It had something to do with a call to clone, which is like fork, but different in a way I might understand once I read the man page.

The first thing the cloned process did was to call set_robust_list, which I had never heard of, and when I looked for its man page I found to my surprise that there was one. It begins:

NAME

get_robust_list, set_robust_list - get/set list of robust futexes

And then I felt like an ass because, of course, everyone knows all about the robust futex list, duh, how silly of me to have forgotten ha ha just kidding WTF is a futex? Are the robust kind better than regular wimpy futexes?

It turns out that Ingo Molnár wrote a lovely explanation of robust futexes which are actually very interesting. In all seriousness, do check it out.

I seem to have digressed. This whole section can be summarized in one sentence:

strace was no help and took me a long way down a wacky rabbit hole.

Sorry, Julia!

Stack Exchange

The next thing I tried was a Google search for kde screen locker. The second or third link I followed was to this StackExchange question, “What is the screen locking mechanism under KDE?”

It wasn't exactly what I was looking for but it was suggestive and pointed me in the right direction. The crucial point in the answer was a mention of

qdbus org.freedesktop.ScreenSaver /ScreenSaver Lock

When I saw this, it was like a new section of my brain coming on line. So many things that had been obscure suddenly became clear. Things I had wondered for years. Things like “What are these horrible

Object::connect: No such signal org::freedesktop::UPower::DeviceAdded(QDBusObjectPath)

messages that KDE apps are always spewing into my terminal?” But now the light was on.

KDE is built atop a toolkit called Qt, and Qt provides access to an interprocess communication mechanism called “D-Bus”. The qdbus command, which I had not seen before, is apparently for sending queries and commands on the D-Bus. The arguments identify the recipient and the message you are sending. If you know the secret name of the correct demon, and you send it the correct secret command, it will do your bidding. (The mystery message above probably has something to do with the app using an invalid secret name as a D-Bus address.)

Often these sorts of address hierarchies work well in theory and then fail utterly because there is no way to learn the secret names. The X Window System has always had a feature called “resources” by which almost every aspect of every application can be individually customized. If you are running xweasel and want just the frame of just the error panel of just the output window to be teal blue, you can do that… if you can find out the secret names of the xweasel program, its output window, its error panel, and its frame. Then you combine these into a secret X resource name, incant a certain command to load the new resource setting into the X server, and the next time you run xweasel the one frame, and only the one frame, will be blue.

In theory these secret names are documented somewhere, maybe. In practice, they are not documented anywhere. You can only extract them from the source, and not only from the source of xweasel itself but from the source of the entire widget toolkit that xweasel is linked with. Good luck, sucker.

D-Bus has a directory

However! The designers of D-Bus did not forget to include a directory mechanism. If you run

qdbus

you get a list of all the addressable services, which you can grep for suggestive items, including org.freedesktop.ScreenSaver. Then if you run

qdbus org.freedesktop.ScreenSaver

you get a list of all the objects provided by the org.freedesktop.ScreenSaver service; there are only seven. So you pick a likely-seeming one, say /ScreenSaver, and run

qdbus org.freedesktop.ScreenSaver /ScreenSaver

and get a list of all the methods that can be called on this object, and their argument types and return value types. And you see for example

method void org.freedesktop.ScreenSaver.Lock()

and say “I wonder if that will lock the screen when I invoke it?” And then you try it:

qdbus org.freedesktop.ScreenSaver /ScreenSaver Lock

and it does.

That was the most important thing I learned today, that I can go wandering around in the qdbus hierarchy looking for treasure. I don't yet know exactly what I'll find, but I bet there's a lot of good stuff.

When I was first learning Unix I used to wander around in the filesystem looking at all the files, and I learned a lot that way also.

“Hey, look at all the stuff in /etc! Huh, I wonder what's in /etc/passwd?”

“Hey, /etc/protocols has a catalog of protocol numbers. I wonder what that's for?”

“Hey, there are a bunch of files in /usr/spool/mail named after users and the one with my name has my mail in it!”

“Hey, the manuals are all under /usr/man. I could grep them!”

Later I learned (by browsing in /usr/man/man7) that there was a hier(7) man page that listed points of interest, including some I had overlooked.

The right secret names

Everything after this point was pure fun of the “what happens if I turn this knob” variety. I tinkered around with the /ScreenSaver methods a bit (there are twenty) but none of them seemed to be quite what I wanted. There is a

method uint Inhibit(QString application_name, QString reason_for_inhibit)

method which someone should be calling, because that's evidently what you call if you are a program playing a video and you want to inhibit the screen locker. But the unknown someone was delinquent and it wasn't what I needed for this problem.

Then I moved on to the /MainApplication object and found

method void org.kde.KApplication.reparseConfiguration()

which wasn't quite what I was looking for either, but it might do: I could perhaps modify the configuration and then invoke this method. I dimly remembered that KDE keeps configuration files under $HOME/.kde, so I ls -la-ed that and quickly found share/config/kscreensaverrc, which looked plausible from the outside, and more plausible when I saw what was in it:

Enabled=True

Timeout=60

among other things. I hand-edited the file to change the 60 to 243, ran

qdbus org.freedesktop.ScreenSaver /MainApplication reparseConfiguration

and then opened up the System Settings app. Sure enough, the System Settings app now reported that the lock timeout setting was “4 minutes”. And changing Enabled=True to Enabled=False and back made the System Settings app report that the locker was enabled or disabled.

The answer

So the script I wanted turned out to be:

timeout=${1:-3600}

perl -i -lpe 's/^Enabled=.*/Enabled=False/' $HOME/.kde/share/config/kscreensaverrc

qdbus org.freedesktop.ScreenSaver /MainApplication reparseConfiguration

sleep $timeout

perl -i -lpe 's/^Enabled=.*/Enabled=True/' $HOME/.kde/share/config/kscreensaverrc

qdbus org.freedesktop.ScreenSaver /MainApplication reparseConfiguration

Problem solved, but as so often happens, the journey was more important than the destination.

I am greatly looking forward to exploring the D-Bus hierarchy and sending all sorts of inappropriate messages to the wrong objects.

Just before he gets his ass kicked by Saruman, that insufferable know-it-all Gandalf says “He who breaks a thing to find out what it is has left the path of wisdom.” If I had been Saruman, I would have kicked his ass at that point too.

Addendum

Right after I posted this, I started watching Netflix. The screen locker cut in after sixty seconds. “Aha!” I said. “I'll run my new script!”

I did, and went back to watching. Sixty seconds later, the screen locker cut in again. My script doesn't work! The System Settings app says the locker has been disabled, but it's mistaken. Probably it's only reporting the contents of the configuration file that I edited, and the secret sauce is still missing. The System Settings app does something to update the state of the locker when I click that “Apply” button, and I thought that my qdbus command was doing the same thing, but it seems that it isn't.

I'll figure this out, but maybe not today. Good night all!

[ Addendum 20160728: I figured it out the next day ]

[ Addendum 20160729: It has come to my attention that there is actually a program called xweasel. ]

[Other articles in category /Unix] permanent link

Tue, 21 Apr 2015

Another use for strace (isatty)

(This is a followup to an earlier article describing an interesting use of strace.)

A while back I was writing a talk about Unix internals and I wanted to

discuss how the ls command does a different display when talking to

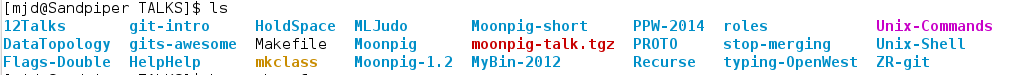

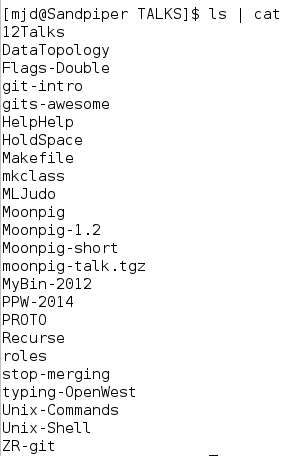

a terminal than otherwise:

ls to a terminal

ls not to a terminal

How does ls know when it is talking to a terminal? I expect that it uses the standard POSIX function isatty. But how does isatty find out?

I had written down my guess. Had I been programming in C, without

isatty, I would have written something like this:

use feature 'say';
my @statinfo = stat STDOUT;                     # fstat() on standard output
if ( ($statinfo[2] & 0060000) == 0020000        # a character device?
     && (($statinfo[6] >> 8) & 0xff) == 5 ) {   # with major number 5?
  say "Terminal";
} else {
  say "Not a terminal";
}

(This is Perl, written as if it were C.) It uses fstat (exposed in Perl as stat) to get the mode bits ($statinfo[2]) of the inode attached to STDOUT, and then it masks out the bits that determine whether the inode is a character device file. If so, $statinfo[6] holds the major and minor device numbers: the minor number is in the low byte and the major number in the byte above it. If the major number is equal to the magic number 5, the device is a terminal device. On my current computers the magic number is actually 136. Obviously this magic number is nonportable. You may hear people claim that those bit operations are also nonportable. I believe that claim is mistaken.

The analogous code using isatty is:

use feature 'say';
use POSIX 'isatty';
if (isatty(STDOUT)) { say "Terminal" }
else { say "Not a terminal" }

Is isatty doing what I wrote above? Or something else?

Let's use strace to find out. Here's our test script:

% perl -MPOSIX=isatty -le 'print STDERR isatty(STDOUT) ? "terminal" : "nonterminal"'

terminal

% perl -MPOSIX=isatty -le 'print STDERR isatty(STDOUT) ? "terminal" : "nonterminal"' > /dev/null

nonterminal

Now we use strace:

% strace -o /tmp/isatty perl -MPOSIX=isatty -le 'print STDERR isatty(STDOUT) ? "terminal" : "nonterminal"' > /dev/null

nonterminal

% less /tmp/isatty

We expect to see a long startup as Perl gets loaded and initialized, then whatever isatty is doing, the write of nonterminal, and then a short teardown, so we start searching at the end and quickly discover, a couple of screens up:

ioctl(1, SNDCTL_TMR_TIMEBASE or TCGETS, 0x7ffea6840a58) = -1 ENOTTY (Inappropriate ioctl for device)

write(2, "nonterminal", 11) = 11

write(2, "\n", 1) = 1

My guess about fstat was totally wrong! The actual method is that isatty makes an ioctl call, which sends a device-driver-specific command. The TCGETS parameter says what the command is, in this case “get the terminal configuration”. If you do this on a non-device, or a non-terminal device, the call fails with the error ENOTTY. When the ioctl call fails, you know you don't have a terminal. If you do have a terminal, the TCGETS command has no effect, because it is a passive read of the terminal state. Here's the successful call:

ioctl(1, SNDCTL_TMR_TIMEBASE or TCGETS, {B38400 opost isig icanon echo ...}) = 0

write(2, "terminal", 8) = 8

write(2, "\n", 1) = 1

The B38400 opost… stuff is the terminal configuration; 38400 is the baud rate.

(In the past the explanatory text for ENOTTY was the mystifying “Not a typewriter”, even more mystifying because it tended to pop up when you didn't expect it. Apparently Linux has revised the message to the possibly less mystifying “Inappropriate ioctl for device”.)

(SNDCTL_TMR_TIMEBASE is mentioned because apparently someone decided to give their SNDCTL_TMR_TIMEBASE operation, whatever that is, the same numeric code as TCGETS, and strace isn't sure which one is being requested. It's possible that if we figured out which device was expecting SNDCTL_TMR_TIMEBASE, and redirected standard output to that device, isatty would erroneously claim that it was a terminal.)

[ Addendum 20150415: Paul Bolle has found that SNDCTL_TMR_TIMEBASE pertains to the old and possibly deprecated OSS (Open Sound System). It is conceivable that isatty would yield the wrong answer when pointed at the OSS /dev/dsp or /dev/audio device or similar. If anyone is running OSS and willing to give it a try, please contact me at mjd@plover.com. ]

[ Addendum 20191201: Thanks to Hacker News user jwilk for pointing out that strace is now able to distinguish TCGETS from SNDCTL_TMR_TIMEBASE. ]

[Other articles in category /Unix] permanent link

Sun, 19 Apr 2015

Another use for strace (groff)

The marvelous Julia Evans is always looking for ways to express her love of strace and now has written a zine about it. I don't use strace that often (not as often as I should, perhaps) but every once in a while a problem comes up for which it's not only the right thing to use but the only thing to use. This was one of those times.

I sometimes use the ancient Unix drawing language pic. Pic has many good features, but is unfortunately coupled too closely to the Roff family of formatters (troff, nroff, and the GNU project version, groff). It only produces Roff output, and not anything more generally useful like SVG or even a bitmap. I need raw images to inline into my HTML pages. In the past I have produced these with a jury-rigged pipeline: groff to produce PostScript, then GNU Ghostscript (gs) to translate the PostScript to a PPM bitmap, then some PPM utilities to crop and scale the result, and finally ppmtogif or whatever. This has some drawbacks. For example, gs requires that I set a paper size, and its largest paper size is A0. This means that large drawings go off the edge of the “paper” and gs discards the out-of-bounds portions.

So yesterday I looked into eliminating gs. Specifically I wanted to see if I could get groff to produce the bitmap directly.

GNU groff has a -Tdevice option that specifies the output device; some choices are -Tps for PostScript output and -Tpdf for PDF output. So I thought perhaps there would be a -Tppm or something like that. A search of the manual did not suggest anything so useful, but did mention -TX100, which had something to do with 100-DPI X Window System graphics. But when I tried this, groff only said:

groff: can't find `DESC' file

groff:fatal error: invalid device `X100'

The groff -h command said only “-Tdev use device dev”. So what devices are actually available?

strace to the rescue! I did:

% strace -o /tmp/gr groff -Tfpuzhpx

and then a search for fpuzhpx in the output file tells me exactly where groff is searching for device definitions:

% grep fpuzhpx /tmp/gr

execve("/usr/bin/groff", ["groff", "-Tfpuzhpx"], [/* 80 vars */]) = 0

open("/usr/share/groff/site-font/devfpuzhpx/DESC", O_RDONLY) = -1 ENOENT (No such file or directory)

open("/usr/share/groff/1.22.2/font/devfpuzhpx/DESC", O_RDONLY) = -1 ENOENT (No such file or directory)

open("/usr/lib/font/devfpuzhpx/DESC", O_RDONLY) = -1 ENOENT (No such file or directory)

I could then examine those three directories to see if they existed, and if so find out what was in them.

Without strace here, I would be reduced to groveling over the source, which in this case is likely to mean trawling through the autoconf output, and that is something that nobody wants to do.

[ Addendum 20150421: another article about strace. ]

[ Addendum 20150424: I did figure out how to prevent gs from cropping my output. You can use the flag -P-p48i,48i to groff to set the page size to 48 inches (48i) by 48 inches. The flag is passed to grops, and then the resulting PostScript file contains

%%DocumentMedia: Default 3456 3456 0 () ()

which instructs gs to pretend the paper size is that big. If it's not big enough, increase 48i to 120i or whatever. ]
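The 3456 in that %%DocumentMedia line is measured in PostScript points, of which there are 72 to the inch, so the numbers check out; the 120i suggestion would likewise give 8640 points on a side:

```latex
48\,\text{in} \times 72\,\tfrac{\text{pt}}{\text{in}} = 3456\,\text{pt},
\qquad
120\,\text{in} \times 72\,\tfrac{\text{pt}}{\text{in}} = 8640\,\text{pt}
```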

[Other articles in category /Unix] permanent link

Fri, 17 Feb 2012

It came from... the HOLD SPACE

Since 2002, I've given a talk almost every December for the Philadelphia Linux Users' Group. It seems like most of their talks are about the newest and best developments in Linux applications, which is a topic I don't know much about. So I've usually gone the other way, talking about the oldest and worst stuff. I gave a couple of pretty good talks about how files work, for example, and what's in the inode structure.

I recently posted about my work on Zach Holman's spark program, which culminated in a ridiculous workaround for the shell's lack of fractional arithmetic. That work inspired me to do a talk about all the awful crap we had to deal with before we had Perl. (And the other 'P' languages that occupy a similar solution space.) Complete materials are here. I hope you check them out, because I think they are fun. This post is a bunch of miscellaneous notes about the talk.

One example of awful crap we had to deal with before Perl etc. were invented was that some people used to write 'sed scripts', although I am really not sure how they did it. I tried once, without much success, and then for this talk I tried again, and again did not have much success.

The little guy to the right is known as hallucigenia. It is a creature so peculiar that when the paleontologists first saw the fossils, they could not even agree on which side was uppermost. It has nothing to do with Unix, but I put it on the slide to illustrate "alien horrors from the dawn of time".

Between slides 9 and 10 (about the ed line editor) I did a quick demo of editing with ed. You will just have to imagine this. I first learned to program with a line editor like ed, on a teletypewriter just like the one on slide 8.

Modern editors are much better. But it used to be that Unix sysadmins were expected to know at least a little ed, because if your system got into some horrible state where it couldn't mount the /usr partition, you wouldn't be able to run /usr/bin/vi or /usr/local/bin/emacs, but you would still be able to use /bin/ed to fix /etc/fstab or whatever else was broken. Knowing ed saved my bacon several times.

(Speaking of teletypewriters, ours had an attachment for punching paper tape, which you can see on the left side of the picture. The punched chads fell into a plastic chad box (which is missing in the picture), and when I was about three I spilled the chad box. Chad was everywhere, and it was nearly impossible to pick up. There were still chads stuck in the cracks in the floorboards when we moved out three years later. That's why, when the contested election of 2000 came around, I