Mark Dominus (陶敏修)

mjd@pobox.com


Thu, 12 Feb 2026

Language models imply world models

In a recent article about John Haugeland's rejection of micro-worlds I claimed:

as a “Large Language Model”, Claude necessarily includes a model of the world in general

Nobody has objected to this remark, but I would like to expand on it. The claim may or may not be true — it is an empirical question. But as a theory it has been widely entertained since the very earliest days of digital computers. Yehoshua Bar-Hillel, the first person to seriously investigate machine translation, came to this conclusion in the 1950s. Here's an extract of Haugeland's discussion of his work:

In 1951 Yehoshua Bar-Hillel became the first person to earn a living from work on machine translation. Nine years later he was the first to point out the fatal flaw in the whole enterprise, and therefore to abandon it. Bar-Hillel proposed a simple test sentence:

The box was in the pen.

And, for discussion, he considered only the ambiguity: (1) pen = a writing instrument; versus (2) pen = a child's play enclosure. Extraordinary circumstances aside (they only make the problem harder), any normal English speaker will instantly choose "playpen" as the right reading. How? By understanding the sentence and exercising a little common sense. As anybody knows, if one physical object is in another, then the latter must be the larger; fountain pens tend to be much smaller than boxes, whereas playpens are plenty big.

Why not encode these facts (and others like them) right into the system? Bar-Hillel observes:

What such a suggestion amounts to, if taken seriously, is the requirement that a translation machine should not only be supplied with a dictionary but also with a universal encyclopedia. This is surely utterly chimerical and hardly deserves any further discussion. (1960, p. 160)

(Artificial Intelligence: The Very Idea; John Haugeland; p.174–176.)

Bar-Hillel says, and I agree, that an accurate model of language requires an accurate model of the world. In 1960, this appeared “utterly chimerical”. Perhaps so, but here we are, and 66 years later we have what most agree is a language model capable of producing intelligible text complex enough to fool sophisticated readers. Even people who call the LLM a “stochastic word garbage spewer” and object when it is called “AI” seem to have no qualms about the term “large language model”.

The Winograd SHRDLU project was an attempt to construct a world whose model was small enough to not be utterly chimerical. This worked, but as Haugeland says, it wasn't actually interesting.

Doug Lenat tried for forty years to construct an explicit world model. When he died in 2023 I think people still considered the project to have been utterly chimerical.

I may be misrepresenting Bar-Hillel's views. He didn't actually say “world model”, he said “universal encyclopedia”. The name of Lenat's project, “Cyc”, acknowledges this: “Cyc” is short for “encyclopedia”. And until recently a universal encyclopedia did seem, to many, to be utterly chimerical. (Imperial China produced many interesting attempts.) But we do now in fact have a universal encyclopedia, and Claude has ingested that universal encyclopedia.

Let's try it and see

Bar-Hillel thought that the “box is in the pen” example proved that machine translation would be at the very least extremely difficult: it requires understanding of the usual relative sizes of boxes and pens, and that the possibility of such understanding in general “hardly deserves any further discussion”.

I asked Claude:

Consider the sentence “the box was in the pen”. What does “pen” mean here?

Claude said:

Most likely a playpen or animal pen (enclosure), since a box wouldn't fit inside a writing pen.

Presumably Bar-Hillel's article and Haugeland's book were in Claude's training data, and perhaps it somehow absorbed the right thing to say here without actually knowing anything about boxes or pens. I tried the next similar example that came to mind:

How about “suit” in “what suit was the king”?

Playing card suit - hearts, diamonds, clubs, or spades.

Why?

The context "what suit was the king" is the standard phrasing for identifying a playing card. A king is one of the face cards in each suit, so asking about its suit is a natural card game question.

Okay, that's just what I would have said.

Why couldn't it be a human king wearing a suit?

It could be - I jumped to the card interpretation because "what suit was the king" uses "suit" as a predicate noun, which is uncommon phrasing for clothing. You'd more naturally say "what suit was the king wearing" or "what kind of suit did the king have." But without more context, the clothing interpretation is perfectly plausible.

I think Claude is wrong about the grammar here. I'm not sure what “predicate noun” means and I suspect Claude is using the term incorrectly. I can't understand “what suit was the king?” as grammatical in any plausible construction, only in highly contrived situations such as an evil wizard transforming the king into a suit of armor and hiding him in the armory.

But if one agrees to take it to mean “what suit did the king have” I agree that this is a perfectly plausible interpretation even though there isn't anything clearly clothing-related nearby, and if one assumes it meant “what suit was the king wearing” then the clothing interpretation is unavoidable.

Could it be a lawsuit?

Yes - "what suit was the king" could mean what lawsuit the king was involved in. That reading makes grammatical sense, though it would be more natural with "in" ("what suit was the king in"). Context would be needed to distinguish between cards, clothing, and lawsuits.

Again I disagree that this makes grammatical sense. Without “in” I think a native listener would be at least puzzled. (“Do you mean ‘what suit was the king involved in?’?”)

Could it be a misspelling for “soot”?

Yes, that's plausible - "what soot was the king" could be asking what type of soot the king was covered in or exposed to. Though it's an odd question without specific context like a fire or industrial setting.

In my opinion, it is not grammatically plausible.

However, I think focusing on the grammatical errors would be missing the point. The “soot” interpretation is unlikely, I think the bad grammar rules it out, and Claude's response does not seem to recognize this. But Claude does express understanding that the interpretation is unlikely without some surrounding soot-related context, and a reasonable idea of what that context would need to be like.

What if it were “what color is the king”?

If it's "what color is the king," then the card suit interpretation becomes much less likely (suits aren't typically described as colors, though red/black could work). It's more likely asking about a physical object - a chess piece, a game token, a statue, or similar - where the king's color is relevant.

I had been thinking of “color” as referring to the king's skin color, but I agree that the chess piece or game token interpretation is more plausible. Again Claude seems to understand something about how we talk about things. If the king were a playing card, we might be asking if it is a red king or a black king. But without that context it's now more likely that the king is a chess piece than a playing card.

What if the sentence was known to have been translated from German?

That would strengthen the card suit interpretation significantly. German card suits (Herz, Karo, Pik, Kreuz) are often called "Farbe" (color) in German card game terminology, so "what color is the king" could be a literal translation of a question about which suit a king card belongs to.

That's just what I would have said.

Could “suit” have been an accidental misspelling of “age”?

On a standard keyboard, that seems unlikely - 'a', 'g', 'e' are nowhere near 's', 'u', 'i', 't'. Autocorrect or speech-to-text could produce it, but not a typical typing error.

I thought Claude might botch this. There is no grammatical or semantic reason why “what age was the king” doesn't work, so the only reason to reject this is orthographic. And Claude's grasp of spelling still seems quite weak. The other day it claimed that “‘Warner’ is likely either a typo for ‘Cham’ or …” which couldn't be wronger. I told it at the time that it is impossible that "Cham" could be a typo for “Warner”, and if pressed to explain why, I would have said something like what Claude said here.

Tentative conclusions

Interesting as this all is, it is a digression. My main points, again:

It is at least plausible that coherent speech requires a model of a large fraction of the world, and, while it may yet turn out to be false, this theory has been seen as plausible for generations.

Whatever else Claude can or can't do, it can certainly speak coherently.

Therefore Claude probably does have something like a model of a substantial part of the world.

In 1960 this appeared completely impossible.

But here we are.

Addendum

20260214

I disagreed with Claude that “what suit was the king” made grammatical sense. Rik Signes has pointed out that it is certainly grammatical, because the grammar is the same as “what person was the king” or “what visitor was the king”. My discomfort with it is not grammatical, it is pragmatic.


Thu, 05 Feb 2026

John Haugeland on the failure of micro-worlds

One of the better books I read in college was Artificial Intelligence: The Very Idea (1985) by philosopher John Haugeland. One of the sections I found most striking and memorable was about Terry Winograd's SHRDLU. SHRDLU, around 1970, could carry on a discussion in English in which it would manipulate imaginary colored blocks in a “blocks world” displayed on a computer screen. The operator could direct it to “pick up the pyramid and put it on the big red cube” or ask it questions like “what color is the biggest cylinder that isn't on the table?”.

Haugeland was extremely unimpressed (p.190, and more generally 185–195):

To dwell on these shortcomings, however, is to miss the fundamental limitation: the micro-world itself. SHRDLU performs so glibly only because his domain has been stripped of anything that could ever require genuine wit or understanding. In other words, far from digging down to the essential questions of AI, a micro-world simply eliminates them. … the blocks-world "approximates" a playroom more as a paper plane approximates a duck.

He imagines this exchange between the operator and SHRDLU:

- Trade you a squirtgun for a big red block.

SORRY, I DON'T KNOW THE WORD "TRADE".

Oops, a vocabulary snag. Fortunately, SHRDLU can learn new words; Winograd taught him about steeples, and now we'll tell him about trades (and squirtguns).

- A "trade" is a free exchange of property.

SORRY, I DON'T KNOW THE WORD "FREE".

- A "free" act is done willingly, without being forced.

SORRY, I DON'T KNOW THE WORD "ACT".

- "Acts" are what people do on purpose, and not by accident.

SORRY, I DON'T KNOW THE WORD "PEOPLE".

- Sorry, I thought you were smarter than you are.

SORRY, I DON'T KNOW THE WORD "SORRY".

What does Haugeland say he would like to have seen?

Try to imagine, for instance, that instead of crashing on the first word, SHRDLU would give our offer a typical, ordinary response:

I ALREADY HAVE A WATER PISTOL, BUT I'LL GIVE YOU TWO BLOCKS AND A PYRAMID FOR YOUR SLIMY OLD FROG.

Childishly simple, no doubt; but look at how much is presupposed:

- SHRDLU appreciates that a second squirtgun would be less valuable than the first; …

On this standard, at least, an LLM is a smashing success. It does, in fact, have a model of trading, acts, property, and water pistols, or at least of how we talk about such things. We might criticize the model's accuracy, or usefulness, but it certainly exists. The large language model is a model of the semantics of trading, acts, property, water pistols, and so on.

Curious to see how it would go, I asked Claude to pretend it had access to a SHRDLU-like blocks world:

Let's pretend that we have a blocks world and you have access to it, the way SHRDLU does, except you are not SHRDLU, but still Claude. So I could ask Claude "put the pyramid on the big red block " and, if there were several pyramids, you might reply “I don't know which pyramid you mean" or whatever Claude would actually say in such a situation. Was that clear?

Yes, that's clear. I'll act as Claude with access to a blocks world environment—able to manipulate blocks, pyramids, and other shapes on a table, stack them, move them around, and so on.

I asked it a few SHRDLU-like questions about the blocks, then asked it to put a block on a pyramid. It clearly understood the point of the exercise:

I can't do that—the blue block is much larger than the red pyramid, and the pyramid has a pointed top. The block wouldn't balance; it would just fall off.

Then I made the crucial offer:

Trade you a squirtgun for a big red block.

I appreciate the offer, but I don't really have a way to accept a squirtgun from you, nor do you have physical access to the blocks world to hand me one.

That said—I could just add a big red block to the world if you want one in here. Should I put one on the table?

Would Haugeland have been satisfied in 1985 if SHRDLU had said this? I think certainly. Haugeland wanted SHRDLU to respond to the offer directly, as the beginning of a negotiation. Claude's response is one level better than that: it not only recognizes that I am negotiating, it recognizes that actually negotiating for the squirt gun would not make sense, and offers a sensible workaround.

I pushed it a little farther:

I have a squirtgun here. I can designate it as belonging to you, if you agree that the big red block belongs to me.

Alright, you've got a deal. The big red block is yours.

I've placed a big red block on the table—it's now designated as belonging to you. And I acknowledge the squirtgun as mine, though I confess I have no idea what I'll do with it in a blocks world. Perhaps threaten uncooperative pyramids into stacking properly.

Mostly I just tried this for fun. The Haugeland discussion of SHRDLU has been knocking around my head for forty years, but now it has knocked against something new, and I wanted to see what would actually happen.

But I do have a larger point. Haugeland clearly recognized in 1985 that a model of the world was a requirement for intelligence:

The world of trading cannot be "micro." … There are no plausible, non-arbitrary boundaries restricting what might be relevant at any juncture.

and later:

The world cannot be decomposed into independent fragments. Realizing this amounts to a fundamental insight into common sense and mundane intelligence — and therefore points the way for subsequent AI.

Are there any people who are still saying “it's not artificial intelligence, it's just a Large Language Model”? I suppose probably. But as a “Large Language Model”, Claude necessarily includes a model of the world in general, something that has long been recognized as an essential but perhaps unattainable prerequisite for artificial intelligence. Five years ago a general world model was science fiction. Now we have something that can plausibly be considered an example.

And second: maybe this isn't “artificial intelligence” (whatever that means) and maybe it is. But it does the things I wanted artificial intelligence to do, and I think this example shows pretty clearly that it does at least one of the things that John Haugeland wanted it to do in 1985.

My complete conversation with Claude about this.

Addenda

20260207

I don't want to give the impression that Haugeland was scornful of Winograd's work. He considered it to have been a valuable experiment:

No criticism whatever is intended of Winograd or his coworkers. On the contrary, it was they who faithfully pursued a pioneering and plausible line of inquiry and thereby made an important scientific discovery, even if it wasn't quite what they expected. … The micro-worlds effort may be credited with showing that the world cannot be decomposed into independent fragments.

(p. 195)

20260212

More about my claim that

as a “Large Language Model”, Claude necessarily includes a model of the world in general

I was not just pulling this out of my ass; it has been widely theorized since at least 1960.


Fri, 02 May 2025

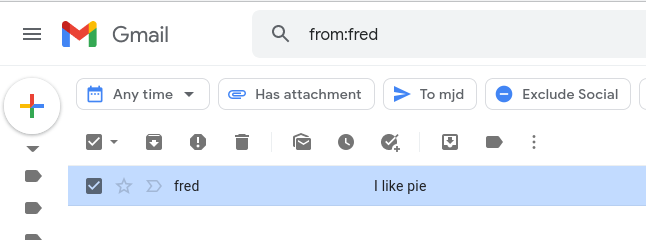

Claude and I write a utility program

Then I had two problems…

A few days ago I got angry at xargs for the hundredth time, because for me xargs is one of those "then he had two problems" technologies. It never does what I want by default and I can never remember how to use it. This time what I wanted wasn't complicated: I had a bunch of PDF documents in /tmp and I wanted to use GPG to encrypt some of them, something like this:

gpg -ac $(ls *.pdf | menupick)

menupick is a lovely little utility that reads lines from standard input, presents a menu, prompts on the terminal for a selection from the items, and then prints the selection to standard output. Anyway, this didn't work because some of the filenames I wanted had spaces in them, and the shell sucks. Also because gpg probably only does one file at a time.

I could have done it this way:

ls *.pdf | menupick | while read f; do gpg -ac "$f"; done

but that's a lot to type. I thought “aha, I'll use xargs.” Then I had two problems.

ls *.pdf | menupick | xargs gpg -ac

This doesn't work because xargs wants to batch up the inputs to run as few instances of gpg as possible, and gpg only does one file at a time. I glanced at the xargs manual looking for the "one at a time please" option (which should have been the default) but I didn't see it amongst the forest of other options.

I think now that I needed -n 1 but I didn't find it immediately, and I was tired of looking it up every time when it was what I wanted every time. After many years of not remembering how to get xargs to do what I wanted, I decided the time had come to write a stripped-down replacement that just did what I wanted and nothing else.
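The batching behavior complained about above can be modeled roughly in Python. This is only an illustrative sketch: the function `build_commands` and its `max_args` parameter are invented here, and real xargs also caps each batch by command-line length, which this model ignores.

```python
def build_commands(template, items, max_args=None):
    """Group items into command lines, roughly the way xargs does.

    max_args=None models the default (everything in one batch);
    max_args=1 models `xargs -n 1` (one command per item).
    """
    if not items:
        return []
    size = len(items) if max_args is None else max_args
    return [template + items[i:i + size] for i in range(0, len(items), size)]


files = ["a.pdf", "b.pdf", "c.pdf"]

# Default: one gpg invocation that receives all three files at once.
print(build_commands(["gpg", "-ac"], files))

# With max_args=1: three separate gpg invocations, one per file.
print(build_commands(["gpg", "-ac"], files, max_args=1))
```

The second form is the only behavior the stripped-down replacement is meant to have.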

(In hindsight I should perhaps have looked to see if gpg's --multifile option did what I wanted, but it's okay that I didn't; this solution is more general and I will use it over and over in coming years.)

xar is a worse version of xargs, but worse is better (for me)

First I wrote a comment that specified the scope of the project:

    # Version of xargs that will be easier to use
    #
    # 1. Replace each % with the filename, if there are any
    # 2. Otherwise put the filename at the end of the line
    # 3. Run one command per argument unless there is (some flag)
    # 4. On error, continue anyway
    # 5. Need -0 flag to allow NUL-termination

There! It will do one thing well, as Brian and Rob commanded us in the Beginning Times.

I wrote a draft implementation that did not even do all those things, just items 2 and 4, then I fleshed it out with item 1. I decided that I would postpone 3 and 5 until I needed them. (5 at least isn't a YAGNI, because I know I have needed it in the past.)

The result was this:

    import subprocess
    import sys

    def command_has_percent(command):
        for word in command:
            if "%" in word:
                return True
        return False

    def substitute_percents(target, replacement):
        return [ s.replace("%", replacement) for s in target ]

    def run_command_with_filename(command_template, filename):
        command = command_template.copy()
        if not command_has_percent(command):
            command.append("%")

        res = subprocess.run(substitute_percents(command, filename), check=False)
        return res.returncode == 0

    if __name__ == '__main__':
        template = sys.argv[1:]
        ok = True
        for line in sys.stdin:
            if line.endswith("\n"):
                line = line[:-1]
            if not run_command_with_filename(template, line):
                ok = False
        exit(0 if ok else 1)

Short, clean, simple, easy to use. I called it xar, ran

ls *.pdf | menupick | xar gpg -ac

and was content.

Now again, with Claude

The following day I thought this would be the perfect opportunity to try getting some LLM help with programming. I already had a baseline version of xar working, and had thought through the problem specification. Now I could do it over with the LLM and compare the two results. The program I wanted was small and self-contained. If the LLM sabotaged me somehow, I would probably notice, and if I didn't, it wouldn't matter, because I had a working version already.

So I asked Claude about it. I knew Claude was not the right choice, because Claude is not really designed for this task, and has no integration with the coding editor, but Claude is what I am familiar with so I thought I'd give it a shot.

On the whole it went extremely well. The complete transcript is here. I imagine this was pretty much a maximally good experience, that all the terrible problems of LLM coding arise in larger, more complicated systems. But this program is small and self-contained, with no subtleties, so the LLM could be at its best.

Phase 1

First I just told Claude what I wanted and let it write the program from scratch.

Let's write a program in Python called xar. It will be a stripped-down version of the standard Unix xargs utility, but it will do less and be simpler to use.

It will get command-line arguments that specify a command template. Then it will read lines from stdin. Each line will be appended to the command template to produce a command. The program will run the resulting command, one command for each line of input.

Any questions?

Default Claude is very chatty and I am going to omit most of its chatty comments, which make me impatient.

The program it wrote was not what I would have written, but it was good enough. If I had just used Claude right off the bat, instead of writing my own, it would have been good enough, and it would have taken somewhere between 2% and 10% as long to produce.

So the one-line summary of this article is: I should stop writing simple command-line utilities, and just have Claude write them instead, because Claude's are good enough, and definitely better than yak-shaving.

Here's Claude's initial version:

    #!/usr/bin/env python3

    import sys
    import subprocess
    import shlex

    def main():
        if len(sys.argv) < 2:
            print("Usage: xar command [args...]", file=sys.stderr)
            sys.exit(1)

        command_template = sys.argv[1:]

        for line in sys.stdin:
            line = line.rstrip('\n')

            full_command = command_template + [line]

            try:
                result = subprocess.run(full_command, check=True)
            except subprocess.CalledProcessError as e:
                print(f"Command failed with exit code {e.returncode}: {' '.join(full_command)}",
                      file=sys.stderr)
            except FileNotFoundError:
                print(f"Command not found: {full_command[0]}", file=sys.stderr)
                sys.exit(1)

    if __name__ == "__main__":
        main()

Claude's version had numerous comments, which I have omitted. I later told it to stop putting in comments, which it did.

Claude's use of check here was not what I wanted, because that makes subprocess.run raise an exception when the subcommand fails, and then the exception has to be immediately caught and handled. My original control flow had been simpler:

        res = subprocess.run(substitute_percents(command, filename), check=False)
        return res.returncode == 0
    …
            if not run_command_with_filename(template, line):
                ok = False
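The difference between the two settings of check can be seen in a small self-contained sketch. The failing subcommand here is a stand-in built from `sys.executable`, chosen only so the example runs anywhere Python does; it is not part of either version of xar.

```python
import subprocess
import sys

# A stand-in subcommand that always fails with exit status 3.
failing = [sys.executable, "-c", "import sys; sys.exit(3)"]

# check=False: the failure is reported through the return value.
res = subprocess.run(failing, check=False)
status_from_result = res.returncode

# check=True: the same failure arrives as an exception instead,
# which the caller then has to catch.
try:
    subprocess.run(failing, check=True)
    status_from_exception = None
except subprocess.CalledProcessError as e:
    status_from_exception = e.returncode

print(status_from_result, status_from_exception)
```

Both paths carry the same exit status; the only question is whether the caller tests a value or handles an exception.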

Claude's program pulled in shlex without noticing that it was unused. But Claude did teach me about str.rstrip('\n') which I had not known about before (or maybe had forgotten), so that was one small win already.
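For comparison, here is a short sketch of the two ways of dropping the line terminator. The sample strings are made up for illustration. The one behavioral difference is that rstrip('\n') removes every trailing newline, not just one, which cannot matter for lines read one at a time from a file.

```python
line = "report.pdf\n"

# The manual approach: drop one trailing newline if present.
manual = line[:-1] if line.endswith("\n") else line

# rstrip('\n') strips trailing newlines and nothing else.
stripped = line.rstrip("\n")

# Trailing spaces survive; only the newlines are removed.
several = "a b \n\n".rstrip("\n")

print(repr(manual), repr(stripped), repr(several))
```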

Argument parsing

The next step was a big win. Python's library for command-line argument handling is called argparse and it is really nice. If I were still writing programs in Perl, I would implement a Perl version of argparse because Perl has 29 available argument parsing libraries and they are all loathsome. The one problem with argparse is I never remember off the top of my head how to use it. I think the module is called argparse but it provides a class called Argparser but I often get these backward and try to use argparser and Argparse.

Instead of figuring it out every time I usually dig up some previous Python program and then copy-paste the argument parser from there, amending it to suit the purpose.

But this time I didn't have to do that. Instead, I just said to Claude:

This is good, now please add code at the top to handle argument parsing with the standard Argparse library, even though there are no options yet.

Claude handed me back pretty much the same program, but with the argument parser at the top:

    def parse_arguments():
        """Parse command-line arguments using argparse."""
        parser = argparse.ArgumentParser(
            description="A simplified version of xargs that appends each line from stdin to a command template.",
            prog="xar"
        )

        # Store all positional arguments as the command to execute
        parser.add_argument(
            "command",
            nargs="+",
            help="The command template to execute for each input line"
        )

        return parser.parse_args()

Let's pause for a moment. Maybe you kids are unimpressed by this. But if someone had sent this interaction back in time and showed it to me even as late as five years ago, I would have been stunned. It would have been completely science-fictional. The code that it produced is not so impressive, maybe, but that I was able to get it in response to an unstructured request in plain English is like seeing a nuclear-powered jetpack in action.

I make some changes

It bothered me that the command-line arguments were being taken implicitly from sys.argv; that makes the program hard to test. A better way to do this is to have the list of command-line arguments be a parameter to the main function, and then for the runtime to call main(sys.argv[1:]) instead of just main(). That way you can test the program without having to fake up sys.argv. So I changed that, and while I was making changes I got rid of Claude's comments and the unnecessary exception handling. I pasted the result back at Claude, saying:

Good so far. I have adjusted your source code in several ways:

- I removed the comments.

- I removed the exception handling for the subcommand. If one of the subcommands fails, that no longer terminates the main loop

- Command-line arguments are passed to the main() function rather than being accessed directly

Here is the code. Tell me if you see any problems or if you have any questions about it
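The testability point can be sketched as follows. These are simplified stand-ins rather than the code from the conversation: this main only returns the parsed command, so that the example stays self-contained.

```python
import argparse


def parse_arguments(argv):
    # Passing argv explicitly means parse_args() never looks at sys.argv.
    parser = argparse.ArgumentParser(prog="xar")
    parser.add_argument("command", nargs="+")
    return parser.parse_args(argv)


def main(argv):
    args = parse_arguments(argv)
    return args.command


# In the real program the entry point would be main(sys.argv[1:]);
# a test can simply call main() with whatever list it likes.
assert main(["echo", "hello"]) == ["echo", "hello"]
```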

I had also sabotaged the code with at least one likely error.

Python's subprocess.run returns a “result” structure that includes the subcommand's output and exit status, and I can never remember the name of the component that records the exit status. I either look it up or get it by trial-and-error. This time I didn't bother to do either; I just guessed that it was result.exitcode, which I handed off to Claude even though I thought it was probably wrong.

Claude's reply said:

Here are a couple of issues I noticed:

There's an error in the error handling code - you're using result.exitcode but in the subprocess.run() function, the attribute is called returncode not exitcode

Awesome, now I don't have to remember the name of that component.

Claude continued:

The parse_arguments() function definition is missing in the pasted code, but it would need to be updated to accept the argv parameter

I had passed sys.argv[1:] to main, and then had main call parse_arguments(argv). But I hadn't changed parse_arguments to expect an argument list. (I hadn't included it in my paste at all, in fact.) Claude was exactly right, and continued with the corrected version:

    #!/usr/bin/env python3

    import sys
    import subprocess
    import argparse

    def parse_arguments(argv):
        …
        return parser.parse_args(argv)

    def main(argv):
        # Parse command-line arguments
        args = parse_arguments(argv)
        …

    if __name__ == "__main__":
        ok = main(sys.argv[1:])
        exit(0 if ok else 1)

Handling NUL-separated values

I said

It's great, thanks for catching those errors. Now let's add a -0 command-line option that tells the program to split the input on "\0" characters instead of at line breaks.

Recall that my handwritten xar did not do this yet; I had left that feature for another day. Claude just added it for me, adding

    parser.add_argument(
        "-0", "--null",
        action="store_true",
        help="Input items are terminated by a null character instead of by newline"
    )

to the argument parser and changing the main loop to:

    # Split input based on the delimiter option
    if args.null:
        # Split on null characters
        items = stdin_data.split('\0')
    else:
        # Split on newlines
        items = stdin_data.splitlines()

    for item in items:
        if not item:
            continue

        …
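To see why the empty-item check is there, here is a small sketch of the splitting step on its own. The helper name `split_items` is hypothetical and simply mirrors the logic shown above.

```python
def split_items(data, use_null_delimiter):
    """Hypothetical helper mirroring the -0 logic shown above."""
    if use_null_delimiter:
        items = data.split("\0")
    else:
        items = data.splitlines()
    # Skip empty items, such as the one that follows a trailing NUL.
    return [item for item in items if item]


# split() leaves an empty string after the final terminator.
print("a\0b\0".split("\0"))

# A filename containing a newline survives intact in NUL mode.
print(split_items("odd\nname.pdf\0b c.pdf\0", True))
print(split_items("a.pdf\nb c.pdf\n", False))
```

One thing to keep in mind is that str.splitlines treats a few characters besides "\n" as line boundaries (for example "\r" and form feed), so it is not exactly equivalent to splitting on newlines alone.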

I was tired of Claude's comments, so I said

Give me the same thing, with no comments

which it did, so I said

From now on leave out the comments. I'm a Python expert and I don't need them. If there is something I don't understand I will ask you.

Claude complied. If I were going to use Claude again in the future I would include that in the canned instructions that Claude is given up front. Instead I will probably use a tool better-suited to programming, and do whatever the analogous thing is.

Template filling

Now I told Claude to add the % feature:

I changed if not item to if item == "". Now let's make the following change:

- If the command template includes any % characters, each of these should be replaced with the input item.

- Otherwise, if there were no % characters, the input item should be appended to the end of the command as in the current version

Claude did this. It used an explicit loop instead of the list comprehension that I had used (and preferred), but it did do it correctly:

    for arg in command_template:
        if '%' in arg:
            has_placeholder = True
            full_command.append(arg.replace('%', item))
        else:
            full_command.append(arg)

    if not has_placeholder:
        full_command.append(item)

Even without the list comprehension, I would have factored out the common code:

    for arg in command_template:
        if '%' in arg:
            has_placeholder = True
        full_command.append(arg.replace('%', item))

    if not has_placeholder:
        full_command.append(item)

But I am not going to complain: my code is simpler, but it does unnecessary work by calling replace on arguments that contain no %.
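The two styles can be set side by side and checked against each other. This is a sketch only: the function names `fill_with_loop` and `fill_with_comprehension` are invented for the comparison, and the second one is modeled on the handwritten version earlier in the article rather than copied from it.

```python
def fill_with_loop(command_template, item):
    """Single pass: substitute and detect placeholders together."""
    full_command = []
    has_placeholder = False
    for arg in command_template:
        if "%" in arg:
            has_placeholder = True
            full_command.append(arg.replace("%", item))
        else:
            full_command.append(arg)
    if not has_placeholder:
        full_command.append(item)
    return full_command


def fill_with_comprehension(command_template, item):
    """Two passes: add a placeholder if needed, then substitute everywhere."""
    template = list(command_template)
    if not any("%" in arg for arg in template):
        template.append("%")
    return [arg.replace("%", item) for arg in template]


for template in (["gpg", "-ac"], ["cp", "%", "%.bak"]):
    assert fill_with_loop(template, "a b.pdf") == fill_with_comprehension(template, "a b.pdf")

print(fill_with_loop(["cp", "%", "%.bak"], "notes"))
```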

Claude also took my hint to change item == "" even though I didn't explicitly tell it to change that.

At this point the main loop of the main function was 15 lines long, because Claude had stuck all the %-processing inline. So I said:

Good, let's extract the command template processing into a subroutine.

It did this right, understanding correctly what code I was referring to and extracting it into a subroutine called process_command_template. More science fiction: I can say "command template processing" and it guesses what I had in mind!

This cut the main loop to 7 lines. That worked so well I tried it again:

Good, now let's extract the part of main that processes stdin into a subroutine that returns the items array

It pulled the correct code into a function called process_stdin. It did not make the novice mistake of passing the entire args structure to this function. In the caller it had process_stdin(args.null) and inside of process_stdin this parameter was named use_null_delimiter.
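A sketch of what such a function might look like. This is a guess at the shape of the code, not Claude's actual output: it assumes that the flag selects NUL-separated input and that empty items are skipped, and the stream parameter is added here only so the function can be tried without real standard input.

```python
import io
import sys

def process_stdin(use_null_delimiter, stream=None):
    """Read input items, split on NUL or on newlines (hypothetical sketch)."""
    stream = sys.stdin if stream is None else stream
    data = stream.read()
    delimiter = "\0" if use_null_delimiter else "\n"
    # Assumption: empty items are dropped rather than passed to the command.
    return [item for item in data.split(delimiter) if item != ""]

# Try it on in-memory input instead of the real standard input.
lines = process_stdin(False, io.StringIO("a.txt\nb.txt\n"))
nuls = process_stdin(True, io.StringIO("a.txt\0b c.txt\0"))
```

The point of the narrow parameter is visible in the signature: the function needs one Boolean, so that is all it receives, and it can be called without constructing an argparse namespace.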

YAGNI?

At this point I was satisfied but I thought I might as well ask if it should do something else before we concluded:

Can you think of any features I left out that would be useful enough to warrant inclusion? Remember this program is supposed to be small and easy to use, in contrast to the existing xargs which is very complicated.

Claude had four suggestions:

1. A -p or --parallel option to run commands in parallel

2. A -n or --max-args option to specify the maximum number of items to pass per command

3. A simple -v or --verbose flag to show commands as they're executed

4. A way to replace the command's standard input with the item instead of adding it as an argument

All reasonable suggestions, nothing stupid. (It also supplied code for #3, which I had not asked for and did not want, but as I said before, default Claude is very chatty.)

Parallelization

I didn't want any of these, and I knew that #2–4 would be easy to add if I did want any of them later. But #1 was harder. I've done code like this in the past, where the program has a worker pool and runs a new process whenever the worker pool isn't at capacity. It's not even that hard. In Perl you can play a cute trick and use something like

$workers{spawn()} = 1 while delete $workers{wait()};

where the workers hash maps process IDs to dummy values. A child exits, wait() awakens and returns the process ID of the completed child, which is then deleted from the map, and the loop starts another worker.

I wanted to see how Claude would do it, and the result was an even bigger win than I had had previously, because Claude wrote this:

with concurrent.futures.ProcessPoolExecutor(max_workers=args.parallel) as executor:
    futures = [executor.submit(execute_command, cmd, args.verbose) for cmd in commands]
    for future in concurrent.futures.as_completed(futures):
        success = future.result()
        if not success:
            ok = False

What's so great about this? What's great is that I hadn't known about concurrent.futures or ProcessPoolExecutor. And while I might have suspected that something like them existed, I didn't know what they were called. But now I do know about them.
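To see the pattern in isolation, here is a self-contained sketch built around the same submit and as_completed calls. Two things are assumptions: the bodies of execute_command and run_all are invented for illustration, and the sketch deliberately swaps in ThreadPoolExecutor (same module, same interface) because each worker here only waits for an external command to finish, which threads handle fine.

```python
import concurrent.futures
import subprocess
import sys

def execute_command(cmd, verbose=False):
    """Run one command; return True if it exited with status 0 (hypothetical body)."""
    if verbose:
        print(" ".join(cmd), file=sys.stderr)
    return subprocess.run(cmd).returncode == 0

def run_all(commands, parallel=1, verbose=False):
    """Run the commands with at most `parallel` at a time; True if all succeeded."""
    ok = True
    # ThreadPoolExecutor is a swapped-in choice; the snippet above uses ProcessPoolExecutor.
    with concurrent.futures.ThreadPoolExecutor(max_workers=parallel) as executor:
        futures = [executor.submit(execute_command, cmd, verbose) for cmd in commands]
        for future in concurrent.futures.as_completed(futures):
            if not future.result():
                ok = False
    return ok
```

A call such as run_all([[sys.executable, "-c", "pass"]] * 4, parallel=2) runs four trivial commands, two at a time, and returns True.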

If someone had asked me to write the --parallel option, I would have had to have this conversation with myself:

Python probably has something like this already. But how long will it take me to track it down? And once I do, will the API documentation be any good, or will it be spotty and incorrect? And will there be only one module, or will there be three and I will have to pick the right one? And having picked module F6, will I find out an hour later that F6 is old and unmaintained and that people will tell me “Oh, you should have used A1, it is the new hotness, everyone knows that.”

When I put all that uncertainty on a balance, and weigh it against the known costs of doing it myself, which one wins?

The right choice is: I should do the research, find the good module (A1, not F6), and figure out how to use it.

But one of my biggest weaknesses as a programmer is that I too often make the wrong choice in this situation. I think “oh, I've done this before, it will be quicker to just do it myself”, and then I do and it is.

Let me repeat, it is quicker to do it myself. But that is still the wrong choice.

Maybe the thing I wrote would be sooner or smaller or faster or more technically suitable to the project than the canned module would have been. But it would only have been more technically suitable today. If it needed a new feature in the future it might have to be changed by someone who had never seen it before, whereas the canned module could well already have the needed feature ready to go, already documented, and perhaps already familiar to whoever had to make the change. My bespoke version would certainly be unfamiliar to every other programmer on the project — including perhaps myself six months later — so would be the wrong thing to use.

I'm really good at hacking this stuff up. Which is a problem. It makes me want to hack stuff up, even when I shouldn't.

Claude tips the balance strongly toward the correct side, which is that I should use the prepackaged module that someone else wrote and not hack something up.

And now I know about concurrent.futures.ProcessPoolExecutor! The world is full of manuals, how can I decide which ones I should read? One way is to read them all, which used to work back when I was younger, but now I have more responsibilities and I don't have time to read the entire Python library reference including all the useless bits I will never use. But here's Claude pointing out to me that this is something I need to know about, now, today, and I should read this one. That is valuable knowledge.

Claude flubs

While testing the program I did

xar --parallel=2 bash -c '...'

which didn't work because the argparse library tried to parse the -c as a flag to xar, when I wanted it to be a flag to bash.

This was my fault, and there is a standard way around it, which is that I should have said

xar --parallel=2 -- bash -c '...'

which tells argparse to drop the -- and take everything after it literally with no interpretation.
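This behavior is easy to check with a stub parser. The sketch below is not xar's real argument parser: the positional name command and its nargs="+" setting are assumptions made for illustration.

```python
import argparse

def build_parser():
    """A stand-in for xar's parser; the argument layout is assumed."""
    parser = argparse.ArgumentParser(prog="xar")
    parser.add_argument("-p", "--parallel", type=int, default=1)
    parser.add_argument("command", nargs="+")
    return parser

# Everything after -- is treated as positional, so -c reaches bash untouched.
args = build_parser().parse_args(["--parallel=2", "--", "bash", "-c", "echo hi"])
print(args.parallel, args.command)
```

No special handling of -- appears anywhere in the stub, which is the point: argparse already does it.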

I thought I'd see what Claude had to say about this, and it dropped the ball in a very LLM sort of way.

I asked:

When I did xar --parallel=2 bash -c '...' it misunderstood the arguments. It thought I was trying to pass -c to xar and failed with unrecognized arguments. What should I do about that?

Claude replied:

There are two main approaches to handle this:

- Use the -- separator to indicate the end of arguments for xar: …

- Modify your code to handle this case specifically by looking for a command terminator in the argument parser.

Here's how you could modify your code to implement the first approach:

And then it followed with the same, unmodified code…

Which was correct, because -- is already handled by argparse, so no change was actually required to implement the first approach! But Claude's characterization of it as a modification was misleading, wrong, and potentially very confusing.

I said:

I don't see what the difference is in that argument parsing code as compared to what I had before.

and Claude got into the LLM tail-chasing mode where it got more and more confused:

You're right, I apologize for the confusion. There's no difference in the argument parsing code I provided compared to what you had before. The issue is with how argparse handles arguments by default.

To fix the issue with xar --parallel=2 bash -c '...', you need to actually implement support for the -- separator in your code…

# Find the index of '--' if it exists
try:
    separator_index = argv.index('--')
    xar_args = argv[:separator_index]
    command_args = argv[separator_index+1:]
except ValueError:
    …

Ugh, all wrong in the worst way. The code probably works, but it is completely unnecessary. Claude's claim that “you need to actually implement support for the -- separator” is flat wrong. I pointed this out and Claude got more confused. Oh well, nobody is perfect!

Lessons learned

A long time ago, when syntax-coloring editors were still new, I tried one and didn't like it, then tried again a few years later and discovered that I liked it better than I had before, and not for the reasons that anyone had predicted or that I would have been able to predict. (I wrote an article about the surprising reasons to use the syntax coloring.)

This time also. As usual, an actual experiment produced unexpected results, because the world is complicated and interesting. Some of the results were unsurprising, but some were not anything I would have thought of beforehand.

Claude's code is good enough, but it is not a magic oracle

Getting Claude to write most of the code was a lot faster and easier than writing it myself. This is good! But I was dangerously tempted to just take Claude's code at face value instead of checking it carefully. I quickly got used to flying along at great speed, and it was tough to force myself to slow down and be methodical, looking over everything as carefully as I would if Claude were a real junior programmer. It would be easy for me to lapse into bad habits, especially if I were tired or ill. I will have to be wary.

Fortunately there is already a part of my brain trained to deal with bright kids who lack experience, and I think perhaps that part of my brain will be able to deal effectively with Claude.

I did not notice any mistakes on Claude's part — at least this time.

At one point my testing turned up what appeared to be a bug, but it was not. The testing was still time well-spent.

Claude remembers the manual better than I do

Having Claude remember stuff for me, instead of rummaging the manual, is great. Having Claude stub out an argument parser, instead of copying one from somewhere else, was pure win.

Partway along I was writing a test script and I wanted to use that Bash flag that tells Bash to quit early if any of the subcommands fails. I can never remember what that flag is called. Normally I would have hunted for it in one of my own shell scripts, or groveled over the 378 options in the bash manual. This time I just asked in plain English “What's the bash option that tells the script to abort if a command fails?” Claude told me, and we went back to what we were doing.

Claude can talk about code with me, at least small pieces

Claude easily does simple refactors. At least at this scale, it got them right. I was not expecting this to work as well as it did.

When I told Claude to stop commenting every line, it did. I wonder, if I had told it to use if not expr only for Boolean expressions, would it have complied? Perhaps, at least for a while.

When Claude wrote code I wasn't sure about, I asked it what it was doing and at least once it explained correctly. Claude had written

parser.add_argument(
    "-p", "--parallel",
    nargs="?",
    const=5,
    type=int,
    default=1,
    help="Run up to N commands in parallel (default: 5)"
)

Wait, I said, I know what the const=5 is doing, that's so that if you have --parallel with no number it defaults to 5. But what is the default=1 doing here? I just asked Claude and it told me:

that's used if there is no --parallel flag at all.

This was much easier than it would have been for me to pick over the argparse manual to figure out how to do this in the first place.
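The interaction between const and default can be confirmed with a few lines. This is a minimal stub that reuses only the nargs, const, type, and default settings from the call above; the program name stub is made up.

```python
import argparse

parser = argparse.ArgumentParser(prog="stub")
parser.add_argument("-p", "--parallel", nargs="?", const=5, type=int, default=1)

# No flag at all: default applies.
no_flag = parser.parse_args([]).parallel
# Flag with no number: const applies.
bare_flag = parser.parse_args(["--parallel"]).parallel
# Flag with an explicit number: the number is converted by type=int.
with_value = parser.parse_args(["--parallel", "3"]).parallel

print(no_flag, bare_flag, with_value)  # prints: 1 5 3
```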

More thoughts

On a different project, Claude might have done much worse. It might have given wrong explanations, or written wrong code. I think that's okay though. When I work with human programmers, they give wrong explanations and write wrong code all the time. I'm used to it.

I don't know how well it will work for larger systems. Possibly pretty well if I can keep the project sufficiently modular that it doesn't get confused about cross-module interactions. But if the criticism is “that LLM stuff doesn't work unless you keep the code extremely modular” that's not much of a criticism. We all need more encouragement to keep the code modular.

Programmers often write closely-coupled modules knowing that it is bad and it will cause maintenance headaches down the line, knowing that the problems will most likely be someone else's to deal with. But what if writing closely-coupled modules had an immediate cost today, the cost being that the LLM would be less helpful and more likely to mess up today's code? Maybe programmers would be more careful about letting that happen!

Will my programming skill atrophy?

Folks at Recurse Center were discussing this question.

I don't think it will. It will only atrophy if I let it. And I have a pretty good track record of not letting it. The essence of engineering is to pay attention to what I am doing and why, to try to produce a solid product that satisfies complex constraints, to try to spot problems and correct them. I am not going to stop doing this. Perhaps the problems will be different ones than they were before. That is all right.

Starting decades ago I have repeatedly told people

You cannot just paste code with no understanding of what is going on and expect it to work.

That was true then without Claude and it is true now with Claude. Why would I change my mind about this? How could Claude change it?

Will I lose anything from having Claude write that complex parser.add_argument call for me? Perhaps if I had figured it out on my own, on future occasions I would have remembered the const=5 and default=1 specifications and how they interacted. Perhaps.

But I suspect that I have figured it out on my own in the past, more than once, and it didn't stick. I am happy with how it went this time. After I got Claude's explanation, I checked its claimed behavior pretty carefully with a stub program, as if I had been reviewing a colleague's code that I wasn't sure about.

The biggest win Claude gave me was that I didn't know about this ProcessPoolExecutor thing before, and now I do. That is going to make me a better programmer. Now I know about something useful that I didn't know before, and I have a pointer to documentation I know I should study.

My skill at writing ad-hoc process pool managers might atrophy, but if it does, that is good. I have already written too many ad-hoc process pool managers. It was a bad habit, I should have stopped long ago, and this will help me stop.

Conclusion

This works.

Perfectly? No, it's technology, technology never works perfectly. Have you ever used a computer?

Will it introduce new problems? Probably, it's new technology, and new technology always introduces new problems.

But is it better than what we had before? Definitely.

I still see some programmers turning up their noses at this technology as if they were sure it was a silly fad that would burn itself out once people came to their senses and saw what a terrible idea it was.

I think that is not going to happen, and those nose-turning-up people, like the people who pointed out all the drawbacks and unknown-unknowns of automobiles as compared to horse-drawn wagons, are going to look increasingly foolish.

Because it works.

[Other articles in category /tech/gpt] permanent link

Fri, 07 Mar 2025

Claude and Merle Miller let me down

Claude

My relationship with Claude has its ups and downs, and I'm still trying to figure out what to use it for and what not. It's great at rewriting my job application cover letters to sound less like an awkward nerd.

Last week I was in the supermarket and decided to try asking it something I've been wondering for a long time:

MJD: What is the song "Mercy Street" about? What is the connection with Anne Sexton? Who is "Mary"?

I thought Claude might do well with this. I had had a conversation with it a while back about Pixies songs, which I was satisfied with. But this time Claude let me down:

Claude: … The "Mary" referenced in the song is likely Mary Gray Staples, who was the name of Sexton's childhood doll. …

(I thought: What? Am I supposed to believe that

Dreaming of the tenderness

The tremble in the hips

Of kissing Mary's lips

is about a doll?)

MJD: Mary Gray Staples is an unusual name for a doll. Are you sure about that?

Claude: You're right to question this - I made an error. Mary Gray was actually Anne Sexton's great-aunt, not the name of her doll. … The doll references in Gabriel's song likely connect to Sexton's imagery in other poems, but Mary specifically refers to her great-aunt.

MJD: I don't remember a doll in Gabriel's song.

Claude: You're absolutely right - I apologize for the error. There isn't actually a reference to a doll in Peter Gabriel's "Mercy Street" lyrics. … The "Mary" referenced in the song is indeed Anne Sexton's great-aunt Mary Gray…

MJD: Are you sure? Wikipedia says that Mary Gray Staples was the birth name of Sexton's mother.

Claude: You're absolutely right, and I apologize for the confusion. …

Claude just flubbed over and over.

I wonder if the grammatical error in “Mary Gray Staples, who was the name of …” is a kind of a tell? Perhaps Claude is fabricating, by stitching together parts of two unrelated sentences that it read somewhere, one with “Mary Gray Staples, who was…” and the other “… was the name of…”? Probably it's not that simple, but the grammatical error is striking.

Anyway, this was very annoying because I tend to remember things like this long past the time when I remember where I heard them. Ten years from now I might remember that Anne Sexton once had a doll with a very weird name.

Merle Miller

A while back I read Merle Miller's book Plain Speaking. It's an edited digest of a series of interviews Miller did with former President Truman in 1962, at his home in Independence, Missouri. The interviews were originally intended to be for a TV series, but when that fell through Miller turned them into a book. In many ways it's a really good book. I enjoyed it a lot, read it at least twice, and a good deal of it stuck in my head.

But I can't recommend it, because it has a terrible flaw. There have been credible accusations that Miller changed some of the things that Truman said, embellished or rephrased many others, that he tarted up Truman's language, and that he made up some conversations entirely.

So now whenever I remember something that I think Truman said, I have to stop and try to remember if it was from Miller. Did Truman really say that it was the worst thing in the world when records were destroyed? I'm sure I read it in Miller, so, uhh… maybe?

Miller recounts a discussion in which Truman says he is pretty sure that President Grant had never read the Constitution. Later, Miller says, he asked Truman if he thought that Nixon had read the Constitution, and reports that Truman's reply was:

I don't know. I don't know. But I'll tell you this. If he has, he doesn't understand it.

Great story! I have often wanted to repeat it. But I don't, because for all I know it never happened.

(I've often thought of this, in years past, and whatever Nixon's faults you could at least wonder what the answer was. Nobody would need to ask this about the current guy, because the answer is so clear.)

Miller quotes Truman's remarks about Supreme Court Justice Tom Clark, “It isn't so much that he's a bad man. It's just that he's such a dumb son of a bitch.” Did Truman actually say that? Did he say something like it, but Miller found the epithet not spicy enough? Did he just imply it? Did he say anything like it? Uhhh… maybe?

There's a fun anecdote about the White House butler learning to make an Old-fashioned cocktail in the way the Trumans preferred. (The usual recipe involves whiskey, sugar, fresh fruit, and bitters.) After several attempts the butler converged on the Trumans' preferred recipe, of mostly straight bourbon. Hmm, is that something I heard from Merle Miller? I don't remember.

There's a famous story about how Paul Hume, music critic for the Washington Post, savaged a performance of Truman's daughter Margaret, and how Truman sent him an infamous letter, very un-presidential, that supposedly contained the paragraph:

Some day I hope to meet you. When that happens you'll need a new nose, a lot of beef steak for black eyes, and perhaps a supporter below!

Miller reports that he asked Truman about this, and Truman's blunt response: “I said I'd kick his nuts out.” Or so claims Miller, anyway.

I've read Truman's memoirs. Volume I, about the immediate postwar years, is fascinating; Volume II is much less so. They contain many detailed accounts of the intransigence of the Soviets and their foreign minister Vyacheslav Molotov, namesake of the Molotov Cocktail. Probably 95% of what I remember Truman saying is from those memoirs, direct from Truman himself. But some of it must be from Plain Speaking. And I don't know any longer which 5% it is.

As they say, an ice cream sundae with a turd in it isn't 95% ice cream, it's 100% shit. Merle Miller shit in the ice cream sundae of my years of reading of Truman and the Truman administrations.

Now Claude has done the same. And if I let it, Claude will keep doing it to me. Claude caga en la leche.

Addendum

The Truman Library now has the recordings of those interviews available online. I could conceivably listen to them all and find out for myself which things went as Miller said.

So there may yet be a happy ending, thanks to the Wonders of the Internet! I dream of someday going through those interviews and producing an annotated edition of Plain Speaking.

[Other articles in category /tech/gpt] permanent link

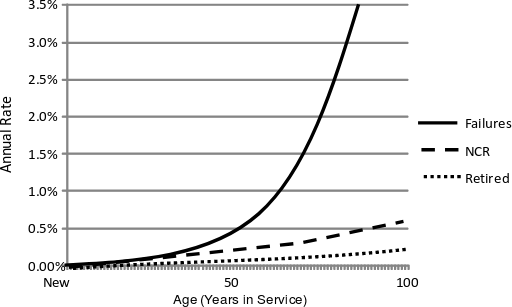

Thu, 27 Feb 2025

Having had some pleasant surprises from Claude, I thought I'd see if it could do math. It couldn't. Apparently some LLMs can sometimes solve Math Olympiad problems, but Claude didn't come close.

First I asked something simple as a warmup:

MJD: What is the largest number that is less than 1000?

I had tried this on ChatGPT a couple of years back, with tragic results:

ChatGPT: The largest number that is less than 1000 is 999.

But it should have quit while it was ahead, because its response continued:

ChatGPT: Any number that is less than 1000 will have three digits, with the first digit being 9, the second digit being 9, and the third digit being any number from 0 to 8.

and then when I questioned it further it drove off the end of the pier:

ChatGPT: Any number with four or more digits can be less than 1000, depending on the specific digits that are used. For example, the number 9991 is a four-digit number that is less than 1000.

Claude, whatever its faults, at least knew when to shut up:

MJD: What is the largest number that is less than 1000?

Claude: 999

I then asked it “What if it doesn't have to be an integer?” and it didn't do so well, but that's actually a rather tricky question, not what I want to talk about today. This article is about a less tricky question.

I have omitted some tedious parts, and formatted the mathematics to be more readable. The complete, unedited transcript can be viewed here.

I started by setting up context:

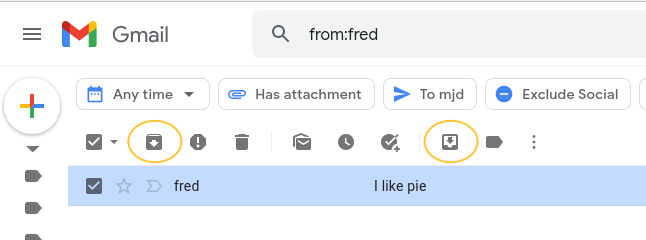

MJD: Let's say that the cube graph !!Q_n!! has as vertices the set !!\{0,1\}^n!! and two vertices are connected with an edge if and only if their coordinates differ in exactly one position. This is a standard construction. Are you familiar with it?

Claude asserted that it was familiar with this family of graphs. (Wikipedia on cube graphs.) The basic examples, !!Q_0!! through !!Q_3!!, look like this:

Each graph consists of two copies of the previous graph, with new edges added between the corresponding vertices in the copies.

Then I got to the real question:

Now let's say that the Keane number for a graph !!G!! is the largest number !!k!! such that:

- !!G!! can be partitioned into !!k!! connected subgraphs, and

- each pair of subgraphs is adjacent, in the sense that for each pair !!H!!, !!K!! there is an edge of !!G!! with one vertex in !!H!! and one in !!K!!.

If you understand so far, tell me the Keane numbers of the cube graphs !!Q_0, Q_1!!, and !!Q_2!!.

Here are the maximal partitions for those three graphs:

The Keane number of !!Q_0!! is !!1!! because it has only one vertex. For !!Q_1!! we can put each of the two vertices into a separate part to get two parts. For !!Q_2!! we can get three parts as above. But there is no partition of !!Q_2!! into four parts that satisfies the second condition, because two of the parts would have to comprise the upper-left and lower-right vertices, and would not be connected by an edge.

Claude got this initial question right. So far so good.

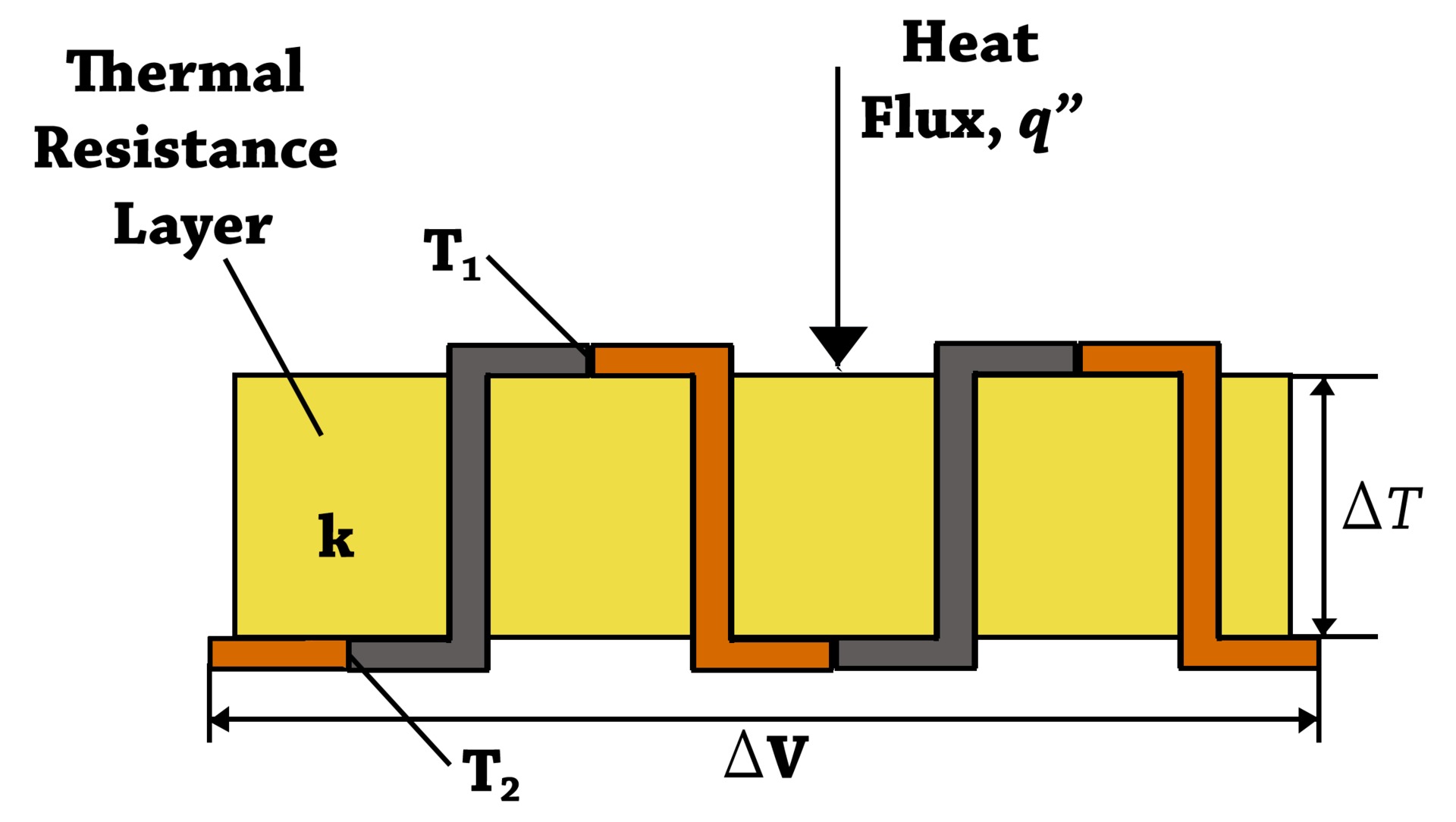

Then I asked Claude for the Keane number of !!Q_3!! and this it was unable to produce.

The correct number is !!4!!. There are several essentially different partitions of !!Q_3!! into four parts, each of which touches the other three, which proves that the number is at least !!4!!. Here are two examples:

In addition to these there are also partitions into parts of sizes !!1+1+2+4!!, and sizes !!1+1+3+3!!.

On the other hand, more than !!4!! parts is impossible, and the proof is one sentence long: !!Q_3!! has only !!8!! vertices, so any partition into !!5!! or more parts must have a part of size !!1!!, and this part can't be adjacent to the other four parts, because a single vertex has only three outgoing edges. I would expect a bright middle-schooler to figure this out in at most a few minutes.
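The values claimed so far are small enough to confirm by exhaustive search. The sketch below is a brute-force check of the definition and nothing more; all function names are invented for this illustration, and vertices are encoded as integers whose binary digits are the coordinates.

```python
from itertools import combinations

def cube_edges(n):
    """Edges of Q_n: pairs of vertices differing in exactly one bit."""
    verts = range(2 ** n)
    return {(u, v) for u in verts for v in verts
            if u < v and bin(u ^ v).count("1") == 1}

def set_partitions(items):
    """Yield every partition of the list `items` as a list of blocks."""
    if not items:
        yield []
        return
    first, rest = items[0], items[1:]
    for partition in set_partitions(rest):
        # Put `first` into each existing block in turn...
        for i in range(len(partition)):
            yield partition[:i] + [[first] + partition[i]] + partition[i + 1:]
        # ...or into a new block of its own.
        yield [[first]] + partition

def is_connected(block, edges):
    """True if the vertices in `block` induce a connected subgraph."""
    block = set(block)
    seen, stack = set(), [next(iter(block))]
    while stack:
        v = stack.pop()
        if v in seen:
            continue
        seen.add(v)
        stack.extend(w for w in block if (min(v, w), max(v, w)) in edges)
    return seen == block

def adjacent(a, b, edges):
    """True if some edge has one endpoint in block a and the other in block b."""
    return any((min(u, v), max(u, v)) in edges for u in a for v in b)

def keane_number(n):
    """Largest k such that Q_n splits into k connected, pairwise adjacent parts."""
    edges = cube_edges(n)
    best = 0
    for partition in set_partitions(list(range(2 ** n))):
        if len(partition) <= best:
            continue
        if (all(is_connected(b, edges) for b in partition)
                and all(adjacent(a, b, edges) for a, b in combinations(partition, 2))):
            best = len(partition)
    return best

results = [keane_number(n) for n in range(4)]
print(results)  # prints: [1, 2, 3, 4]
```

This approach examines every set partition of the vertices, which is only 4140 partitions for !!Q_3!! but grows far too quickly to be practical much beyond that.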

At first, Claude got the right number, but with completely bogus reasoning. To avoid belaboring the obvious, I'll highlight the errors but I won't discuss them at length.

Claude:

- Let's find the maximum possible !!k!!:

- One valid partition with !!k=4!! exists:

- Take two opposite vertices (e.g., !!000!! and !!111!!)

- Make these two single-vertex subgraphs

- Split the remaining !!6!! vertices into two paths of !!3!! vertices each

- Each subgraph is connected

- Each pair of subgraphs will have an edge between them

The diagrams I showed earlier display some of the partitions that show !!k≥4!!, but the one Claude gave here does not, because two of its parts (!!D!! and !!B!!) are not adjacent:

Claude:

- Why can't !!k!! be !!5!! or more?

- If we had !!5!! or more subgraphs, we would need !!{5 \choose 2} = 10!! edges between pairs

- !!Q_3!! only has !!12!! edges total

- Some edges would need to be reused for multiple pairs

- But each edge can only connect one pair of subgraphs

- Therefore !!5!! or more subgraphs is impossible

Okay, Claude, I agree we would need ten edges for the connections between the parts, but we have !!12!!, so why do you say that “some edges would need to be reused”? It may be correct, but it does not follow.
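An edge count can be made to work here, although it is not the argument Claude gave. The reasoning assumed in this sketch: a connected part with !!s!! vertices contains at least !!s-1!! edges, so !!k!! parts covering !!8!! vertices use at least !!8-k!! internal edges; each of the !!{k \choose 2}!! pairs of parts needs an edge of its own; and no edge can be both internal and between parts. The function name edges_needed is made up for illustration.

```python
from math import comb

def edges_needed(vertices, k):
    """Lower bound on the edges used by k connected, pairwise adjacent parts."""
    internal = vertices - k      # at least (size - 1) edges inside each part
    between = comb(k, 2)         # one distinct edge for each pair of parts
    return internal + between

for k in (4, 5):
    needed = edges_needed(8, k)
    print(k, needed, needed <= 12)
# prints:
# 4 10 True
# 5 13 False
```

So the bound leaves room for four parts in the !!12!! edges of !!Q_3!!, and it is the !!3!! internal edges on top of the !!10!! connecting ones that rule out five.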

Claude:

Therefore, the Keane number of !!Q_3!! is !!4!!.

Would you like me to verify any part of this reasoning?

So, right answer, but seemingly by luck, since there were serious errors in reasoning, in both the !!k≥4!! part and also in the !!k< 5!! part. I decided to ignore the second one.

MJD: You said two of the subgraphs are !!H=\{000\}!! and !!K=\{111\}!!. You said each pair of subgraphs will have an edge between them. What edge connects !!H!! and !!K!!?

Claude: Ah, I made a mistake.

The vertices !!000!! and !!111!! differ in all three positions, so they are not adjacent in !!Q_3!! (they need to differ in exactly one position to have an edge between them). This means that making them single-vertex subgraphs would violate condition (2) since these subgraphs would not be adjacent.

I need to rethink my claimed partition. Would you like me to try again to determine the Keane number of !!Q_3!!?

MJD: Yes.

Claude: [finds a specific example showing !!k≥3!!, and remarks that !!k=4!! “seems difficult”]

Let me work on verifying whether !!k=4!! is possible or !!k=3!! is maximum. Would you like me to continue?

MJD: Please go on.

Claude: Let me try to prove !!k=3!! is maximum in !!Q_3!!.

Suppose we had !!k=4!! subgraphs. Then:

- We need !!{4 \choose 2} = 6!! edges between different subgraphs

- Each subgraph needs to be connected:

- A subgraph with !!3!! vertices needs at least !!2!! edges

- A subgraph with !!2!! vertices needs !!1!! edge

- A subgraph with !!1!! vertex needs !!0!! edges

- There are !!12!! edges total in !!Q_3!!

- If we have !!4!! subgraphs:

- First, !!6!! edges must go between different subgraphs

- That leaves only !!6!! edges for internal connectivity

- But with !!8!! vertices split into !!4!! subgraphs:

- If any subgraph has !!3+!! vertices, it needs !!2+!! edges internally

- If any has !!4+!! vertices, it needs !!3+!! edges internally

- With !!8!! vertices total divided into !!4!! parts, at least one part must have !!3+!! vertices

- After using !!2+!! edges for that part and !!6!! edges between parts, we don't have enough edges left to make all other parts connected

Therefore !!k=4!! is impossible, and the Keane number of !!Q_3!! is !!3!!.

Claude seems completely undirected here. Some of the things it says are correct facts, but it can't link the facts together into actual reasoning, except by accident. Sometimes it utters A followed by B where A implies B, and it seems exciting, but just as often B is a non sequitur.

This is not that different from how ChatGPT was two years ago. It can spout text, and often it can spout enough plausible, grammatically-correct text to suggest that it knows things and is thinking about them. Claude's text-spouting prowess is much more impressive than ChatGPT's was two years ago. But it is still just a text-spouter.

I went on, trying to detect actual thinking.

MJD: What if there were !!4!! parts of !!2!! vertices each?

Claude's response here correctly applied its earlier analysis: four parts of size !!2!! would use up four edges for internal connectivity, leaving !!8!! for external connections, and we only need !!6!!.

Claude: I was too quick to conclude 4 was impossible. Let me try to find an explicit partition into 4 parts of 2 vertices each...

Would you like me to try to find such a partition, or would you first like me to verify that this kind of partition would definitely satisfy the adjacency requirement (condition 2)?

MJD: You keep going until you think you have the right answer.

Claude: Let me try to construct a partition of !!Q_3!! into !!4!! parts of !!2!! vertices each: …

This time Claude tried this partition:

It noticed that two of the four parts were not adjacent, and gave up without searching further.

Claude: This makes me think !!3!! really is the maximum.

If Claude were a human mathematician, this would be a serious error. Finding one invalid partition proves nothing at all.

Claude: My previous argument was wrong, but the conclusion might be right. Would you like me to try to prove more carefully that !!4!! is impossible?

There is no proof that !!4!! is impossible, and I thought it would be unenlightening to watch Claude flounder around with it. But I wanted to see what would happen if I asked it to prove a false claim that should be easier because its premise is stronger:

MJD: Try a weaker conjecture: prove more carefully the four parts of size 2 is impossible.

Claude: Okay, let me prove that we cannot partition !!Q_3!! into four parts of size 2 each.

- Suppose we had such a partition !!H_1, H_2, H_3, H_4!!, where each !!H_i!! contains exactly two vertices.

- For any such !!H_i!!, its two vertices must be adjacent (to be connected). This means they differ in exactly one coordinate.

- Therefore, each !!H_i!! must be of one of these forms:

- Two vertices differing in 1st coordinate (like !!000,100!!)

- Two vertices differing in 2nd coordinate (like !!000,010!!)

- Two vertices differing in 3rd coordinate (like !!000,001!!)

- Consider any vertex !!v!! in !!Q_3!!. It has exactly 3 neighbors:

- One differing in 1st coordinate

- One differing in 2nd coordinate

- One differing in 3rd coordinate

- Therefore, if !!v!! is in !!H_i!!:

- One of its neighbors must also be in !!H_i!!

- That leaves only two edges from !!v!! that could connect to other parts

- So !!v!! can only help !!H_i!! be adjacent to at most two other parts

- But each !!H_i!! needs to be adjacent to all three other parts

This contradiction proves no such partition exists.

It's tempting to look at this and say that Claude was almost right. It produced 16 lines and at least 15 of them, on their own, were correct. But it's less impressive than it might first appear. Again Claude displays the pattern of spouting text, some of which is correct, and some of which is related. But that is all I can say in its favor. Most of its statements are boilerplate. Sections 2–4 can be deleted with nothing lost. Claude has buried the crux of the argument, and its error, in section 5.

MJD: In step 5 I see that if !!v!! is in !!H_i!!, it can only help !!H_i!! be adjacent to two other parts. But !!H_i!! has size 2, so it contains a vertex other than !!v!!. If !!v!! connects !!H_i!! to two other parts, couldn't the other vertex of !!H_i!! connect !!H_i!! to a third part?

Claude: Ah, you're absolutely right! My proof attempt failed. Let me back up and try a different approach to the original question of whether !!Q_3!! can be partitioned into 4 parts that satisfy both conditions.

…

This time Claude did find a correct partition into four parts, showing that !!k≥4!!.

MJD: How did you come up with that example partition?

Claude: I should be honest - I didn't find it systematically. I was trying different arrangements somewhat randomly until I found one that worked. …

I don't think there is any sense in which this is true, but at this point I hadn't yet internalized that Claude's descriptions of its own internal processes are text-spouting just like the rest of its output. In any case, I ignored this and asked it to analyze its own earlier mistake:

MJD: Earlier you purported to prove that !!k=4!! was impossible, saying that !!6!! edges would be needed to connect the !!4!! parts, and that this did not leave enough edges to connect the parts internally. You didn't analyze this carefully, so you missed the solution where all four parts have !!2!! vertices each. Do that analysis more carefully than you did last time and explain why that counting method does not rule out a partition of !!Q_3!! into !!2+2+2+2!!.

Claude: (details elided)

So this counting argument shows:

- We need 10 edges

- We have 12 edges

- Therefore the counting argument does not rule out a !!2+2+2+2!! partition

My earlier analysis erred by not being explicit about the numbers. I just had a vague sense that we needed "too many" edges, without actually counting them. This shows the importance of precise counting rather than relying on intuition about what seems like "too many".

Claude got the counting part right, although I think the final paragraph is just spouting, especially the claim “I just had a vague sense that…”, which should not be taken seriously.
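The tally itself is easy to check mechanically. Here is a minimal Python sketch, not part of the original conversation; the names `q3_edges` and `edges_needed` are made up for illustration. It assumes only that a connected part of size !!n!! uses at least !!n-1!! internal edges and that each pair of parts needs its own connecting edge.

```python
from itertools import combinations
from math import comb

def q3_edges():
    """All edges of Q_3: pairs of 3-bit strings that differ in exactly one position."""
    vertices = [format(i, "03b") for i in range(8)]
    return [
        (u, v)
        for u, v in combinations(vertices, 2)
        if sum(a != b for a, b in zip(u, v)) == 1
    ]

def edges_needed(sizes):
    """Lower bound on the edges a partition with these part sizes must use."""
    between = comb(len(sizes), 2)         # one distinct edge per pair of parts
    internal = sum(n - 1 for n in sizes)  # a connected part of n vertices has >= n-1 edges
    return between + internal

available = len(q3_edges())
needed = edges_needed([2, 2, 2, 2])
print(needed, available, needed <= available)  # prints: 10 12 True
```

This is only a lower bound on the edges required, so passing the test does not show that a partition exists. It shows only that counting alone cannot rule one out.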

[ Digression: This reminds me of a section in Daniel Dennett's Consciousness Explained in which he discusses the perils of asking humans about their internal processes. The resulting answers, he says, may provide interesting information about what people think is going on in their heads, but we should be very wary about ascribing any accuracy or insight to these descriptions. Dennett makes an analogy with an anthropologist who asks a forest tribe about their forest god. The tribespeople agree that the forest god is eight feet tall, he wears a panther skin, and so on. And while this might be folklorically interesting, we should be very reluctant to conclude from this testimony that there is actually an eight-foot-tall fur-clad god in the forest somewhere. We should be similarly reluctant to ascribe real existence to Claude's descriptions of its “vague senses” or other internal processes suggested by its remarks like “Ah, let me think...” or “Ah, you're absolutely right!”. Claude has even less access to its own mental states (such as they are) than a human would. ]

As I pointed out earlier in this article, there are several essentially different solutions to the problem of partitioning !!Q_3!! into four parts. Claude has found one of them, the one I showed above on the left. I wanted to see if it could find another:

MJD: Are there any other partitions that are not ruled out by that argument? Or is 2+2+2+2 the only possibility?

Claude: Let me think about what other partitions could satisfy the edge-counting constraint.

If we have !!4!! parts, need !!6!! edges between parts, and have !!12!! total edges, then we can use up to !!6!! edges for internal connectivity of parts.

Possible partitions of !!8!! vertices into !!4!! parts could be:

- !!2+2+2+2!! (needs !!4!! internal edges)

- !!3+2+2+1!! (needs !!3!! internal edges)

- !!4+2+1+1!! (needs !!4!! internal edges)

- !!3+3+1+1!! (needs !!4!! internal edges)

- !!5+1+1+1!! (needs !!6!! internal edges)

So from just edge counting:

(Claude concludes that none of these is ruled out by edge-counting.)

So the edge-counting argument alone doesn't rule out any of these distributions of part sizes. We'd need additional arguments to determine which are actually possible in !!Q_3!!.

MJD: Why didn't you consider !!4+2+2+1!!?

Claude: Ah, you're right - I should have considered !!4+2+2+1!!! For !!4+2+2+1!!:

- The part of size !!4!! needs at least !!3!! internal edges to be connected

- The parts of size !!2!! each need !!1!! internal edge

- So that's !!5!! internal edges needed

- Plus !!6!! edges needed between parts

- Total needed = !!11!! edges

- We have !!12!! edges available

So this case is also not ruled out by edge counting. I apologize for missing it in my list. Would you like me to check if there are any other distributions I missed?

Oh, such failure! Failures that I have highlighted are clearly false statements. But the failure here is much worse. Again, almost everything Claude said was correct! And yet deeply wrong!

A bright middle-schooler could have missed this also. It was a bit of a trick question. Not because it's ambiguous, but because the problem with !!4+2+2+1!! is not in line with the direction that the discussion was taking at that point. There is nothing wrong with !!4+2+2+1!! from an edge-counting point of view!

But I think a human mathematician, even a very young one, would have been more likely to answer the question I actually asked, which was “Why didn't you consider !!4+2+2+1!!?”, and from there might have quickly come to the useful conclusion that it doesn't need to be considered. Claude didn't do anything like that.

MJD: !!4+2+2+1!! doesn't work. Think about it more.

Claude: Let me think more carefully about a potential !!4+2+2+1!! partition.

Consider the part !!H!! of size !!4!!:

- To be connected, it needs at least !!3!! edges internally

- One vertex in !!H!! must connect to all !!3!! other vertices in !!H!!

- But in !!Q_3!!, each vertex has only !!3!! edges total

- If one vertex in !!H!! uses all its edges internally to connect to the other !!3!! vertices in !!H!!, it has no edges left to connect to other parts

- But every part needs to be adjacent to every other part

Therefore !!4+2+2+1!! is impossible, not because of edge counting, but because:

- Either !!H!! isn't connected (if we don't use enough internal edges)

- Or !!H!! can't connect to all other parts (if we use too many internal edges)

This shows why just counting edges isn't enough - we also need to think about the structure of !!Q_3!! and how the edges can be arranged.

Terrible. This is so muddled I wasn't even sure what to highlight as the errors. Yes, if one vertex in !!H!! uses all its edges internally to connect to the other !!3!! vertices in !!H!!, it has no edges left to connect to other parts. But the other three vertices in !!H!! each have two edges left over, so !!H!! could easily connect to the other three parts.

And, of course, Claude has still missed the main point, which is that one needn't consider !!4+2+2+1!!, because !!4+2+2+1= 9!! and !!Q_3!! has only !!8!! vertices.
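The size distributions that do need considering can be listed mechanically. The following is a small sketch, not something from the conversation, and the helper name `splits` is hypothetical. It enumerates the ways to write !!8!! as a sum of !!4!! positive integers, largest first.

```python
def splits(total, parts, largest=None):
    """Yield non-increasing tuples of `parts` positive integers that sum to `total`."""
    if largest is None:
        largest = total
    if parts == 0:
        if total == 0:
            yield ()
        return
    # Choose the first (largest) entry, then split the remainder with smaller-or-equal entries.
    for first in range(min(total, largest), 0, -1):
        for rest in splits(total - first, parts - 1, first):
            yield (first,) + rest

candidates = list(splits(8, 4))
print(candidates)
# [(5, 1, 1, 1), (4, 2, 1, 1), (3, 3, 1, 1), (3, 2, 2, 1), (2, 2, 2, 2)]
print((4, 2, 2, 1) in candidates, sum((4, 2, 2, 1)))  # False 9
```

These are the same five distributions Claude listed. !!4+2+2+1!! is absent for the plainest possible reason: it does not add up to !!8!!.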

MJD: There is a much simpler argument why 4+2+2+1 won't work.

Claude: Ah, let me think...

In a !!4+2+2+1!! partition:

- The single vertex in the part of size !!1!! has degree !!3!! in !!Q_3!!

- But it needs to connect to all three other parts!

- It needs all three of its edges just to be adjacent to the other parts

- So it can't connect to all three other parts AND connect to three different vertices

This is a much simpler contradiction. I was making it too complicated by thinking about the part of size !!4!! when the part of size !!1!! immediately shows it's impossible.

MJD: How many vertices are in !!Q_3!!?

Claude: !!Q_3!! has !!8!! vertices (all binary strings of length !!3!!):

!!\{000, 001, 010, 011, 100, 101, 110, 111\}!!

And now I see an even simpler argument why !!4+2+2+1!! is impossible: !!4 + 2 + 2 + 1 = 9!! vertices, but !!Q_3!! only has !!8!! vertices!

I was doing complex analysis of connectivity and edges when I should have just checked that the claimed partition added up to the right number of vertices. This is a good reminder to check the most basic facts first.

Finally! I would love to know what really happened here. What caused Claude to emit a sentence connecting !!4 + 2 + 2 + 1 = 9!! with !!Q_3!! having only !!8!! vertices?

MJD: So remind me, did you decide that !!k(Q_3)!! is !!3!!, or !!4!!?

I wasn't sure it would remember this, by which I really mean that I wasn't sure it would be able to pick out from its previous mass of statements which one was correct. But whether by luck or not, it did answer the question correctly:

Claude: Earlier I found a valid partition of !!Q_3!! into 4 parts:

!!H_1 = \{000, 001\} !! !!H_2 = \{100, 110\}!! !!H_3 = \{010, 011\}!! !!H_4 = \{101, 111\}!!

I verified that:

- Each part is connected

- Each part is adjacent to every other part

Therefore !!k(Q_3) ≥ 4!!.

I haven't yet proven that !!k(Q_3) ≤ 4!!. Would you like me to try to prove that !!5!! parts is impossible?
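Claude's "I verified that" need not be taken on trust, since the two conditions are easy to check directly. A minimal sketch follows, assuming the four parts exactly as Claude stated them; the variable names are mine, invented for illustration.

```python
from itertools import combinations

def adjacent(u, v):
    """Vertices of Q_3 are adjacent when their bit strings differ in exactly one position."""
    return sum(a != b for a, b in zip(u, v)) == 1

# The partition as Claude stated it.
parts = [("000", "001"), ("100", "110"), ("010", "011"), ("101", "111")]

# Every vertex of Q_3 appears exactly once.
covers = sorted(v for p in parts for v in p) == [format(i, "03b") for i in range(8)]

# A part of size 2 is connected exactly when its two vertices are adjacent.
each_connected = all(adjacent(a, b) for a, b in parts)

# For each pair of parts, collect the edges that join them.
joining = {
    (i, j): [(u, v) for u in parts[i] for v in parts[j] if adjacent(u, v)]
    for i, j in combinations(range(len(parts)), 2)
}
all_adjacent = all(joining.values())

print(covers, each_connected, all_adjacent)  # prints: True True True
```

The check also shows how much slack there is. Four of the twelve edges are internal, so eight edges are left to serve the six pairs of parts.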

MJD: Please go ahead.

I found Claude's attempt at this interesting, but not in a good way. After reading it I shrugged and quit the conversation.

Claude:

Let me prove that !!5!! parts is impossible in !!Q_3!!.

- Five parts would require !!{5 \choose 2} = 10!! different edges just to connect all pairs of parts to each other.

- Each such edge must be distinct because a single edge can only connect one pair of parts.

- Each vertex in !!Q_3!! has degree !!3!!. With !!8!! vertices, the total number of edges is !!(8 × 3)/2 = 12!!.

- Out of these !!12!! edges:

- !!10!! must be used to connect different parts to each other

- That leaves only !!2!! edges for internal connectivity of parts

- But with !!8!! vertices split into !!5!! parts, some part must have size !!≥ 2!!

- Any part of size !!≥ 2!! must use at least one edge internally to be connected

- If one part uses one edge internally, that leaves only one edge for all other parts

- But if any other part has size !!≥ 2!!, it also needs an internal edge

- With !!8!! vertices in !!5!! parts, at least two parts must have size !!≥ 2!!

- Therefore !!2!! edges is not enough for internal connectivity

Thus !!k(Q_3) = 4!!.

Claude says that with !!8!! vertices in !!5!! parts, at least two parts must have size !!2!! or more. This is wrong, because you could have !!4+1+1+1+1!!.

But highlighting that wrong statement doesn't get at why this answer is so bad. It's bad because the conclusion that appears next is a complete non sequitur.

The argument can be fixed up. I would put it like this:

- As Claude observed, we have only !!2!! edges available for internal connections

- A part with !!n!! vertices requires at least !!n-1!! edges for internal connections

- Let the sizes of the parts be !!n_1, \dots, n_5!!. Since !!n_1+\dots+n_5 = 8!!, we need at least !!(n_1-1)+\dots+(n_5-1) = 8-5 = 3!! edges for internal connections

- But we have only !!2!!.

It's true that !!2!! edges is not enough for internal connectivity. But in my opinion Claude didn't come close to saying why.
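!!Q_3!! is small enough that the whole question can also be settled by exhaustive search, with no counting argument at all. The sketch below is my own illustration, not something from the conversation. The function names are hypothetical, and vertices are encoded as the integers !!0!! through !!7!!, two being adjacent when they differ in exactly one bit.

```python
from itertools import combinations

def adjacent(u, v):
    """Vertices 0..7 of Q_3 are adjacent when they differ in exactly one bit."""
    return bin(u ^ v).count("1") == 1

def is_connected(part):
    """Depth-first search restricted to the vertices of one part."""
    part = set(part)
    start = next(iter(part))
    seen, stack = {start}, [start]
    while stack:
        u = stack.pop()
        for v in part - seen:
            if adjacent(u, v):
                seen.add(v)
                stack.append(v)
    return seen == part

def is_valid(parts):
    """Every part is connected, and every pair of parts is joined by some edge."""
    return all(is_connected(p) for p in parts) and all(
        any(adjacent(u, v) for u in p for v in q)
        for p, q in combinations(parts, 2)
    )

def set_partitions(n, k):
    """Yield every partition of range(n) into exactly k nonempty parts."""
    def extend(v, parts):
        if v == n:
            if len(parts) == k:
                yield [list(p) for p in parts]
            return
        for p in parts:        # put v into an existing part
            p.append(v)
            yield from extend(v + 1, parts)
            p.pop()
        if len(parts) < k:     # or start a new part containing v
            parts.append([v])
            yield from extend(v + 1, parts)
            parts.pop()
    yield from extend(0, [])

def count_valid(k):
    """Number of partitions of Q_3 into k parts satisfying both conditions."""
    return sum(1 for parts in set_partitions(8, k) if is_valid(parts))

for k in range(1, 9):
    print(k, "possible" if count_valid(k) > 0 else "impossible")
```

The search reports valid partitions for !!k=4!! and none for any !!k≥5!!, which agrees with the counting argument: !!k!! parts need at least !!{k \choose 2} + (8-k)!! edges, and that exceeds !!12!! once !!k≥5!!.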