Mark Dominus (陶敏修)

mjd@pobox.com

Archive:

| 2025: | JFMAMJ |

| JAS | |

| 2024: | JFMAMJ |

| JASOND | |

| 2023: | JFMAMJ |

| JASOND | |

| 2022: | JFMAMJ |

| JASOND | |

| 2021: | JFMAMJ |

| JASOND | |

| 2020: | JFMAMJ |

| JASOND | |

| 2019: | JFMAMJ |

| JASOND | |

| 2018: | JFMAMJ |

| JASOND | |

| 2017: | JFMAMJ |

| JASOND | |

| 2016: | JFMAMJ |

| JASOND | |

| 2015: | JFMAMJ |

| JASOND | |

| 2014: | JFMAMJ |

| JASOND | |

| 2013: | JFMAMJ |

| JASOND | |

| 2012: | JFMAMJ |

| JASOND | |

| 2011: | JFMAMJ |

| JASOND | |

| 2010: | JFMAMJ |

| JASOND | |

| 2009: | JFMAMJ |

| JASOND | |

| 2008: | JFMAMJ |

| JASOND | |

| 2007: | JFMAMJ |

| JASOND | |

| 2006: | JFMAMJ |

| JASOND | |

| 2005: | OND |

Subtopics:

| Mathematics | 245 |

| Programming | 99 |

| Language | 95 |

| Miscellaneous | 75 |

| Book | 50 |

| Tech | 49 |

| Etymology | 35 |

| Haskell | 33 |

| Oops | 30 |

| Unix | 27 |

| Cosmic Call | 25 |

| Math SE | 25 |

| Law | 22 |

| Physics | 21 |

| Perl | 17 |

| Biology | 16 |

| Brain | 15 |

| Calendar | 15 |

| Food | 15 |

Comments disabled

Sat, 05 Aug 2017

Another example of a machine perception failure

IEEE Spectrum has yet another article about fooling computer vision algorithms with subtle changes that humans don't even notice. For more details and references to the literature, see this excellent article by Andrej Karpathy. Here is a frequently-reprinted example:

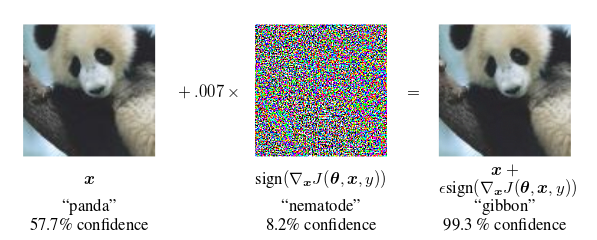

The classifier is 57.7% confident that the left-hand image is a panda. When the image is perturbed—by less than one part in 140—with the seemingly-random pattern of colored dots to produce the seemingly identical image on the right, the classifier identifies it as a gibbon with 99.3% confidence.

(Illustration from Goodfellow, Shlens, and Szegedy, “Explaining and Harnessing Adversarial Examples”, International Conference on Learning Representations 2015.)

Here's an interesting complementary example that surprised me recently. I have the Shazam app on my phone. When activated, the app tries to listen for music, and then it tries to tell you what the music was. If I'm in the pharmacy and the background music is something I like but don't recognize, I can ask Shazam what it is, and it will tell me. Magic!

Earlier this year I was in the car listening to the radio and I tried this, and it failed. I ran it again, and it failed again. I pulled over to the side of the road, activated the app, and held the phone's microphone up to the car's speaker so that Shazam could hear clearly. Shazam was totally stumped.

So I resumed driving and paid careful attention when the piece ended so that I wouldn't miss when the announcer said what it was. It had been Mendelssohn's fourth symphony.

Shazam can easily identify Mendelssohn's fourth symphony, as I confirmed later. In fact, it can identify it much better than a human can—in some ways. When I tested it, it immediately recognized not only the piece, but the exact recording I used for the test: it was the 1985 recording by the London Symphony Orchestra, conducted by Claudio Abbado.

Why had Shazam failed to recognize the piece on the radio? Too much background noise? Poor Internet connectivity? Nope. It was because the piece was being performed live by the Detroit Symphony Orchestra and as far as Shazam was concerned, it had never heard it before. For a human familiar with Mendelssohn's fourth symphony, this would be of no import. This person would recognize Mendelssohn's fourth symphony whenever it was played by any halfway-competent orchestra.

But Shazam doesn't hear the way people do. I don't know what it does (really I have no idea), but I imagine that it does some signal processing to remove background noise, accumulates digests of short sections of the audio data, and then matches these digests against a database of similar digests, compiled in advance from a corpus of recordings. The Detroit Orchestra's live performance hadn't been in the corpus, so there was no match in the database.

Shazam's corpus has probably a couple of dozen recordings of Mendelssohn's fourth symphony, but it has no idea that all these recordings are of the same piece, or that they sound very similar, because to Shazam they don't sound similar at all. I imagine it doesn't even have a notion of whether two pieces in the corpus sound similar, because it knows them only as distillations of short snatches, and it never compares corpus recordings with one another. Whatever Shazam is doing is completely different from what people do. One might say it hears the sound but not the music, just as the classifier from the Goodfellow paper sees the image but not the panda.

I wonder about a different example. When I hear an unfamiliar piece on the radio, I can often guess who wrote it. “Aha,” I say. “This is obviously Dvořák.” And then more often than not I am right, and even when I am not right, I am usually very close. (For some reasonable meaning of “close” that might be impossible to explain to Shazam.) In one particularly surprising case, I did this with Daft Punk, at that time having heard exactly two Daft Punk songs in my life. Upon hearing this third one, I said to myself “Huh, this sounds just like those Daft Punk songs.” I not claiming a lot of credit for this; Daft Punk has a very distinctive sound. I bring it up just to suggest that whatever magic Shazam is using probably can't do this even a little bit.

Do any of my Gentle Readers know anything about research on the problem of getting a machine to identify the author or genre of music from listening to it?

[ Addendum 20170806: Julia Evans has provided a technical reference and a high-level summary of Shazam's algorithm. This also led me to a trove of related research. ]

[Other articles in category /tech] permanent link