Mark Dominus (陶敏修)

mjd@pobox.com


Sun, 18 Dec 2022

Recently I encountered the Dutch phrase den goede of den kwade, which means something like “the good [things] or the bad [ones]”, much like the English phrase “for better or for worse”.

Goede is obviously akin to “good”, but what is kwade? It turns out it is the plural of kwaad, which does mean “bad”. But are there any English cognates? I couldn't think of any, which is surprising, because Dutch words usually have one. (English is closely related to Frisian, which is still spoken in the northern Netherlands.)

I rummaged the dictionary and learned that kwaad is akin to “cud”, the yucky stuff that cows regurgitate. And “cud” is also akin to “quid”, which is a chunk of chewing tobacco that people chew on like a cow's cud. (It is not related to the other quids.)

I was not expecting any of that.

[ Addendum: this article, which I wrote at 3:00 in the morning, is filled with many errors, including some that I would not have made if it had been daytime. Please disbelieve what you have read, and await a correction. ]

[ Addendum 20221229: Although I wrote that addendum the same day, I forgot to publish it. I am now so annoyed that I can't bring myself to write the corrections. I will do it next year. Thanks to all the very patient Dutch people who wrote to correct my many errors. ]

[Other articles in category /lang/etym] permanent link

A few days ago I was thinking about Rosneft (Росне́фть), the Russian national oil company. The “Ros” is obviously short for Rossiya, the Russian word for Russia, but what is neft?

“Hmm,” I wondered. “Maybe it is akin to naphtha?”

Yes! Ultimately both words are from Persian naft, which is the Old Persian word for petroleum. The Greeks borrowed it as νάφθα (naphtha), and the Russians borrowed it via Turkish. Petroleum is neft in many other languages, not just the ones you would expect like Azeri, Dari, and Turkmen, but also Finnish, French, Hebrew, and Japanese.

Sometimes I guess this stuff and it's just wrong, but it's fun when I get it right. I love puzzles!

[ Addendum 20230208: Tod McQuillin informs me that the Japanese word for petroleum is not related to naphtha; he says it is 石油 /sekiyu/ (literally "rock oil") or オイル /oiru/. The word I was thinking of was ナフサ /nafusa/ which M. McQuillin says means naphtha, not petroleum. (M. McQuillin also supposed that the word is borrowed from English, which I agree seems likely.)

I think my source for the original claim was this list of translations on Wiktionary. It is labeled as a list of words meaning “naturally occurring liquid petroleum”, and includes ナフサ and also entries purporting to be Finnish, French, and Hebrew. I did not verify any of the claims in Wiktionary, which could be many varieties of incorrect. ]

[Other articles in category /lang/etym] permanent link

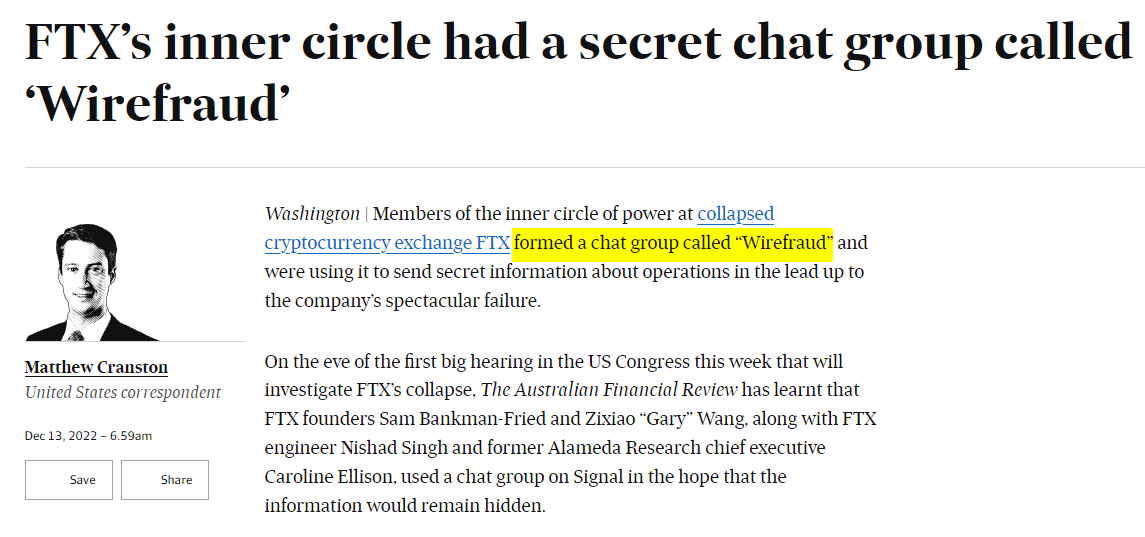

Tue, 13 Dec 2022

A while back I wrote about how Katara disgustedly reported that some of her second-grade classmates had formed a stealing club and named it “Stealing Club”.

Anyway,

(Original source: Australian Financial Review)

[Other articles in category /law] permanent link

Sun, 04 Dec 2022

Addenda to recent articles 202211

I revised my chart of Haskell's numbers to include a few missing things, uncrossed some of the arrows, and added an explicit public domain notice.

The article contained a typo, a section titled “Shuff that don't work so good”. I decided this was a surprise gift from the Gods of Dada, and left it uncorrected.

My very old article about nonstandard adjectives now points out that the standard term for “nonstandard adjective” is “privative adjective”.

Similar to my suggested emoji for U.S. presidents, a Twitter user suggested emoji for UK prime ministers, some of which I even understand.

I added some discussion of why I did not use a cat emoji for President Garfield. A reader called January First-of-May suggested a tulip for Dutch-American Martin Van Buren, which I gratefully added.

In my article on adaptive group testing, Eric Harley and I wondered if there wasn't earlier prior art in the form of coin-weighing puzzles. M. January brought to my attention that none is known! The earliest known coin-weighing puzzles date back only to 1945. See the article for more details.

Some time ago I wrote an article on “What was wrong with SML?”. I said “My sense is that SML is moribund” but added a note back in April when a reader (predictably) wrote in to correct me.

However, evidence in favor of my view appeared last month when the Haskell Weekly News ran their annual survey, which included the question “Which programming languages other than Haskell are you fluent in?”, and SML was not among the possible choices. An oversight, perhaps, but a rather probative one.

I wondered if my earlier article was the only one on the Web to include the phrase “wombat coprolites”. It wasn't.

[Other articles in category /addenda] permanent link

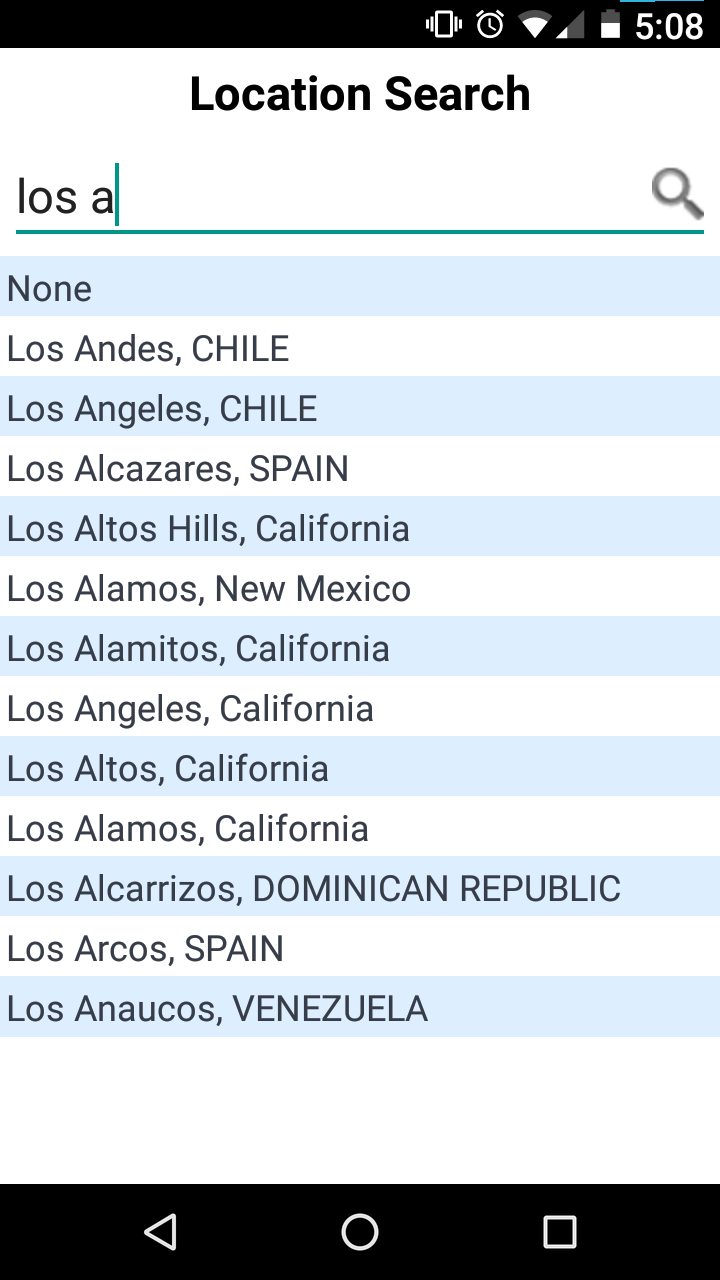

Software horror show: SAP Concur

This complaint is a little stale, but maybe it will still be interesting. A while back I was traveling to California on business several times a year, and the company I worked for required that I use SAP Concur expense management software to submit receipts for reimbursement.

At one time I would have had many, many complaints about Concur. But today I will make only one. Here I am trying to explain to the Concur phone app where my expense occurred, maybe it was a cab ride from the airport or something.

I had to interact with this control every time there was another expense to report, so this is part of the app's core functionality.

There are a lot of good choices about how to order this list. The best ones require some work. The app might use the phone's location feature to figure out where it is and make an educated guess about how to order the place names. (“I'm in California, so I'll put those first.”) It could keep a count of how often this user has chosen each location before, and put the most commonly chosen ones first. It could store a list of the locations the user has selected before and put the previously-selected ones before the ones that had never been selected. It could have asked, when the expense report was first created, if there was an associated location, say “California”, and then used that to put California places first, then United States places, then the rest. It could have a hardwired list of the importance of each place (or some proxy for that, like population) and put the most important places at the top.
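As a minimal sketch of one of these options (most frequently chosen places first, ties broken alphabetically), here is how the ordering might look in Haskell. The names `History` and `orderPicklist` and the sample data are hypothetical, invented for illustration; nothing here reflects how Concur actually works.

```haskell
import Data.List (sortOn)
import Data.Maybe (fromMaybe)
import Data.Ord (Down (..))

-- | Hypothetical usage history: how many times this user picked each place.
type History = [(String, Int)]

-- | Put the most frequently chosen places first; break ties alphabetically.
-- Places that were never chosen count as zero.
orderPicklist :: History -> [String] -> [String]
orderPicklist history = sortOn key
  where
    key place = (Down (timesChosen place), place)
    timesChosen place = fromMaybe 0 (lookup place history)

main :: IO ()
main = mapM_ putStrLn (orderPicklist history matches)
  where
    history = [("Los Angeles, California", 3)]
    matches =
      [ "Madrid, Spain"
      , "Los Alamos, New Mexico"
      , "Los Angeles, California"
      , "Barcelona, Spain"
      ]
```

With an empty history this degrades to a plain alphabetical sort, which is the bare minimum one would hope for.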

The actual authors of SAP Concur's phone app did none of these things. I understand. Budgets are small, deadlines are tight, product managers can be pigheaded. Sometimes the programmer doesn't have the resources to do the best solution.

But this list isn't even alphabetized.

There are two places named Los Alamos; they are not adjacent. There are two places in Spain; they are also not adjacent. This is inexcusable. There is no resource constraint that is so stringent that it would prevent the programmers from replacing

displaySelectionList(matches)

with

displaySelectionList(matches.sorted())

They just didn't.

And then whoever reviewed the code, if there was a code review, didn't say “hey, why didn't you use displaySortedSelectionList here?”

And then the product manager didn't point at the screen and say “wouldn't it be better to alphabetize these?”

And the UX person, if there was one, didn't raise any red flag, or if they did, nothing was done.

I don't know what Concur's software development and release process is like, but somehow it had a complete top-to-bottom failure of quality control and let this shit out the door.

I would love to know how this happened. I said a while back:

Assume that bad technical decisions are made rationally, for reasons that are not apparent.

I think this might be a useful counterexample. And if it isn't, if the individual decision-makers all made choices that were locally rational, it might be an instructive example on how an organization can be so dysfunctional and so filled with perverse incentives that it produces a stack of separately rational decisions that somehow add up to a failure to alphabetize a pick list.

Addendum: A possible explanation

Dennis Felsing, a former employee of SAP working on their HANA database, has suggested how this might have come about. Suppose that the app originally used a database that produced the results already sorted, so that no sorting in the client was necessary, or at least any omitted sorting wouldn't have been noticed. Then later, the backend database was changed or upgraded to one that didn't have the autosorting feature. (This might have happened when Concur was acquired by SAP, if SAP insisted on converting the app to use HANA instead of whatever it had been using.)

This change could have broken many similar picklists in the same way. Perhaps there was a large and complex project to replace the database backend, and the unsorted picklists were discovered relatively late and were among the less severe problems that had to be overcome. I said “there is no resource constraint that is so stringent that it would prevent the programmers from (sorting the list)”. But if fifty picklists broke all at the same time for the same reason? And you weren't sure where they all were in the code? At the tail end of a large, difficult project? It might have made good sense to put off the minor problems like unsorted picklists for a future development cycle. This seems quite plausible, and if it's true, then this is not a counterexample to “bad technical decisions are made rationally for reasons that are not apparent”. (I should add, though, that the sorting issue was not fixed in the next few years.)

In the earlier article I said “until I got the correct explanation, the only explanation I could think of was unlimited incompetence.” That happened this time also! I could not imagine a plausible explanation, but M. Felsing provided one that was so plausible I could imagine making the decision the same way myself. I wish I were better at thinking of this kind of explanation.

[Other articles in category /prog] permanent link

Sun, 27 Nov 2022

Whatever became of the Peanuts kids?

One day I asked Lorrie if she thought that Schroeder actually grew up to be a famous concert pianist. We agreed that he probably did. Or at least Schroeder has as good a chance as anyone does. To become a famous concert pianist, you need to have talent and drive. Schroeder clearly has talent (he can play all that Beethoven and Mozart on a toy piano whose black keys are only painted on) and he clearly has drive. Not everyone with talent and drive does succeed, of course, but he might make it, whereas some rando like me has no chance at all.

That led to a longer discussion about what became of the other kids. Some are easier than others. Who knows what happens to Violet, Sally, (non-Peppermint) Patty, and Shermy? I imagine Violet going into realty for some reason.

As a small child I did not understand that Lucy's “psychiatric help 5¢” lemonade stand was hilarious, or that she would have been the literally worst psychiatrist in the world. (Schulz must have known many psychiatrists; was Lucy inspired by any in particular?) Surely Lucy does not become an actual psychiatrist. The world is cruel and random, but I refuse to believe it is that cruel. My first thought for Lucy was that she was a lawyer, perhaps a litigator. Now I like to picture her as a union negotiator, and the continual despair of the management lawyers who have to deal with her.

Her brother Linus clearly becomes a university professor of philosophy, comparative religion, Middle-Eastern medieval literature, or something like that. Or does he drop out and work in a bookstore? No, I think he's the kind of person who can tolerate the grind of getting a graduate degree and working his way into a tenured professorship, with a tan corduroy jacket with patches on the elbows, and maybe a pipe.

Peppermint Patty I can imagine as a high school gym teacher, or maybe a yoga instructor or massage therapist. I bet she'd be good at any of those. Or if we want to imagine her at the pinnacle of achievement, coach of the U.S. Olympic softball team. Marcie is calm and level-headed, but a follower. I imagine her as a highly competent project manager.

In the conversation with Lorrie, I said “But what happens to Charlie Brown?”

“You're kidding, right?” she asked.

“No, why?”

“To everyone's great surprise, Charlie Brown grows up to be a syndicated cartoonist and a millionaire philanthropist.”

Of course she was right. Charlie Brown is good ol' Charlie Schulz, whose immense success surprised everyone, and nobody more than himself.

Charles M. Schulz was born 100 years ago last Saturday.

[ Addendum 20221204: I forgot Charlie Brown's sister Sally. Unfortunately, the vibe I get from Sally is someone who will be sucked into one of those self-actualization cults like Lifespring or est. ]

[Other articles in category /humor] permanent link

Sat, 26 Nov 2022

I was delighted to learn some time ago that there used to be giant wombats, six feet high at the shoulders, unfortunately long extinct.

It's also well known (and a minor mystery of Nature) that wombats have cubical poop.

Today I wondered, did the megafauna wombat produce cubical megaturds? And if so, would they fossilize (as turds often do) and leave ten-thousand-year-old mineral cubescat littering Australia? And if so, how big are these and where can I see them?

A look at Intestines of non-uniform stiffness mold the corners of wombat feces (Yang et al, Soft Matter, 2021, 17, 475–488) reveals a nice scatter plot of the dimensions of typical wombat scat, informing us that for (I think) the bare-nosed (common) wombat:

- Length: 4.0 ± 0.6 cm

- Height: 2.3 ± 0.3 cm

- Width: 2.5 ± 0.3 cm

Notice though, not cubical! Clearly longer than they are thick. And I wonder how one distinguishes the width from the height of a wombat turd. Probably the paper explains, but the shitheads at Soft Matter want £42.50 plus tax to look at the paper. (I checked, and Alexandra was not able to give me a copy.)

Anyway the common wombat is about 40 cm long and 20 cm high, while the extinct giant wombats were nine or ten times as big: 400 cm long and 180 cm high, let's call it ten times. Then a proportional giant wombat scat would be a cuboid approximately 24 cm (9 in) wide and tall, and 40 cm (16 in) long. A giant wombat poop would be as long as… a wombat!

But not the imposing monoliths I had been hoping for.

Yang also wrote an article Duration of urination does not change with body size, something I have wondered about for a long time. I expected bladder size (and so urine quantity) to scale with the body volume, the cube of the body length. But the rate of urine flow should be proportional to the cross-sectional area of the urethra, only the square of the body length. So urination time should be roughly proportional to body size. Yang and her coauthors are decisive that this is not correct:

we discover that all mammals above 3 kg in weight empty their bladders over nearly constant duration of 21 ± 13 s.

What is wrong with my analysis above? It's complex and interesting:

This feat is possible, because larger animals have longer urethras and thus, higher gravitational force and higher flow speed. Smaller mammals are challenged during urination by high viscous and capillary forces that limit their urine to single drops. Our findings reveal that the urethra is a flow-enhancing device, enabling the urinary system to be scaled up by a factor of 3,600 in volume without compromising its function.

Wow. As Leslie Orgel said, evolution is cleverer than you are.

However, I disagree with the conclusion: 21±13 is not “nearly constant duration”. This is a range of 8–34s, with some mammals taking four times as long as others.

The appearance of the Fibonacci numbers here is surely coincidental, but wouldn't it be awesome if it wasn't?

[ Addendum: I wondered if this was the only page on the web to contain the bigram “wombat coprolites”, but Google search produced this example from 2018:

Have wombats been around for enough eons that there might be wombat coprolites to make into jewelry? I have a small dinosaur coprolite that is kind of neat but I wouldn't make that turd into a necklace, it looks just like a piece of poop.

]

[ Addendum 20230209: I read the paper, but it does not explain what the difference is between the width of a wombat scat and the height. I wrote to Dr. Yang asking for an explanation, but she did not reply. ]

[Other articles in category /bio] permanent link

Tue, 08 Nov 2022

Addenda to recent articles 202210

I haven't done one of these in a while. And there have been addenda. I thought hey, what if I ask Git to give me a list of commits from October that contain the word ‘Addendum’. And what do you know, that worked pretty well. So maybe addenda summaries will become a regular thing again, if I don't forget by next month.

Most of the addenda resulted in separate followup articles, which I assume you will already have seen. ([1] [2] [3]) I will not mention this sort of addendum in future summaries.

In my discussion of lazy search in Haskell I had a few versions that used do-notation in the list monad, but eventually abandoned it in favor of explicit concatMap. For example:

s nodes = nodes ++ (s $ concatMap childrenOf nodes)

I went back to see what this would look like with do notation:

s nodes = (nodes ++) . s $ do
    n <- nodes
    childrenOf n

Meh.

Regarding the origin of the family name ‘Hooker’, I rejected Wiktionary's suggestion that it was an occupational name for a maker of hooks, and speculated that it might be a fisherman. I am still trying to figure this out. I asked about it on English Language Stack Exchange but I have not seen anything really persuasive yet. One of the answers suggests that it is a maker of hooks, spelled hocere in earlier times.

(I had been picturing wrought-iron hooks for hanging things, and wondered why the occupational term for a maker of these wasn't “Smith”. But the hooks are supposedly clothes-fastening hooks, made of bone or some similar finely-workable material.)

The OED has no record of hocere, so I've asked for access to the Dictionary of Old English Corpus of the Bodleian library. This is supposedly available to anyone for noncommercial use, but it has been eight days and they have not yet answered my request.

I will post an update, if I have anything to update.

[Other articles in category /addenda] permanent link

Fri, 04 Nov 2022

A map of Haskell's numeric types

I keep getting lost in the maze of Haskell's numeric types. Here's the map I drew to help myself out. (I think there might have been something like this in the original Haskell 98 Report.)

Ovals are typeclasses. Rectangles are types. Black mostly-straight arrows show instance relationships. Most of the defined functions have straightforward types like !!\alpha\to\alpha!! or !!\alpha\to\alpha\to\alpha!! or !!\alpha\to\alpha\to\text{Bool}!!. The few exceptions are shown by wiggly colored arrows.

Basic plan

After I had meditated for a while on this picture I began to understand the underlying organization. All numbers support !!=!! and !!\neq!!. And there are three important properties numbers might additionally have:

- Ord: ordered; supports !!\lt\leqslant\geqslant\gt!! etc.

- Fractional: supports division

- Enum: supports ‘pred’ and ‘succ’

Integral types are both Ord and Enum, but they are not Fractional because integers aren't closed under division.

Floating-point and rational types are Ord and Fractional but not Enum because there's no notion of the ‘next’ or ‘previous’ rational number.

Complex numbers are numbers but not Ord because they don't admit a total ordering. That's why Num plus Ord is called Real: it's ‘real’ as contrasted with ‘complex’.
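The split between Integral and Fractional shows up as soon as you try to divide. Here is a small sketch of that; the function names `halfIntegral`, `halfFractional`, and `clampNonNegative` are made up for the example.

```haskell
-- | Integral types divide with `div`, which discards the remainder.
halfIntegral :: Integral a => a -> a
halfIntegral n = n `div` 2

-- | Fractional types divide with (/), which Integral types do not support.
halfFractional :: Fractional a => a -> a
halfFractional x = x / 2

-- | Real is Num plus Ord, so comparison functions such as max are available.
clampNonNegative :: Real a => a -> a
clampNonNegative x = max 0 x

main :: IO ()
main = do
  print (halfIntegral (7 :: Int))          -- 3
  print (halfFractional (7 :: Double))     -- 3.5
  print (halfFractional (7 :: Rational))   -- 7 % 2
  print (clampNonNegative (-5 :: Integer)) -- 0
```

Asking for `halfFractional (7 :: Int)` is a type error, since Int has no Fractional instance.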

More stuff

That's the basic scheme. There are some less-important elaborations:

Real plus Fractional is called RealFrac.

Fractional numbers can be represented as exact rationals or as floating point. In the latter case they are instances of Floating. The Floating types are required to support a large family of functions like !!\log, \sin,!! and π.

You can construct a Ratio a type for any a; that's a fraction whose numerators and denominators are values of type a. If you do this, the Ratio a that you get is a Fractional, even if a wasn't one. In particular, Ratio Integer is called Rational and is (of course) Fractional.
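To make the Ratio and Floating elaborations concrete, here is a short sketch using the standard Data.Ratio module. The names `oneThird`, `exactSum`, and `circleArea` are hypothetical, chosen only for this example.

```haskell
import Data.Ratio (Ratio, denominator, numerator, (%))

-- | Ratio can be built over a type other than Integer, such as Int.
oneThird :: Ratio Int
oneThird = 1 % 3

-- | Rational is a synonym for Ratio Integer; its arithmetic is exact.
exactSum :: Rational
exactSum = 1 % 3 + 1 % 6

-- | Floating supplies pi and the transcendental functions.
circleArea :: Floating a => a -> a
circleArea r = pi * r * r

main :: IO ()
main = do
  print (numerator oneThird, denominator oneThird) -- (1,3)
  print exactSum                                   -- 1 % 2
  print (circleArea (1 :: Double))
```

Note that `circleArea` cannot be applied to a Rational: Rational is Fractional but not Floating.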

Shuff that don't work so good

Complex Int and Complex Rational look like they should exist, but they don't really. Complex a is only an instance of Num when a is floating-point. This means you can't even do 3 :: Complex Int, because there's no definition of fromInteger.

You can construct values of type Complex Int, but you can't do anything with them, not even addition and subtraction. I think the root of the problem is that Num requires an abs function, and for complex numbers you need the sqrt function to be able to compute abs.

Complex Int could in principle support most of the functions required by Integral (such as div and mod) but Haskell forecloses this too because its definition of Integral requires Real as a prerequisite.
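A small sketch of what this looks like in practice, using the standard Data.Complex module. The helper `addComponents` is hypothetical; it is just the componentwise sum one would have to write by hand, not anything the library provides.

```haskell
import Data.Complex (Complex (..))

-- | Constructing a Complex Int is allowed...
z :: Complex Int
z = 3 :+ 4

-- ...but `z + z` is rejected by the type checker, because the Num
-- instance for Complex a requires a to be a RealFloat type.
-- A hand-written componentwise sum (hypothetical helper) still works:
addComponents :: Num a => Complex a -> Complex a -> Complex a
addComponents (a :+ b) (c :+ d) = (a + c) :+ (b + d)

-- | With a floating-point component type the Num instance exists,
-- so numeric literals and (+) work directly.
w :: Complex Double
w = (3 :+ 4) + 1

main :: IO ()
main = do
  print (addComponents z z) -- 6 :+ 8
  print w                   -- 4.0 :+ 4.0
```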

You are only allowed to construct Ratio a if a is integral. Mathematically this is a bit odd. There is a generic construction, called the field of quotients, which takes a ring and turns it into a field, essentially by considering all the formal fractions !!\frac ab!! (where !!b\ne 0!!), and with !!\frac ab!! considered equivalent to !!\frac{a'}{b'}!! exactly when !!ab' = a'b!!. If you do this with the integers, you get the rational numbers; if you do it with a ring of polynomials, you get a field of rational functions, and so on. If you do it to a ring that's already a field, it still works, and the field you get is trivially isomorphic to the original one. But Haskell doesn't allow it.
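For illustration, the field-of-quotients idea can be sketched directly, with equality defined by the cross-multiplication rule above. This is only a toy: the type `Frac` and the functions `addFrac` and `mulFrac` are hypothetical, nothing checks that denominators are nonzero, and it stands in for any Num type rather than a proper ring abstraction.

```haskell
-- | A formal fraction a/b. The denominator is assumed to be nonzero;
-- nothing here enforces that.
data Frac a = Frac a a
  deriving Show

-- | a/b and a'/b' are equivalent exactly when a*b' == a'*b.
instance (Eq a, Num a) => Eq (Frac a) where
  Frac a b == Frac a' b' = a * b' == a' * b

-- | Addition and multiplication by the usual schoolbook rules.
addFrac :: Num a => Frac a -> Frac a -> Frac a
addFrac (Frac a b) (Frac c d) = Frac (a * d + c * b) (b * d)

mulFrac :: Num a => Frac a -> Frac a -> Frac a
mulFrac (Frac a b) (Frac c d) = Frac (a * c) (b * d)

main :: IO ()
main = do
  -- Over the integers this behaves like the rationals: 1/2 + 1/3 == 5/6.
  print (addFrac (Frac 1 2) (Frac 1 3) == (Frac 5 6 :: Frac Integer))
  -- Over a type that is already a field, the construction still works.
  print (Frac 1.5 0.5 == (Frac 3 1 :: Frac Double))
```

Unlike Ratio, this toy never reduces fractions to lowest terms, so it needs no gcd and places no Integral requirement on the component type.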

I had another couple of pages written about yet more ways in which the numeric class hierarchy is a mess (the draft title of this article was "Haskell's numbers are a hot mess") but I'm going to cut the scroll here and leave the hot mess for another time.

[ Addendum: Updated SVG and PNG to version 1.1. ]

[Other articles in category /prog/haskell] permanent link

Mon, 31 Oct 2022

Content warning: something here to offend almost everyone

A while back I complained that there were no emoji portraits of U.S. presidents. Not that a Chester A. Arthur portrait would see a lot of use. But some of the others might come in handy.

I couldn't figure them all out. I have no idea what a Chester Arthur emoji would look like. And I assigned 🧔🏻 to all three of Garfield, Harrison, and Hayes, which I guess is ambiguous but do you really need to be able to tell the difference between Garfield, Harrison, and Hayes? I don't think you do. But I'm pretty happy with most of the rest.

| President | Emoji |
|---|---|
| George Washington | 💵 |
| John Adams | |
| Thomas Jefferson | 📜 |
| James Madison | |
| James Monroe | |
| John Quincy Adams | 🍐 |
| Andrew Jackson | |
| Martin Van Buren | 🌷 |
| William Henry Harrison | 🪦 |
| John Tyler | |
| James K. Polk | |
| Zachary Taylor | |
| Millard Fillmore | ⛽ |
| Franklin Pierce | |
| James Buchanan | |
| Abraham Lincoln | 🎭 |
| Andrew Johnson | 💩 |
| Ulysses S. Grant | 🍸 |
| Rutherford B. Hayes | 🧔🏻 |
| James Garfield | 🧔🏻 |
| Chester A. Arthur | |
| Grover Cleveland | 🔂 |
| Benjamin Harrison | 🧔🏻 |
| Grover Cleveland | 🔂 |
| William McKinley | |
| Theodore Roosevelt | 🧸 |
| William Howard Taft | 🛁 |
| Woodrow Wilson | 🎓 |
| Warren G. Harding | 🫖 |
| Calvin Coolidge | 🙊 |
| Herbert Hoover | ⛺ |
| Franklin D. Roosevelt | 👨‍🦽 |
| Harry S. Truman | 🍄 |
| Dwight D. Eisenhower | 🪖 |
| John F. Kennedy | 🍆 |
| Lyndon B. Johnson | 🗳️ |
| Richard M. Nixon | 🐛 |
| Gerald R. Ford | 🏈 |
| Jimmy Carter | 🥜 |
| Ronald Reagan | 💸 |
| George H. W. Bush | 👻 |
| William J. Clinton | 🎷 |
| George W. Bush | 👞 |
| Barack Obama | 🇰🇪 |
| Donald J. Trump | 🍊 |
| Joseph R. Biden | 🕶️ |

Honorable mention: Benjamin Franklin 🪁

Dishonorable mention: J. Edgar Hoover 👚

If anyone has better suggestions I'm glad to hear them. Note that I considered, and rejected, 🎩 for Lincoln because it doesn't look like his actual hat. And I thought maybe McKinley should be 🏔️ but since they changed the name of the mountain back I decided to save it in case we ever elect a President Denali.

(Thanks to Liam Damewood for suggesting Harding, and to Colton Jang for Clinton's saxophone.)

Addenda

20221106

Twitter user Simon suggests emoji for UK prime ministers.

20221108

Rasmus Villemoes makes a good suggestion of 😼 for Garfield. I had considered this angle, but abandoned it because there was no way to be sure that the cat would be orange, overweight, or grouchy. Also the 🧔🏻 thing is funnier the more it is used. But I had been unaware that there is CAT FACE WITH WRY SMILE until M. Villemoes brought it to my attention, so maybe. (Had there been an emoji resembling a lasagna I would have chosen it instantly.)

20221108

January First-of-May has suggested 🌷 for Maarten van Buren, a Dutch-American whose first language was not English but Dutch. Let it be so!

20221218

Lorrie Kim reminded me that an old friend of ours, a former inhabitant of New Orleans, used to pass through Jackson Square, see the big statue of Andrew Jackson, and reflect gleefully on how he was covered in pigeon droppings. Lorrie suggested that I add a pigeon to the list above.

However, while there is a generic "bird" emoji, it is not specifically a pigeon. There are also three kinds of baby chicks; chickens both male and female; doves, ducks, eagles, flamingos, owls, parrots, peacocks, penguins, swans and turkeys; also single eggs and nests with multiple eggs. But no pigeons.

20250205

I got Anthropic's LLM, Claude, to help me with these, and it had some excellent suggestions.

[Other articles in category /history] permanent link

Sun, 30 Oct 2022

A while back, discussing Vladimir Putin (not putain) I said

In English we don't seem to be so quivery. Plenty of people are named “Hoare”. If someone makes a joke about the homophone, people will just conclude that they're a boor.

Today I remembered Frances Trollope and her son Anthony Trollope. Where does the name come from? Surely it's not occupational?

Happily no, just another coincidence. According to Wikipedia it is a toponym, referring to a place called Troughburn in Northumberland, which was originally known as Trolhop, “troll valley”. Sir Andrew Trollope is known to have had the name as long ago as 1461.

According to the Times of London, Joanna Trollope, a 6th-generation descendant of Frances, once recalled

a night out with a “very prim and proper” friend who had the surname Hoare. The friend was dismayed by the amusement she caused in the taxi office when she phoned to book a car for Hoare and Trollope.

I guess the common name "Hooker" is occupational, perhaps originally referring to a fisherman.

[ Frances Trollope previously on this blog: [1] [2] ]

[ Addendum: Wiktionary says that Hooker is occupational, a person who makes hooks. I find it surprising that this would be a separate occupation. And what kind of hooks? I will try to look into this later. ]

[Other articles in category /lang] permanent link

Sun, 23 Oct 2022

This search algorithm is usually called "group testing"

Yesterday I described an algorithm that locates the ‘bad’ items among a set of items, and asked:

does this technique have a name? If I wanted to tell someone to use it, what would I say?

The answer is: this is group testing, or, more exactly, the “binary splitting” version of adaptive group testing, in which we are allowed to adjust the testing strategy as we go along. There is also non-adaptive group testing in which we come up with a plan ahead of time for which tests we will perform.

I felt kinda dumb when this was pointed out, because:

- A typical application (and indeed the historically first application) is for disease testing

- My previous job was working for a company doing high-throughput disease testing

- I found out about the job when one of the senior engineers there happened to overhear me musing about group testing

- Not only did I not remember any of this when I wrote the blog post, I even forgot about the disease testing application while I was writing the post!

Oh well. Thanks to everyone who wrote in to help me! Let's see, that's Drew Samnick, Shreevatsa R., Matt Post, Matt Heilige, Eric Harley, Renan Gross, and David Eppstein. (Apologies if I left out your name, it was entirely unintentional.)

I also asked:

Is the history of this algorithm lost in time, or do we know who first invented it, or at least wrote it down?

Wikipedia is quite confident about this:

The concept of group testing was first introduced by Robert Dorfman in 1943 in a short report published in the Notes section of Annals of Mathematical Statistics. Dorfman's report – as with all the early work on group testing – focused on the probabilistic problem, and aimed to use the novel idea of group testing to reduce the expected number of tests needed to weed out all syphilitic men in a given pool of soldiers.

Eric Harley said:

[It] doesn't date back as far as you might think, which then makes me wonder about the history of those coin weighing puzzles.

Yeah, now I wonder too. Surely there must be some coin-weighing puzzles in Sam Loyd or H.E. Dudeney that predate Dorfman?

Dorfman's original algorithm is not the one I described. He divides the items into fixed-size groups of n each, and if a group of n contains a bad item, he tests the n items individually. My proposal was to always split the group in half. Dorfman's two-pass approach is much more practical than mine for disease testing, where the test material is a body fluid sample that may involve a blood draw or sticking a swab in someone's nose, where the amount of material may be limited, and where each test offers a chance to contaminate the sample.
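To make the contrast concrete, here is a rough sketch of both strategies. It assumes a test is just a predicate that says whether a group contains any bad item; the names `GroupTest`, `twoPass`, and `binarySplit` are hypothetical, and the binary-splitting version is deliberately naive (it retests both halves rather than inferring anything from earlier results).

```haskell
-- | A group test: True when the group contains at least one bad item.
type GroupTest a = [a] -> Bool

-- | Split a list into consecutive chunks of n items (the last may be shorter).
-- A nonsensical chunk size (n < 1) yields the whole list as one chunk.
chunksOf :: Int -> [a] -> [[a]]
chunksOf _ [] = []
chunksOf n xs
  | n < 1 = [xs]
  | otherwise = h : chunksOf n t
  where
    (h, t) = splitAt n xs

-- | Two-pass scheme in the style of Dorfman: test fixed-size groups,
-- then test every member of each group that came back positive.
twoPass :: Int -> GroupTest a -> [a] -> [a]
twoPass n test items =
  [x | g <- chunksOf n items, test g, x <- g, test [x]]

-- | Binary splitting: test the group; if it is positive, split it in
-- half and recurse on each half until single items remain.
binarySplit :: GroupTest a -> [a] -> [a]
binarySplit _ [] = []
binarySplit test items
  | not (test items) = []
  | [x] <- items = [x]
  | otherwise = binarySplit test left ++ binarySplit test right
  where
    (left, right) = splitAt (length items `div` 2) items

main :: IO ()
main = do
  let bad = [3, 7] :: [Int]
      test group = any (`elem` bad) group
  print (twoPass 4 test [1 .. 10])   -- [3,7]
  print (binarySplit test [1 .. 10]) -- [3,7]
```

Both find the same bad items; they differ in how many tests they spend and in how many times a given sample has to be handled, which is the practical point made above.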

Wikipedia has an article about a more sophisticated version of the binary-splitting algorithm I described. The theory is really interesting, and there are many ingenious methods.

Thanks to everyone who wrote in. Also to everyone who did not. You're all winners.

[ Addendum 20221108: January First-of-May has brought to my attention section 5c of David Singmaster's Sources in Recreational Mathematics, which has notes on the known history of coin-weighing puzzles. To my surprise, there is nothing there from Dudeney or Loyd; the earliest references are from the American Mathematical Monthly in 1945. I am sure that many people would be interested in further news about this. ]

[Other articles in category /prog] permanent link

Fri, 21 Oct 2022

More notes on deriving Applicative from Monad

A year or two ago I wrote about what you do if you already have a Monad and you need to define an Applicative instance for it. This comes up in converting old code that predates the incorporation of Applicative into the language: it has these monad instance declarations, and newer compilers will refuse to compile them because you are no longer allowed to define a Monad instance for something that is not an Applicative. I complained that the compiler should be able to infer this automatically, but it does not.

My current job involves Haskell programming and I ran into this issue again in August, because I understood monads but at that point I was still shaky about applicatives. This is a rough edit of the notes I made at the time about how to define the Applicative instance if you already understand the Monad instance.

pure is easy: it is identical to return.

Now suppose we have >>=: how can we get <*>? As I eventually figured out last time this came up, there is a simple solution:

    fc <*> vc = do
        f <- fc
        v <- vc
        return $ f v

or equivalently:

    fc <*> vc = fc >>= \f -> vc >>= \v -> return $ f v

And in fact there is at least one other way to define it that is just as good:

    fc <*> vc = do
        v <- vc
        f <- fc
        return $ f v

(Control.Applicative.Backwards provides a Backwards constructor that reverses the order of the effects in <*>.)

I had run into this previously and written a blog post about it. At that time I had wanted the second <*>, not the first.

The issue came up again in August because, as an exercise, I was trying to implement the StateT state transformer monad constructor from scratch. (I found this very educational. I had written State before, but StateT was an order of magnitude harder.)

I had written this weird piece of code:

    instance Applicative f => Applicative (StateT s f) where
        pure a = StateT $ \s -> pure (s, a)
        stf <*> stv = StateT $
            \s -> let apf = run stf s
                      apv = run stv s
                  in liftA2 comb apf apv where
                      comb = \(s1, f) (s2, v) -> (s1, f v)  -- s1? s2?

It may not be obvious why this is weird. Normally the definition of <*> would look something like this:

    stf <*> stv = StateT $
        \s0 -> let (s1, f) = run stf s0
                   (s2, v) = run stv s1
               in (s2, f v)

This runs stf on the initial state, yielding f and a new state s1, then runs stv on the new state, yielding v and a final state s2. The end result is f v and the final state s2.

Or one could just as well run the two state-changing computations in the opposite order:

    stf <*> stv = StateT $
        \s0 -> let (s1, v) = run stv s0
                   (s2, f) = run stf s1
               in (s2, f v)

which lets stv mutate the state first and gives stf the result from that.

I had been unsure of whether I wanted to run stf or stv first. I was familiar with monads, in which the question does not come up. In v >>= f you must run v first because you will pass its value to the function f. In an Applicative there is no such dependency, so I wasn't sure what I needed to do. I tried to avoid the question by running the two computations ⸢simultaneously⸣ on the initial state s0:

    stf <*> stv = StateT $
        \s0 -> let (sf, f) = run stf s0
                   (sv, v) = run stv s0
               in (sf, f v)

Trying to sneak around the problem, I was caught immediately, like a small child hoping to exit a room unseen but only getting to the doorway. I could run the computations ⸢simultaneously⸣ but on the very next line I still had to say what the final state was in the end: the one resulting from computation stf or the one resulting from computation stv. And whichever I chose, I would be discarding the effect of the other computation.

My co-worker Brandon Chinn opined that this must violate one of the applicative functor laws. I wasn't sure, but he was correct. This implementation of <*> violates the applicative “interchange” law that requires:

    f <*> pure x == pure ($ x) <*> f

Suppose f updates the state from !!s_0!! to !!s_f!!. pure x and pure ($ x), being pure, leave it unchanged.

My proposed implementation of <*> above runs the two computations and then updates the state to whatever was the result of the left-hand operand, sf, discarding any updates performed by the right-hand one. In the case of f <*> pure x the update from f is accepted and the final state is !!s_f!!. But in the case of pure ($ x) <*> f the left-hand operand doesn't do an update, and the update from f is discarded, so the final state is !!s_0!!, not !!s_f!!. The interchange law is violated by this implementation.

(Of course we can't rescue this by yielding (sv, f v) in place of (sf, f v); the problem is the same. The final state is now the state resulting from the right-hand operand alone, !!s_0!! on the left side of the law and !!s_f!! on the right-hand side.)
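The argument above can be checked mechanically. What follows is a small model in Python rather than Haskell, chosen so that it runs with nothing but plain functions. It models plain State, not StateT over an arbitrary applicative: a stateful computation is just a function from a state to a (state, value) pair. All the names (`ap_keep_left`, `ap_sequential`, `tick_then_double`, `interchange_sides`) are hypothetical.

```python
def pure(x):
    """Leave the state unchanged and yield x."""
    return lambda s: (s, x)


def ap_keep_left(stf, stv):
    """The flawed <*>: run both operands on the SAME initial state
    and keep only the left-hand operand's final state."""
    def run(s0):
        sf, f = stf(s0)
        _sv, v = stv(s0)  # the right-hand state update is thrown away
        return (sf, f(v))
    return run


def ap_sequential(stf, stv):
    """The usual <*>: thread the state through stf, then through stv."""
    def run(s0):
        s1, f = stf(s0)
        s2, v = stv(s1)
        return (s2, f(v))
    return run


def tick_then_double(s):
    """An effectful operand: bump the state and yield a doubling function."""
    return (s + 1, lambda n: 2 * n)


def interchange_sides(ap, u, x, s0):
    """Run both sides of the interchange law, u <*> pure x and
    pure ($ x) <*> u, from the initial state s0."""
    lhs = ap(u, pure(x))(s0)
    rhs = ap(pure(lambda f: f(x)), u)(s0)
    return lhs, rhs


bad_lhs, bad_rhs = interchange_sides(ap_keep_left, tick_then_double, 5, 0)
good_lhs, good_rhs = interchange_sides(ap_sequential, tick_then_double, 5, 0)
```

Under these assumptions the two sides agree on the value but, for `ap_keep_left`, disagree on the final state (1 on the left, 0 on the right), while `ap_sequential` gives the same pair on both sides.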

Stack Overflow discussion

I worked for a while to compose a question about this for Stack Overflow, but it has been discussed there at length, so I didn't need to post anything:

- <**> is a variant of <*> with the arguments reversed. What does "reversed" mean?

- How arbitrary is the "ap" implementation for monads?

- To what extent are Applicative/Monad instances uniquely determined?

That first thread contains this enlightening comment:

Functors are generalized loops

[ f x | x <- xs];

Applicatives are generalized nested loops

[ (x,y) | x <- xs, y <- ys];

Monads are generalized dynamically created nested loops

[ (x,y) | x <- xs, y <- k x].

That middle dictum provides another way to understand why my idea of running the effects ⸢simultaneously⸣ was doomed: one of the loops has to be innermost.

The second thread above (“How arbitrary is the ap implementation for monads?”) is close to what I was aiming for in my question, and includes a wonderful answer by Conor McBride (one of the inventors of Applicative). Among other things, McBride points out that there are at least four reasonable Applicative instances consistent with the monad definition for nonempty lists. (There is a hint in his answer here.)

Another answer there sketches a proof that if the applicative “interchange” law holds for some applicative functor f, it holds for the corresponding functor which is the same except that its <*> sequences effects in the reverse order.

[Other articles in category /prog/haskell] permanent link

Thu, 20 Oct 2022

Last week I was in the kitchen and Katara tried to tell Toph a secret she didn't want me to hear. I said this was bad opsec, told them that if they wanted to exchange secrets they should do it away from me, and without premeditating it, I uttered the following:

You shouldn't talk about things you shouldn't talk about while I'm in the room while I'm in the room.

I suppose this is tautological. But it's not any sillier than Tarski's observation that "snow is white" is true exactly if snow is white, and Tarski is famous.

I've been trying to think of more examples that really work. The best I've been able to come up with is:

You shouldn't eat things you shouldn't eat because they might make you sick, because they might make you sick.

I'm trying to decide if the nesting can be repeated. Is this grammatical?

You shouldn't talk about things you shouldn't talk about things you shouldn't talk about while I'm in the room while I'm in the room while I'm in the room.

I think it isn't. But if it is, what does it mean?

[ Previously, sort of. ]

[Other articles in category /lang] permanent link

Wed, 19 Oct 2022

What's this search algorithm usually called?

Consider this problem:

Input: A set !!S!! of items, of which an unknown subset, !!S_{\text{bad}}!!, are ‘bad’, and a function, !!\mathcal B!!, which takes a subset !!S'!! of the items and returns true if !!S'!! contains at least one bad item:

$$ \mathcal B(S') = \begin{cases} \mathbf{false}, & \text{if $S'\cap S_{\text{bad}} = \emptyset$} \\ \mathbf{true}, & \text{otherwise} \\ \end{cases} $$

Output: The set !!S_{\text{bad}}!! of all the bad items.

Think of a boxful of electronic components, some of which are defective. You can test any subset of components simultaneously, and if the test succeeds you know that each of those components is good. But if the test fails all you know is that at least one of the components was bad, not how many or which ones.

The obvious method is simply to test the components one at a time:

$$ S_{\text{bad}} = \{ x\in S \mid \mathcal B(\{x\}) \} $$

This requires exactly !!|S|!! calls to !!\mathcal B!!.

But if we expect there to be relatively few bad items, we may be able to do better:

- Call !!\mathcal B(S)!!. That is, test all the components at once. If none is bad, we are done.

- Otherwise, partition !!S!! into (roughly-equal) halves !!S_1!! and !!S_2!!, and recurse.

In the worst case this takes (nearly) twice as many calls as just calling !!\mathcal B!! on the singletons. But if !!k!! items are bad it requires only !!O(k\log |S|)!! calls to !!\mathcal B!!, a big win if !!k!! is small compared with !!|S|!!.
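The halving procedure can be sketched as follows. This is an illustration in Python, not a reference implementation: `find_bad` and `make_oracle` are invented names, and `contains_bad` plays the role of !!\mathcal B!!.

```python
def find_bad(items, contains_bad):
    """Return the bad members of `items` by repeated halving."""
    items = list(items)
    if not items or not contains_bad(items):
        return []      # the whole group tested clean
    if len(items) == 1:
        return items   # a failing singleton is a bad item
    mid = len(items) // 2
    return (find_bad(items[:mid], contains_bad)
            + find_bad(items[mid:], contains_bad))


def make_oracle(bad_items):
    """Hypothetical stand-in for B that also counts its calls."""
    calls = {"n": 0}

    def contains_bad(group):
        calls["n"] += 1
        return any(x in bad_items for x in group)

    return contains_bad, calls


contains_bad, calls = make_oracle({3, 700})
found = find_bad(range(1024), contains_bad)
```

In this example, with 1024 items of which two are bad, the oracle is called 39 times rather than 1024.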

My question is: does this technique have a name? If I wanted to tell someone to use it, what would I say?

It's tempting to say "binary search" but it's not very much like binary search. Binary search finds a target value in a sorted array. If !!S!! were an array sorted by badness we could use something like binary search to locate the first bad item, which would solve this problem. But !!S!! is not a sorted array, and we are not really looking for a target value.

Is the history of this algorithm lost in time, or do we know who first invented it, or at least wrote it down? I think it sometimes pops up in connection with coin-weighing puzzles.

[ Addendum 20221023: this is the pure binary-splitting variation of adaptive group testing. I wrote a followup. ]

[Other articles in category /prog] permanent link

Tue, 18 Oct 2022

In Perl I would often write a generic tree search function:

    # Perl
    sub search {
      my ($is_good, $children_of, $root) = @_;
      my @queue = ($root);
      return sub {
        while (1) {
          return unless @queue;
          my $node = shift @queue;
          push @queue, $children_of->($node);
          return $node if $is_good->($node);
        }
      }
    }

For example, see Higher-Order Perl, section 5.3.

To use this, we provide two callback functions. $is_good checks whether the current item has the properties we were searching for. $children_of takes an item and returns its children in the tree.

The search function returns an iterator object, which, each time it is called, returns a single item satisfying the $is_good predicate, or undef if none remains. For example, this searches the space of all strings over abc for palindromic strings:

    # Perl
    my $it = search(sub { $_[0] eq reverse $_[0] },
                    sub { return map "$_[0]$_" => ("a", "b", "c") },
                    "");

    # Test with defined(): the first palindrome found is the empty
    # string, which is false and would otherwise end the loop at once.
    while (defined(my $pal = $it->())) {
      print $pal, "\n";
    }

Many variations of this are possible. For example, replacing push with unshift changes the search from breadth-first to depth-first. Higher-Order Perl shows how to modify it to do heuristically-guided search.

I wanted to do this in Haskell, and my first try didn’t work at all:

    -- Haskell
    search1 :: (n -> Bool) -> (n -> [n]) -> n -> [n]
    search1 isGood childrenOf root =
      s [root]
        where
          s nodes = do
            n <- nodes
            filter isGood (s $ childrenOf n)

There are two problems with this. First, the filter is in the wrong place. It says that the search should proceed downward only from the good nodes, and stop when it reaches a not-good node.

To see what's wrong with this, consider a search for palindromes. The string ab isn't a palindrome, so the search would be cut off at ab, and never proceed downward to find aba or abccbccba. It should be up to childrenOf to decide how to continue the search. If the search should be pruned at a particular node, childrenOf should return an empty list of children. The isGood callback has no role here.

But the larger problem is that in most cases this function will compute forever without producing any output at all, because the call to s recurses before it returns even one list element.

Here’s the palindrome example in Haskell:

    palindromes = search isPalindrome extend ""
      where
        isPalindrome s = (s == reverse s)
        extend s = map (s ++) ["a", "b", "c"]

This yields a big fat !!\huge \bot!!: it does nothing, until memory is exhausted, and then it crashes.

My next attempt looked something like this:

    search2 :: (n -> Bool) -> (n -> [n]) -> n -> [n]
    search2 isGood childrenOf root = filter isGood $ s [root]
      where
        s nodes = do
          n <- nodes
          n : (s $ childrenOf n)

The filter has moved outward, into a single final pass over the generated tree. And s now returns a list that at least has the node n on the front, before it recurses. If one doesn’t look at the nodes after n, the program doesn’t make the recursive call.

The palindromes program still isn’t right though. take 20 palindromes produces:

    ["","a","aa","aaa","aaaa","aaaaa","aaaaaa","aaaaaaa","aaaaaaaa",
     "aaaaaaaaa", "aaaaaaaaaa","aaaaaaaaaaa","aaaaaaaaaaaa",
     "aaaaaaaaaaaaa","aaaaaaaaaaaaaa", "aaaaaaaaaaaaaaa",
     "aaaaaaaaaaaaaaaa","aaaaaaaaaaaaaaaaa", "aaaaaaaaaaaaaaaaaa",
     "aaaaaaaaaaaaaaaaaaa"]

It’s doing a depth-first search, charging down the leftmost branch to infinity. That’s because the list returned from s (a:b:rest) starts with a, then has the descendants of a, before continuing with b and b's descendants. So we get all the palindromes beginning with "a" before any of the ones beginning with "b", and similarly all the ones beginning with "aa" before any of the ones beginning with "ab", and so on.

I needed to convert the search to breadth-first, which is memory-expensive but at least visits all the nodes, even when the tree is infinite:

    search3 :: (n -> Bool) -> (n -> [n]) -> n -> [n]
    search3 isGood childrenOf root = filter isGood $ s [root]
      where
        s nodes = nodes ++ (s $ concat (map childrenOf nodes))

This worked. I got a little lucky here, in that I had already had the idea to make s :: [n] -> [n] rather than the more obvious s :: n -> [n]. I had done that because I wanted to do the n <- nodes thing, which is no longer present in this version. But it’s just what we need, because we want s to return a list that has all the nodes at the current level (nodes) before it recurses to compute the nodes farther down. Now take 20 palindromes produces the answer I wanted:

    ["","a","b","c","aa","bb","cc","aaa","aba","aca","bab","bbb","bcb",
     "cac", "cbc","ccc","aaaa","abba","acca","baab"]

While I was writing this version I vaguely wondered if there was something that combines concat and map, but I didn’t follow up on it until just now. It turns out there is and it’s called concatMap. 😛

    search3' :: (n -> Bool) -> (n -> [n]) -> n -> [n]
    search3' isGood childrenOf root = filter isGood $ s [root]
      where
        s nodes = nodes ++ (s $ concatMap childrenOf nodes)

So this worked, and I was going to move on. But then a brainwave hit me: Haskell is a lazy language. I don’t have to generate and filter the tree at the same time. I can generate the entire (infinite) tree and filter it later:

    -- breadth-first tree search
    bfsTree :: (n -> [n]) -> [n] -> [n]
    bfsTree childrenOf nodes =
        nodes ++ bfsTree childrenOf (concatMap childrenOf nodes)

    search4 isGood childrenOf root =
        filter isGood $ bfsTree childrenOf [root]

This is much better because it breaks the generation and filtering into independent components, and also makes clear that searching is nothing more than filtering the list of nodes. The interesting part of this program is the breadth-first tree traversal, and the tree traversal part now has only two arguments instead of three; the filter operation afterwards is trivial. Tree search in Haskell is mostly tree, and hardly any search!
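The same generator-and-selector split can be sketched in other languages that have lazy sequences. Here is a rough Python analogue, using a generator for the infinite breadth-first traversal and the built-in `filter` as the selector. The names `bfs_tree`, `extend`, and `is_palindrome` are chosen for this illustration, and an explicit queue replaces the recursive list definition.

```python
from collections import deque
from itertools import islice


def bfs_tree(children_of, roots):
    """Lazily yield every node of the (possibly infinite) tree,
    breadth-first."""
    queue = deque(roots)
    while queue:
        node = queue.popleft()
        yield node
        queue.extend(children_of(node))


def extend(s):
    """Children of a string: the string with one more letter appended."""
    return [s + c for c in "abc"]


def is_palindrome(s):
    return s == s[::-1]


# The generator builds the whole infinite tree on demand ...
all_strings = bfs_tree(extend, [""])
# ... and the selector is an independent filter over it.
palindromes = filter(is_palindrome, all_strings)
first_20 = list(islice(palindromes, 20))
```

Taking the first 20 results gives the same list that take 20 palindromes produced above.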

With this refactoring we might well decide to get rid of search entirely:

    palindromes4 = filter isPalindrome allStrings
      where
        isPalindrome s = (s == reverse s)
        allStrings = bfsTree (\s -> map (s ++) ["a", "b", "c"]) [""]

And then I remembered something I hadn’t thought about in a long, long time:

[Lazy evaluation] makes it practical to modularize a program as a generator that constructs a large number of possible answers, and a selector that chooses the appropriate one.

That's exactly what I was doing and what I should have been doing all along. And it ends:

Lazy evaluation is perhaps the most powerful tool for modularization … the most powerful glue functional programmers possess.

(“Why Functional Programming Matters”, John Hughes, 1990.)

I felt a little bit silly, because I wrote a book about lazy functional programming and yet somehow, it’s not the glue I reach for first when I need glue.

[ Addendum 20221023: somewhere along the way I dropped the idea of using the list monad for the list construction, instead using explicit map and concat. But it could be put back. For example:

    s nodes = (nodes ++) . s $ do
        n <- nodes
        childrenOf n

I don't think this is an improvement on just using concatMap. ]

[Other articles in category /prog/haskell] permanent link

Thu, 13 Oct 2022

Today I realized I'm annoyed by the word "stethoscope". "Scope" is Greek for "look at". The telescope is for looking at far things (τῆλε). The microscope is for looking at small things (μικρός). The endoscope is for looking inside things (ἔνδον). The periscope is for looking around things (περί). The stethoscope is for looking at chests (στῆθος).

Excuse me? The hell it is! Have you ever tried looking through a stethoscope? You can't see for shit.

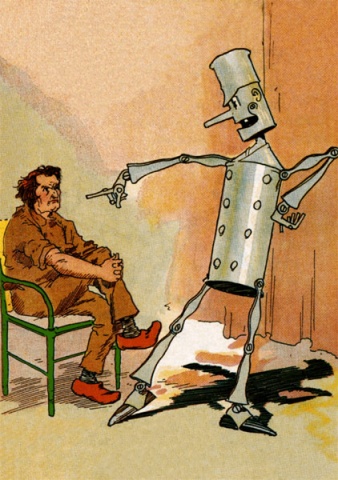

It should obviously have been called the stethophone.

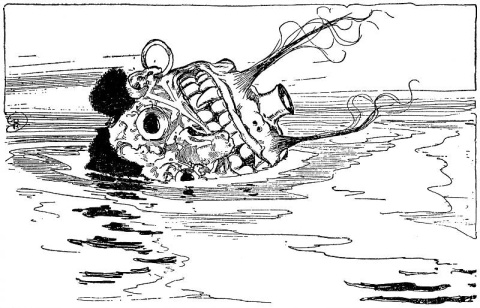

(It turns out that “stethophone” was adopted as the name for a later elaboration of the stethoscope, shown at right, that can listen to two parts of the chest at the same time, and deliver the sounds to different ears.)

Stethophone illustration is in the public domain, via Wikipedia.

[Other articles in category /lang/etym] permanent link

Mon, 12 Sep 2022

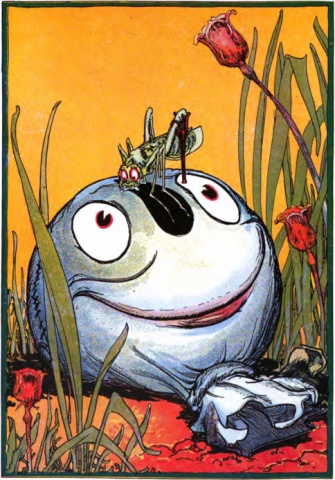

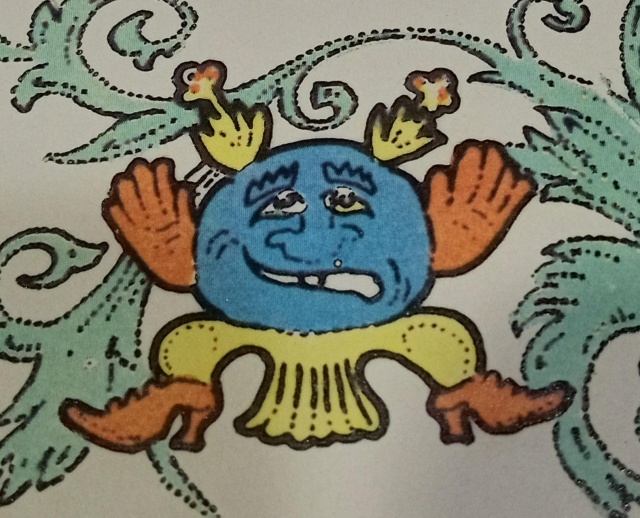

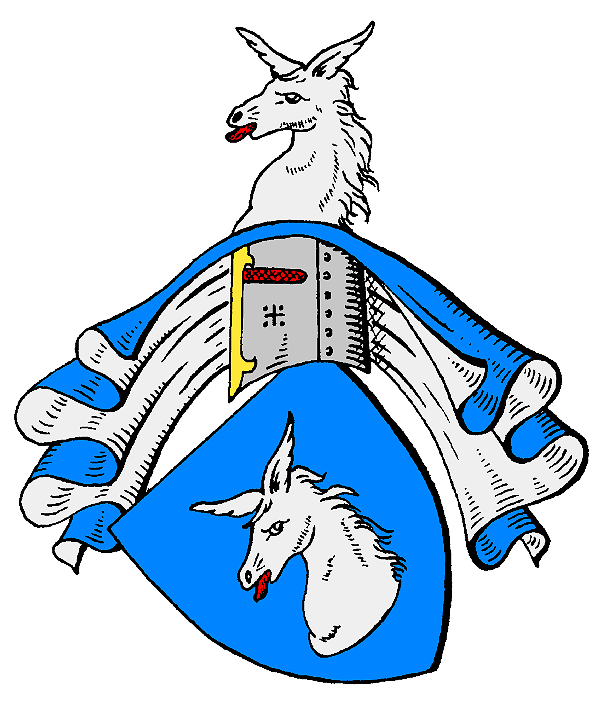

Coat of arms of Zeppelin-Wappen

"Can we choose a new coat of arms?"

"What's wrong with the one we have?"

"What's wrong with it? It's a nauseated goat!"

"I don't know what your problem is, it's been the family crest for generations."

"Please, I'm begging."

"Okay, how about a compromise: I'll get a new coat of arms, but it must include a reference to the old one."

"I guess I can live with that."

[ Source: Wikipedia ]

[Other articles in category /misc] permanent link

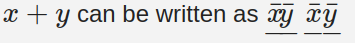

Thu, 08 Sep 2022

When Albino Luciani was crowned Pope, he chose his papal name by concatenating the names of his two predecessors, John XXIII and Paul VI, to become John Paul. He died shortly after, and was succeeded by Karol Wojtyła who also took the name John Paul. Wojtyła missed a great opportunity to adopt Luciani's strategy. Had he concatenated the names of his predecessors, he would have been Paul John Paul. In this alternate universe his successor, Benedict XVI, would have been John Paul Paul John Paul, and the current pope, Francis, would have been Paul John Paul John Paul Paul John Paul. Each pope would have had a unique name, at the minor cost of having the names increase exponentially in length.
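The naming rule is the same recurrence that generates the Fibonacci numbers, applied to strings, so the number of words in each name follows the Fibonacci sequence. A quick sketch in Python, where `concatenated_names` is a name invented for this illustration:

```python
def concatenated_names(first, second, count):
    """Each new name is the two preceding names joined, older one first."""
    names = [first, second]
    while len(names) < count:
        names.append(names[-2] + " " + names[-1])
    return names[:count]


papal_names = concatenated_names("John", "Paul", 6)
word_counts = [len(name.split()) for name in papal_names]
```

Starting from John and Paul, the next four names are the ones listed above, and their lengths in words are 2, 3, 5, and 8.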

(Now I wonder if any dynasty has ever adopted the less impractical strategy of naming their rulers after binary numerals, say:

King Juan

King Juan Cyril

King Juan Juan

King Juan Cyril Cyril

King Juan Cyril Juan

King Juan Juan Cyril

(etc)

Or perhaps !!0, 1, 00, 01, 10, 11, 000, \ldots!!. There are many variations, some actually reasonable.)
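For the record, the first scheme is easy to mechanize. This sketch assumes that Juan stands for the binary digit 1 and Cyril for 0, which is what the list above suggests; `binary_name` is a made-up helper.

```python
def binary_name(n, one="Juan", zero="Cyril"):
    """Spell out the binary numeral for n, one name per digit."""
    return " ".join(one if bit == "1" else zero for bit in format(n, "b"))


kings = ["King " + binary_name(n) for n in range(1, 7)]
```

The name of the !!n!!th king has about !!\log_2 n!! words, so the names grow only logarithmically with the length of the dynasty.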

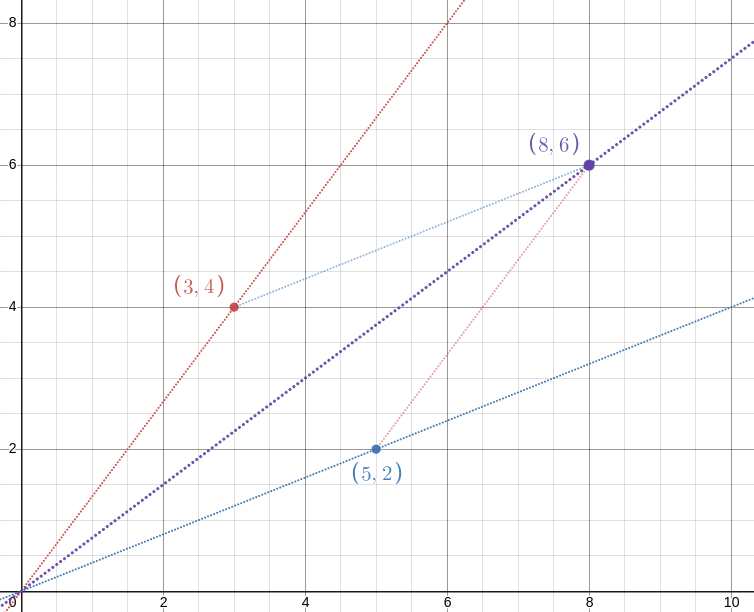

I sometimes fantasize that Philadelphia-area Interstate highways are going to do this. The main east-west highway around here is I-76. (Not so-called, as many imagine, in honor of Philadelphia's role in the American revolution of 1776, but simply because it lies south of I-78 and north of I-74 and I-70.) A connecting segment that branches off of I-76 is known as I-676. Driving to one or the other I often see signs that offer both:

I often fantasize that this is a single sign for I-76676, and that this implies an infinite sequence of highways designated I-67676676, I-7667667676676, and so on.

Finally, I should mention the cleverly-named fibonacci salad, which you make by combining the leftovers from yesterday's salad and the previous day's.

[ Addendum: Do you know the name of the swagman in Waltzing Matilda? It's Juan. The song says so: “Juan's a jolly swagman…” ]

[Other articles in category /math] permanent link

Wed, 06 Jul 2022

Things I wish everyone knew about Git (Part II)

This is a writeup of a talk I gave in December for my previous employer. It's long so I'm publishing it in several parts:

- Part I:

- Part II (you are here):

- More coming later:

- Branches are fictitious

- Committing partial changes

- Push and fetch; tracking branches

- Aliases and custom commands

The most important material is in Part I.

It is really hard to lose stuff

A Git repository is an append-only filesystem. You can add snapshots of files and directories, but you can't modify or delete anything. Git commands sometimes purport to modify data. For example git commit --amend suggests that it amends a commit. It doesn't. There is no such thing as amending a commit; commits are immutable.

Rather, it writes a completely new commit, and then kinda turns its back on the old one. But the old commit is still in there, pristine, forever.

In a Git repository you can lose things, in the sense of forgetting where they are. But they can almost always be found again, one way or another, and when you find them they will be exactly the same as they were before. If you git commit --amend and change your mind later, it's not hard to get the old ⸢unamended⸣ commit back if you want it for some reason.

If you have the SHA for a file, it will always be the exact same version of the file with the exact same contents.

If you have the SHA for a directory (a “tree” in Git jargon) it will always contain the exact same versions of the exact same files with the exact same names.

If you have the SHA for a commit, it will always contain the exact same metainformation (description, when made, by whom, etc.) and the exact same snapshot of the entire file tree.

Objects can have other names and descriptions that come and go, but the SHA is forever.

(There's a small qualification to this: if the SHA is the only way to refer to a certain object, if it has no other names, and if you haven't used it for a few months, Git might discard it from the repository entirely.)

But what if you do lose something?

There are many good answers to this question but I think the one to know first is git-reflog, because it covers the great majority of cases.

The git-reflog command means:

“List the SHAs of commits I have visited recently”

When I run git reflog the top of the output says what commits I had checked out recently, with the top line being the commit I have checked out right now:

523e9fa1 HEAD@{0}: checkout: moving from dev to pasha

5c31648d HEAD@{1}: pull: Fast-forward

07053923 HEAD@{2}: checkout: moving from pr2323 to dev

...

The last thing I did was check out the branch named pasha; its tip commit is at 523e9fa1.

Before that, I did git pull and Git updated my local dev branch from the remote one, updating it to 5c31648d.

Before that, I had switched to dev from a different branch, pr2323. At that time, before the pull, dev referred to commit 07053923.

Farther down in the output are some commits I visited last August:

...

58ec94f6 HEAD@{928}: pull --rebase origin dev: checkout 58ec94f6d6cb375e09e29a7a6f904e3b3c552772

e0cfbaee HEAD@{929}: commit: WIP: model classes for condensedPlate and condensedRNAPlate

f8d17671 HEAD@{930}: commit: Unskip tests that depend on standard seed data

31137c90 HEAD@{931}: commit (amend): migrate pedigree tests into test/pedigree

a4a2431a HEAD@{932}: commit: migrate pedigree tests into test/pedigree

1fe585cb HEAD@{933}: checkout: moving from LAB-808-dao-transaction-test-mode to LAB-815-pedigree-extensions

...

Suppose I'm caught in some horrible Git nightmare. Maybe I deleted the entire test suite or accidentally put my Small Wonder fanfic into a commit message or overwrote the report templates with 150 gigabytes of goat porn. I can go back to how things were before. I look in the reflog for the SHA of the commit just before I made my big blunder, and then:

git reset --hard 881f53fa

Phew, it was just a bad dream.

(Of course, if my colleagues actually saw the goat porn, it can't fix that.)

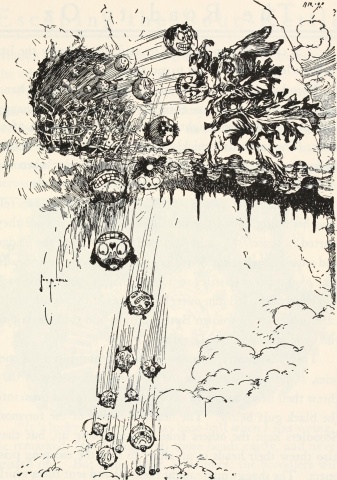

I would like to nominate Wile E. Coyote to be the mascot of Git. Because Wile E. is always getting himself into situations like this one:

But then, in the next scene, he is magically unharmed. That's Git.

Finding old stuff with git-reflog

- git reflog by itself lists the places that HEAD has been

- git reflog some-branch lists the places that some-branch has been

- That HEAD@{1} thing in the reflog output is another way to name that commit if you don't want to use the SHA.

- You can abbreviate it to just @{1}.

- The following locutions can be used with any git command that wants you to identify a commit:

  - @{17} (HEAD as it was 17 actions ago)

  - @{18:43} (HEAD as it was at 18:43 today)

  - @{yesterday} (HEAD as it was 24 hours ago)

  - dev@{'3 days ago'} (dev as it was 3 days ago)

  - some-branch@{'Aug 22'} (some-branch as it was last August 22)

  (Use with git-checkout, git-reset, git-show, git-diff, etc.)

- Also useful:

      git show dev@{'Aug 22'}:path/to/some/file.txt

  “Print out that file, as it was on dev, as dev was on August 22”

It's all still in there.

What if you can't find it?

Don't panic! Someone with more experience can probably find it for you. If you have a local Git expert, ask them for help.

And if they are busy and can't help you immediately, the thing you're looking for won't disappear while you wait for them. The repository is append-only. Every version of everything is saved. If they could have found it today, they will still be able to find it tomorrow.

(Git will eventually throw away lost and unused snapshots, but typically not anything you have used in the last 90 days.)

What if you regret something you did?

Don't panic! Git can probably put it back the way it was.

Git leaves a trail

When you make a commit, Git prints something like this:

your-topic-branch 4e86fa23 Rework foozle subsystem

If you need to find that commit again, the SHA 4e86fa23 is in your terminal scrollback.

When you fetch a remote branch, Git prints:

6e8fab43..bea7535b dev -> origin/dev

What commit was origin/dev before the fetch? At 6e8fab43. What commit is it now? bea7535b.

What if you want to look at how it was before? No problem, 6e8fab43 is still there. It's not called origin/dev any more, but the SHA is forever. You can still check it out and look at it:

git checkout -b how-it-was-before 6e8fab43

What if you want to compare how it was with how it is now?

git log 6e8fab43..bea7535b

git show 6e8fab43..bea7535b

git diff 6e8fab43..bea7535b

Git tries to leave a trail of breadcrumbs in your terminal. It's constantly printing out SHAs that you might want again.

A few things can be lost forever!

After all that talk about how Git will not lose things, I should point out the exceptions. The big exception is that if you have created files or made changes in the working tree, Git is unaware of them until you have added them with git-add. Until then, those changes are in the working tree but not in the repository, and if you discard them Git cannot help you get them back.

Good advice is: commit early and often. If you don't commit, at least add changes with git-add. Files added but not committed are saved in the repository, although they can be hard to find because they haven't been packaged into a commit with a single SHA id.

Some people automate this: they have a process that runs every few minutes and commits the current working tree to a special branch that they look at only in case of disaster.

The dangerous commands are git-reset and git-checkout, which modify the working tree, and so might wipe out changes that aren't in the repository. Git will try to warn you before doing something destructive to your working tree changes.

git-rev-parse

We saw a little while ago that Git's language for talking about commits and files is quite sophisticated:

my-topic-branch@{'Aug 22'}:path/to/some/file.txt

Where is this language documented? Maybe not where you would expect: it's in the manual for git-rev-parse.

The git rev-parse command is less well-known than it should be. It takes a description of some object and turns it into a SHA.

Why is that useful? Maybe it isn't, but the git-rev-parse man page explains the syntax of the descriptions Git understands.

A good habit is to skim over the manual every few months. You'll pick up something new and useful every time.

My favorite is that if you use the syntax :/foozle you get the most recent commit, reachable from any ref, whose message matches foozle. For example:

git show :/foozle

or

git log :/introduce..:/remove

Coming next week (probably), a few miscellaneous matters about using Git more effectively.

[Other articles in category /prog/git] permanent link

Wed, 29 Jun 2022

Things I wish everyone knew about Git (Part I)

This is a writeup of a talk I gave in December for my previous employer. It's long so I'm publishing it in several parts:

- Part I (you are here):

- Part II: (coming later)

- More coming later still:

- Branches are fictitious

- Committing partial changes

- Push and fetch; tracking branches

- Aliases and custom commands

How to approach Git; general strategy

Git has an elegant and powerful underlying model based on a few simple concepts:

- Commits are immutable snapshots of the repository

- Branches are named sequences of commits

- Every object has a unique ID, derived from its content

Built atop this elegant system is a flaming trash pile.

The command set wasn't always well thought out, and then over the years it grew by accretion, with new stuff piled on top of old stuff that couldn't be changed because Backward Compatibility. The commands are non-orthogonal and when two commands perform the same task they often have inconsistent options or are described with different terminology. Even when the individual commands don't conflict with one another, they are often badly-designed and confusing. The documentation is often very poorly written.

What this means

With a lot of software, you can opt to use it at a surface level without understanding it at a deeper level:

“I don't need to know how it works. I just want to know which commands to run.”

This is often an effective strategy, but with Git, this does not work.

You can't “just know which commands to run” because the commands do not make sense!

To work effectively with Git, you must have a model of what the repository is like, so that you can formulate questions like “is the repo in this state or that state?” and “the repo is in this state, how do I get it into that state?”. At that point you look around for a command that answers your question, and there are probably several ways to do what you want.

But if you try to understand the commands without the model, you will suffer, because the commands do not make sense.

Just a few examples:

- git-reset does up to three different things, depending on flags

- git-checkout is worse

- The opposite of git-push is not git-pull, it's git-fetch

- etc.

If you try to understand the commands without a clear idea of the model, you'll be perpetually confused about what is happening and why, and you won't know what questions to ask to find out what is going on.

READ THIS

When I first used Git it drove me almost to tears of rage and frustration. But I did get it under control. I don't love Git, but I use it every day, by choice, and I use it effectively.

The magic key that rescued me was John Wiegley's Git From the Bottom Up

Git From the Bottom Up explains the model. I read it. After that I wept no more. I understood what was going on. I knew how to try things out and how to interpret what I saw. Even when I got a surprise, I had a model to fit it into.

That's the best advice I have. Read Wiegley's explanation. Set aside time to go over it carefully and try out his examples. It fixed me.

If I were going to tell every programmer just one thing about Git, that would be it.

The rest of this series is all downhill from here.

But if I were going to tell everyone just one more thing, it would be:

It is very hard to permanently lose work.

If something seems to have gone wrong, don't panic.

Remain calm and ask an expert.

Many more details about that are in the followup article.

[Other articles in category /prog/git] permanent link

Thu, 02 Jun 2022

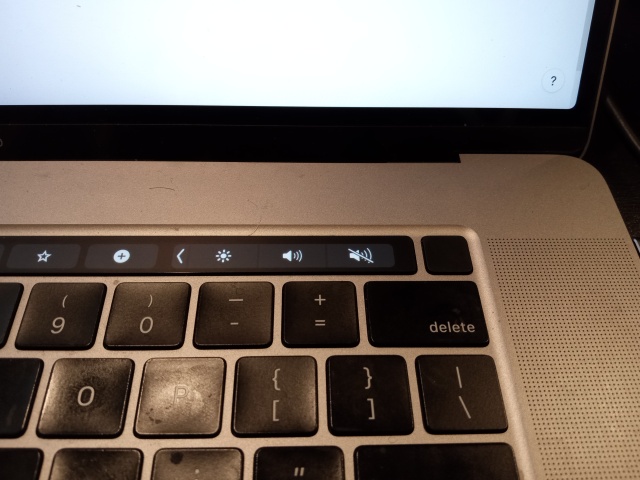

Disabling the awful Macbook screen lock key

(The actual answer is at the very bottom of the article, if you want to skip my complaining.)

My new job wants me to do my work on a Macbook Pro, which in most ways is only a little more terrible than the Linux laptops I am used to. I don't love anything about it, and one of the things I love the least is the Mystery Key. It's the blank one above the delete key:

This is sometimes called the power button, and sometimes the TouchID. It is a sort of combined power-lock-unlock button. It has something to do with turning the laptop on and off, putting it to sleep and waking it up again, if you press it in the right way for the right amount of time. I understand that it can also be trained to recognize my fingerprints, which sounds like something I would want to do only a little more than stabbing myself in the eye with a fork.

If you tap the mystery button momentarily, the screen locks, which is very convenient, I guess, if you have to pee a lot. But they put the mystery button right above the delete key, and several times a day I fat-finger the delete key, tap the corner of the mystery button, and the screen locks. Then I have to stop what I am doing and type in my password to unlock the screen again.

No problem, I will just turn off that behavior in the System Preferences. Ha ha, wrong‑o. (Pretend I inserted a sub-article here about the shitty design of the System Preferences app, I'm not in the mood to actually do it.)

Fortunately there is a discussion of the issue on the Apple community support forum. It was posted nearly a year ago, and 316 people have pressed the button that says "I have this question too". But there is no answer. YAAAAAAY community support.

Here it is again. 292 more people have this question. This time there is an answer!

practice will teach your muscle memory from avoiding it.

This question was tough to search for. I found a lot of questions about disabling touch ID, about configuring the touch ID key to lock the screen, basically every possible incorrect permutation of what I actually wanted. I did eventually find what I wanted on Stack Exchange and on Quora — but no useful answers.

There was a discussion of the issue on Reddit:

How do you turn off the lock screen when you press the Touch ID button on MacBook Pro. Every time I press the Touch ID button it locks my screen and its super irritating. how do I disable this?

I think the answer might be my single favorite Reddit comment ever:

My suggestion would be not to press it unless you want to lock the screen. Why do you keep pressing it if that does something you don't want?

Victory!

I did find a solution! The key to the mystery was provided by Roslyn Chu. She suggested this page from 2014 which has an incantation that worked back in ancient times.

That incantation didn't work on my computer, but it put me on the trail to the right one. I did need to use the defaults command and operate on the com.apple.loginwindow thing, but the property name had changed since 2014. There seems to be no way to interrogate the meaningful property names; you can set anything you want, just like Unix environment variables. But the current developer documentation for the com.apple.loginwindow thing has the current list of properties and one of them is the one I want.

To fix it, run the following command in a terminal:

defaults write com.apple.loginwindow DisableScreenLockImmediate -bool yes

The documentation claims it will work on macOS 10.13 and later; it did work on my 12.4 system.

Something something famous Macintosh user experience.

[ The cartoon is from howfuckedismydatabase.com. ]

[ Update 20250130: While looking for the answer I found a year-old post on Reddit asking for the same thing, but with no answer. So when I learned the answer, I posted it there. Now, three years later still, I have a grateful reply from someone who needed the same formula! ]

[Other articles in category /tech] permanent link

Sat, 28 May 2022

“Llaves” and other vanishing consonants

Lately I asked:

Where did the ‘c’ go in llave (“key”)? It's from Latin clavīs…

Several readers wrote in with additional examples, and I spent a little while scouring Wiktionary for more. I don't claim that this list is at all complete; I got bored partway through the Wiktionary search results.

| Spanish | English | Latin antecedent |

|---|---|---|

| llagar | to wound | plāgāre |

| llama | flame | flamma |

| llamar | to summon, to call | clāmāre |

| llano | flat, level | plānus |

| llantén | plantain | plantāgō |

| llave | key | clavis |

| llegar | to arrive, to get, to be sufficient | plicāre |

| lleno | full | plēnus |

| llevar | to take | levāre |

| llorar | to cry out, to weep | plōrāre |

| llover | to rain | pluere |

I had asked:

Is this the only Latin word that changed ‘cl’ → ‘ll’ as it turned into Spanish, or is there a whole family of them?

and the answer is no, not exactly. It appears that llave and llamar are the only two common examples. But there are many examples of the more general phenomenon that

(consonant) + ‘l’ → ‘ll’

including quite a few examples where the consonant is a ‘p’.

Spanish-related notes

Eric Roode directed me to this discussion of “Latin CL to Spanish LL” on the WordReference.com language forums. It also contains discussion of analogous transformations in Italian. For example, instead of plānus → llano, Italian has → piano.

Alex Corcoles advises me that Fundéu often discusses this sort of issue on the Fundéu web site, and also responds to this sort of question on their Twitter account. Fundéu is the Foundation of Emerging Spanish, a collaboration with the Royal Spanish Academy that controls the official Spanish language standard.

Several readers pointed out that although llave is the key that opens your door, the word for musical keys and for encryption keys is still clave. There is also a musical instrument called the claves, and an associated technical term for the rhythmic role they play. Clavícula (‘clavicle’) has also kept its ‘c’.

The connection between plicāre and llegar is not at all clear to me. Plicāre means “to fold”; English cognates include ‘complicated’, ‘complex’, ‘duplicate’, ‘two-ply’, and, farther back, ‘plait’. What this has to do with llegar (‘to arrive’) I do not understand. Wiktionary has a long explanation that I did not find convincing.

The levāre → llevar example is a little weird. Wiktionary says "The shift of an initial 'l' to 'll' is not normal".

Llaves also appears to be the Spanish name for the curly brace characters { and }. (The square brackets are corchetes.)

Not related to Spanish

The llover example is a favorite of the Universe of Discourse, because Latin pluere is the source of the English word plover.

French parler (‘to talk’) and its English descendants ‘parley’ and ‘parlor’ are from Latin parabola.

Latin plōrāre (‘to cry out’) is obviously the source of English ‘implore’ and ‘deplore’. But less obviously, it is the source of ‘explore’. The original meaning of ‘explore’ was to walk around a hunting ground, yelling to flush out the hidden game.

English ‘autoclave’ is also derived from clavis, but I do not know why.

Wiktionary's advanced search has options to order results by “relevance” and last-edited date, but not alphabetically!

Thanks

- Thanks to readers Michael Lugo, Matt Hellige, Leonardo Herrera, Leah Neukirchen, Eric Roode, Brent Yorgey, and Alex Corcoles for hints, clues, and references.

[ Addendum: Andrew Rodland informs me that an autoclave is so-called because the steam pressure inside it forces the door lock closed, so that you can't scald yourself when you open it. ]

[ Addendum 20230319: llevar, to rise, is akin to the English place name Levant which refers to the region around Syria, Israel, Lebanon, and Palestine: the “East”. (The Catalán word llevant simply means “east”.) The connection here is that the east is where the sun (and everything else in the sky) rises. We can see the same connection in the way the word “orient”, which also means an eastern region, is from Latin orior, “to rise”. ]

[Other articles in category /lang/etym] permanent link

Thu, 26 May 2022

Quick Spanish etymology question

Where did the ‘c’ go in llave (“key”)? It's from Latin clavīs, like in “clavicle”, “clavichord”, “clavier” and “clef”.

Is this the only Latin word that changed ‘cl’ → ‘ll’ as it turned into Spanish, or is there a whole family of them?

[ Addendum 20220528: There are more examples. ]

[Other articles in category /lang/etym] permanent link

Sat, 21 May 2022

Sometime in the previous millennium, my grandfather told me this joke:

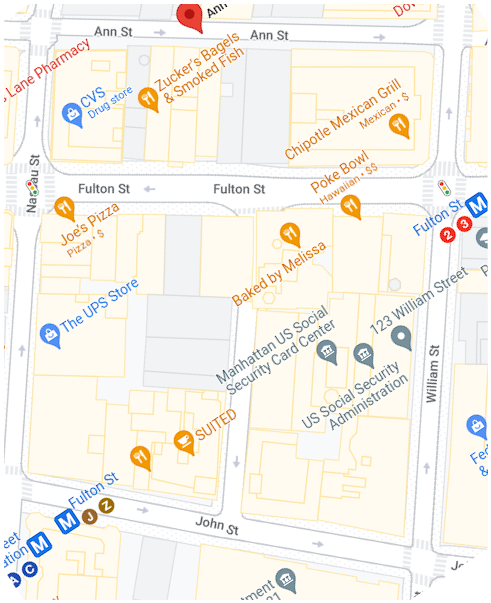

Why is Fulton Street the hottest street in New York?

Because it lies between John and Ann.

I suppose this might have been considered racy back when he heard it from his own grandfather. If you didn't get it, don't worry, it wasn't actually funny.

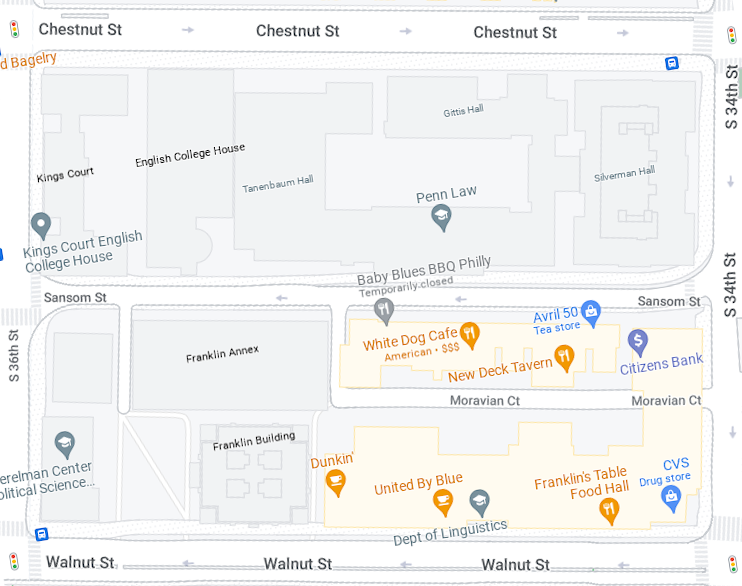

Today I learned the Philadelphia version of the joke, which is a little better:

What's long and black and lies between two nuts?

Sansom Street.

I think that the bogus racial flavor improves it (it looks like it might turn out to be racist, and then doesn't). Some people may be more sensitive; to avoid making them uncomfortable, one can replace the non-racism with additional non-obscenity and ask instead “what's long and stiff and lies between two nuts?”.

There was a “what's long and stiff” joke I heard when I was a kid:

What's long and hard and full of semen?

A submarine.

Eh, okay. My opinion of puns is that they can be excellent, when they are served hot and fresh, but they rapidly become stale and heavy, they are rarely good the next day, and the prepackaged kind is never any good at all.

The antecedents of the “what's long and stiff” joke go back hundreds of years. The Exeter Book, dating to c. 950 CE, contains among other things ninety riddles, including this one I really like:

A curious thing hangs by a man's thigh,

under the lap of its lord. In its front it is pierced,

it is stiff and hard, it has a good position.

When the man lifts his own garment

above his knee, he intends to greet

with the head of his hanging object that familiar hole

which is the same length, and which he has often filled before.

(The implied question is “what is it?”.)

The answer is of course a key. Wikipedia has the original Old English if you want to compare.

Finally, it is off-topic but I do not want to leave the subject of the Exeter Book riddles without mentioning riddle #86. It goes like this:

Wiht cwom gongan

þær weras sæton

monige on mæðle,

mode snottre;

hæfde an eage

ond earan twa,