Mark Dominus (陶敏修)

mjd@pobox.com


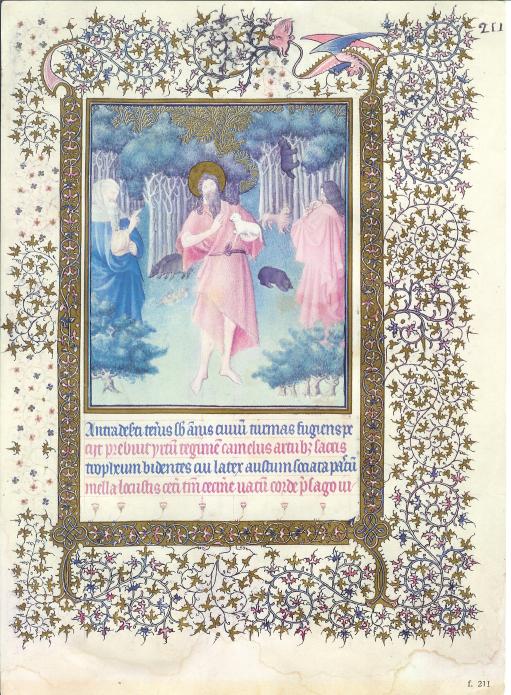

Sun, 31 Dec 2006

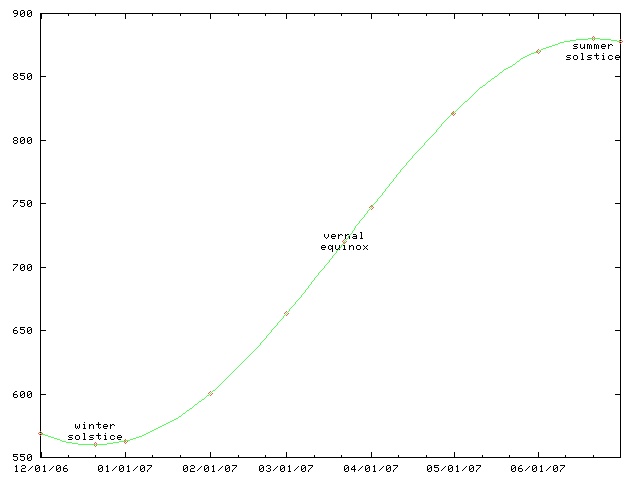

Daylight chart

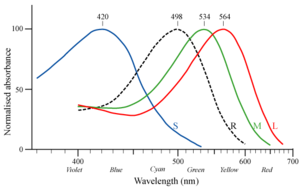

My wife is always sad this time of the year because the days are so short and dark. I made a chart like this one a couple of years ago so that she could see the daily progress from dark to light as the winter wore on to spring and then summer.

This chart only works for Philadelphia and other places at the same latitude, such as Boulder, Colorado. For higher latitudes, the y axis must be stretched. For lower latitudes, it must be squished. In the southern hemisphere, the whole chart must be flipped upside-down.

(The chart will work for places inside the Arctic or Antarctic circles, if you use the correct interpretations for y values greater than 1440 minutes and less than 0 minutes.)

This time of year, the length of the day is only increasing by about thirty seconds per day. (Decreasing, if you are in the southern hemisphere.) On the equinox, the change is fastest, about 165 seconds per day. (At latitudes 40°N and 40°S.) After that, the rate of increase slows, until it reaches zero on the summer solstice; then the days start getting shorter again.
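The rates quoted above can be checked with a rough calculation. The sketch below is not how the chart was drawn; it assumes the textbook sunrise equation and a simple cosine model of the sun's declination, and it ignores atmospheric refraction and the size of the sun's disk, so its figures are only approximate. All the function names are made up for illustration.

```python
import math

OBLIQUITY_DEG = 23.44      # tilt of the earth's axis (assumed constant)
DAYS_PER_YEAR = 365.24

def declination(days_since_winter_solstice):
    """Approximate solar declination in radians (simple cosine model)."""
    angle = 2 * math.pi * days_since_winter_solstice / DAYS_PER_YEAR
    return -math.radians(OBLIQUITY_DEG) * math.cos(angle)

def day_length_minutes(latitude_deg, days_since_winter_solstice):
    """Minutes of daylight, from the sunrise equation cos(h) = -tan(lat) * tan(decl)."""
    x = -math.tan(math.radians(latitude_deg)) * math.tan(
        declination(days_since_winter_solstice))
    # Inside the polar circles x can leave [-1, 1]: the sun never sets or
    # never rises. Clamping reports 1440 or 0 minutes in those cases.
    x = max(-1.0, min(1.0, x))
    return 1440 * math.acos(x) / math.pi

def daily_change_seconds(latitude_deg, day):
    """Change in day length from one day to the next, in seconds."""
    return 60 * (day_length_minutes(latitude_deg, day + 1)
                 - day_length_minutes(latitude_deg, day))

if __name__ == "__main__":
    for label, day in [("ten days after the winter solstice", 10),
                       ("spring equinox", 91)]:
        print(label, round(daily_change_seconds(40, day)), "seconds/day")
```

Under these assumptions the results for 40°N should land in the same ballpark as the figures above, and passing a negative latitude flips the sign of the change, as expected for the southern hemisphere.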

As with all articles in the physics section of my blog, readers are cautioned that I do not know what I am talking about, although I can spin a plausible-sounding line of bullshit. For this article, readers are additionally cautioned that I failed observational astronomy twice in college.

[Other articles in category /physics] permanent link

Sat, 30 Dec 2006

Notes on Neal Stephenson's Baroque novels

Earlier this year I was reading books by Robert Hooke, John Wilkins, Sir Thomas Browne, and other Baroque authors; people kept writing to me to advise me to read Neal Stephenson's "Baroque cycle", in which Hooke and Wilkins appear as characters.

I ignored this advice for a while, because those books are really fat, and because I hadn't really liked the other novels of Stephenson's that I'd read.

But I do like Stephenson's non-fiction. His long, long article about undersea telecommunications cables was one of my favorite reads of 1996, and I still remember it years later and reread it every once in a while. I find his interminable meandering pointless and annoying in his fiction, where I'm not sure why I should care about all the stuff he's describing. When the stuff is real, it's a lot easier to put up with it.

My problems with Stephenson's earlier novels, The Diamond Age and Snow Crash, will probably sound familiar: they're too long; they're disorganized; they don't have endings; too many cannons get rolled onstage and never fired.

Often "too long" is a pinheaded criticism, and when I see it I'm immediately wary. How long is "too long"? It calls to mind the asinine complaint from Joseph II that Mozart's music had "too many notes". A lot of people who complain that some book is "too long" just mean that they were too lazy to commit the required energy. When I say that Stephenson's earlier novels were "too long", I mean that he had more good ideas than he could use, and put a lot of them into the books even when they didn't serve the plot or the setting or the characters. A book is like a house. It requires a plan, and its logic dictates portions of the plan. You don't put in eleven bathtubs just because you happen to have them lying around, and you don't stick Ionic columns on the roof just because Home Depot had a sale on Ionic columns the week you were building it.

So the first thing about Stephenson's Baroque Trilogy is that it's not actually a trilogy. Like The Lord of the Rings, it was published in three volumes because of physical and commercial constraints. But the division into three volumes is essentially arbitrary.

The work totals about 2,700 pages. Considered as a trilogy, this is three very long books. Stephenson says in the introduction that it is actually eight novels, not three. He wants you to believe that he has actually written eight middle-sized books. But he hasn't; he is lying, perhaps in an attempt to shut up the pinheads who complain that his books are "too long". This is not eight middle-sized books. It is one extremely long book.

The narrative of the Baroque cycle is continuous, following the same characters from about 1650 up through about 1715. There is a framing story, introduced in the first chapters, which is followed by a flashback that lasts about 1,600 pages. Events don't catch up to the frame story until the third volume. If you consider Quicksilver to be a novel, the opening chapters are entirely irrelevant. If you consider it to be three novels, the opening chapters of the first novel are entirely irrelevant. It starts nowhere and ends nowhere, a vermiform appendix. But as a part of a single novel, it's not vestigial at all; it's a foreshadowing of later developments, which are delivered in volume III, or book 6, depending on how you count.

Another example: The middle volume, titled The Confusion, alternates chapters from two of the eight "novels" that make up the cycle. Events in these two intermingled ("con-fused") novels take place concurrently. Stephenson claims that they are independent, but they aren't.

So from now on I'm going to drop the pretense that this is a trilogy or a "cycle", and I'm just going to call this novel the "Baroque novel".

This was quite a surprise to me. The world is full of incoherent ramblers, and most of them, if you really take the time to listen to them carefully, and at length, turn out to be completely full of shit. You get nothing but more incoherence.

Stephenson at 600 pages is a semi-coherent rambler; to really get what he is saying, you have to turn him up to 2,700 pages. Most people would have been 4.5 times as incoherent; Stephenson is at least 4.5 times as lucid. His ideas are great; he just didn't have enough space to explain them before! The Baroque novel has a single overarching theme, which is the invention of the modern world. One of the strands of this theme is the invention of science, and the modern conception of science; another is the invention of money, and the modern conception of money.

I've written before about what I find so interesting about the Baroque thinkers. Medieval and even Renaissance thought seems very alien to me. In the Baroque writers, I have the first sense of real understanding, of people grappling with the same sorts of problems that I do, in the same sorts of ways. For example, I've written before about John Wilkins' attempt to manufacture a universal language of thought. People are still working on this. Many of the particular features of Wilkins' attempt come off today as crackpottery, but to the extent that they do, it's only because we know now that these approaches won't work. And the reason we know that today is that Wilkins tried those approaches in 1668 and it didn't work.

I find that almost all of Stephenson's annoying habits are much less annoying in the context of historical fiction. For example, many plot threads are left untied at the end. Daniel Waterhouse (fictional) becomes involved with Thomas Newcomen (real) and his Society for the Raising of Water by Fire. (That is, using steam engines to pump water out of mines.) This society figures in the plot of the last third of the novel, but what becomes of it? Stephenson drops it; we don't find out. In a novel, this would be annoying. But in a work of historical fiction, it's no problem, because we know what became of Newcomen and his steam engines: They worked well enough for pumping out coal mines, where a lot of coal was handy to fire them, and well enough to prove the concept, which really took off around 1775 when a Scot named James Watt made some major improvements. Sometime later, there were locomotives and nuclear generating plants. You can read all about it in the encyclopedia.

Another way in which Stephenson's style works better in historical fiction than in speculative fiction is in his long descriptions of technologies and processes. When they're fictitious technologies and imaginary processes, it's just wankery, a powerful exercise of imagination for no real purpose. Well, maybe the idea will work, and maybe it won't, and it is necessarily too vague to really give you a clear idea of what is going on. But when the technologies are real ones, the descriptions are illuminating and instructive. You know that the idea will work. The description isn't vague, because Stephenson had real source material to draw on, and even if you don't get a clear idea, you can go look up the details yourself, if you want. And Stephenson is a great explainer. As I said before, I love his nonfiction articles.

A lot of people complain that his novels don't have good endings. He's gotten better at wrapping things up, and to the extent that he hasn't, that's all right, because, again, the book is a historical novel, and history doesn't wrap up. The Baroque novel deals extensively with the Hanoverian succession to the English throne. Want to know what happened next? Well, you probably do know: a series of Georges, Queen Victoria, et cetera, and here we are. And again, if you want, the details are in the encyclopedia.

So I really enjoyed this novel, even though I hadn't liked Stephenson's earlier novels. As I was reading it, I kept thinking how glad I was that Stephenson had finally found a form that suits his talents and his interests.

[ Addendum 20170728: I revisited some of these thoughts in connection with Stephenson's 2015 novel Seveneves. ]

[ Addendum 20191216: I revived an article I wrote in 2002 about Stephenson's first novel The Big U. ]

[Other articles in category /book] permanent link

Wed, 27 Dec 2006

Linogram development: 20061227 Update

Defective constraints (see yesterday's article) are now handled. So the example from yesterday's article has now improved to:

    define regular_polygon[N] closed {
      param number radius, rotation=0;
      point vertex[N], center;
      line edge[N];
      constraints {
        vertex[i] = center + radius * cis(rotation + 360*i/N);
        edge[i].start = vertex[i];
        edge[i].end = vertex[i+1];
      }
    }

[Other articles in category /linogram] permanent link

Tue, 26 Dec 2006

Linogram development: 20061226 Update

I have made significant progress on the "arrays" feature. I recognized that it was actually three features; I have two of them implemented now. Taking the example from further down the page:

    define regular_polygon[N] closed {
      param number radius, rotation=0;
      point vertex[N], center;
      line edge[N];
      constraints {
        vertex[i] = center + radius * cis(rotation + 360*i/N);
        edge[i].start = vertex[i];
        edge[i].end = vertex[i+1];
      }
    }

The stuff in green is working just right; the stuff in red is not.

The following example works just fine:

    number p[3];
    number q[3];
    constraints {
      p[i] = q[i];
      q[i] = i*2;
    }

What's still missing? Well, if you write

    number p[3];
    number q[3];
    constraints {
      p[i] = q[i+1];
    }

This should imply three constraints on elements of p and q:

    p₀ = q₁
    p₁ = q₂
    p₂ = q₃

The third of these is defective, because there is no q₃. If the figure is "closed" (which is the default) the subscripts should wrap around, turning the defective constraint into p₂ = q₀ instead. If the figure is declared "open", the defective constraint should simply be discarded.
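The wrap-or-discard rule can be sketched in a few lines. This is only an illustration of the rule as described here, not linogram's actual implementation (which is in Perl); the function name and its arguments are hypothetical.

```python
def expand_constraint(n, offset, closed=True):
    """Expand p[i] = q[i+offset] for i in 0..n-1 into (p_index, q_index) pairs.

    Hypothetical helper for illustration; not linogram's actual code.
    """
    pairs = []
    for i in range(n):
        j = i + offset
        if 0 <= j < n:
            pairs.append((i, j))        # ordinary constraint
        elif closed:
            pairs.append((i, j % n))    # defective: wrap the subscript around
        # open figure: the defective constraint is simply discarded
    return pairs

if __name__ == "__main__":
    print(expand_constraint(3, 1, closed=True))   # [(0, 1), (1, 2), (2, 0)]
    print(expand_constraint(3, 1, closed=False))  # [(0, 1), (1, 2)]
```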

The syntax for parameterized definitions (define regular_polygon[N] { ... }) is still a bit up in the air; I am now leaning toward a syntax that looks more like define regular_polygon { param index N; ... } instead.

The current work on the "arrays" feature is in the CVS repository on the branch arrays; get the version tagged tests-pass to get the most recent working version. Most of the interesting work has been on the files lib/Chunk.pm and lib/Expression.pm.

[Other articles in category /linogram] permanent link

Wed, 20 Dec 2006

Reader's disease

Reader's disease is an occupational hazard of editors and literary critics. Critics are always looking for the hidden meaning, the clever symbol, and, since they are always looking for it, they always find it, whether it was there or not. (Discordians will recognize this as a special case of the law of fives.) Sometimes the critics are brilliant, and find hidden meanings that are undoubtedly there even though the author was unaware of them; more often, they are less fortunate, and sometimes they even make fools of themselves.

The idea of reader's disease was introduced to me by professor David Porush, who illustrated it with the following anecdote. Nathaniel Hawthorne's The Scarlet Letter is prefaced by an introduction called The Custom-House, in which the narrator claims to have found documentation of Hester Prynne's story in the custom house where he works. The story itself is Hawthorne's fantasy, but the custom house is not; Hawthorne did indeed work in a custom house for many years.

Porush's anecdote concerned Mary Rudge, the daughter of Ezra Pound. Rudge, reading the preface of The Scarlet Letter, had a brilliant insight: the custom house, like so many other buildings of the era, was a frame house and was built in the shape of the letter "A". It therefore stands as a physical example of the eponymous letter.

Rudge was visiting Porush in the United States, and told him about her discovery.

"That's a great theory," said Porush, "But it doesn't look anything like a letter 'A'."

Rudge argued the point.

"Mary," said Porush, "I've seen it. It's a box."

Rudge would not be persuaded, so together they got in Porush's car and drove to Salem, Massachusetts, where the custom house itself still stands.

But Rudge, so enamored of her theory that she could not abandon it, concluded that some alternative explanation must be true: the old custom house had burnt down and been rebuilt, or the one in the book was not based on the real one that Hawthorne had worked in, or Porush had led her to the wrong building.

But anyway, all that is just to introduce my real point, which is to relate one of the most astounding examples of reader's disease I have ever encountered personally. The Mary Rudge story is secondhand; for all I know Porush made it up, or exaggerated, or I got the details wrong. But this example I am about to show you is in print, and is widely available.

I have a nice book called The Treasury of the Encyclopædia Britannica, which is a large and delightful collection of excerpts from past editions of the Britannica, edited by Clifton Fadiman. This is out of print now, but it was published in 1992 and is easy to come by.

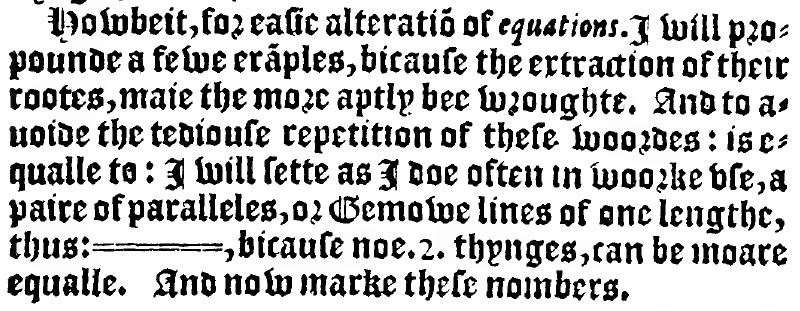

Each extract is accompanied by some introductory remarks by Fadiman and sometimes by one of the contributing editors. One long section in the book concerns early articles about human flight; in the Second Edition (published 1778-1783) there was an article on "Flying" by then editor James Tytler. Contributing editor Bruce L. Felknor's remarks include the following puzzled query:

What did Tytler mean by his interjection of "Fa" after Friar Bacon's famous and fanciful claim that man had already succeeded in flying? It hardly seems a credulous endorsement, an attitude sometimes attributed to Tytler.

Fadiman adds his own comment on this:

As for Tytler's "Fa": Could it have been an earlier version of our "Faugh!"? In any case we suddenly hear an unashamed human voice.

Gosh, what could Tytler have meant by this curious interjection? A credulous endorsement? An exclamation of disgust? An unedited utterance of the unashamed human voice? Let's have a look:

The secret consisted in a couple of large thin hollow copper-globes exhausted of air; which being much lighter than air, would sustain a chair, whereon a person might sit. Fa. Francisco Lana, in his Prodromo, proposes the same thing...

Felknor and Fadiman have mistaken "Fa" for a complete sentence. But it is apparently an abbreviation of Father Francisco Lana's ecclesiastical title.

Oops.

[Other articles in category /book] permanent link

Tue, 12 Dec 2006

ssh-agent

When you do a remote login with ssh, the program needs to use your secret key to respond to the remote system's challenge. The secret key is itself kept scrambled on the disk, to protect it from being stolen, and requires a passphrase to be unscrambled. So the remote login process prompts you for the passphrase, unscrambles the secret key from the disk, and then responds to the challenge.

Typing the passphrase every time you want to do a remote login or run a remote process is a nuisance, so the ssh suite comes with a utility program called "ssh-agent". ssh-agent unscrambles the secret key and remembers it; it then uses the key to answer challenges on behalf of any process that can contact it through a certain Unix-domain socket.

The process for login is now:

    plover% eval `ssh-agent`
    Agent pid 23918
    plover% ssh-add
    Need passphrase for /home/mjd/.ssh/identity
    Enter passphrase for mjd@plover: (supply passphrase here)
    plover% ssh remote-system-1
    (no input required here)
    ...
    plover% ssh remote-system-2
    (no input required here either)
    ...

The important thing here is that once ssh-agent is started up and supplied with the passphrase, which need be done only once, ssh itself can contact the agent through the socket and have it use the key as many times as needed.

How does ssh know where to contact the agent process? ssh-agent prints an output something like this one:

    SSH_AUTH_SOCK=/tmp/ssh-GdT23917/agent.23917; export SSH_AUTH_SOCK;
    SSH_AGENT_PID=23918; export SSH_AGENT_PID;
    echo Agent pid 23918;

The eval `...` command tells the shell to execute this output as code; this installs two variables into the shell's environment, whence they are inherited by programs run from the shell, such as ssh. When ssh runs, it looks for the SSH_AUTH_SOCK variable. Finding it, it connects to the specified socket, in /tmp/ssh-GdT23917/agent.23917, and asks the agent to answer the challenge with the required private key.

Now, suppose I log in to my home machine, say from work. The new login shell does not have any environment settings pertaining to ssh-agent. I can, of course, run a new agent for the new login shell. But there is probably an agent process running somewhere on the machine already. If I run another every time I log in, the agent processes will proliferate. Also, running a new agent requires that the new agent be supplied with the private key, which would require that I type the passphrase, which is long.

Clearly, it would be more convenient if I could tell the new shell to contact the existing agent, which is already running. I can write a command which finds an existing agent and manufactures the appropriate environment settings for contacting that agent. Or, if it fails to find an already-running agent, it can just run a new one, just as if ssh-agent had been invoked directly. The program is called ssh-findagent.

I put a surprising amount of work into this program. My first idea was that it can look through /tmp for the sockets. For each socket, it can try to connect to the socket; if it fails, the agent that used to be listening on the other end is dead, and ssh-findagent should try a different socket. If none of the sockets are live, ssh-findagent should run the real ssh-agent. But if there is an agent listening on the other end, ssh-findagent can print out the appropriate environment settings. The socket's filename has the form /tmp/ssh-XXXPID/agent.PID; this is the appropriate value for the SSH_AUTH_SOCK variable.
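The socket-probing idea can be sketched as follows. This is not the code of ssh-findagent (which was written in Perl); it is a minimal illustration, and the function names are hypothetical. It also assumes that anything accepting a connection on a matching path really is an ssh-agent, which a careful program would want to verify.

```python
import glob
import socket

def socket_is_live(path, timeout=1.0):
    """Return True if something accepts a connection on the Unix-domain socket at path."""
    s = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
    s.settimeout(timeout)
    try:
        s.connect(path)
        return True
    except OSError:
        # Connection refused, missing file, permission denied, timeout:
        # treat all of these as "no agent listening here".
        return False
    finally:
        s.close()

def find_live_agent_socket(pattern="/tmp/ssh-*/agent.*"):
    """Return the first path matching pattern that accepts connections, or None."""
    for path in sorted(glob.glob(pattern)):
        if socket_is_live(path):
            return path
    return None

if __name__ == "__main__":
    path = find_live_agent_socket()
    if path:
        print(f"SSH_AUTH_SOCK={path}; export SSH_AUTH_SOCK;")
    else:
        print("echo no live agent socket found;")
```

Note that this produces only the SSH_AUTH_SOCK setting; it says nothing about the agent's pid.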

The SSH_AGENT_PID variable turned out to be harder. I thought I would just get it from the SSH_AUTH_SOCK value with a pattern match. Wrong. The PID in the SSH_AUTH_SOCK value and in the filename is not actually the pid of the agent process. It is the pid of the parent of the agent process, which is typically, but not always, 1 less than the true pid. There is no reliable way to calculate the agent's pid from the SSH_AUTH_SOCK filename. (The mismatch seems unavoidable to me, and the reasons why seem interesting, but I think I'll save them for another blog article.)

After that I had the idea of having ssh-findagent scan the process table looking for agents. But this has exactly the reverse problem: You can find the agent processes in the process table, but you can't find out which socket files they are attached to.

My next thought was that maybe Linux supports the getpeerpid system call, which takes an open file descriptor and returns the pid of the peer process on the other end of the descriptor. Maybe it does, but probably not, because support for this system call is very rare, considering that I just made it up. And anyway, even if it were supported, it would be highly nonportable.

I had a brief hope that from a pid P, I could have ssh-findagent look in /proc/P/fd/* and examine the process file descriptor table for useful information about which process held open which file. Nope. The information is there, but it is not useful. Here is a listing of /proc/31656/fd, where process 31656 is an agent process:

    lrwx------ 1 mjd users 64 Dec 12 23:34 3 -> socket:[711505562]

File descriptors 0, 1, and 2 are missing. This is to be expected; it means that the agent process has closed stdin, stdout, and stderr. All that remains is descriptor 3, clearly connected to the socket. Which file represents the socket in the filesystem? No telling. All we have here is 711505562, which is an index into some table of sockets inside the kernel. The kernel probably doesn't know the filename either. Filenames appear in directories, where they are associated with file ID numbers (called "i-numbers" in Unix jargon). The file ID numbers can be looked up in the kernel "inode" table to find out what kind of files they are, and, in this case, what socket the file represents. So the kernel can follow the pointers from the filesystem to the inode table to the open socket table, or from the process's open file table to the open socket table, but not from the process to the filesystem.

Not easily, anyway; there is a program called lsof which grovels over the kernel data structures and can associate a process with the names of the files it has open, or a filename with the ID numbers of the processes that have it open. My last attempt at getting ssh-findagent to work was to have it run lsof over the possible socket files, getting a listing of all processes that had any of the socket files open, and, for each process, which file. When it found a process-file pair in the output of lsof, it could emit the appropriate environment settings.

The final code was quite simple, since lsof did all the heavy lifting. Here it is:

    #!/usr/bin/perl

    # Ask lsof which processes have the candidate agent sockets open.
    open LSOF, "lsof /tmp/ssh-*/agent.* |"
      or die "couldn't run lsof: $!\n";
    <LSOF>;  # discard header
    my $line = <LSOF>;
    if ($line) {
      # Field 1 is the pid; the last field is the socket's filename.
      my ($pid, $file) = (split /\s+/, $line)[1,-1];
      print "SSH_AUTH_SOCK=$file; export SSH_AUTH_SOCK;
    SSH_AGENT_PID=$pid; export SSH_AGENT_PID;
    echo Agent pid $pid;
    ";
      exit 0;
    } else {
      # lsof reported no open agent socket, so start a fresh agent.
      print "echo starting new agent\n";
      exec "ssh-agent";
      die "Couldn't run new ssh-agent: $!\n";
    }

I complained about the mismatch between the agent's pid and the pid in the socket filename on IRC, and someone there mentioned offhandedly how they solve the same problem. It's hard to figure out what the settings should be. So instead of trying to figure them out, one should save them to a file when they're first generated, then load them from the file as required.

Oh, yeah. A file. Duh.

It looks like this: Instead of loading the environment settings from ssh-agent directly into the shell, with eval `ssh-agent`, you should save the settings into a file:

    plover% ssh-agent > ~/.ssh/agent-env

Then tell the shell to load the settings from the file:

    plover% . ~/.ssh/agent-env
    Agent pid 23918
    plover% ssh remote-system-1
    (no input required here)

The . command reads the file and acquires the settings. And whenever I start a fresh login shell, it has no settings, but I can easily acquire them again with . ~/.ssh/agent-env, without running another agent process.
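The same saved file can be read by a program that was not started from such a shell, a cron job for instance. The following is a minimal sketch, assuming the file contains the Bourne-shell-style output shown earlier; the function names are hypothetical, and the parser deliberately picks out only the two variables rather than executing the file.

```python
import os
import re

# Matches lines such as "SSH_AGENT_PID=23918; export SSH_AGENT_PID;"
SETTING = re.compile(r"^(SSH_AUTH_SOCK|SSH_AGENT_PID)=([^;]+);", re.MULTILINE)

def parse_agent_env(text):
    """Extract the two variable assignments from saved ssh-agent output."""
    return dict(SETTING.findall(text))

def load_agent_env(path=os.path.expanduser("~/.ssh/agent-env"), environ=os.environ):
    """Read the saved settings and install them into environ.

    Child processes started afterwards (ssh, for example) inherit them.
    """
    with open(path) as f:
        settings = parse_agent_env(f.read())
    environ.update(settings)
    return settings

if __name__ == "__main__":
    sample = (
        "SSH_AUTH_SOCK=/tmp/ssh-GdT23917/agent.23917; export SSH_AUTH_SOCK;\n"
        "SSH_AGENT_PID=23918; export SSH_AGENT_PID;\n"
        "echo Agent pid 23918;\n"
    )
    print(parse_agent_env(sample))
```

Like the shell version, this trusts the file: if the agent has died since the file was written, the settings will point at a socket nobody is listening on.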

I wonder why I didn't think of this before I started screwing around with sockets and getpeerpid and /proc/*/fd and lsof and all that rigmarole.

Oh well, I'm not yet a perfect sage.

[ Addendum 20070105: I have revisited this subject and the reader's responses. ]

[Other articles in category /oops] permanent link

Wed, 06 Dec 2006

Serendipitous web searches

In yesterday's article about a paper of Robert French, I mentioned that I had run across it while looking for something entirely unrelated. The unrelated thing is pretty interesting itself.

Back in the 1950's and 1960's, James V. McConnell at the University of Michigan was doing some really interesting work on learning and memory in planaria flatworms, shown at right. He and his colleagues used to publish their papers in their own private journal of flatworm science, The Journal of Biological Psychology. They didn't have enough material for a full journal, so when you were done reading the Journal, you could flip it over and read the back half, which was a planaria-themed humor magazine called The Worm-Runner's Digest. I swear I'm not making this up.


(I think it's time to revive the planaria-themed humor magazine. Planaria are funny even when they aren't doing anything in particular. Look at those googly eyes!)

(For some reason I've always found planaria fascinating, and I've known about them from an early age. We would occasionally visit my cousin in Oradell, who had a stuffed toy which was probably intended to be a snake, but which I invariably identified as a flatworm. "We're going to visit your Uncle Ronnie," my parents would say, and I would reply, "Can I play with Susan's flatworm?")

Anyway, to get on with the point of this article, McConnell made the astonishing discovery that memory has an identifiable chemical basis. He trained flatworms to run mazes, and noted how long it took to do so. (The mazes were extremely simple T shapes. The planarian goes in the bottom foot of the T. Food goes in one of the top arms, always the same one. Untrained planaria swim up the T and then turn one way or the other at random; trained planaria know to head toward the arm where the food always is. Pretty impressive, for a worm.)

Then McConnell took the trained worms and ground them up and fed them to untrained worms. The untrained worms learned to run the maze a lot faster than the original worms had, apparently demonstrating that there was some sort of information in the trained worms that survived being ground up and ingested. The hypothesis was that the information was somehow encoded in RNA molecules, and could be physically transferred from one individual to another. Isn't that a wonderful dream?

You can still see echoes of this in the science fiction of the era. For example, a recurring theme in Larry Niven's early work is "memory RNA", people getting learning injections, and pills that impart knowledge when you swallow them. See A World Out of Time and The Fourth Profession, for example. And I once had a dream that I taught a giant planarian to speak Chinese, then fried it in cornmeal and ate it, after which I was able to speak Chinese. So when I say it's a wonderful dream, I'm speaking both figuratively and literally.

Unfortunately, later scientists were not able to reproduce McConnell's findings, and the "memory RNA" theory has been discredited. How to explain the cannibal flatworms' improved learning times, then? It seems to have been sloppy experimental technique. The original flatworms left some sort of chemical trail in the mazes, that remained after they had been ground up. McConnell's team didn't wash the mazes in between tests, and the cannibal flatworms were able to follow the trails later; it had nothing to do with their diet. Bummer.

So a couple of years ago I was poking around, looking for more information about this, and in particular for a copy of McConnell's famous paper Memory transfer through cannibalism in planarians, which I didn't find. But I did find the totally unrelated Robert French paper. It mentions McConnell, as an example of another cool-sounding and widely-reported theory that took a long time to dislodge, because it's hard to produce clear evidence that cannibal flatworms aren't in fact learning from their lunch meat, and because the theory that they aren't learning is so much less interesting-sounding than the theory that they are. News outlets reported a lot about the memory RNA breakthrough, and much less about the later discrediting of the theory.

French's paper, you will recall, refutes the interesting-sounding hypothesis that infants resemble their fathers more strongly than they do their mothers, and has many of the same difficulties.

There are counterexamples. Everyone seems to have heard that the Fleischmann and Pons tabletop cold fusion experiment was an error. And the Hwang Woo-Suk stem cell fraud is all over the news these days.

[Other articles in category /bio] permanent link

Tue, 05 Dec 2006

Do infants resemble their fathers more than their mothers?

Back in 1995, Christenfeld and Hill published a paper that claimed to have found evidence that infants tended to resemble their fathers more than they resembled their mothers. The evolutionary explanation for this, it was claimed, is that children who resemble their fathers are less likely to be abandoned by them, because their paternity would be less likely to be doubted. The pop science press got hold of it (several years later, as they often do) and it was widely reported for a while. Perhaps you heard about it.

A couple years ago, while looking for something entirely unrelated, I ran across the paper of French et al. titled The Resemblance of One-year-old Infants to Their Fathers: Refuting Christenfeld & Hill. French and his colleagues had tried to reproduce Christenfeld and Hill's results, with little success; they suggested that the conclusion was false, and offered a number of arguments as to why the purported resemblance should not exist. Of course, the pop science press was totally uninterested.

At the time, I thought, "Wow, I wish I had a way to get a lot of people to read this paper." Then last month I realized that my widely-read blog is just the place to do this.

Before I go on, here is the paper. I recommend it; it's good reading, and only six pages long. Here's the abstract:

In 1995 Christenfeld and Hill published a paper that purported to show at one year of age, infants resemble their fathers more than their mothers. Evolution, they argued, would have produced this result since it would ensure male parental resources, since the paternity of the infant would no longer be in doubt. We believe this result is false. We present the results of two experiments (and mention a third) which are very far from replicating Christenfeld and Hill's data. In addition, we provide an evolutionary explanation as to why evolution would not have favored the result reported by Christenfeld and Hill.

Other related material is available from Robert French's web site.

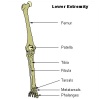

In the first study done by French, participants were presented with a 1-, 3-, or 5-year-old child's face, and the faces of either the father and two unrelated men, or the mother and two unrelated women. The participants were invited to identify the child's parent. They did indeed succeed in identifying the children's parents somewhat more often than would have been obtained by chance alone. But the participants did not identify fathers more reliably than they identified mothers.

The second study was similar, but used only 1-year-old infants. (The Christenfeld and Hill claim is that one year is the age at which children most resemble their fathers.)

French points out that although the argument from evolutionary considerations is initially attractive, it starts to disintegrate when looked at more closely. The idea is that if a child resembles its father, the father is less likely to doubt his paternity, and so is less likely to withhold resources from the child. So there might be a selection pressure in favor of resembling one's father.

But now turn this around: if a father can be sure of paternity because the children look like him, then he can also be sure when the children aren't his because they don't resemble him. This will create a very strong selection pressure in favor of children resembling their fathers. And the tendency to resemble one's father will create a positive feedback loop: the more likely kids are to look like their fathers, the more likely that children who don't resemble their fathers will be abandoned, neglected, abused, or killed. So if there is a tendency for infants to resemble their fathers more than their mothers, one would expect it to be magnified over time, and to be fairly large by now. But none of the studies (including the original Christenfeld and Hill one) found a strong tendency for children to resemble their fathers.

But, as French notes, it's hard to get people to pay attention to a negative result, to a paper that says that something interesting isn't happening.

[ Addendum 20061206: Here's the original Christenfeld and Hill paper. ]

[ Addendum 20171101: A recent web search refutes my claim that “the pop science press was totally uninterested”. Results include this article from Scientific American and a mention in the book Bumpology: The Myth-Busting Pregnancy Book for Curious Parents-To-Be. ]

[ Addendum 20171101: A more extensive study in 2004 confirmed French's results. ]

[Other articles in category /bio] permanent link

Mon, 04 Dec 2006

Clockwise

A couple of weeks ago I was over at a friend's house, and was trying to explain to her two-year-old daughter which way to turn the knob on her Etch-a-Sketch. But I couldn't tell her to turn it clockwise, because she can't tell time yet, and has no idea which way is clockwise.

It occurs to me now that I may not be giving her enough credit; she may know very well which way the clock hands go, even though she can't tell time yet. Two-year-olds are a lot smarter than most people give them credit for.

Anyway, I then began to wonder what "clockwise" and "counterclockwise" were called before there were clocks with hands that went around clockwise. But I knew the answer to that one: "widdershins" is counterclockwise; "deasil" is clockwise.

Or so I thought. This turns out not to be the answer. "Deasil" is only cited by the big dictionary back to 1771, which postdates clocks by several centuries. "Widdershins" is cited back to 1545. "Clockwise" and "counter-clockwise" are only cited back to 1888! And a full-text search for "clockwise" in the big dictionary turns up nothing else. So the question of what word people used in 1500 is still a mystery to me.

That got me thinking about how asymmetric the two words "deasil" and "widdershins" are; they have nothing to do with each other. You'd expect a matched set, like "clockwise" and "counterclockwise", or maybe something based on "left" and "right" or some other pair like that. But no. "Widdershins" means "the away direction". I thought "deasil" had something to do with the sun, or the day, but apparently not; the "dea" part is akin to dexter, the right hand, and the "sil" part is obscure. Whereas the "shins" part of "widdershins" does have something to do with the sun, at least by association. That is, it is not related historically to the sun, except that some of the people using the word "widdershins" were apparently thinking it was actually "widdersun". What a mess. And the words have nothing to do with each other anyway, as you can see from the histories above; "widdershins" is 250 years older than "deasil".

The OED also lists "sunways", but the earliest citation is the same as the one for deasil.

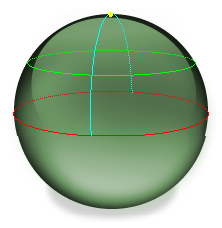

Anyway, I did not know any of this at the time, and imagined that "deasil" meant "in the direction of the sun's motion". Which it is; the sun goes clockwise through the sky, coming up on the left, rising to its twelve-o'-clock apex, and then descending on the right, the way the hands of a clock do. (Perhaps that's why the early clockmakers decided to make the hands of the clock go that way in the first place. Or perhaps it's because of the (closely related) reason that that's the direction that the shadow on a sundial moves.)

And then it hit me that in the southern hemisphere, the sun goes the other way: instead of coming up on the left, and going down on the right, the way clock hands do, it comes up on the right and goes down on the left. Wowzers! How bizarre.

I'm a bit sad that I figured this out before actually visiting the southern hemisphere and seeing it for myself, because I think I would have been totally freaked out on that first morning in New Zealand (or wherever). But now I'm forewarned that the sun goes the wrong way down there and it won't seem so bizarre when I do see it for the first time.

[Other articles in category /lang] permanent link

Thu, 30 Nov 2006

Trivial calculations

Back in September, I wrote about how I tend to plunge ahead with straightforward calculations whenever possible, grinding through the algebra, ignoring the clever shortcut. I'll go back and look for the shortcut, but only if the hog-slaughtering approach doesn't get me what I want. This is often an advantage in computer programming, and often a disadvantage in mathematics.

This occasionally puts me in the position of feeling like a complete ass, because I will grind through some big calculation to reach a simple answer which, in hindsight, is completely obvious.

One early instance of this that I remember occurred more than twenty years ago when a friend of mine asked me how many spins of a slot machine would be required before you could expect to hit the jackpot; assume that the machine has three wheels, each of which displays one of twenty symbols, so the chance of hitting the jackpot on any particular spin is 1/8,000. The easy argument goes like this: since the expected number of jackpots per spin is 1/8,000, and expectations are additive, 8,000 spins are required to get the expected number of jackpots to 1, and this is in fact the answer.

But as a young teenager, I did the calculation the long way. The chance of getting a jackpot in one spin is 1/8000. The chance of getting one in exactly two spins is (1 - 1/8000)·(1/8000). The chance of getting one in exactly three spins is (1 - 1/8000)²·(1/8000). And so on; so you sum the infinite series:

$$\sum_{i=1}^\infty {i{\left({k-1\over k}\right)^{i-1}} {1\over k}}$$

And if you do it right, you do indeed get exactly k. Well, I was young, and I didn't know any better.

I'd like to be able to say I never forgot this. I wish it were true. I did remember it a lot of the time. But I can think of a couple of times when I forgot, and then felt like an ass when I did the problem the long way.
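The claim that the slot-machine series sums to exactly k can be checked numerically. What follows is only a rough Python sketch, not the original calculation: the function name `expected_spins` and the cutoff `terms` are illustrative choices.

```python
def expected_spins(k, terms=200_000):
    """Partial sum of sum_{i>=1} i * ((k-1)/k)**(i-1) * (1/k)."""
    p = 1 / k            # chance of a jackpot on any one spin
    q = (k - 1) / k      # chance of missing on any one spin
    total = 0.0
    miss = 1.0           # running value of q**(i-1)
    for i in range(1, terms + 1):
        # probability that the first jackpot arrives on spin i, times i
        total += i * miss * p
        miss *= q
    return total


if __name__ == "__main__":
    # three wheels with twenty symbols each, as in the article
    print(expected_spins(20 ** 3))
```

The partial sums creep up toward k from below; for k = 8000 the omitted tail after 200,000 terms is tiny, so the truncated sum already agrees with 8000 to several decimal places.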

One time was in 1996. There is a statistic in baseball called the on-base percentage or "OBP"—please don't all go to sleep at once. This statistic measures, for each player, the fraction of his plate appearances in which he is safe on base, typically by getting a hit, or by being walked. It is typically around 1/3; exceptional players have an OBP as high as 2/5 or even higher. You can also talk about the OBP of a team as a whole.

A high OBP is a very important determiner of the number of runs a baseball team will score, and therefore of how many games it will win. Players with a higher OBP are more likely to reach base, and when a batter reaches base, another batter comes to the plate. Teams with a high overall OBP therefore tend to bring more batters to the plate and so have more chances to score runs, and so do tend to score runs, than teams with a low overall OBP.

I wanted to calculate the relationship between team OBP and the expected number of batters coming to the plate each inning. I made the simplifying assumption that every batter on the team had an OBP of p, and calculated the expected number of batters per inning. After a lot of algebra, I had the answer: 3/(1-p). Which makes perfect sense: There are only two possible outcomes for a batter in each plate appearance: he can reach base, or he can be put out; these are exclusive. A batter who reaches base p of the time is put out 1-p of the time, consuming, on average, 1-p of an out. A team gets three outs in an inning; the three outs are therefore consumed after 3/(1-p) batters. Duh.
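For comparison, here is roughly what the long way looks like when done by machine. This is a sketch under the same simplifying assumption (every batter reaches base with probability p, independently); `expected_batters` and its `max_batters` cutoff are made-up names for illustration.

```python
from math import comb


def expected_batters(p, outs=3, max_batters=2000):
    """Sum n * P(the inning's final out is made by batter number n)."""
    q = 1 - p  # chance that a batter is put out
    total = 0.0
    for n in range(outs, max_batters + 1):
        # Batter n makes the last out; among the first n-1 batters there
        # are exactly outs-1 outs, and the other n-outs reached base.
        prob = comb(n - 1, outs - 1) * q ** outs * p ** (n - outs)
        total += n * prob
    return total


if __name__ == "__main__":
    for p in (1 / 3, 0.4):
        print(p, expected_batters(p), 3 / (1 - p))
```

The two printed columns should agree: the brute-force sum and the one-line argument both give 3/(1-p), which is 4.5 batters per inning for an OBP of 1/3.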

This isn't the only baseball-related mistake I've made. I once took a whole season's statistics and wrote a program to calculate the average number of innings each pitcher pitched. Okay, no problem yet. But then I realized that the average was being depressed by short relievers; I really wanted the average only for starting pitchers. But how to distinguish starting pitchers from relievers? Simple: the statistics record the number of starts for each pitcher, and relievers never start games. But then I got too clever. I decided to weight each pitcher's contribution to the average by his number of starts. Since relievers never start, this ignores relievers; it allows pitchers who do start a lot of games to influence the result more than those who only pitched a few times. I reworked the program to calculate the average number of innings pitched per start.

The answer was 9. (Not exactly, but very close.)

It was obviously 9. There was no other possible answer. There is exactly one start per game, and there are 9 innings per game,1 and some pitcher pitches in every inning, so the number of innings pitched per start is 9. Duh.
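Here is a toy version of that computation, under one reading of what the program did: total innings pitched divided by total starts. The pitchers and their numbers are invented for illustration and are not real statistics.

```python
from fractions import Fraction

# Invented season totals for one team over 4 nine-inning games:
# (name, games started, innings pitched).
pitchers = [
    ("starter A", 2, Fraction(13)),
    ("starter B", 2, Fraction(12)),
    ("reliever C", 0, Fraction(7)),
    ("reliever D", 0, Fraction(4)),
]

total_starts = sum(starts for _, starts, _ in pitchers)
total_innings = sum(innings for _, _, innings in pitchers)

# Every inning is pitched by somebody and every game has exactly one
# start, so this ratio is just innings per game.
innings_per_start = total_innings / total_starts

if __name__ == "__main__":
    print(innings_per_start)
```

However the innings are divided among starters and relievers, the totals are pinned down by the number of games, so the ratio cannot come out to anything but the number of innings per game.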

Anyway, the real point of this article is to describe a more sophisticated mistake of the same general sort that I made a couple of weeks ago. I was tinkering around with a problem in pharmacology. Suppose Joe has some snakeoleum pills, and is supposed to take one capsule every day. He would like to increase the dosage to 1.5 pills every day, but he cannot divide the capsules. On what schedule should he take the pills to get an effect as close as possible to the effect of 1.5 pills daily?

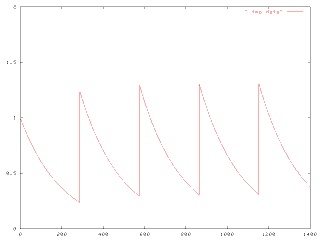

I assumed that we could model the amount of snakeoleum in Joe's body as a function f(t), which normally decayed exponentially, following !!f(t) = ae^{-kt}!! for some constant k that expresses the rate at which Joe's body metabolizes and excretes the snakeoleum. Every so often, Joe takes a pill, and at these times !!d_0!!, !!d_1!!, etc., the function f is discontinuous, jumping up by 1. The value a here is the amount of snakeoleum in Joe's body at time t=0. If Joe takes one pill every day, the (maximum) amount of snakeoleum in his body will tend toward !!1/(1-e^{-k})!! over time, as in the graph below:

(All graphs in this article get bigger if you click them.)
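The limiting value for the one-pill-a-day schedule is easy to watch emerge in a simulation. This is a minimal sketch that assumes time is measured in days (so one day of decay multiplies the level by e^(-k)) and that Joe starts with none in his body; `peak_levels` is an illustrative name.

```python
import math


def peak_levels(k, days):
    """Amount in the body just after each daily pill, starting from zero."""
    level = 0.0
    peaks = []
    for _ in range(days):
        # one day of exponential decay, then the jump of 1 from the pill
        level = level * math.exp(-k) + 1.0
        peaks.append(level)
    return peaks


if __name__ == "__main__":
    k = 0.5
    peaks = peak_levels(k, 100)
    print(peaks[0], peaks[-1], 1 / (1 - math.exp(-k)))
```

The peaks form the partial sums of a geometric series with ratio e^(-k), which is where the limit 1/(1-e^(-k)) comes from.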

I wanted to compare this with what happens when Joe takes the pill on various other schedules. For some reason I decided it would be a good idea to add up the total amount of pill-minutes for a particular dosage schedule, by integrating f. That is, I was calculating !!\int_a^b f(t) dt!! for various a and b; for want of better values, I calculated the total amount !!\int_0^\infty f(t) dt!!.

Doing this for the case in which Joe takes a single pill at time 0 is simple; it's just !!\int_0^\infty e^{-kt} dt!!, which is simply 1/k.

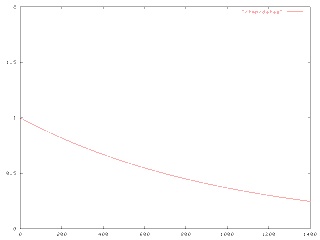

But then I wanted to calculate what happens when Joe takes a second pill, say at time M. At time M, the amount of snakeoleum left in Joe's body from the first pill is !!e^{-kM}!!, so the function f has !!f(t) = e^{-kt}!! for 0 ≤ t ≤ M and !!f(t) = (e^{-kM}+1)e^{-k(t-M)}!! for M ≤ t < ∞. The graph looks like this:

After the second pill, at t=600, it decays gradually to zero. I wanted to integrate this function. Since it's continuous on the two pieces of the interval, we break the integral into two:

$$\begin{aligned} \int_0^\infty f(t)\,dt &= \int_0^M f(t)\,dt + \int_M^\infty f(t)\,dt \\ &= \int_0^M e^{-kt}\,dt + \int_M^\infty (e^{-kM} + 1)e^{-k(t-M)}\,dt \\ &= {1 \over k}(1-e^{-kM}) + {1\over k}(e^{-kM} + 1) \\ &= \ldots \end{aligned}$$

Well, you get the idea. The answer was 2/k.

In retrospect, this is completely obvious.
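The fact that the total does not depend on M at all can be confirmed by brute force. The sketch below integrates the two-pill function numerically with a plain midpoint rule; the function names, the integration limit `t_max`, and the step count are arbitrary illustrative choices, and the infinite upper limit is simply cut off where the remaining tail is negligible.

```python
import math


def f(t, k, M):
    """Two pills: one at time 0 and one at time M, decaying as e^(-kt)."""
    if t < M:
        return math.exp(-k * t)
    return (math.exp(-k * M) + 1) * math.exp(-k * (t - M))


def total_exposure(k, M, t_max=60.0, steps=120_000):
    """Midpoint-rule estimate of the integral of f from 0 to t_max."""
    h = t_max / steps
    return h * sum(f((i + 0.5) * h, k, M) for i in range(steps))


if __name__ == "__main__":
    for M in (0.7, 3.0, 10.0):
        print(M, total_exposure(1.0, M))
```

Each line of output should be very close to 2/k (here 2), whatever value of M is used.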

This is because of the way I modeled the pills. When the decay of f(t) is exponential, as it is here, that means that the rate at which the snakeoleum is metabolized is proportional to the amount: twice as much snakeoleum means that the decay is twice as fast. Or, looked at another way, each pill decays independently of the rest. Put two pills in, and an hour later you'll find that you have twice as much left as if you had only put one pill in.

Since the two pills are acting independently, you can calculate their effect independently. That is, f(t) can be decomposed into !!f_1(t) + f_2(t)!!, where !!f_1!! is the contribution from the first pill:

And !!f_2!! is the contribution from the second pill:

But the second pill is exactly like the first pill. The only difference is that Joe takes it a little later. But since the integrals are considering the total effect from now to eternity, the time at the beginning is unimportant, and the effect of the second pill might as well be:

Just shift your head over a bit. (Mathematically, this is equivalent to substituting y = x-M in the second integral.) So of course it is the same as the first pill, and the result is exactly 2/k. When I saw the 2/k, I scratched my head for a minute, then figured it out and said "duh".

Anyway, I think what I really want is to find !!\int_0^\infty {\left(f_1(t) - f_{1.5}(t, d)\right)}^2 dt!!, where !!f_1!! is the function that describes the amount of snakeoleum when Joe takes one pill a day, and !!f_{1.5}!! is the function that describes the amount when Joe takes 1.5 pills every d days. But if there's one thing I think you should learn from a dumbass mistake like this, it's that it's time to step back and try to consider the larger picture for a while, so I've decided to do that before I go on.

1[ Addendum 20070124: There is a brief explanation of why the average baseball game has almost exactly 9 innings. ]

[Other articles in category /oops] permanent link

Wed, 29 Nov 2006

Legal status of corpses in 1911 England

As you might expect from someone who browses at random in the library stacks, I own several encyclopedias, which I also browse in from time to time. You never know what you are going to find when you do this.

I got rid of one recently. It was a 1962 Grolier's. Obviously, it was out of date, but I was using it for general reference anyway, conscious of its shortcomings. But one day I picked it up to read its article on Thurgood Marshall. It said that Marshall was an up-and-coming young lawyer, definitely someone to watch in the future. That was too much, and I gave it away.

But anyway, my main point is to talk about the legal status of corpses. One of the encyclopedias I have is a Twelfth Edition Encyclopaedia Britannica. This contains the complete text of the famous 1911 Eleventh Edition, plus three fat supplementary volumes that were released in 1920. The Britannica folks had originally planned the Twelfth Edition for around 1930, but so much big stuff happened between 1911 and 1920 that they had to do a new edition much earlier.

The Britannica is not as much fun as I hoped it would be. But there are still happy finds. Here is one such:

CORPSE (Lat. corpus, the body), a dead human body. By the common law of England a corpse is not the subject of property nor capable of holding property. It is not therefore larceny to steal a corpse, but any removal of the coffin or grave-cloths is otherwise, such remaining the property of the persons who buried the body. It is a misdemeanour to expose a naked corpse to public view. . .

(The complete article is available online.)

[ Addendum 20191213: it is a felony in California to sexually penetrate or to have sexual contact with human remains, except as authorized by law. ]

[Other articles in category /law] permanent link

Tue, 28 Nov 2006

favicon.ico Results

A couple of days ago I asked for suggestions for a favicon icon to represent the blog in people's shortcut menus. There were only two responses, but they were both very helpful, and solved the problem.

My first thought was, of course, to use an octopus, but I immediately rejected this idea since I didn't think I would be able to draw a recognizable octopus in 16×16 pixels. Neil Kandalgaonkar was braver than I was: ![]().

However, the concept I decided to go with was suggested by David Eppstein, who provided this attractive interpretation: ![](). To explain this, I need to explain my domain name, which I haven't done here before.

For nine years I was an independent consultant, working under the name Plover Systems. Why Plover? Many people assume that it is an abbreviation for "Perl lover"; this is not the case. The plover domain predates my involvement with Perl. (Some people have also interpreted it as "piss lover", a veiled statement of a fetishistic attraction to urination. It is not.)

A plover is a small bird. Typically, they make their nests on the seashore. The Egyptian plover is the little bird that is reputed to snatch food scraps from between the teeth of the crocodile. The American golden plover migrates all the way from Alaska to South America, and sometimes across the ocean to Europe.

Immediately prior to my becoming an independent consultant and setting up Plover Systems and the plover.com domain, I was employed as the senior systems engineer for Pathfinder, the Time Warner web site. I did not like this job very much. After I quit, I joked that my company had grown too big, so I downsized most of the management and employees, divested the magazine business, and cut the organization's mission back to core competences. Time Warner, the subsidiary I spun off to publish the magazines, was very large. I named my new, lean, trim company "Plover" because the plover is small and agile.

There is another reason for "Plover". For about thirty years, I have been a devoted fan of the old computer game Adventure. When time came to choose a domain name, I wanted to choose something with an Adventure connection. Here the obvious choices are xyzzy, which was already taken, and plugh, which is ugly. Both of these are magic words which, uttered at the correct spot, will teleport the player to another location. The game has a third such magic word, which is "plover"; from the right place, it transports the player to the "Plover room":

You're in a small chamber lit by an eerie green light. An extremely narrow tunnel exits to the west. A dark corridor leads NE.

This room, with its green light and narrow tunnel, is depicted in David Eppstein's icon.

The Plover room is so-called because it contains "an emerald the size of a plover's egg". A plover's egg is not very big, as eggs go, because the plover is not a very large bird, as birds go. But an emerald the size of a plover's egg is enormous, as emeralds go. The description is a reference to an off-color joke that was current in the early 1970's when Adventure was written: a teenage girl, upon hearing that the human testicle is the size of a plover's egg, remarks "Oh, so that's how big a plover's egg is." I think this was somewhat more risqué in 1974 than it is today.

For his contribution, M. Eppstein has won a free two-year subscription to The Universe of Discourse. Neil Kandalgaonkar gets the runner-up prize of two free six-month subscriptions, to run concurrently. Thank you both!

[Other articles in category /meta] permanent link

Mon, 27 Nov 2006

Baseball team nicknames, again

Some addenda to my recent article about baseball team nicknames.

Several people wrote to complain that I mismatched the cities and the nicknames in this sentence:

The American League [has] the Boston Royals, the Kansas City Tigers, the Detroit Indians, the Oakland Orioles...

My apologies for the error. It should have been the Boston Tigers, the Kansas City Indians, the Detroit Orioles, and the Oakland Royals.

Phil Varner reminded me that the Chicago Bulls are in fact a "local color" name; they are named in honor of the Chicago stockyards.

This raises a larger point, brought up by Dave Vasilevsky: My classification of names into two categories conflates some issues. Some names are purely generic, like the Boston Red Sox, and can be transplanted anywhere. Other names are immovable, like the Philadelphia Phillies. In between, we have a category of names, like the Bulls, which, although easily transportable, are in fact local references.

The Milwaukee Brewers are a good baseball example. The Brewers were named in honor of the local German culture and after Milwaukee's renown as a world center of brewing. Nobody would deny that this is a "local color" type name. But the fact remains that many cities have breweries, and the name "Brewers" would work well in many places. The Philadelphia Brewers wouldn't be a silly name, for example. The only place in the U.S. that I can think of offhand that fails as a home for the Brewers is Utah; the Utah Brewers would be a bad joke. (This brings us full circle to the observation about the Utah Jazz that inspired the original article.)

The Baltimore Orioles are another example. I cited them as an example of a generic and easily transportable name. But the Baltimore Oriole is in fact a "local color" type name; the Baltimore Oriole is named after Lord Baltimore, and is the state bird of Maryland. (Thanks again to Dave Vasilevsky and to Phil Gregory for pointing this out.)

Or consider the Seattle Mariners. The name is supposed to suggest the great port of Seattle, and was apparently chosen for that reason. (I have confirmed that the earlier Seattle team, the Seattle Pilots, was so-called for the same reason.) But the name is transportable to many other places: it's easy to imagine alternate universes with the New York Mariners, the Brooklyn Mariners, the San Francisco Mariners, or the Boston Mariners. Or even all five.

And similarly, although in the previous article I classed the New York Yankees with the "local color" names, based on the absurdity of the Selma or the Charleston Yankees, the truth is that the Boston Yankees only sounds strange because it didn't actually happen that way.

I thought about getting into a tremendous cross-check of all 870 name-city combinations, but decided it was too much work. Then I thought about just classing the names into three groups, and decided that the issue is too complex to do that. For example, consider the Florida Marlins. Local color, certainly. But immovable? Well, almost. The Toronto Marlins or the Kansas City Marlins would be jokes, but the Tampa Bay Marlins certainly wouldn't be. And how far afield should I look? I want to class the Braves as completely generic, but consideration of the well-known class AA Bavarian League Munich Braves makes it clear that "Braves" is not completely generic.

So in ranking by genericity, I think I'd separate the names into the following tiers:

- Pirates, Cubs, Reds, Cardinals, Giants, Red Sox, Blue Jays, White Sox, Tigers, Royals, Athletics

- Braves, Mets, Dodgers, Orioles, Yankees, Indians, Angels, Mariners, Nationals, Brewers

- Marlins, Astros, Diamondbacks, Rockies, Padres, Devil Rays, Twins

- Phillies, Rangers

Readers shouldn't take this classification as an endorsement of the Phillies' nickname, which I think is silly. I would have preferred the Philadelphia Brewers. Or even the Philadelphia Cheese Steaks. Maybe they didn't need the extra fat, but wouldn't it have been great if the 1993 Phillies had been the 1993 Cheese Steaks instead? Doesn't John Kruk belong on a team called the Cheese Steaks?

Another oddity, although not from baseball: In a certain sense, the Montreal Canadiens have an extremely generic name. And yet it's clearly not generic at all!

[Other articles in category /lang] permanent link

Fri, 24 Nov 2006

Etymological oddity

Sometimes you find words that seem like they must be related, and then it turns out to be a complete coincidence.

Consider pen and pencil.

Pen is from French penne, a long feather or quill pen, akin to Italian penne (the hollow, ribbed pasta), and ultimately to the word feather itself.

Pencil is from French pincel, a paintbrush, from Latin peniculus, also a brush, from penis, a tail, which is also the source of the English word penis.

A couple of weeks ago someone edited the Wikipedia article on "false cognates" to point out that day and diary are not cognate. "No way," I said, "it's some dumbass putting dumbassery into Wikipedia again." But when I checked the big dictionary, I found that it was true. They are totally unrelated. Diary is akin to Spanish dia, Latin dies, and other similar words, as one would expect. Day, however, is "In no way related to L. dies..." and is akin to Sanskrit dah = "to burn", Lithuanian dagas = "hot season", and so forth.

[Other articles in category /lang/etym] permanent link

Thu, 23 Nov 2006

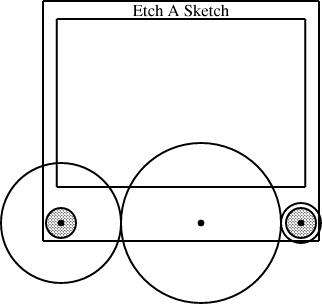

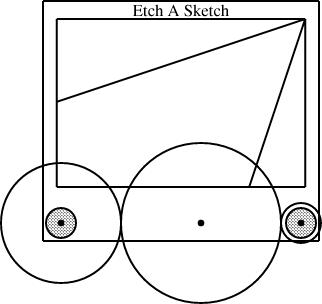

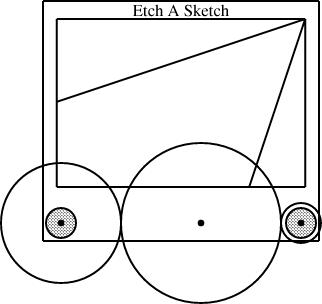

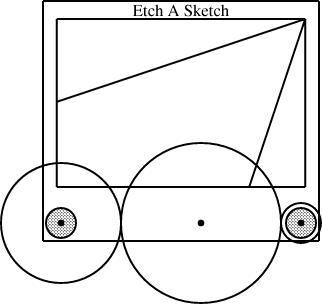

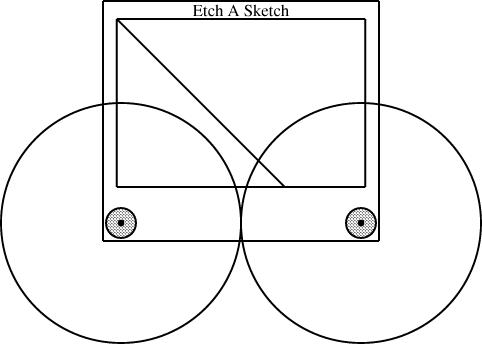

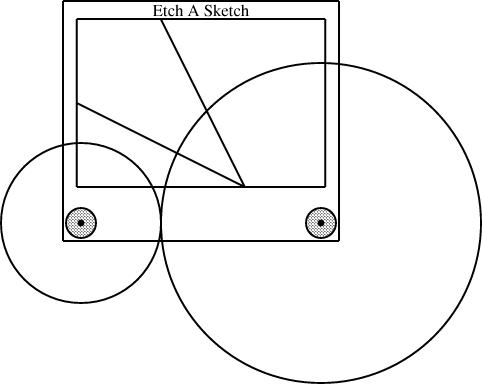

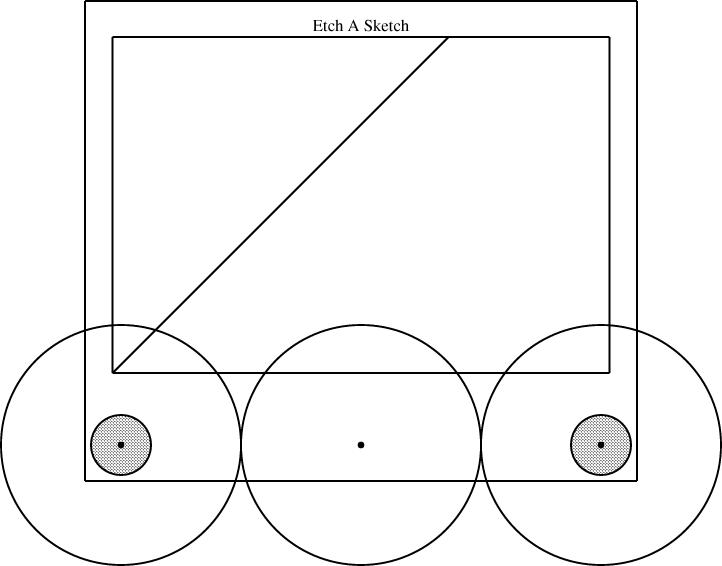

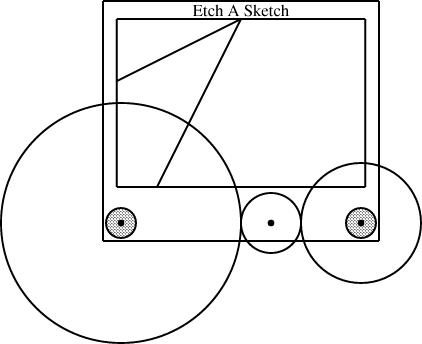

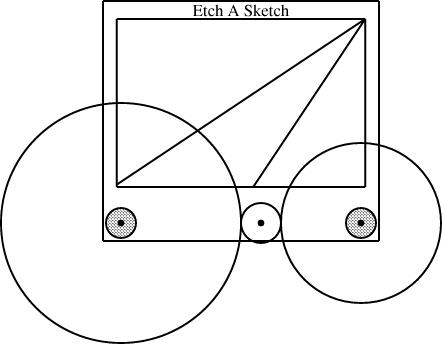

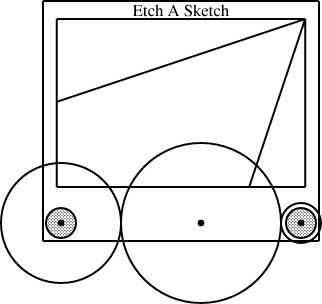

Linogram: The EaS as a component

In an earlier article, I discussed the definition of an Etch-a-Sketch picture as a component in the linogram drawing system. I then digressed for a day to rant about the differences between specification-based and WYSIWYG systems. I now return to the main topic.

Having defined the EAS component, I can use it in several diagrams. The typical diagram looks like this:

    require "eas";

    number WIDTH = 2;

    EAS the_eas(w = WIDTH);
    gear3 gears(width=WIDTH, r1=1/4, r3=1/12);

    constraints {
        the_eas.left = gears.g1.c;
        the_eas.right = gears.g3.c;
    }

The eas.lino file defines the EAS component and also the gear3 component, which describes what the three gears should look like. The diagram itself just chooses a global width and communicates it to the EAS component and the gear3 component. It also communicates two of the three selected gear sizes to the gear3, which generates the three gears and their axles. Finally, the specification constrains the left reference point of the Etch-a-Sketch to lie at the center of gear 1, and the right reference point to lie at the center of gear 3.

This sort of thing was exactly what I was hoping for when I started designing linogram. I wanted it to be easy to define a new component type, like EAS or gear3, and to use it as if it were a built-in type. (Indeed, as noted before, even linogram's "built-in" types are not actually built in! Everything from point on up is defined by a .lino file just like the one above, and if the user wants to redefine point or line, they are free to do so.)

(It may not be clear that redefining point or line is actually a useful thing to do. But it could be. Consider, for example, what would happen if you were to change the definition of point to contain a z coordinate, in addition to the x and y coordinates it normally has. The line and box definitions would inherit this change, and become three-dimensional objects. If provided with a suitably enhanced rendering component, linogram would then become a three-dimensional diagram-drawing program. Eventually I will add this enhancement to linogram.)

I am a long-time user of the AT&T Unix tool pic, which wants to allow you to define and use compound objects in this way, but gets it badly wrong, so that it's painful and impractical. Every time I suffered through pic's ineptitude, I would think about how it might be done properly. I think linogram gets it right; pic was a major inspiration.

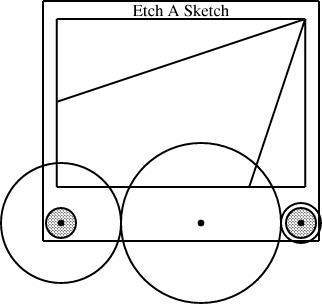

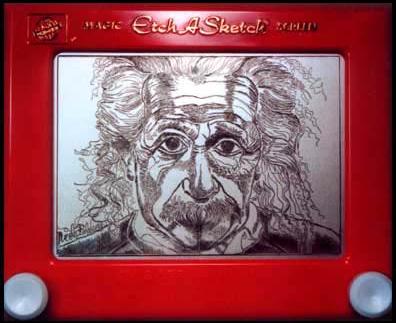

Slanty lines

Partway through drawing the Etch-a-Sketch diagrams, I had a happy idea. Since I was describing Etch-a-Sketch configurations that would draw lines with various slopes, why not include examples? Ideally, one would like to specify such a line by writing something like:

line L(slope=..., start=..., ...);

In general, though, this is too hard to handle. The resulting equations are quadratic, or worse, trigonometric, and linogram does not know how to solve those kinds of equations.

But if the slope is required to be constant, then the quadratic equations become linear, and there is no problem. And in this case, I only needed constant slopes. Once I realized this, it was easy to define a constant-slope line type:

    require "line";

    define sline extends line {
        param number slope;
        constraints {
            end.y - start.y = slope * (end.x - start.x);
        }
    }

An sline is just like a line, but it has an additional parameter, slope (which must be constant, and specified at compile time, not inferred from other values) and one extra constraint that uses it. Normally, all four of start.x, end.x, start.y, and end.y are independent. The constraint removes some of the independence, so that any three, plus the slope, are sufficient to determine the fourth.

The diagram above was generated from this specification:

    require "eas";
    require "sline";

    number WIDTH = 2;

    EAS the_eas(w = WIDTH);
    gear3 gears(width=WIDTH, r1=1/4, r3=1/12);
    sline L1(slope=1/3, start=the_eas.screen.ne);
    sline L2(slope=3, start=the_eas.screen.ne);

    constraints {
        the_eas.left = gears.g1.c;
        the_eas.right = gears.g3.c;
        L1.end.x = the_eas.screen.w.x;
        L2.end.y = the_eas.screen.s.y;
    }

The additions here are the sline items, named L1 and L2. Both lines start at the northeast corner of the screen. Line L1 has slope 1/3, and its other endpoint is constrained to lie somewhere on the west edge of the screen. The y-coordinate of that endpoint is not specified, but is implicitly determined by the other constraints. To locate it, linogram must solve some linear equations. The complete set of constraints on the line is:

| L1.start.x = the_eas.screen.ne.x |

| L1.start.y = the_eas.screen.ne.y |

| L1.end.x = the_eas.screen.w.x |

| L1.end.y - L1.start.y = L1.slope × (L1.end.x - L1.start.x); |

| L1.end.y - L1.start.y = 1/3 × (L1.end.x - L1.start.x); |

The other line, L2, with slope 3, is handled similarly; its endpoint is constrained to lie somewhere on the south side of the screen.
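To see why a constant slope keeps the problem linear, here is a small Python sketch. It is not linogram's actual solver; the function name `solve_sline` and the sample screen coordinates are hypothetical, chosen only for illustration. Given the slope and any three of the four coordinates, it fills in the fourth, which is what happens to L1 (end.x known) and L2 (end.y known).

```python
from fractions import Fraction

def solve_sline(slope, start_x=None, start_y=None, end_x=None, end_y=None):
    """Fill in the one missing coordinate of a constant-slope line.

    The constraint is  end.y - start.y = slope * (end.x - start.x).
    Because slope is a known constant, the equation is linear in each
    coordinate, so any three coordinates determine the fourth.
    """
    coords = {"start_x": start_x, "start_y": start_y,
              "end_x": end_x, "end_y": end_y}
    missing = [k for k, v in coords.items() if v is None]
    if len(missing) != 1:
        raise ValueError("exactly one coordinate must be unknown")
    if missing[0] == "end_y":
        coords["end_y"] = start_y + slope * (end_x - start_x)
    elif missing[0] == "start_y":
        coords["start_y"] = end_y - slope * (end_x - start_x)
    elif slope == 0:
        raise ValueError("a horizontal line does not determine an x coordinate")
    elif missing[0] == "end_x":
        coords["end_x"] = start_x + (end_y - start_y) / slope
    else:
        coords["start_x"] = end_x - (end_y - start_y) / slope
    return coords

# Hypothetical screen: northeast corner at (3, 2), west edge at x = 0,
# south edge at y = 0. These numbers are assumptions, not from eas.lino.
L1 = solve_sline(Fraction(1, 3), start_x=3, start_y=2, end_x=0)  # end.x known
L2 = solve_sline(Fraction(3), start_x=3, start_y=2, end_y=0)     # end.y known
print(L1["end_y"], L2["end_x"])
```

If the slope were itself an unknown, the term slope * (end.x - start.x) would be a product of two unknowns, and a sketch like this one would no longer be enough.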

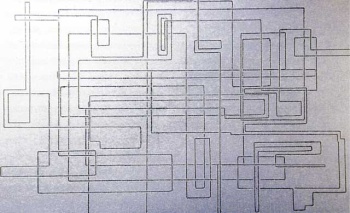

Surprise features

When I first planned linogram, I was hardly thinking of slopes at all, except to dismiss them as being intractable, along with a bunch of other things like angles and lengths. But it turned out that they are a little bit tractable, enough that linogram can get a bit of a handle on them, thanks to the param feature that allows them to be excluded from the linear equation solving.

One of the signs that you have designed a system well is that it comes out to be more powerful than you expected when you designed it, and lends itself to unexpected uses. The slines are an example of that. When it occurred to me to try doing them, my first thought was "but that won't work, will it?" But it does work.

Here's another technique I hadn't specifically planned for, but that is already supported by linogram. Suppose Fred Flooney wants to use the eas library, but doesn't like the names of the reference points in the EAS component. Fred is quite free to define his own replacement, with whatever names for whatever reference points he likes:

require "eas";

define freds_EAS {

EAS it;

point middle = it.screen.c;

point bernard = (it.body.e + 2*it.body.se)/3;

line diag(start=it.body.nw, end=it.body.se);

draw { it; }

}

The freds_EAS component is essentially the same as the EAS component defined by eas.lino. It contains a single Etch-a-Sketch, called it, and a few extra items that Fred is interested in. If E is a freds_EAS, then E.middle refers to the point at the center of E's screen; E.bernard is a point two-thirds of the way between the middle and the bottom corner of its outer edge, and E.diag is an invisible line running diagonally across the entire body, equipped with the usual E.diag.start, E.diag.center, and the like. All the standard items are still available, as E.it.vknob, E.it.screen.left.center, and so on.
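The arithmetic behind bernard can be checked with a short Python sketch. The `Point` type, the `weighted` helper, and the sample coordinates are all hypothetical; they only illustrate that (e + 2*se)/3 is a weighted average that lands two-thirds of the way from e toward se.

```python
from typing import NamedTuple

class Point(NamedTuple):
    x: float
    y: float

def weighted(p, q, wp, wq):
    """Return (wp*p + wq*q) / (wp + wq), a point on the segment from p to q."""
    total = wp + wq
    return Point((wp * p.x + wq * q.x) / total,
                 (wp * p.y + wq * q.y) / total)

# Hypothetical body reference points: east-edge midpoint and southeast corner.
body_e = Point(6.0, 0.0)
body_se = Point(6.0, -3.0)

# (it.body.e + 2*it.body.se)/3 weights se twice as heavily as e,
# so the result lies two-thirds of the way from e toward se.
bernard = weighted(body_e, body_se, 1, 2)
print(bernard)
```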

The draw section tells linogram that only the component named it (that is, the Etch-a-Sketch itself) should be drawn; this suppresses the diag line, which would otherwise have been rendered as well.

If Fred inherits some other diagram or component that includes an Etch-a-Sketch, he can still use his own aliases to refer to the parts of the Etch-a-Sketch, without modifying the diagram he inherited. For example, suppose Fred gets handed my specification for the diagram above, and wants to augment it or incorporate it in a larger diagram. The diagram above is defined by a file called "eas4-3-12.lino", which in turn requires eas.lino. Fred does not need to modify eas4-3-12.lino; he can do:

require "eas4-3-12.lino";

require "freds_eas";

freds_EAS freds_eas(it = the_eas);

constraints {

freds_eas.middle = ...;

freds_eas.bernard = ...;

}

...

Fred has created one of his extended Etch-a-Sketch components, and identified the Etch-a-Sketch part of it with the_eas, which is the Etch-a-Sketch part of my original diagram. Fred can then apply constraints to the middle and bernard sub-parts of his freds_eas, and these constraints will be propagated to the corresponding parts of the_eas in my original diagram. Fred can now specify relations in terms of his own personal middle and bernard items, and they will automatically be related to the appropriate parts of my diagram, even though I have never heard of Fred and have no idea what bernard is supposed to represent.

Why Fred wants these names for these components, I don't know; it's just a contrived example. But the important point is that if he does want them, he can have them, with no trouble.

What next?

There are still a few things missing from linogram. The last major missing feature is arrays. The gear3 construction I used above is clumsy. It contains a series of constraints that look like:

g1.e = g2.w; g2.e = g3.w;

The same file also has an analogous gear2 definition, for diagrams with only two gears. Having both gear2 and gear3, almost the same, is silly and wasteful. What I really want is to be able to write:

define gears[n] open {

gear[n] g;

constraints {

g[i].e = g[i+1].w;

}

}

and this would automatically imply components like gear2 and gear3, with 2 and 3 gears, but denoted gear[2] and gear[3], respectively; it would also imply gear[17] and gear[253] with the analogous constraints.

For gear[3], two constraints are generated: g[0].e = g[1].w, and g[1].e = g[2].w. Normally, arrays are cyclic, and a third constraint, g[2].e = g[0].w, would be generated as well. The open keyword suppresses this additional constraint.
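The difference between open and cyclic arrays can be sketched in a few lines of Python. This is only one reading of the semantics described above, not linogram's implementation (the feature is still under development); the function name `neighbor_constraints` is made up for the example.

```python
def neighbor_constraints(n, closed=False, name="g"):
    """Expand the template  g[i].e = g[i+1].w  for an array of n items.

    For an open array the last index has no successor, so n-1 constraints
    result; for a closed (cyclic) array the index wraps around modulo n.
    """
    last = n if closed else n - 1
    return [f"{name}[{i}].e = {name}[{(i + 1) % n}].w" for i in range(last)]

# Open array of three gears: two constraints, no wraparound.
for c in neighbor_constraints(3):
    print(c)

# Cyclic array of three gears: the wraparound constraint is added.
for c in neighbor_constraints(3, closed=True):
    print(c)
```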

This feature is under development now. I originally planned it to support splines, which can have any number of control points. But once again, I found that the array feature was going to be useful for many other purposes.

When the array feature is finished, the next step will be to create a spline type: spline[4] will be a spline with 4 control points; spline[7] will be a spline with 7 control points, and so on. PostScript will take care of drawing the splines for me, so that will be easy. I will also define a regular polygon type at that time:

define polygon[n] closed {

param number rotation = 0;

number radius;

point v[n], center;

line e[n];

constraints {

v[i] = center + radius * cis(rotation + i * 360/n);

e[i].start = v[i];

e[i].end = v[i+1];

}

}

polygon[3] will then be a rightward-pointing equilateral triangle; constraining any two of its vertices will determine the position of the third, which will be positioned automatically. Note the closed keyword, which tells linogram to include the constraint e[2].end = v[0], which would have been omitted had open been used instead.

More complete information about linogram is available in Chapter 9 of Higher-Order Perl; complete source code is available from the linogram web site.
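For readers who want to check the polygon constraints numerically, here is a hedged Python sketch. It assumes that cis takes its angle in degrees (suggested by the 360/n in the definition, but not stated), represents points as complex numbers, and uses a hypothetical `polygon` function that is not part of linogram.

```python
import cmath
import math

def cis(degrees):
    """cos + i*sin of an angle given in degrees, as a complex number."""
    return cmath.exp(1j * math.radians(degrees))

def polygon(n, center=0j, radius=1.0, rotation=0.0):
    """Return (vertices, edges) for a regular n-gon.

    Vertices are complex numbers; edges are (start, end) index pairs.
    The final edge wraps from v[n-1] back to v[0], as `closed` requires.
    """
    v = [center + radius * cis(rotation + i * 360 / n) for i in range(n)]
    e = [(i, (i + 1) % n) for i in range(n)]
    return v, e

v, e = polygon(3)
print(v[0])  # with rotation 0, the first vertex is directly right of center
print(e)     # the last pair closes the figure
```

With rotation left at 0, the first vertex lies on the positive x axis, which is why polygon[3] points rightward.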

[Other articles in category /linogram] permanent link

Damning with faint praise

If you have used evaporated milk, you may have noticed that the label says something like:

By adding one part water to one part of the contents of this can, a resulting milk product will be obtained which will not be below the legal standard for whole milk.

This sounds ghastly, doesn't it? "Will not be below the legal standard...". Shudder.

The purpose of this note is to let you know that:

- I have done this,

- more than once, and

- it not only wasn't as ghastly as it sounds,

- it wasn't ghastly at all.

From the warning on the label, you would expect maybe a 30% resemblance to milk; in truth, the resemblance is more like 85%. That's close enough to drink plain, if you're not too fussy, and it's certainly close enough to pour over your cereal without noticing the difference.

The wording of the label scares people off, but the reconstituted milk works quite well, well enough that it is probably worth keeping a couple of cans in the closet for emergencies, like when you run out of milk for your cereal at 2 AM after the store is closed.

This has been a public service announcement of the Universe of Discourse. Happy Thanksgiving, everyone!

[Other articles in category /food] permanent link

Wed, 22 Nov 2006

Baseball team nicknames

Lorrie and I were in the car, and she noticed another car with a Detroit Pistons sticker. She remarked that "Pistons" was a good name for a basketball team, and particularly for one from Detroit. I agreed. But then she mentioned the Utah Jazz, a terrible mismatch, and asked me how that happened to be. Even if you don't know, you can probably guess: They used to be the New Orleans Jazz, and the team moved to Utah. They should have changed the name to the Teetotalers or the Salt Flats or something, but they didn't, so now we have the Utah Jazz. I hear that next month they're playing the Miami Fightin' Irish.

That got us thinking about how some sports team names travel, and others don't. Jazz didn't. The Miami Heat could trade cities or names with the Phoenix Suns and nobody would notice. But consider the Chicago Bulls. They could pick up and move anywhere, anywhere at all, and the name would still be fine, just fine. Kansas City Bulls? Fine. Honolulu Bulls? Fine. Marsaxlokk Bulls? Fine.

We can distinguish two categories of names: the "generic" names, like "Bulls", and the "local color" names, like "Pistons". But I know more about baseball, so I spent more time thinking about baseball team names.

In the National League, we have the generic Braves, Cardinals, Cubs, Giants, Pirates, and Reds, who could be based anywhere, and in some cases were. The Braves moved from Boston to Milwaukee to Atlanta, although to escape from Boston they first had to change their name from the Beaneaters. The New York Giants didn't need to change their name when they moved to San Francisco, and they won't need to change their name when they move to Jyväskylä next year. (I hear that the Jyväskylä city council offered them a domed stadium and they couldn't bear to say no.)

On the other hand, the Florida Marlins, Arizona Diamondbacks, and Colorado Rockies are clearly named after features of local importance. If the Marlins were to move to Wyoming, or the Rockies to Nebraska, they would have to change their names, or turn into bad jokes. Then again, the Jazz didn't change their name when they moved to Utah.

The New York Mets are actually the "Metropolitans", so that has at least an attempt at a local connection. The Washington Nationals ditto, although the old name of the Washington Senators was better. At least in that one way. Who could root for a team called the Washington Senators? (From what I gather, not many people could.)

The Nationals replaced the hapless Montreal Expos, whose name wasn't very good, but was locally related: they were named for the 1967 Montreal World's Fair. Advice: If you're naming a baseball team, don't choose an event that will close after a year, and especially don't choose one that has already closed.

The Houston Astros, and their Astrodome filled with Astroturf, are named to recall the NASA manned space center, which opened there in 1961. The Philadelphia club is called the Phillies, which is not very clever, but is completely immovable. Boston Phillies, anyone? Pittsburgh Phillies? New York Phillies? No? I didn't think so.

I don't know why the San Diego Padres are named that, but there is plenty of Spanish religious history in the San Diego area, so I am confident in putting them in the "local color" column. Milwaukee is indeed full of Brewers; there are a lot of Germans up there, brewing up lager. (Are they back in the National League again? They seem to switch leagues every thirty years.)

That leaves just the Los Angeles Dodgers, who are a bit of an odd case. The team, as you know, was originally the Brooklyn Dodgers. The "Dodgers" nickname, as you probably didn't know, is short for "Trolley Dodgers". The Los Angeles Trolley Dodgers is almost as bad a joke as the Nebraska Rockies. Fortunately, the "Trolley" part was lost a long time ago, and we can now imagine that the team is the Los Angeles Traffic Dodgers. So much for the National League; we have six generic names out of 16, counting the Traffic Dodgers in the "local color" group, and ignoring the defunct Expos.

The American League does not do so well. They have the Boston Royals, the Kansas City Tigers, the Detroit Indians, the Oakland Orioles, and three teams that are named after sox: the Red, the White, and the Athletics.

Then there are the Blue Jays. They were originally owned by Labatt, a Canadian brewer of beer, and were so-named to remind visitors to the park of their flagship brand, Labatt's Blue. I might have a harder time deciding which group to put them in, if it weren't for the (1944-1945) Philadelphia Blue Jays. If the name is generic enough to be transplanted from Toronto to Philadelphia, it is generic. I have no idea what name the Toronto club could choose if they wanted to avail themselves of the "local color" option rather than the "generic" option; it's tempting to make a cruel joke and suggest that the name most evocative of Toronto would be the Toronto Generics. But no, that's unfair. They could always call their baseball club the Toronto Hockey Fans.