Mark Dominus (陶敏修)

mjd@pobox.com


Sun, 30 Nov 2008

License plate sabotage

A number of years ago I was opening a new bank account, and the bank clerk asked me what style of checks I wanted, with pictures of clowns or balloons or whatever. I said I wanted them to be pale blue, possibly with wavy lines. I reasoned that there was no circumstance under which it would be a benefit to me to have my checks be memorable or easily recognized.

So it is too with car license plates, and for a number of years I have toyed with the idea of getting a personalized plate with II11I11I or 0OO0OO00 or some such, on the theory that there is no possible drawback to having the least legible plate number permitted by law. (If you are reading this post in a font that renders 0 and O the same, take my word for it that 0OO0OO00 contains four letters and four digits.)

A plate number like O0OO000O increases the chance that your traffic tickets (or convictions!) will be thrown out because your vehicle has not been positively identified, or that some trivial clerical error will invalidate them.

Recently a car has appeared in my neighborhood that seems to be owned by someone with the same idea:

Other Pennsylvanians should take note. Consider selecting OO0O0O, 00O00, and other plate numbers easily confused with this one. The more people with easily-confused license numbers, the better the protection.

[ Addendum 20250215: I spotted this one a few years back. ]

[Other articles in category /misc] permanent link

Mon, 24 Nov 2008

Variations on the Goldbach conjecture

- Every prime number is the sum of two even numbers.

- Every odd number is the sum of two primes.

- Every even number is the product of two primes.

[Other articles in category /math] permanent link

Wed, 12 Nov 2008

Flag variables in Bourne shell programs

Who the heck still programs in Bourne shell? Old farts like me, occasionally. Of course, almost every time I do I ask myself why I didn't write it in Perl. Well, maybe this will be of some value to some fart even older than me.

Suppose you want to set a flag variable, and then later you want to test it. You probably do something like this:

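```sh
if some_condition; then   # some_condition: placeholder for whatever test you need
  IS_NAKED=1
fi

# ...

if [ "$IS_NAKED" = "1" ]; then   # POSIX test uses '=', not '=='
  echo "flag is set"
else
  echo "flag is not set"
fi
```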

Or maybe you use ${IS_NAKED:-0} or some such instead of "$IS_NAKED". Whatever.

Today I invented a different technique. Try this on instead:

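```sh
IS_NAKED=false
if some_condition; then   # some_condition: placeholder for whatever test you need
  IS_NAKED=true
fi

# ...

if $IS_NAKED; then   # runs the command 'true' or 'false'
  echo "flag is set"
else
  echo "flag is not set"
fi
```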

The arguments both for and against it seem to be obvious, so I won't make them.

I have never seen this done before, but, as I concluded and R.J.B. Signes independently agreed, it is obvious once you see it.

[ Addendum 20090107: some followup notes ]

[Other articles in category /prog] permanent link

Tue, 11 Nov 2008

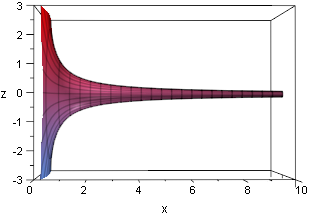

Another note about Gabriel's Horn

I forgot to mention in the original article that I think referring to Gabriel's Horn as "paradoxical" is straining at a gnat and swallowing a camel.


Presumably people think it's paradoxical that the thing should have a finite volume but an infinite surface area. But since the horn is infinite in extent, the infinite surface area should be no surprise.

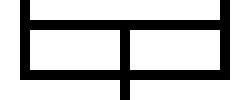

The surprise, if there is one, should be that an infinite object might contain a merely finite volume. But we swallowed that gnat a long time ago, when we noticed that the infinitely wide series of bars below covers only a finite area when they are stacked up as on the right.

[ Addendum 2014-07-03: I have just learned that this same analogy was also described in this math.stackexchange post of 2010. ]

[Other articles in category /math] permanent link

Gabriel's Horn is not so puzzling

The calculations themselves do not lend much insight into what is going on here. But I recently read a crystal-clear explanation that I think should be more widely known.

Take out some Play-Doh and roll out a snake. The surface area of the snake (neglecting the two ends, which are small) is the product of the length and the circumference; the circumference is proportional to the diameter. The volume is the product of the length and the cross-sectional area, which is proportional to the square of the diameter.

As you continue to roll the snake thinner and thinner, the volume stays the same, but the surface area goes to infinity.
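To put numbers on the snake argument, here is a small Python sketch that models the snake as an ideal cylinder of fixed volume, ignoring the ends as above. The function name is mine. Fixing the volume pins the radius to sqrt(V/(πL)), so the lateral area works out to 2·sqrt(πVL), which grows without bound as the length L grows.

```python
import math

def snake_surface_area(volume: float, length: float) -> float:
    """Lateral surface area of a cylinder with the given volume and length.

    volume = pi * r**2 * length fixes the radius r; the two ends are ignored.
    """
    radius = math.sqrt(volume / (math.pi * length))
    return 2 * math.pi * radius * length  # equals 2 * sqrt(pi * volume * length)

# Same volume every time; only the length (and hence thinness) changes.
for length in (1, 100, 10_000, 1_000_000):
    print(f"length {length:>9}: area {snake_surface_area(1.0, length):10.2f}")
```

Each hundredfold increase in length multiplies the area by ten, while the volume stays at 1 throughout.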

Gabriel's Horn does exactly the same thing, except without the rolling, because the parts of the Horn that are far from the origin look exactly the same as very long snakes.

There's nothing going on in the Gabriel's Horn example that isn't also happening in the snake example, except that in the explanation of Gabriel's Horn, the situation is obfuscated by calculus.

I read this explanation in H. Jerome Keisler's calculus textbook. Keisler's book is an ordinary undergraduate calculus text, except that instead of basing everything on limits and on limiting processes, it is based on nonstandard analysis and explicit infinitesimal quantities. Check it out; it is available online for free. (The discussion of Gabriel's Horn is in chapter 6, page 356.)

[ Addendum 20081110: A bit more about this. ]

[Other articles in category /math] permanent link

Addenda to recent articles 200810

Several people helpfully pointed out that the notion I was looking for here is the "cofinality" of the ordinal, which I had not heard of before. Cofinality is fairly simple. Consider some ordered set S. Say that an element b is an "upper bound" for an element a if a ≤ b. A subset of S is cofinal if it contains an upper bound for every element of S. The cofinality of S is the minimum cardinality of its cofinal subsets, or, what is pretty much the same thing, the minimum order type of its cofinal subsets.
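For a finite ordered set the definition can be checked mechanically by trying subsets in order of size. Below is a brute-force Python sketch. The function names are mine, and it only handles finite sets, so it says nothing about ω or Ω. It illustrates the definition and works for partial orders as well as chains.

```python
from itertools import combinations

def is_cofinal(subset, elements, leq):
    """True if every element a has some b in subset with leq(a, b)."""
    return all(any(leq(a, b) for b in subset) for a in elements)

def cofinality(elements, leq):
    """Smallest size of a cofinal subset of a finite ordered set (brute force)."""
    elements = list(elements)
    for size in range(len(elements) + 1):
        for subset in combinations(elements, size):
            if is_cofinal(subset, elements, leq):
                return size

# A finite chain has a largest element, so that element alone is cofinal.
print(cofinality(range(6), lambda a, b: a <= b))                 # prints 1
# Divisibility on 1..10: 6, 7, 8, 9, 10 have no proper multiples <= 10,
# so each of them must be in any cofinal subset.
print(cofinality(range(1, 11), lambda a, b: b % a == 0))         # prints 5
```

The chain case is the finite analogue of the remark that every successor ordinal has cofinality 1: the largest element is cofinal all by itself.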

So, for example, the cofinality of ω is $\aleph_0$, or, in the language of order types, ω. But the cofinality of ω + 1 is only 1 (because the subset {ω} is cofinal), as is the cofinality of any successor ordinal. My question, phrased in terms of cofinality, is simply whether any ordinal has uncountable cofinality. As we saw, Ω certainly does.

But some uncountable ordinals have countable cofinality. For example, let $\omega_n$ be the smallest ordinal with cardinality $\aleph_n$ for each n. In particular, $\omega_0 = \omega$, and $\omega_1 = \Omega$. Then $\omega_\omega$ is uncountable, but has cofinality ω, since it contains a countable cofinal subset $\{\omega_0, \omega_1, \omega_2, \ldots\}$.

This is the kind of bullshit that set theorists use to occupy their time.

A couple of readers brought up George Boolos, who is disturbed by extremely large sets in something of the same way I am. Robin Houston asked me to consider the ordinal number which is the least fixed point of the ℵ operation, that is, the smallest ordinal number κ such that $|\kappa| = \aleph_\kappa$. Another way to define this is as the limit of the sequence $0, \aleph_0, \aleph_{\aleph_0}, \ldots$. M. Houston describes κ as "large enough to be utterly mind-boggling, but not so huge as to defy comprehension altogether". I agree with the "utterly mind-boggling" part, anyway. And yet it has countable cofinality, as witnessed by the limiting sequence I just gave.

M. Houston says that Boolos uses κ as an example of a set that is so big that he cannot agree that it really exists. Set theory says that it does exist, but somewhere at or before that point, Boolos and set theory part ways. M. Houston says that a relevant essay, "Must we believe in set theory?" appears in Logic, Logic, and Logic. I'll have to check it out.

My own discomfort with uncountable sets is probably less nuanced, and certainly less well thought through. This is why I presented it as a fantasy, rather than as a claim or an argument. Just the sort of thing for a future blog post, although I suspect that I don't have anything to say about it that hasn't been said before, more than once.

Finally, a pseudonymous Reddit user brought up a paper of Coquand, Hancock, and Setzer that discusses just which ordinals are representable by the type defined above. The answer turns out to be all the ordinals less than $\omega^\omega$. But in Martin-Löf's type theory (about which more this month, I hope) you can actually represent up to $\varepsilon_0$. The paper is Ordinals in Type Theory and is linked from here.

Thanks to Charles Stewart, Robin Houston, Luke Palmer, Simon Tatham, Tim McKenzie, János Krámar, Vedran Čačić, and Reddit user "apfelmus" for discussing this with me.

[ Meta-addendum 20081130: My summary of Coquand, Hancock, and Setzer's results was utterly wrong. Thanks to Charles Stewart and Peter Hancock (one of the authors) for pointing this out to me. ]

One reader wondered what should be done about homophones of "infinity", while another observed that a start has already been made on "googol". These are just the sort of issues my proposed Institute is needed to investigate.

One clever reader pointed out that "half" has the homophone "have". Except that it's not really a homophone. Which is just right!

Take the curve y = 1/x for x ≥ 1. Revolve it around the x-axis, generating a trumpet-shaped surface, "Gabriel's Horn".
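For comparison with the snake picture, here are the horn's own calculations as a small Python sketch. It truncates the horn at some x_max, which is a parameter of mine. The volume uses the disc method and has the closed form π(1 − 1/x_max), which approaches π. For the area a lower bound is enough. The exact integrand 2π(1/x)·sqrt(1 + 1/x⁴) is larger than 2π/x, and the integral of 2π/x is 2π·ln(x_max), which grows without bound.

```python
import math

def horn_volume(x_max: float) -> float:
    """Volume of the horn between x = 1 and x = x_max (disc method).

    pi * integral from 1 to x_max of (1/x)**2 dx = pi * (1 - 1/x_max).
    """
    return math.pi * (1 - 1 / x_max)

def horn_area_lower_bound(x_max: float) -> float:
    """Lower bound on the horn's surface area between x = 1 and x = x_max.

    The exact area is 2*pi * integral of (1/x) * sqrt(1 + 1/x**4) dx; dropping
    the square root (which is greater than 1) leaves 2*pi * ln(x_max).
    """
    return 2 * math.pi * math.log(x_max)

for x_max in (10, 1e3, 1e6, 1e12):
    print(f"x_max={x_max:g}: volume {horn_volume(x_max):.6f}, "
          f"area > {horn_area_lower_bound(x_max):.1f}")
```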


```
data Nat = Z | S Nat

data Ordinal = Zero
             | Succ Ordinal
             | Lim (Nat → Ordinal)
```

In particular, I asked "What about Ω, the first uncountable ordinal?" Several readers pointed out that the answer to this is quite obvious: Suppose S is some countable sequence of (countable) ordinals. Then the limit of the sequence is a countable union of countable sets, and so is countable, and so is not Ω. Whoops! At least my intuition was in the right direction.

[Other articles in category /addenda] permanent link

Election results

Regardless of how you felt about the individual candidates in the recent American presidential election, and regardless of whether you live in the United States of America, I hope you can appreciate the deeply-felt sentiment that pervades this program:

```perl
#!/usr/bin/perl

# 1232470800 is 2009-01-20 17:00 UTC (noon EST, inauguration day)
my $remain = 1232470800 - time();
$remain > 0 or print("It's finally over.\n"), exit;

# Split the remaining seconds into (days, hours, minutes, seconds)
my @dur;
for (60, 60, 24, 100000) {
  unshift @dur, $remain % $_;
  $remain -= $dur[0];
  $remain /= $_;
}

my @time = qw(day days hour hours minute minutes second seconds);
my @s;
for (0 .. $#dur) {
  my $n = $dur[$_] or next;                  # skip zero components
  my $unit = $time[$_*2 + ($n != 1)];        # singular or plural
  $s[$_] = "$n $unit";
}
@s = grep defined, @s;
$s[-1] = "and $s[-1]" if @s > 2;
print join ", ", @s;
print "\n";
```

[Other articles in category /politics] permanent link

Mon, 03 Nov 2008

Atypical Typing

I just got back from Nashville, Tennessee, where I delivered a talk at OOPSLA 2008, my first talk as an "invited speaker". This post is a bunch of highly miscellaneous notes about the talk.

If you want to skip the notes and just read the talk, here it is.

Talk abstract

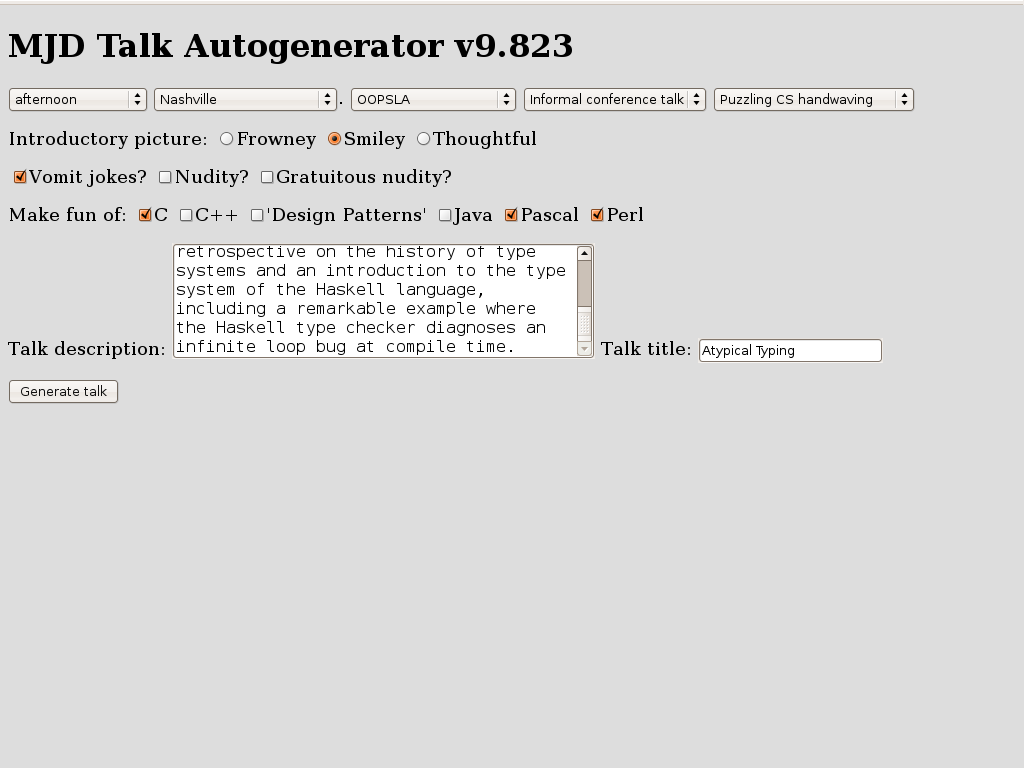

Many of the shortcomings of Java's type system were addressed by the addition of generics to Java 5.0. Java's generic types are a direct outgrowth of research into strong type systems in languages like SML and Haskell. But the powerful, expressive type systems of research languages like Haskell are capable of feats that exceed the dreams of programmers familiar only with mainstream languages. In this talk I'll give a brief retrospective on the history of type systems and an introduction to the type system of the Haskell language, including a remarkable example where the Haskell type checker diagnoses an infinite loop bug at compile time.

I did not say in the abstract that the talk was a retread of a talk I gave for the Perl Mongers in 1999 titled "Strong Typing Doesn't Have to Suck". Nobody wants to hear that. Still, the talk underwent a major rewrite, for all the obvious reasons.

In 1999, the claim that strong typing does not have to suck was surprising news, and particularly so to Perl Mongers. In 2008, however, this argument has been settled by Java 5, whose type system demonstrates pretty conclusively that strong typing doesn't have to suck. I am not saying that you must like it, and I am not saying that there is no room for improvement. Indeed, it's obvious that the Java 5 type system has room for improvement: if you take the SML type system of 15 years ago, and whack on it with a hammer until it's chipped and dinged all over, you get the Java 5 type system; the SML type system of the early 1990s is ipso facto an improvement. But that type system didn't suck, and neither does Java's.

So I took out the arguments about how static typing didn't have to suck, figuring that most of the OOPSLA audience was already sold on this point, and took a rather different theme: "Look, this ivory-tower geekery turned out to be important and useful, and its current incarnation may turn out to be important and useful in the same way and for the same reasons."

In 1999, I talked about Hindley-Milner type systems, and although it was far from clear at the time that mainstream languages would follow the path blazed by the HM languages, that was exactly what happened. So the HM languages, and Haskell in particular, contained some features of interest, and, had you known then how things would turn out, would have been worth looking at. But Haskell has continued to evolve, and perhaps it still is worth looking at.

Or maybe another way to put it: If the adoption of functional programming ideas into the mainstream took you by surprise, fair enough, because sometimes these things work out and sometimes they don't, and sometimes they get adopted and sometimes they don't. But if it happens again and takes you by surprise again, you're going to look like a dumbass. So start paying attention!

Haskell types are hard to explain

I spent most of the talk time running through some simple examples of Haskell's type inference algorithm, and finished with a really spectacular example that I first saw in a talk by Andrew R. Koenig at San Antonio USENIX where the type checker detects an infinite-loop bug in a sorting function at compile time. The goal of the 1999 talk was to explain enough of the ML type system that the audience would appreciate this spectacular example. The goal of the 2008 talk was the same, except I wanted to do the examples in Haskell, because Haskell is up-and-coming but ML is down-and-going.

It is a lot easier to explain ML's type system than it is to explain Haskell's. Partly it's because ML is simpler to begin with, but also it's because Haskell is so general and powerful that there are very few simple examples! For example, in SML one can demonstrate:

```
(* SML *)
3 : int;
3.5 : real;
```

which everyone can understand.

But in Haskell, 3 has the type (Num t) ⇒ t, and 3.5 has the type (Fractional t) ⇒ t. So you can't explain the types of literal numeric constants without first getting into type classes.

The benefit of this, of course, is that you can write 3 + 3.5 in Haskell, and it does the right thing, whereas in ML you get a type error. But it sure does make it a devil to explain.

Similarly, in SML you can demonstrate some simple monomorphic functions:

```
not   : bool → bool
real  : int → real
sqrt  : real → real
floor : real → int
```

Of these, only not is simple in Haskell:

```
not         :: Bool → Bool
fromInteger :: (Num a) ⇒ Integer → a    -- analogous to 'real'
sqrt        :: (Floating a) ⇒ a → a
floor       :: (RealFrac a, Integral b) ⇒ a → b
```

There are very few monomorphic functions in the Haskell standard prelude.

Slides

I'm still using the same slide-generation software I used in 1999, which makes me happy. It's a giant pile of horrible hacks, possibly the worst piece of software I've ever written. I'd like to hold it up as an example of "worse is better", but actually I think it only qualifies for "bad is good enough". I should write a blog article about this pile of hacks, just to document it for future generations.

Conference plenary sessions

This was the first "keynote session" I had been to at a conference in several years. One of the keynote speakers at a conference I attended was such a tedious, bloviating windbag that I walked out and swore I would never attend another conference plenary session. And I kept that promise until last week, when I had to attend, because now I was not only the bloviating windbag behind the lectern, but an oath-breaker to boot. This is the "shameful confession" alluded to on slide 3.

On the other hand...

One of the highest compliments I've ever received. It says "John McCarthy will be there. Mark Jason Dominus, too." Wow, I'm almost in the same paragraph with John McCarthy.

McCarthy didn't actually make it, unfortunately. But I did get to meet Richard Gabriel and Gregor Kiczales. And Daniel Weinreb, although I didn't know who he was before I met him. But now I'm glad I met Daniel Weinreb. During my talk I digressed to say that anyone who is appalled by Perl's regular expression syntax should take a look at Common Lisp's format feature, which is even more appalling, in much the same way. And Weinreb, who had been sitting in the front row and taking copious notes, announced "I wrote format!".

More explaining of jokes

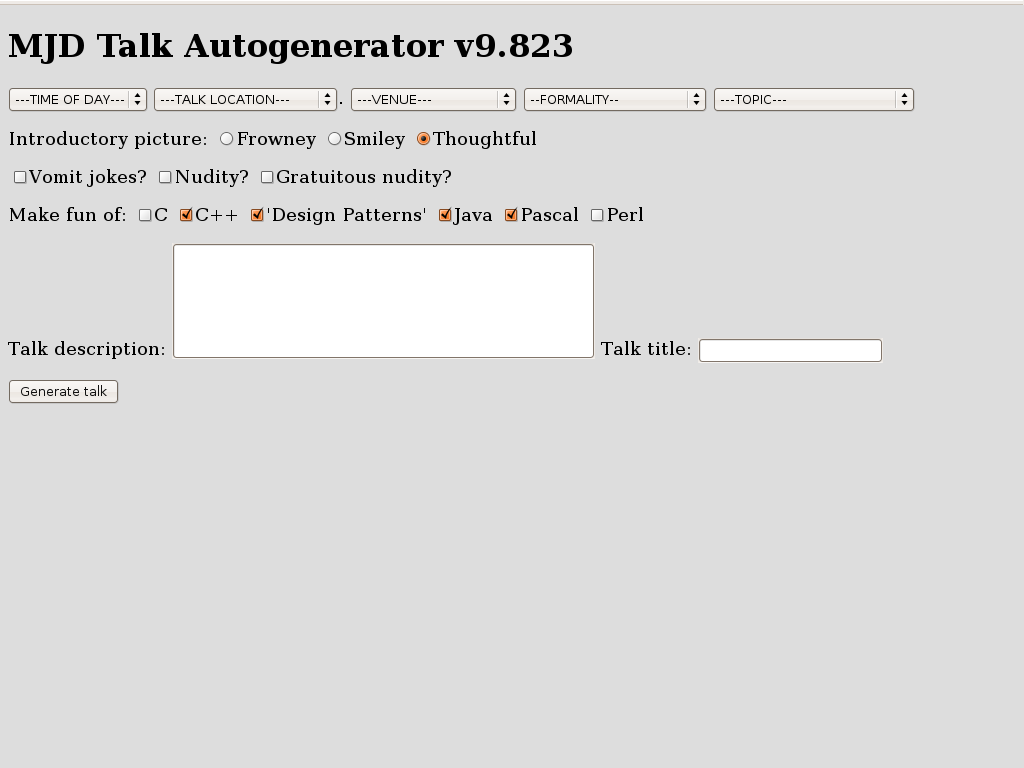

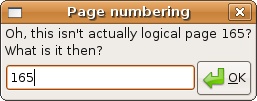

As I get better at giving conference talks, the online slides communicate less and less of the amusing part of the content. You might find it interesting to compare the 1999 version of this talk with the 2008 version.

One joke, however, is too amusing to leave out. At the start of the talk, I pretended to have forgotten my slides. "No problem," I said. "All my talks these days are generated automatically by the computer anyway. I'll just rebuild it from scratch." I then displayed this form, which initially looked like this:

Wadler's anecdote

I had the chance to talk to Philip Wadler, one of the designers of Haskell and of the Java generics system, before the talk. I asked him about the history of the generics feature, and he told me the following story. After he and Odersky had done the work on generics in their GJ and "Pizza" projects, Odersky was hired by Sun to write the new Java compiler. Odersky thought the generics were a good idea, so he put them into the compiler. At first the Sun folks always ran the compiler with the generics turned off. But they couldn't rip out the generics support completely, because they needed it in the compiler in order to get it to compile its own source code, which Odersky had written with generics. So Sun had to leave the feature in, and eventually they started using it, and eventually they decided they liked it. I related this story in the talk, but it didn't make it onto the slides, so I'm repeating it here.

I had never been to OOPSLA, so I also asked Wadler what the OOPSLA people would want to hear about. He mentioned STM, but since I don't know anything about STM I didn't say anything about it.

View it online

The slides are online.

[ Addendum 20081031: Thanks to Max Rabkin for pointing out that Haskell's analogue of real is fromInteger. I don't know why this didn't occur to me, since I mentioned it in the talk. Oh well. ]

[Other articles in category /talk] permanent link

Fri, 31 Oct 2008

A proposed correction to an inconsistency in English orthography

English contains exactly zero homophones of "zero", if one ignores the trivial homophone "zero", as is usually done.

English also contains exactly one homophone of "one", namely "won".

English does indeed contain two homophones of "two": "too" and "to".

However, the expected homophones of "three" are missing. I propose to rectify this inconsistency. This is sure to make English orthography more consistent and therefore easier for beginners to learn.

I suggest the following:

- thrie
- threigh
- thurry

I also suggest the founding of a well-funded institute with the following mission:

- Determine the meanings of these three new homophones

- Conduct a public education campaign to establish them in common use

- Lobby politicians to promote these new words by legislation, educational standards, public funding, or whatever other means are appropriate

- Investigate the obvious sequel issues: "four" has only "for" and "fore" as homophones; what should be done about this?

Happy Halloween. All Hail Discordia.

[ Addendum 20081106: Some readers inexplicably had nothing better to do than to respond to this ridiculous article. ]

[Other articles in category /lang] permanent link

Fri, 10 Oct 2008

Representing ordinal numbers in the computer and elsewhere

Lately I have been reading Andreas Abel's paper "A semantic analysis of structural recursion", because it was referred to by David Turner's 2004 paper on total functional programming.

The Turner paper is a must-read. It's about functional programming in languages where every program is guaranteed to terminate. This is more useful than it sounds at first.

Turner's initial point is that the presence of ⊥ values in languages like Haskell spoils one's ability to reason from the program specification. His basic example is simple:

```haskell
loop :: Integer -> Integer
loop x = 1 + loop x
```

Taking the function definition as an equation, we subtract (loop x) from both sides and get

0 = 1

which is wrong. The problem is that while subtracting (loop x) from both sides is valid reasoning over the integers, it's not valid over the Haskell Integer type, because Integer contains a ⊥ value for which that law doesn't hold: 1 ≠ 0, but 1 + ⊥ = 0 + ⊥.

Before you can use reasoning as simple and as familiar as subtracting an expression from both sides, you first have to prove that the value of the expression you're subtracting is not ⊥.

By banishing nonterminating functions, one also banishes ⊥ values, and familiar mathematical reasoning is rescued.

You also avoid a lot of confusing language design issues. The whole question of strictness vanishes, because strictness is solely a matter of what a function does when its argument is ⊥, and now there is no ⊥. Lazy evaluation and strict evaluation come to the same thing. You don't have to wonder whether the logical-or operator is strict in its first argument, or its second argument, or both, or neither, because it comes to the same thing regardless.

The drawback, of course, is that if you do this, your language is no longer Turing-complete. But that turns out to be less of a problem in practice than one would expect.

The paper was so interesting that I am following up several of its precursor papers, including Abel's paper, about which the Turner paper says "The problem of writing a decision procedure to recognise structural recursion in a typed lambda calculus with case-expressions and recursive, sum and product types is solved in the thesis of Andreas Abel." And indeed it is.

But none of that is what I was planning to discuss. Rather, Abel introduces a representation for ordinal numbers that I hadn't thought much about before.

I will work up to the ordinals via an intermediate example. Abel introduces a type Nat of natural numbers:

Nat = 1 ⊕ Nat

The "1" here is not the number 1, but rather a base type that contains only one element, like Haskell's () type or ML's unit type. For concreteness, I'll write the single value of this type as '•'.

The ⊕ operator is the disjoint sum operator for types. The elements of the type S ⊕ T have one of two forms. They are either left(s) where s∈S or right(t) where t∈T. So 1⊕1 is a type with exactly two values: left(•) and right(•).

The values of Nat are therefore left(•), and right(n) for any element n of Nat. So left(•), right(left(•)), right(right(left(•))), and so on. One can get a more familiar notation by defining:

| 0 | = | left(•) |

| Succ(n) | = | right(n) |
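Here is a minimal Python sketch of this encoding. It models left(·) and right(·) as two small tagged wrapper classes and uses a string for the unit value •. The names Left, Right, UNIT, succ, nat_from_int, and nat_to_int are mine, not Abel's.

```python
from dataclasses import dataclass
from typing import Any

UNIT = "•"  # stands in for the single value of the one-element type 1

@dataclass(frozen=True)
class Left:
    """The left injection into a disjoint sum S ⊕ T."""
    value: Any

@dataclass(frozen=True)
class Right:
    """The right injection into a disjoint sum S ⊕ T."""
    value: Any

ZERO = Left(UNIT)  # 0 = left(•)

def succ(n):
    """Succ(n) = right(n)."""
    return Right(n)

def nat_from_int(k: int):
    """Build the Nat value for a non-negative Python int."""
    n = ZERO
    for _ in range(k):
        n = succ(n)
    return n

def nat_to_int(n) -> int:
    """Count the right(...) wrappers around left(•)."""
    count = 0
    while isinstance(n, Right):
        n = n.value
        count += 1
    return count
```

For example, nat_from_int(2) builds right(right(left(•))), and nat_to_int undoes the construction.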

So much for the natural numbers. Abel then defines a type of ordinal numbers, as:

Ord = (1 ⊕ Ord) ⊕ (Nat → Ord)

In this scheme, an ordinal is either left(left(•)), which represents 0, or left(right(n)), which represents the successor of the ordinal n, or right(f), which represents the limit ordinal of the range of the function f, whose type is Nat → Ord.

We can define abbreviations:

| Zero | = | left(left(•)) |

| Succ(n) | = | left(right(n)) |

| Lim(f) | = | right(f) |

If we define

```
id :: Nat → Ord
id 0 = Zero
id (n + 1) = Succ(id n)
```

then ω = Lim(id). We easily get ω+1 = Succ(ω), etc., and the limit of this function is ω·2:

```
plusomega :: Nat → Ord
plusomega 0 = Lim(id)
plusomega (n + 1) = Succ(plusomega n)
```

We can define an addition function on ordinals:

```
+ :: Ord → Ord → Ord
ord + Zero = ord
ord + Succ(n) = Succ(ord + n)
ord + Lim(f) = Lim(λx. ord + f(x))
```
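Here is a rough Python rendering of Zero, Succ, Lim, and the addition above. It assumes named classes in place of the nested left/right tags and models Nat as a plain non-negative int. The names embed (the text's id, renamed because Python already has a builtin id), OMEGA, add, and to_int are mine. A Lim value holds a Python function, so the sketch can only inspect finitely many terms of a sequence. It makes no attempt to decide whether two representations denote the same ordinal.

```python
from dataclasses import dataclass
from typing import Callable, Union

@dataclass(frozen=True)
class Zero:
    """The ordinal 0, i.e. left(left(•))."""

@dataclass(frozen=True)
class Succ:
    """Succ(n), i.e. left(right(n))."""
    pred: "Ord"

@dataclass(frozen=True)
class Lim:
    """Lim(f), i.e. right(f): the limit of f(0), f(1), f(2), ..."""
    f: Callable[[int], "Ord"]

Ord = Union[Zero, Succ, Lim]

def embed(n: int) -> Ord:
    """The text's id : Nat → Ord, with Nat modelled as a non-negative int."""
    o: Ord = Zero()
    for _ in range(n):
        o = Succ(o)
    return o

OMEGA = Lim(embed)  # ω = Lim(id)

def add(a: Ord, b: Ord) -> Ord:
    """Ordinal addition, by recursion on the right-hand argument as above."""
    if isinstance(b, Zero):
        return a
    if isinstance(b, Succ):
        return Succ(add(a, b.pred))
    return Lim(lambda x: add(a, b.f(x)))

def to_int(o: Ord) -> int:
    """Convert a finite ordinal (Succ chain ending in Zero) back to an int."""
    count = 0
    while isinstance(o, Succ):
        o = o.pred
        count += 1
    if not isinstance(o, Zero):
        raise ValueError("not a finite ordinal")
    return count

omega_plus_one = add(OMEGA, embed(1))   # Succ(ω): a successor ordinal
one_plus_omega = add(embed(1), OMEGA)   # Lim of 1, 2, 3, ...: same limit as ω
omega_times_two = add(OMEGA, OMEGA)     # Lim(λx. ω + id(x)) = ω·2

print([to_int(one_plus_omega.f(k)) for k in range(5)])  # [1, 2, 3, 4, 5]
```

The contrast between omega_plus_one and one_plus_omega is ordinal addition's non-commutativity showing through. The value 1 + ω is a limit of the sequence 1, 2, 3, ..., which has the same limit as ω. In contrast, ω + 1 is the successor of ω.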

This gets us another way to make ω·2: ω·2 = ω + Lim(id) = Lim(λx. ω + id(x)).

Then this function multiplies a Nat by ω:

```
timesomega :: Nat → Ord
timesomega 0 = Zero
timesomega (n + 1) = ω + (timesomega n)
```

and Lim(timesomega) is $\omega^2$. We can go on like this.

But here's what puzzled me. The ordinals are really, really big. Much too big to be a set in most set theories. And even the countable ordinals are really, really big. We often think we have a handle on uncountable sets, because our canonical example is the real numbers, and real numbers are just decimal numbers, which seem simple enough. But the set of countable ordinals is full of weird monsters, enough to convince me that uncountable sets are much harder than most people suppose.

So when I saw that Abel wanted to define an arbitrary ordinal as a limit of a countable sequence of ordinals, I was puzzled. Can you really get every ordinal as the limit of a countable sequence of ordinals? What about Ω, the first uncountable ordinal?

Well, maybe. I can't think of any reason why not. But it still doesn't seem right. It is a very weird sequence, and one that you cannot write down. Because suppose you had a notation for all the ordinals that you would need. But because it is a notation, the set of things it can denote is countable, and so a fortiori the limit of all the ordinals that it can denote is a countable ordinal, not Ω.

And it's all very well to say that the sequence starts out $(0, \omega, \omega\cdot 2, \omega^2, \omega^\omega, \varepsilon_0, \varepsilon_1, \varepsilon_{\varepsilon_0}, \ldots)$, or whatever, but the beginning of the sequence is totally unimportant; what is important is the end, and we have no way to write the end or to even comprehend what it looks like.

So my question to set theory experts: is every limit ordinal the least upper bound of some countable sequence of ordinals?

I hate uncountable sets, and I have a fantasy that in the mathematics of the 23rd Century, uncountable sets will be looked back upon as a philosophical confusion of earlier times, like Zeno's paradox, or the luminiferous aether.

[ Addendum 20081106: Not every limit ordinal is the least upper bound of some countable sequence of (countable) ordinals, and my guess that Ω is not was correct, but the proof is so simple that I was quite embarrassed to have missed it. More details here. ]

[ Addendum 20160716: In the 8 years since I wrote this article, the link to Turner's paper at Middlesex has expired. Fortunately, Miëtek Bak has taken it upon himself to create an archive containing this paper and a number of papers on related topics. Thank you, M. Bak! ]

[Other articles in category /math] permanent link

Wed, 01 Oct 2008

The Lake Wobegon Distribution

Michael Lugo mentioned a while back that most distributions are normal. He does not, of course, believe any such silly thing, so please do not rush to correct him (or me). But the remark reminded me of how many people do seem to believe that most distributions are normal.

More than once on internet mailing lists I have encountered people who ridiculed others for asserting that "nearly all x are above [or below] average". This is a recurring joke on Prairie Home Companion, broadcast from the fictional town of Lake Wobegon, where "all the women are strong, all the men are good looking, and all the children are above average." And indeed, they can't all be above average. But they could nearly all be above average. And this is actually an extremely common situation.

To take my favorite example: nearly everyone has an above-average number of legs. I wish I could remember who first brought this to my attention. James Kushner, perhaps?

But the world abounds with less droll examples. Consider a typical corporation. Probably most of the employees make a below-average salary. Or, more concretely, consider a small company with ten employees. Nine of them are paid $40,000 each, and one is the owner, who is paid $400,000. The average salary is $76,000, and 90% of the employees' salaries are below average.
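The salary example is easy to check. This short Python sketch uses the standard library's statistics.mean; the helper name share_below_mean is mine. It computes the mean and the fraction of values strictly below it for the ten-employee company in the paragraph.

```python
from statistics import mean

def share_below_mean(values):
    """Return (mean, fraction of values strictly below the mean)."""
    avg = mean(values)
    below = sum(1 for v in values if v < avg)
    return avg, below / len(values)

salaries = [40_000] * 9 + [400_000]  # the ten-employee company from the text
avg, share = share_below_mean(salaries)
print(f"mean salary {avg}, share below mean {share:.0%}")
```

A single large value drags the mean above almost everyone else, which is the whole trick.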

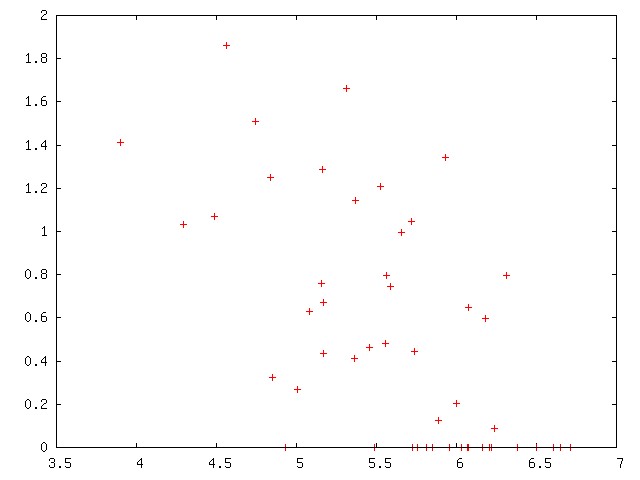

The situation is familiar to people interested in baseball statistics because, for example, most baseball players are below average. Using Sean Lahman's database, I find that 588 players received at least one at-bat in the 2006 National League. These 588 players collected a total of 23,501 hits in 88,844 at-bats, for a collective batting average of .265. Of these 588, only 182 had an individual batting average higher than .265. 69% of the baseball players in the 2006 National League were below-average hitters. If you throw out the players with fewer than 10 at-bats, you are left with 432 players of whom 279, or 65%, hit worse than their collective average of 23430/88325 = .265. Other statistics, such as earned-run averages, are similarly skewed.
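The percentages follow directly from the counts quoted in the paragraph. This snippet just redoes that arithmetic in Python. The counts are taken from the text and are not recomputed from Lahman's database.

```python
# Counts quoted above for the 2006 National League.
hits, at_bats = 23_501, 88_844
players, above_average = 588, 182

collective_average = hits / at_bats
share_below = (players - above_average) / players

# The same check after dropping players with fewer than 10 at-bats.
hits_10, at_bats_10 = 23_430, 88_325
players_10, below_10 = 432, 279

print(f"all players:  average {collective_average:.3f}, below {share_below:.0%}")
print(f"10+ at-bats:  average {hits_10 / at_bats_10:.3f}, below {below_10 / players_10:.0%}")
```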

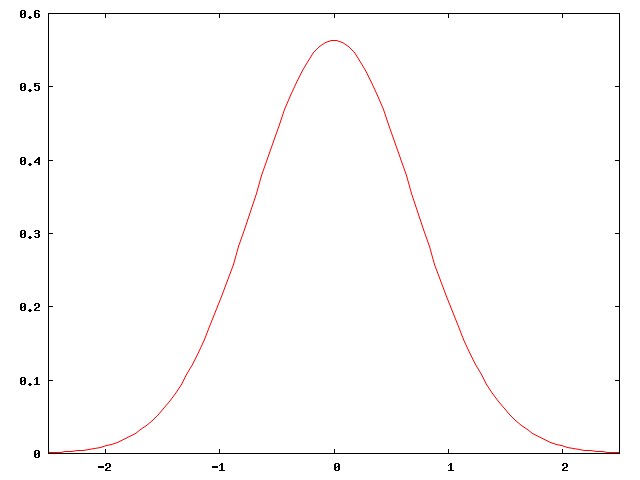

The reason for this is not hard to see. Baseball-hitting talent in the general population is normally distributed, like this:

But major-league baseball players are not the general population. They are carefully selected, among the best of the best. They are all chosen from the right-hand edge of the normal curve. The people in the middle of the normal curve, people like me, play baseball in Clark Park, not in Quankee Stadium.

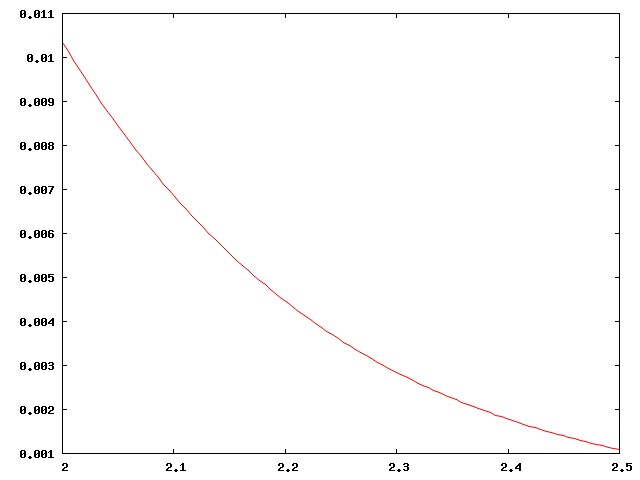

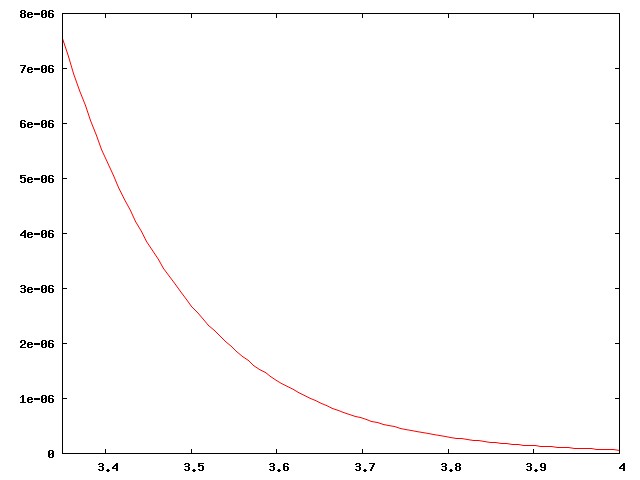

Here's the right-hand corner of the curve above, highly magnified:

Actually I didn't present the case strongly enough. There are around 800 regular major-league ballplayers in the USA, drawn from a population of around 300 million, a ratio of one per 375,000. Well, no, the ratio is smaller, since the U.S. leagues also draw the best players from Mexico, Venezuela, Canada, the Dominican Republic, Japan, and elsewhere. The curve above is much too inclusive. The real curve for major-league ballplayers looks more like this:

This has important implications for the analysis of baseball. A player who is "merely" above average is a rare and precious resource, to be cherished; far more players are below average. Skilled analysts know that comparisons with the "average" player are misleading, because baseball is full of useful, effective players who are below average. Instead, analysts compare players to a hypothetical "replacement level", which is effectively the leftmost edge of the curve, the level at which a player can be easily replaced by one of those kids from triple-A ball.

In the Historical Baseball Abstract, Bill James describes some great team, I think one of the Cincinnati Big Red Machine teams of the mid-1970s, as "possibly the only team in history that was above average at every position". That's an important thing to know about the sport, and about team sports in general: you don't need great players to completely clobber the opposition; it suffices to have players that are merely above average. But if you're the coach, you'd better learn to make do with a bunch of players who are below average, because that's what you have, and that's what the other team will beat you with.

The right-skewedness of the right side of a normal distribution has implications that are important outside of baseball. Stephen Jay Gould wrote an essay, "The Median Isn't the Message", about how he was diagnosed with abdominal mesothelioma and learned that the median survival after diagnosis was only eight months. This sounds awful, and it is awful. But survival times for that cancer were strongly right-skewed: half of the patients lived longer than eight months, and a few of them lived for years. Gould realized this, and then set about trying to find out how the long-lived outliers survived and what he could do to turn himself into one of the long-lived freaks. And he succeeded, and lived for twenty years, dying eventually at age 60.

My heavens, I just realized that what I've written is an article about the "long tail". I had no idea I was being so trendy. Sorry, everyone.

[Other articles in category /math] permanent link

Fri, 26 Sep 2008

Sprague-Grundy theory

I'm on a small mailing list for math geeks, and there's this one guy there, Richard Penn, who knows everything. Whenever I come up with some idle speculation, he has the answer. For example, back in 2003 I asked:

Let N be any positive integer. Does there necessarily exist a positive integer k such that the base-10 representation of kN contains only the digits 0 through 4?

M. Penn was right there with the answer.

Yesterday, M. Penn asked a question to which I happened to know the answer, and I was so pleased that I wrote up the whole theory in appalling detail. Since I haven't posted a math article in a while, and since the mailing list only has about twelve people on it, I thought I would squeeze a little more value out of it by posting it here.

Richard Penn asked:

N dots are placed in a circle. Players alternate moves, where a move consists of crossing out any one of the remaining dots, and the dots on each side of it (if they remain). The winner is the player who crosses out the last dot. What is the optimal strategy with 19 dots? with 20? Can you generalize?

M. Penn observed that there is a simple strategy for the 20-dot circle, but was not able to find one for the 19-dot circle. But solving such problems in general is made easy by the Sprague-Grundy theory, which I will explain in detail.

0. Short Spoilers

Both positions are wins for the second player.

The 20-dot case is trivial, since any first-player move leaves a row of 17 dots, from which the second player can leave two disconnected rows of 7 dots each. Then any first-player move in one of these rows can be effectively answered by the second player in the other row.

The 19-dot case is harder. The first player's move leaves a row of 16 dots. The second player can win by removing 3 dots to leave disconnected rows of 6 and 7 dots. After this, the strategy is complicated, but is easily found by the Sprague-Grundy theory. It's at the end of this article if you want to skip ahead.

Sprague-Grundy theory is a complete theory of all finite impartial games, which are games like this one where the two players have exactly the same moves from every position.

The theory says:

- Every such game position has a "value", which is a non-negative integer.

- A position is a second-player win if and only if its value is zero.

- The value of a position can be calculated from the values of the positions to which the players can move, in a simple way.

- The value of a collection of disjoint positions (such as two disconnected rows of dots) can be calculated from the values of its component positions in a simple way.


1. Nim

In the game of Nim, one has some piles of beans, and a legal move is to remove some or all of the beans from any one pile. The winner is the player who takes the last bean. Equivalently, the winner is the last player who has a legal move.

Nim is important because every position in every impartial game is somehow equivalent to a position in Nim, as we will see. In fact, every position in every impartial game is equivalent to a Nim position with at most one heap of beans! Since single Nim-heaps are trivially analyzed, one can completely analyze any impartial game position by calculating the Nim-heap to which it is equivalent.

2. Disjoint sums of games

Definition: The "disjoint sum" A # B of two games A and B is a new game whose rules are as follows: a legal move in A # B is either a move in A or a move in B; the winner is the last player with a legal move.

Three easy exercises:

- # is commutative.

- # is associative.

- Let (a,b,c...) represent the Nim position with heaps a, b, c, etc. Then the game (a,b,c,...) is precisely (a) # (b) # (c) # ... .

Let 0 denote the game in which there are no legal moves at all. Then

0 # a = a # 0 = a for all games a. 0 is a win for the previous player: the next player to move has no legal moves, and loses.

We will call the next player to move "P1", and the player who just moved "P2".

Note that a Nim-heap of 0 beans is precisely the 0 game.
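To make the definition of # concrete, here is a minimal Haskell sketch. It assumes a representation that is not in the original: a position is just the list of positions the player to move can reach in one move. That list is enough because both players have the same options. The names `Game`, `zero`, `heap`, and `p1Wins` are hypothetical. The search is exhaustive and exponential, so it is only suitable for tiny positions.

```haskell
-- A finite impartial game position: the positions reachable in one move.
newtype Game = Game [Game]

-- The game 0: no legal moves at all.
zero :: Game
zero = Game []

-- Disjoint sum A # B: a legal move is a move in A or a move in B.
(#) :: Game -> Game -> Game
a@(Game xs) # b@(Game ys) =
  Game ([x # b | x <- xs] ++ [a # y | y <- ys])

-- A single Nim-heap of n beans: one may move to any smaller heap.
heap :: Int -> Game
heap n = Game [heap k | k <- [0 .. n - 1]]

-- P1 (the player to move) wins exactly when some option is a position
-- from which the player then to move loses.
p1Wins :: Game -> Bool
p1Wins (Game options) = any (not . p1Wins) options
```

For instance, `p1Wins (heap 1 # heap 1)` is `False` and `p1Wins (heap 2 # heap 1)` is `True`, matching the claims about ∗1 # ∗1 and ∗2 # ∗1 in the next section.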

3. Sums of Nim-heaps

We usually represent a single Nim-heap with n beans as "∗n". I'll do that from now on.

We observed that ∗0 is a win for the second player. Observe now that when n is positive, ∗n is a win for the first player, by the trivial strategy of taking the whole heap.

From now on we will use the symbol "=" to mean a weaker relation on games than strict equality. Two games A and B will be equivalent if their outcomes are the same in a rather strong sense:

A = B means that for any game X, A # X is a winning position if and only if B # X is also.

Taking X = 0, the condition A = B implies that both games have the same outcome in isolation: if one is a first-player win, so is the other. But the condition is stronger than that. Both ∗1 and ∗2 are first-player wins, but ∗1 ≠ ∗2, because ∗1 # ∗1 is a second-player win, while ∗2 # ∗1 is a first-player win.

Exercise: ∗x = ∗y if and only if x = y.

It so happens that the disjoint sum of two Nim-heaps is equivalent to a single Nim-heap:

Nim-sum theorem: ∗a # ∗b = ∗(a ⊕ b), where ⊕ is the bitwise exclusive-or operation.

I'll omit the proof, which is pretty easy to find. ⊕ is often described as "write a and b in binary, and add, ignoring all carries." For example 1 ⊕ 2 = 3, and 13 ⊕ 7 = 10. This implies that ∗1 # ∗2 = ∗3, and that ∗13 # ∗7 = ∗10.

Although I omitted the proof that # for Nim-heaps is essentially the ⊕ operation in disguise, there are many natural implications of this that you can use to verify that the claim is plausible. For example:

- The Nim-sum theorem implies that ∗0 is a neutral element for #, which we already knew.

- Since a ⊕ a = 0, we have:

∗a # ∗a = ∗0 for all a

That is, ∗a # ∗a is a win for P2. And indeed, P2 has an obvious strategy: whatever P1 does in one pile, P2 does in the other pile. P2 never runs out of legal moves until after P1 does, and so must win.

- Since a ⊕ a = 0, we have, more generally:

∗a # ∗a # X = X for all a, X

No matter what X is, its outcome is the same as that of ∗a # ∗a # X. Why?

Suppose you are the player with a winning strategy for playing X alone. Then it is easy to see that you have a winning strategy in ∗a # ∗a # X, as follows: ignore the ∗a # ∗a component, until your opponent moves in it, when you should copy their move in the other half of that component. Eventually the ∗a # ∗a part will be used up (that is, reduced to ∗0 # ∗0 = 0) and your opponent will be forced to move in X, whereupon you can continue your winning strategy there until you win.

- According to the ⊕ operation, ∗1 # ∗2 = ∗3, and so ∗1 # ∗2 # ∗3 = ∗3 # ∗3 = 0, so P2 should have a winning strategy in ∗1 # ∗2 # ∗3. Which he does: If P1 removes any entire heap, P2 can win by equalizing the remaining heaps, leaving ∗1 # ∗1 = 0 or ∗2 # ∗2 = 0, which he wins easily. If P1 equalizes any two heaps, P2 can remove the third heap, winning the same way.

- Let's reconsider the game of the previous paragraph, but change the ∗1 to something else. If x ≠ 1, then 2 ⊕ 3 ⊕ x > 0, so ∗2 # ∗3 # ∗x = ∗y, where y > 0. Since ∗y is a single nonempty Nim-heap, it is obviously a win for P1, and so ∗2 # ∗3 # ∗x, which is equivalent to it, should also be a win for P1.

What is P1's winning strategy in ∗2 # ∗3 # ∗x? It's easy. If x > 1, then P1 can reduce ∗x to ∗1, leaving ∗2 # ∗3 # ∗1, which we saw is a win for the player who moves there. And if x = 0, then P1 can move to ∗2 # ∗2 and win.
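These plausibility checks can also be run mechanically. The brute-force sketch below plays out every Nim position with three small heaps by exhaustive search, without using ⊕ at all. It then compares each outcome with the Nim-sum prediction: a position should be a second-player win exactly when its Nim-sum is 0. The names `nimSum`, `nimMoves`, `toMoveWins`, and `agreesWithNimSum` are made up for illustration.

```haskell
import Data.Bits (xor)

-- Nim-sum: bitwise exclusive-or of all the heap sizes.
nimSum :: [Int] -> Int
nimSum = foldr xor 0

-- Every position reachable in one move: shrink exactly one heap.
nimMoves :: [Int] -> [[Int]]
nimMoves hs =
  [ before ++ [k] ++ after
  | i <- [0 .. length hs - 1]
  , (before, h : after) <- [splitAt i hs]
  , k <- [0 .. h - 1]
  ]

-- Exhaustive search: the player to move wins iff some move reaches
-- a position where the next player to move loses.
toMoveWins :: [Int] -> Bool
toMoveWins hs = any (not . toMoveWins) (nimMoves hs)

-- Compare brute force with the Nim-sum prediction for all three-heap
-- positions whose heaps hold at most `limit` beans.
agreesWithNimSum :: Int -> Bool
agreesWithNimSum limit =
  and [ toMoveWins [a, b, c] == (nimSum [a, b, c] /= 0)
      | a <- [0 .. limit], b <- [0 .. limit], c <- [0 .. limit] ]
```

`agreesWithNimSum 3` checks all 64 positions with heaps of at most 3 beans. The search is exponential, so larger limits get slow quickly. This is evidence for the theorem, not a proof of it.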

4. The MEX rule

The important thing about disjoint sums is that they abstract away the strategy. If you have some complicated set of Nim-heaps ∗a # ∗b # ... # ∗z, you can ignore them and pretend instead that they are a single heap ∗(a ⊕ b ⊕ ... ⊕ z). Your best move in the compound heap can be easily worked out from the corresponding best move in the fictitious single heap.

For example, how do you figure out how to play in ∗2 # ∗3 # ∗x? You consider it as (∗2 # ∗3) # ∗x = ∗1 # ∗x. That is, you pretend that the ∗2 and the ∗3 are actually a single heap of size 1. Then your strategy is to win in ∗1 # ∗x, which you obviously do by reducing ∗x to size 1, or, if ∗x is already ∗0, by changing ∗1 to ∗0.

Now, that is very facile, but ∗2 # ∗3 is not the same game as ∗1, because from ∗1 there is just one legal move, which is to ∗0. Whereas from ∗2 # ∗3 there are several moves. It might seem that your opponent could complicate the situation, say by moving from ∗2 # ∗3 to ∗3, which she could not do if it were really ∗1.

But actually this extra option can't possibly help your opponent, because you have an easy response to that move, which is to move right back to ∗1! If pretending that ∗2 # ∗3 was ∗1 was good before, it is certainly good after you make it ∗1 for real.

From ∗2 # ∗3 there are a whole bunch of moves:

- Move to ∗3

- Move to ∗2

- Move to ∗1 # ∗3 = ∗2

- Move to ∗2 # ∗1 = ∗3

- Move to ∗2 # ∗2 = ∗0

But you can disregard the first four of these, because they are reversible: if some player X has a winning strategy that works by pretending that ∗2 # ∗3 is identical with ∗1, then the extra options of moving to ∗2 and ∗3 won't help X's opponent, because X can reverse those moves and turn the ∗2 # ∗3 component back into ∗1. So we can ignore these options, and say that there's just one move from ∗2 # ∗3 worth considering further, namely to ∗2 # ∗2 = 0. Since this is exactly the same set of moves that is available from ∗1, ∗2 # ∗3 behaves just like ∗1 in all situations, and we have just proved that ∗2 # ∗3 = ∗1.

Unlike the other moves, the move from ∗2 # ∗3 to ∗0 is not reversible. Once someone turns ∗2 # ∗3 into ∗0, by equalizing the piles, it cannot then be turned back into ∗1, or anything else.

Considering this in more generality, suppose we have some game position P where the options are to move to one of several possible Nim-heaps, and ∗M is the smallest Nim-heap that is not among the options. (M is called the MEX, short for "minimum excludant", of the option values.) Then P = ∗M. Why? Because P has just the same options that ∗M has, namely the options of moving to one of ∗0 ... ∗(M-1). P also has some extra options, but we can ignore these because they're reversible. If you have a winning strategy in X # ∗M, then you have a winning strategy in X # P also, as follows:

- If your opponent plays in X, then follow your strategy for X # ∗M, since the same move will also be available in X # P.

- If your opponent makes P into ∗y, with y < M, then they've discarded their extra options, which are now irrelevant; play as you would if they had moved from X # ∗M to X # ∗y.

- If your opponent makes P into ∗y, with y > M, then just move from ∗y to ∗M, leaving X # ∗M, which you can win.
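The MEX rule is easy to put into code. In this sketch (the function names are hypothetical), `mex` computes the minimum excludant of a finite list. `sumValue` uses nothing but the MEX rule to compute the Nim-value of ∗a # ∗b from the values of its options. Comparing its results with bitwise exclusive-or is a small consistency check on the Nim-sum theorem, not a proof.

```haskell
import Data.Bits (xor)

-- Minimum excludant: the smallest non-negative integer not in the list.
-- The list must be finite, or the search may never finish.
mex :: [Int] -> Int
mex xs = head [m | m <- [0 ..], m `notElem` xs]

-- Nim-value of *a # *b computed purely by the MEX rule: the options are to
-- shrink the first heap or the second, and their values come from recursion.
sumValue :: Int -> Int -> Int
sumValue a b =
  mex ([sumValue k b | k <- [0 .. a - 1]] ++ [sumValue a k | k <- [0 .. b - 1]])

-- Does the MEX rule agree with exclusive-or for all heaps up to `limit`?
mexMatchesXor :: Int -> Bool
mexMatchesXor limit =
  and [sumValue a b == a `xor` b | a <- [0 .. limit], b <- [0 .. limit]]
```

For ∗2 # ∗3 the option values are 3, 2, 2, 3, and 0, and `mex [3, 2, 2, 3, 0]` is 1, as in the argument above. Note that `mex` cannot be applied to the infinite option set of the ♦ token below, which is why the list has to be finite.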

For example, let's consider what happens if we augment Nim by adding a special token, called ♦. A player may, in lieu of a regular move, replace ♦ by a pile of beans of any positive size. What effect does this have on Nim?

Since the legal moves from ♦ are {∗1, ∗2, ∗3, ...} and the MEX is 0, ♦ should behave like ∗0. That is, adding a ♦ token to any position should leave the outcome unaffected. And indeed it does. If you have a winning strategy in game G, then you have a winning strategy in G # ♦ also, as follows: If your opponent plays in G, reply in G. If your opponent replaces ♦ with a pile of beans, remove it, leaving only G.

Exercise: Let G be a game where all the legal moves are to Nim-heaps. Then G is a win for P1 if and only if one of the legal moves from G is to ∗0, and a win for P2 if and only if none of the legal moves from G is to ∗0.

5. The Sprague-Grundy theory

An "impartial game" is one where both players have the same moves from every position.

Sprague-Grundy theorem: Any finite impartial game is equivalent to some Nim-heap ∗n, which is the "Nim-value" of the game.

Now let's consider Richard Penn's game, which is impartial. A legal move is to cross out any dot, and the adjacent dot or dots, if any.

The Sprague-Grundy theorem says that every row of dots in Penn's game is equivalent to some Nim-heap. Let's tabulate the size of this heap (the Nim-value) for each row of n dots. We'll represent a row of n dots as [οοοοο...ο]. Obviously, [] = ∗0 so the Nim-value of [] is 0. Also obviously, [ο] = ∗1, since they're exactly the same game.

[οο] = ∗1 also, since the only legal move from [οο] is to [] = 0, and the MEX of {0} is 1.

The legal moves from [οοο] are to [] = ∗0 and [ο] = ∗1, so {∗0, ∗1}, and the MEX is 2. So [οοο] = ∗2.

Let's check that this is working. Since the Nim-value of [οοο] is 2, the theory predicts that [οοο] # ∗2 = 0 and so should be a win for P2. P2 should be able to pretend that [οοο] is actually ∗2.

Suppose P1 turns the ∗2 into ∗1, moving to [οοο] # ∗1. Then P2 should turn [οοο] into ∗1 also, which he can do by crossing out an end dot and the adjacent one, leaving [ο] # ∗1, which he easily wins. If P1 turns ∗2 into ∗0, moving to [οοο] # ∗0, then P2 should turn [οοο] into ∗0 also, which he can do by crossing out the middle and adjacent dots, leaving [] # ∗0, which he wins immediately.

If P1 plays in the [οοο] component, she must move to [] or to [ο], each equivalent to some Nim-heap of size x < 2, and P2 can answer by reducing the true Nim-heap ∗2 to contain x beans also.

Continuing our analysis of rows of dots: In Penn's game, the legal moves from [οοοο] are to [οο] and [ο]. Both of these have Nim-value 1, so the MEX is 0.

Easy exercise: Since [οοοο] is supposedly equivalent to ∗0, you should be able to show that a player who has a winning strategy in some game G also has a winning strategy in G # [οοοο].

The legal moves from [οοοοο] are to [οοο], [οο], and [ο] # [ο]. The Nim-values of these three games are 2, 1, and 0 respectively, so the MEX is 3 and [οοοοο] = ∗3.

The legal moves from [οοοοοο] are to [οοοο], [οοο], and [ο] # [οο]. The Nim-values of these three games are 0, 2, and 0, so [οοοοοο] = ∗1.
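Continuing this calculation by hand gets tedious, but the MEX recursion for rows of dots takes only a few lines of Haskell. This sketch rests on one reading of the rules as stated: crossing out dot i of a row of n dots (numbered from 1) also removes dots i-1 and i+1 where they exist, leaving rows of i-2 and n-i-1 dots. The names `moves`, `mex`, and `nimValues` are illustrative.

```haskell
import Data.Bits (xor)

-- Crossing out dot i (1..n) and its neighbours leaves a left row of i-2
-- dots and a right row of n-i-1 dots; a negative count means that side
-- is empty.
moves :: Int -> [(Int, Int)]
moves n = [(max 0 (i - 2), max 0 (n - i - 1)) | i <- [1 .. n]]

-- Minimum excludant of a finite list.
mex :: [Int] -> Int
mex xs = head [m | m <- [0 ..], m `notElem` xs]

-- Nim-values of rows of 0, 1, 2, ... dots. Each entry depends only on
-- earlier entries of the same shared list, so laziness memoizes them.
-- A split position's value is the Nim-sum (xor) of its two rows.
nimValues :: [Int]
nimValues = map value [0 ..]
  where
    value n = mex [(nimValues !! a) `xor` (nimValues !! b) | (a, b) <- moves n]
```

`take 21 nimValues` gives the values for rows of 0 through 20 dots. The first seven agree with the hand calculations above: 0, 1, 1, 2, 0, 3, 1.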

6. Richard Penn's game analyzed

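Continuing the MEX calculation in the same way gives the Nim-value of a row of n dots:

| Dots in row | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Nim-value | 0 | 1 | 1 | 2 | 0 | 3 | 1 | 1 | 0 | 3 | 3 |

| Dots in row | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 |
|---|---|---|---|---|---|---|---|---|---|---|
| Nim-value | 2 | 2 | 4 | 0 | 5 | 2 | 2 | 3 | 3 | 0 |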

The table says that a row of 19 dots should be a win for P1, if she reduces the Nim-value from 3 to 0. And indeed, P1 has an easy winning strategy, which is to cross out the 3 dots in the middle of the row, replacing [οοοοοοοοοοοοοοοοοοο] with [οοοοοοοο] # [οοοοοοοο]. But no such easy strategy obtains in a row of 20 dots, which, indeed, is a win for P2.

The original question involved circles of dots, not rows. But from a circle of n dots there is only one legal move, which is to a row of n-3 dots. From a circle of 20 dots, the only legal move is to [ο × 17] = ∗2, which should be a win for P1, the player about to move in it (that is, the second player in the original game). P1 should win by changing ∗2 to ∗0, so should look for a move from [ο × 17] to ∗0. This is the obvious solution Richard Penn discovered: move to [οοοοοοο] # [οοοοοοο]. So the circle of 20 dots is an easy win for the second player.

But for the circle of 19 dots the answer is the same, a win for the second player. The first player must move to [ο × 16] = ∗2, and then the second player should win by moving to a 0 position. [ο × 16] must have such a move, because if it didn't, the MEX rule would imply that its Nim-value was 0 instead of 2. So what's the second player's zero move here? There are actually two options. The second player can win by playing to [ο × 14], or by splitting the row into [οοοοοο] # [οοοοοοο].
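A computer can also find these zero moves directly. This sketch repeats the definitions from the row calculation (hypothetical names again). It lists every move from a row of n dots that leaves Nim-value 0, as a pair of (left row, right row) lengths, where 0 means that side is empty.

```haskell
import Data.Bits (xor)

-- Rows left after crossing out dot i (1..n) and its neighbours.
moves :: Int -> [(Int, Int)]
moves n = [(max 0 (i - 2), max 0 (n - i - 1)) | i <- [1 .. n]]

-- Minimum excludant of a finite list.
mex :: [Int] -> Int
mex xs = head [m | m <- [0 ..], m `notElem` xs]

-- Lazily memoized Nim-values of rows of 0, 1, 2, ... dots.
nimValues :: [Int]
nimValues = map value [0 ..]
  where
    value n = mex [(nimValues !! a) `xor` (nimValues !! b) | (a, b) <- moves n]

nimValue :: Int -> Int
nimValue n = nimValues !! n

-- The only move from a circle of n >= 3 dots leaves a row of n - 3 dots.
circleToRow :: Int -> Int
circleToRow n = n - 3

-- Moves from a row of n dots that leave a position of Nim-value 0.
zeroMoves :: Int -> [(Int, Int)]
zeroMoves n = [(a, b) | (a, b) <- moves n, nimValue a `xor` nimValue b == 0]
```

For a row of 16 dots, `zeroMoves 16` reports (0,14) and (14,0), the two mirror-image ways of leaving [ο × 14]. It also reports (6,7) and (7,6), the two ways of splitting into rows of 6 and 7 dots.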

7. Complete strategy for 19-dot circle

Just for completeness, let's follow one of these purportedly winning moves in detail. I claimed that the second player could win by moving to [οοοοοο] # [οοοοοοο]. But what next?

First recall that any isolated row of four dots, [οοοο], can be disregarded, because any first-player move in such a row can be answered by a second-player move that crosses out the rest of the row. And any pair of isolated rows of one or two dots, [ο] or [οο], can be similarly disregarded, because any move that crosses out one can be answered by a move that crosses out the other. So in what follows, positions like [οο] # [ο] # [οοοο] will be assumed to have been won by the second player, and we will say that the second player "has an easy win" if he has a move to such a position.

- The first player has three possible moves in the left [οοοοοο] component, as follows:

  - If the first player moves to [οοοο] # [οοοοοοο], the second player has an easy win by moving to [οοοο] # [οοοο].

  - If the first player moves to [οοο] # [οοοοοοο] = ∗2 # ∗1, the second player should reduce the left component to ∗1, by moving to [ο] # [οοοοοοο]. Then no matter what the first player does, the second player has an easy win.

  - If the first player moves to [ο] # [οο] # [οοοοοοο] = ∗1 # ∗1 # ∗1, the second player can disregard the [ο] # [οο] component. The second player instead plays to [ο] # [οο] # [οοοο] and wins.


- The first player has four moves in the right [οοοοοοο] component, as follows:

  - If the first player moves to [οοοοοο] # [οοοοο] = ∗1 # ∗3, the second player should move from ∗3 to ∗1. There must be a move in [οοοοο] to a position with Nim-value 1. (If there weren't, [οοοοο] would have Nim-value 1 instead of 3, by the MEX rule.) Indeed, the second player can move to [οοοοοο] # [οο]. Now whatever the first player does the second player has an easy win, either to [οοοο] or to X # X for some row X.

  - If the first player moves to [οοοοοο] # [οοοο] = ∗1 # ∗0, the second player should move from ∗1 to ∗0. There must be a move in [οοοοοο] to a position with Nim-value 0, and indeed there is: the second player moves to [οοοο] # [οοοο] and wins.

  - If the first player moves to [οοοοοο] # [ο] # [οοο] = ∗1 # ∗1 # ∗2, the second player can disregard the ∗1 # ∗1 component and should move in the ∗2 component, to ∗0, which he does by eliminating it entirely, leaving the first player with [οοοοοο] # [ο]. After any move by the first player the second player has an easy win.

  - If the first player moves to [οοοοοο] # [οο] # [οο] = ∗1 # ∗1 # ∗1, the second player has a number of good choices. The simplest thing to do is to disregard the [οο] # [οο] component and move in the [οοοοοο] to some position with Nim-value 0. Moving to [οοοο] # [οο] # [οο] suffices.


But the important point here is not the strategy itself, which is hard to remember, and which could have been found by computer search. The important thing to notice is that computing the table of Nim-values for each row of n dots is easy, and once you have done this, the rest of the strategy almost takes care of itself. Do you need to find a good move from [οοοοοοο] # [οοοοοοοοο] # [οοοοοοοοοο]? There's no need to worry, because the table says that this can be viewed as ∗1 # ∗3 # ∗3, and so a good move is to reduce the ∗1 component, the [οοοοοοο], to ∗0, say by changing it to [οοοο] or to [οο] # [οο]. Whatever your opponent does next, calculating your reply will be similarly easy.
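The same table-driven approach works for any collection of rows: combine the rows' Nim-values with ⊕, then look for a move in one row that brings the total to 0. Here is a sketch, with hypothetical names, that lists all such moves as (index of the row played in, (left row, right row)).

```haskell
import Data.Bits (xor)

-- Rows left after crossing out dot i (1..n) and its neighbours.
moves :: Int -> [(Int, Int)]
moves n = [(max 0 (i - 2), max 0 (n - i - 1)) | i <- [1 .. n]]

-- Minimum excludant of a finite list.
mex :: [Int] -> Int
mex xs = head [m | m <- [0 ..], m `notElem` xs]

-- Lazily memoized Nim-values of rows of 0, 1, 2, ... dots.
nimValues :: [Int]
nimValues = map value [0 ..]
  where
    value n = mex [(nimValues !! a) `xor` (nimValues !! b) | (a, b) <- moves n]

nimValue :: Int -> Int
nimValue n = nimValues !! n

-- Nim-value of a collection of disjoint rows: Nim-sum of the row values.
totalValue :: [Int] -> Int
totalValue = foldr (xor . nimValue) 0

-- All moves that leave the whole collection with Nim-value 0. Row r must
-- go to a position whose value is nimValue r xor the current total.
winningMoves :: [Int] -> [(Int, (Int, Int))]
winningMoves rows =
  [ (j, (a, b))
  | (j, r) <- zip [0 ..] rows
  , let target = nimValue r `xor` totalValue rows
  , (a, b) <- moves r
  , nimValue a `xor` nimValue b == target
  ]
```

For rows of 7, 9, and 10 dots the total is 1 ⊕ 3 ⊕ 3 = 1. The moves reported include changing the 7-dot row to [οοοο] or to [οο] # [οο], as suggested above. They also include moves in the other rows, such as splitting the 9-dot row into [ο] # [οοοοο]. For a zero position such as rows of 6 and 7 dots, the list is empty.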

[Other articles in category /math] permanent link

Thu, 18 Sep 2008

data Mu f = In (f (Mu f))

Last week I wrote about one of two mindboggling pieces of code that appear in the paper Functional Programming with Overloading and Higher-Order Polymorphism, by Mark P. Jones. Today I'll write about the other one. It looks like this:

data Mu f = In (f (Mu f)) -- (???)

I bet a bunch of people reading this on Planet Haskell are nodding and saying "Oh, that!"

When I first saw this I couldn't figure out what it was saying at all. It was totally opaque. I still have trouble recognizing in Haskell what tokens are types, what tokens are type constructors, and what tokens are value constructors. Code like (???) is unusually confusing in this regard.

Normally, one sees something like this instead:

data Maybe f = Nothing | Just f

Here f is a type variable; that is, a variable that ranges over types. Maybe is a type constructor, which is like a function that you can apply to a type to get another type. The most familiar example of a type constructor is List:

data List e = Nil | Cons e (List e)

Given any type f, you can apply the type constructor List to f to get a new type List f. For example, you can apply List to Int to get the type List Int. (The Haskell built-in list type constructor goes by the funny name of [], but works the same way. The type [Int] is a synonym for ([] Int).)

Actually, type names are type constructors also; they're argumentless type constructors. So we have type constructors like Int, which take no arguments, and type constructors like List, which take one argument. Haskell also has type constructors that take more than one argument. For example, Haskell has a standard type constructor called Either for making union types:

data Either a b = Left a | Right b;

Then the type Either Int String contains values like Left 37 and Right "Cotton Mather".

To keep track of how many arguments a type constructor has, one can consider the, ahem, type, of the type constructor. But to avoid the obvious looming terminological confusion, the experts use the word "kind" to refer to the type of a type constructor. The kind of List is * → *, which means that it takes a type and gives you back a type. The kind of Either is * → * → *, which means that it takes two types and gives you back a type. Well, actually, it is curried, just like regular functions are, so that Either Int is itself a type constructor of kind * → * which takes a type a and returns a type which could be either an Int or an a. The nullary type constructor Int has kind *.

Continuing the "Maybe" example above, f is a type, or a constructor of kind *, if you prefer. Just is a value constructor, of type f → Maybe f. It takes a value of type f and produces a value of type Maybe f.

Now here is a crucial point. In declarations of type constructors, such as these:

data Either a b = ...

data List e = ...

data Maybe f = ...

the type variables a, b, e, and f actually range over type constructors, not over types. Haskell can infer the kinds of the type constructors Either, List, and Maybe, and also the kinds of the type variables, from the definitions on the right of the = signs. In this case, it concludes that all four variables must have kind *, and so really do represent types, and not higher-order type constructors. So you can't ask for Either Int List because List is known to have kind * → *, and Haskell needs a type constructor of kind * to serve as an argument to Either.

But with a different definition, Haskell might infer that a type variable has a higher-order kind. Here is a contrived example, which might be good for something, perhaps. I'm not sure:

data TyCon f = ValCon (f Int)

This defines a type constructor TyCon with kind (* → *) → *, which can be applied to any type constructor f that has kind * → *, to yield a type. What new type? The new type TyCon f is isomorphic to the type f Int. For example, TyCon List is basically the same as List Int. The value Just 37 has type Maybe Int, and the value ValCon (Just 37) has type TyCon Maybe.

Similarly, the value [1, 2, 3] has type [Int], which, you remember, is a synonym for [] Int. And the value ValCon [1, 2, 3] has type TyCon [].
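Here is the contrived TyCon example as a small compilable Haskell module. The unwrapping function `unTyCon` and the two example values are additions for illustration, not from Jones's paper. In GHCi, `:kind TyCon` should report the kind (* → *) → *, written in ASCII; newer GHC versions may print `Type` in place of `*`.

```haskell
-- The contrived example from the text: TyCon has kind (* -> *) -> *.
data TyCon f = ValCon (f Int)

-- Unwrap a TyCon f to get back the underlying f Int.
unTyCon :: TyCon f -> f Int
unTyCon (ValCon x) = x

-- Apply TyCon to Maybe: the wrapped value is a Maybe Int.
maybeExample :: TyCon Maybe
maybeExample = ValCon (Just 37)

-- Apply TyCon to the built-in list constructor []: the wrapped value is an [Int].
listExample :: TyCon []
listExample = ValCon [1, 2, 3]
```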

Now that the jargon is laid out, let's look at (???) again:

data Mu f = In (f (Mu f)) -- (???)

When I was first trying to get my head around this, I had trouble seeing what the values were going to be. It looks at first like it has no bottom. The token f here, like in the TyCon example, is a variable that ranges over type constructors with kind * → *, so could be List or Maybe or [], something that takes a type and yields a new type. Mu itself has kind (* → *) → *, taking something like f and yielding a type. But what's an actual value? You need to apply the value constructor In to a value of type f (Mu f), and it's not immediately clear where to get such a thing.

I asked on #haskell, and Cale Gibbard explained it very clearly. To do anything useful you first have to fix f. Let's take f = Maybe. In that particular case, (???) becomes:

data Mu Maybe = In (Maybe (Mu Maybe))

So the In value constructor will take a value of type Maybe (Mu Maybe) and return a value of type Mu Maybe. Where do we get a value of type Maybe (Mu Maybe)? Oh, no problem: the value Nothing is polymorphic, and has type Maybe a for all a, so in particular it has type Maybe (Mu Maybe). Whatever Maybe (Mu Maybe) is, it is a Maybe-type, so it has a Nothing value. So we do have something to get started with.

Since Nothing is a Maybe (Mu Maybe) value, we can apply the In constructor to it, yielding the value In Nothing, which has type Mu Maybe. Then applying Just, of type a → Maybe a, to In Nothing, of type Mu Maybe, produces Just (In Nothing), of type Maybe (Mu Maybe) again. We can repeat the process as much as we want and produce as many values of type Mu Maybe as we want; they look like these:

In Nothing

In (Just (In Nothing))

In (Just (In (Just (In Nothing))))

In (Just (In (Just (In (Just (In Nothing))))))

...

And that's it, that's the type Mu Maybe, the set of those values. It will look a little simpler if we omit the In markers, which don't really add much value. We can just agree to omit them, or we can get rid of them in the code by defining some syntactic sugar:

nothing = In Nothing

just = In . Just

Then the values of Mu Maybe look like this:

nothing

just nothing

just (just nothing)

just (just (just nothing))

...

It becomes evident that what the Mu operator does is to close the type under repeated application. This is analogous to the way the fixpoint combinator works on values. Consider the usual definition of the fixpoint combinator:

Y f = f (Y f)

Here f is a function of type a → a. Y f is a fixed point of f. That is, it is a value x of type a such that f x = x. (Put x = Y f in the definition to see this.)

The fixed point of a function f can be computed by considering the limit of the following sequence of values:

⊥

f(⊥)

f(f(⊥))

f(f(f(⊥)))

...

This actually finds the least fixed point of f, for a certain definition of "least". For some functions f, like x → x + 1, this finds the uninteresting fixed point ⊥, but for others, like x → λ n. if n = 0 then 1 else n * x(n - 1), it's something better.
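In Haskell, the Y of the text is available as `fix` from the standard `Data.Function` module. Here is a minimal sketch using it on the factorial functional from the paragraph above. `factStep` and `myFix` are hypothetical names; `myFix` is the text's definition of Y, written out directly.

```haskell
import Data.Function (fix)

-- The functional from the text: given an approximation x to factorial,
-- produce a better one. Its least fixed point is factorial itself.
factStep :: (Integer -> Integer) -> Integer -> Integer
factStep x n = if n == 0 then 1 else n * x (n - 1)

-- The least fixed point, via the library's fix.
factorial :: Integer -> Integer
factorial = fix factStep

-- The text's definition Y f = f (Y f), written out by hand.
myFix :: (a -> a) -> a
myFix f = f (myFix f)
```

By contrast, `fix (+ 1)` never produces a number: its least fixed point is ⊥, as the text says.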

Mu is analogous to Y. Instead of operating on a function f from values to values, and producing a single fixed-point value, it operates on a type constructor f from types to types, and produces a fixed-point type. The resulting type T is the least fixed point of the type constructor f, the smallest set of values such that f T = T.

Consider the example of f = Maybe again. We want to find a type T such that T = Maybe T. Consider the following sequence:

{ ⊥ }

Maybe { ⊥ }

Maybe(Maybe { ⊥ })

Maybe(Maybe(Maybe { ⊥ }))

...

The first item is the set that contains nothing but the bottom value, which we might call t0. But t0 is not a fixed point of Maybe, because Maybe { ⊥ } also contains Nothing. So Maybe { ⊥ } is a different type from t0, which we can call t1 = { Nothing, ⊥ }.

The type t1 is not a fixed point of Maybe either, because Maybe t1 evidently contains both Nothing and Just Nothing. Repeating this process, we find that the limit of the sequence is the type Mu Maybe = { ⊥, Nothing, Just Nothing, Just (Just Nothing), Just (Just (Just Nothing)), ... }. This type is fixed under Maybe.

It might be worth pointing out that this is not the only such fixed point, but it is the least fixed point. One can easily find larger types that are fixed under Maybe. For example, postulate a special value Q which has the property that Q = Just Q. Then Mu Maybe ∪ { Q } is also a fixed point of Maybe. But it's easy to see (and to show, by induction) that any such fixed point must be a superset of Mu Maybe. Further consideration of this point might take me off to co-induction, paraconsistent logic, Peter Aczel's nonstandard set theory, and I'd never get back again. So let's leave this for now.

So that's what Mu really is: a fixed-point operator for type constructors. And having realized this, one can go back and look at the definition and see that oh, that's precisely what the definition says, how obvious:

Y f = f (Y f) -- ordinary fixed-point operator

data Mu f = In (f (Mu f)) -- (???)

Given f, a function from values to values, Y(f) calculates a value x such that x = f(x). Given f, a function from types to types, Mu(f) calculates a type T such that f(T) = T. That's why the definitions are identical. (Except for that annoying In constructor, which really oughtn't to be there.)

You can use this technique to construct various recursive datatypes. For example, Mu Maybe turns out to be equivalent to the following definition of the natural numbers:

data Number = Zero | Succ Number;

Notice the structural similarity with the definition of Maybe:

data Maybe a = Nothing | Just a;
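You can check the claimed equivalence between Mu Maybe and Number by writing conversions in both directions. This sketch reuses the text's Mu and the nothing/just sugar. The names `toNumber`, `fromNumber`, and `depth` are illustrative.

```haskell
-- The type-level fixed point from the text.
data Mu f = In (f (Mu f))

-- The sugar from the text.
nothing :: Mu Maybe
nothing = In Nothing

just :: Mu Maybe -> Mu Maybe
just = In . Just

-- The ordinary recursive definition of the natural numbers.
data Number = Zero | Succ Number deriving (Show, Eq)

-- Peel off one In layer at a time: Nothing is zero, Just is successor.
toNumber :: Mu Maybe -> Number
toNumber (In Nothing)  = Zero
toNumber (In (Just m)) = Succ (toNumber m)

-- And back again.
fromNumber :: Number -> Mu Maybe
fromNumber Zero     = nothing
fromNumber (Succ n) = just (fromNumber n)

-- Read a Mu Maybe value as an Int by counting its Just layers.
depth :: Mu Maybe -> Int
depth (In Nothing)  = 0
depth (In (Just m)) = 1 + depth m
```

The two conversions are inverse to each other on finite values, which is the sense in which Mu Maybe and Number are "equivalent". Partial and infinite values are a separate matter.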

One can similarly define lists:

data Mu f = In (f (Mu f))

data ListX a b = Nil | Cons a b deriving Show

type List a = Mu (ListX a)

-- syntactic sugar

nil :: List a

nil = In Nil

cons :: a -> List a -> List a

cons x y = In (Cons x y)

-- for example

ls = cons 3 (cons 4 (cons 5 nil)) -- :: List Integer

lt = (cons 'p' (cons 'y' (cons 'x' nil))) -- :: List Char
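To see that these Mu-based lists really behave like lists, you can convert them to and from ordinary Haskell lists. The sketch below is self-contained: it repeats the definitions above, with an ASCII -> in the signature. `toHaskellList` and `fromHaskellList` are hypothetical helpers.

```haskell
data Mu f = In (f (Mu f))

data ListX a b = Nil | Cons a b deriving Show

type List a = Mu (ListX a)

nil :: List a
nil = In Nil

cons :: a -> List a -> List a
cons x y = In (Cons x y)

-- Unroll a Mu-based list into an ordinary list, one In layer at a time.
toHaskellList :: List a -> [a]
toHaskellList (In Nil)        = []
toHaskellList (In (Cons x y)) = x : toHaskellList y

-- Build a Mu-based list from an ordinary one using the sugar above.
fromHaskellList :: [a] -> List a
fromHaskellList = foldr cons nil
```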

Or you could similarly do trees, or whatever. Why one might want to do this is a totally separate article, which I am not going to write today.

Here's the point of today's article: I find it amazing that Haskell's type system is powerful enough to allow one to define a fixed-point operator for functions over types.

We've come a long way since FORTRAN, that's for sure.

A couple of final, tangential notes: A Google search for "Mu f = In (f (Mu f))" turns up relatively few hits, but each hit is extremely interesting. If you're trying to preload your laptop with good stuff to read on a plane ride, downloading these papers might be a good move.

The Peter Aczel thing seems to be less well-known than it should be. It is a version of set theory that allows coinductive definitions of sets instead of inductive definitions. In particular, it allows one to have a set S = { S }, which standard set theory forbids. If you are interested in co-induction you should take a look at this. You can find a clear explanation of it in Barwise and Etchemendy's book The Liar (which I have read) and possibly also in Aczel's book Non-Well-Founded Sets (which I haven't read).

[Other articles in category /prog] permanent link

Thu, 11 Sep 2008

Return return

Among the things I read during the past two months was the paper Functional Programming with Overloading and Higher-Order Polymorphism, by Mark P. Jones. I don't remember why I read this, but it sure was interesting. It is an introduction to the new, cool features of Haskell's type system, with many examples. It was written in 1995 when the features were new. They're no longer new, but they are still cool.

There were two different pieces of code in this paper that wowed me. When I started this article, I was planning to write about #2. I decided that I would throw in a couple of paragraphs about #1 first, just to get it out of the way. This article is that couple of paragraphs.

[ Addendum 20080917: Here's the article about #2. ]

Suppose you have a type that represents terms over some type v of variable names. The v type is probably strings but could possibly be something else:

data Term v = TVar v                 -- Type variable

            | TInt                   -- Integer type

            | TString                -- String type

            | Fun (Term v) (Term v)  -- Function type

There's a natural way to make the Term type constructor into an instance of Monad:

instance Monad Term where

return v = TVar v

TVar v >>= f = f v

TInt >>= f = TInt

TString >>= f = TString

Fun d r >>= f = Fun (d >>= f) (r >>= f)
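The instance above is 1995-era Haskell. Since GHC 7.10, every Monad must also be an Applicative and a Functor, so a version that compiles today needs two more instances. This is a minimal sketch; defining them with liftM and ap is one conventional choice, not something from Jones's paper. With pure = TVar, the default return does exactly what the return above does.

```haskell
import Control.Monad (ap, liftM)

data Term v = TVar v                 -- type variable
            | TInt                   -- integer type
            | TString                -- string type
            | Fun (Term v) (Term v)  -- function type
            deriving (Show, Eq)

-- Required superclass instances, derived from the Monad instance below.
instance Functor Term where
  fmap = liftM

instance Applicative Term where
  pure  = TVar  -- lift a variable name to the term for that variable
  (<*>) = ap

-- Bind maps its argument function over the variable names in the term.
instance Monad Term where
  TVar v  >>= f = f v
  TInt    >>= _ = TInt
  TString >>= _ = TString
  Fun d r >>= f = Fun (d >>= f) (r >>= f)
```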

That is, the return operation just lifts a variable name to the term that consists of just that variable, and the bind operation just maps its argument function over the variable names in the term, leaving everything else alone.

Jones wants to write a function, unify, which performs a unification algorithm over these terms. Unification answers the question of whether, given two terms, there is a third term that is an instance of both. For example, consider the two terms a → Int and String → b, which are represented by Fun (TVar "a") TInt and Fun TString (TVar "b"), respectively. These terms can be unified, since the term String → Int is an instance of both; one can assign a = TString and b = TInt to turn both terms into Fun TString TInt.

The result of the unification algorithm should be a set of these bindings, in this example saying that the input terms can be unified by replacing the variable "a" with the term TString, and the variable "b" with the term TInt. This set of bindings can be represented by a function that takes a variable name and returns the term to which it should be bound. The function will have type v → Term v. For the example above, the result is a function which takes "a" and returns TString, and which takes "b" and returns TInt. What should this function do with variable names other than "a" and "b"? It should leave them alone: any other variable name x, such as "c", is "replaced" by the term TVar x.
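Because bind maps a function over the variable names in a term, applying a substitution of type v → Term v to a term t is just t >>= subst. Here is a sketch for the substitution in this example; exampleSubst is a hypothetical name, and the Term definitions are repeated so the block stands alone.

```haskell
import Control.Monad (ap, liftM)

data Term v = TVar v | TInt | TString | Fun (Term v) (Term v)
  deriving (Show, Eq)

instance Functor Term where
  fmap = liftM

instance Applicative Term where
  pure  = TVar
  (<*>) = ap

instance Monad Term where
  TVar v  >>= f = f v
  TInt    >>= _ = TInt
  TString >>= _ = TString
  Fun d r >>= f = Fun (d >>= f) (r >>= f)

-- Hypothetical example substitution: "a" becomes TString, "b" becomes
-- TInt, and every other variable x is "replaced" by TVar x.
exampleSubst :: String -> Term String
exampleSubst "a" = TString
exampleSubst "b" = TInt
exampleSubst x   = TVar x
```

Applying it to either input term, as in Fun (TVar "a") TInt >>= exampleSubst, yields Fun TString TInt.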

The unify function will take two terms and return one of these substitutions, where the substitution is a function of type v → Term v. So the unify function has type:

unify :: Term v -> Term v -> (v -> Term v)

Oh, but not quite, because unification can also fail. For example, if you try to unify the terms a → b and Int, represented by Fun (TVar "a") (TVar "b") and TInt respectively, the unification should fail, because there is no term that is an instance of both of those; one represents a function and the other represents an integer. So unify does not actually return a substitution of type v → Term v. Rather, it returns a monad value, which might contain a substitution, if the unification is successful, and otherwise contains an error value. To handle the example above, the unify function will contain a case like this:

unify TInt (Fun _ _) = fail ("Cannot unify" ....)

It will fail because it is not possible to unify functions and integers.

If unification is successful, then instead of using fail, the unify function will construct a substitution and then return it with return. Let's consider the result of unifying TInt with TInt. This unification succeeds, and produces a trivial substitution with no bindings. Or more precisely, every variable x should be "replaced" by the term TVar x. So in this case the substitution returned by unify should be the trivial one, a function which takes x and returns TVar x for all variable names x.

But we already have such a function. This is just what we decided that Term's return function should do, when we were making Term into a monad. So in this case the code for unify is:

unify TInt TInt = return return

Yep, in this case the unify function returns the return function.

Wheee!

At this point in the paper I was skimming, but when I saw return return, I boggled. I went back and read it more carefully after that, you betcha.
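Here is a small unifier in the same style, to show where return return fits. It is a sketch, not Jones's code: Subst, andThen, vars, and varBind are my own hypothetical names, and it uses Either String as the failure monad, writing Left where the article writes fail, because Either String has no MonadFail instance in current base. In each return return, the outer return is Either's and the inner one is Term's.

```haskell
import Control.Monad (ap, liftM)

data Term v = TVar v | TInt | TString | Fun (Term v) (Term v)
  deriving (Show, Eq)

instance Functor Term where
  fmap = liftM

instance Applicative Term where
  pure  = TVar
  (<*>) = ap

instance Monad Term where
  TVar v  >>= f = f v
  TInt    >>= _ = TInt
  TString >>= _ = TString
  Fun d r >>= f = Fun (d >>= f) (r >>= f)

-- A substitution maps each variable name to a term.
type Subst v = v -> Term v

-- Apply s1 first, then s2.
andThen :: Subst v -> Subst v -> Subst v
andThen s1 s2 = \v -> s1 v >>= s2

-- The variables occurring in a term, for the occurs check.
vars :: Term v -> [v]
vars (TVar v)  = [v]
vars (Fun d r) = vars d ++ vars r
vars _         = []

-- Bind variable v to term t.
varBind :: Eq v => v -> Term v -> Either String (Subst v)
varBind v t
  | t == TVar v     = return return  -- nothing to bind: trivial substitution
  | v `elem` vars t = Left "occurs check failed"
  | otherwise       = return (\x -> if x == v then t else TVar x)

unify :: Eq v => Term v -> Term v -> Either String (Subst v)
unify (TVar v) t      = varBind v t
unify t (TVar v)      = varBind v t
unify TInt TInt       = return return  -- the case discussed above
unify TString TString = return return
unify (Fun d1 r1) (Fun d2 r2) = do
  s1 <- unify d1 d2
  -- apply s1 to the ranges before unifying them
  s2 <- unify (r1 >>= s1) (r2 >>= s1)
  return (s1 `andThen` s2)
unify _ _ = Left "Cannot unify"
```

Unifying Fun (TVar "a") TInt with Fun TString (TVar "b") yields a substitution that turns both terms into Fun TString TInt, while unifying Fun (TVar "a") (TVar "b") with TInt yields a Left.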

That's my couple of paragraphs. I was planning to get to this point and then say "But that's not what I was planning to discuss. What I really wanted to talk about was...". But I think I'll break with my usual practice and leave the other thing for tomorrow.

Happy Diada Nacional de Catalunya, everyone!

[ Addendum 20080917: Here's the article about the other thing. ]

[Other articles in category /prog] permanent link

Tue, 09 Sep 2008

Factorials are not quite as square as I thought

(This is a followup to yesterday's article.)

Let s(n) be the smallest perfect square larger than n. Then to have $n! = a^2 - 1$ we must have $a^2 = s(n!)$, and in particular we must have $s(n!) - n!$ square.

This actually occurs for n in { 4, 5, 6, 7, 8, 9, 10, 11 }, and since 11 was as far as I got on the lunch line yesterday, I had an exaggerated notion of how common it is. Had I worked out another example, I would have realized that after n=11 things start going wrong. The value of s(12!) is $21887^2$, but $21887^2 - 12! = 39169$, and 39169 is not a square. (In fact, the n=11 solution is quite remarkable, as I will discuss at the end of this note.)

So while there are (of course) solutions to $12! = a^2 - b^2$, and indeed where b is small compared to a, as I said, the smallest such b takes a big jump between 11 and 12. For 4 ≤ n ≤ 11, the minimal b takes the values 1, 1, 3, 1, 9, 27, 15, 18. But for n = 12, the solution with the smallest b has b = 288, namely $12! = 21888^2 - 288^2$.
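These numbers are easy to reproduce. The following is a sketch in Haskell, not the Mathematica calculation by Mitch Harris; isqrt, isSquare, factorial, s, and minimalB are hypothetical helper names. minimalB tries a = ⌈√n!⌉, then a+1, and so on, and returns b for the first a where a² − n! is a perfect square; since a² − b² is fixed at n!, the smallest such a gives the smallest b.

```haskell
-- Floor of the square root, by Newton's method on Integers
-- (exact for arbitrarily large values, unlike going through Double).
isqrt :: Integer -> Integer
isqrt 0 = 0
isqrt n = go n
  where
    go x = let y = (x + n `div` x) `div` 2
           in if y >= x then x else go y

isSquare :: Integer -> Bool
isSquare m = m >= 0 && isqrt m ^ 2 == m

factorial :: Integer -> Integer
factorial n = product [1 .. n]

-- s(n): the smallest perfect square larger than n.
s :: Integer -> Integer
s n = (isqrt n + 1) ^ 2

-- Smallest b >= 0 with n! = a^2 - b^2. Terminates for n >= 4, since then
-- 4 divides n!, and 4k = (k+1)^2 - (k-1)^2 always gives some solution.
minimalB :: Integer -> Integer
minimalB n = head [ isqrt d | a <- [a0 ..], let d = a * a - f, isSquare d ]
  where
    f  = factorial n
    r  = isqrt f
    a0 = if r * r == f then r else r + 1
```

Here map minimalB [4 .. 11] gives [1, 1, 3, 1, 9, 27, 15, 18], and minimalB 12 gives 288.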

Calculations with Mathematica by Mitch Harris show that one has $n! = s(n!) - b^2$ only for n in {1, 4, 5, 6, 7, 8, 9, 10, 11, 13, 14, 15, 16}, and then not for any other n under 1,000. My estimate of the likelihood of another solution to $n! = a^2 - 1$, which was already not very high, has just dropped precipitously.

My thanks to M. Harris, and also to Stephen Dranger, who also wrote in with the results of calculations.

Having gotten this far, I then asked OEIS about the sequence 1, 1, 3, 1, 9, 27, 15, 18, and (of course) was delivered a summary of the current state of the art in $n! = a^2 - 1$. Here's my summary of the summary.

The question is known as "Brocard's problem", and was posed by Brocard in 1876. No solutions are known with n > 7, and it is known that if there is a solution, it must have $n > 10^9$. According to the MathWorld article on Brocard's problem, it is believed to be "virtually certain" that there are no other solutions.
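For small n a brute-force search turns up the three known solutions immediately. A sketch, with brocard and isqrt as hypothetical names; it checks only n ≤ 30, a tiny range compared with the published searches.

```haskell
-- Floor of the square root, by Newton's method on Integers.
isqrt :: Integer -> Integer
isqrt 0 = 0
isqrt n = go n
  where
    go x = let y = (x + n `div` x) `div` 2
           in if y >= x then x else go y

-- All n <= limit for which n! + 1 is a perfect square,
-- returned as pairs (n, a) with n! = a^2 - 1.
brocard :: Integer -> [(Integer, Integer)]
brocard limit =
  [ (n, a) | n <- [1 .. limit]
           , let f = product [1 .. n] + 1
           , let a = isqrt f
           , a * a == f ]
```

Here brocard 30 yields [(4, 5), (5, 11), (7, 71)].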

The calculations for $n \le 10^9$ are described in this unpublished paper of Berndt and Galway, which I found linked from the MathWorld article. The authors also investigated solutions of $n! = a^2 - b^2$ for various fixed b between 2 and 50, and found no solutions with $12 \le n \le 10^5$ for any of them. The most interesting was the $11! = 6318^2 - 18^2$ I mentioned already.

[ The original version of this article contained some confusion about whether s(n) was the largest square less than n, or the largest number whose square was less than n. Thanks to Roie Marianer for pointing out the error. ]

[Other articles in category /math] permanent link

Factorials are almost, but not quite, square

This weekend I happened to notice that $7! = 71^2 - 1$. Is this a strange coincidence? Well, not exactly, because it's not hard to see that

$$n! = a^{2} - b^{2}\qquad (*)$$

will always have solutions where b is small compared to a. For example, we have $11! = 6318^2 - 18^2$.

But to get b=1 might require a lot of luck, perhaps more luck than there is. (Jeremy Kahn once argued that $|2^x - 3^y| = 1$ could have no solutions other than the obvious ones, essentially because it would require much more fabulous luck than was available. I sneered at this argument at the time, but I have to admit that there is something to it.)

Anyway, back to the subject at hand. Is there an example of $n! = a^2 - 1$ with n > 7? I haven't checked yet.

In related matters, it's rather easy to show that there are no nontrivial examples with b=0.
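One standard way to see this, which may or may not be the argument intended here, uses Bertrand's postulate: for $n \ge 2$ there is a prime $p$ with $n/2 < p \le n$. Since $2p > n$, the prime $p$ appears exactly once among the factors $1, 2, \ldots, n$, so

$$p \mid n! \qquad\text{but}\qquad p^{2} \nmid n!,$$

while a perfect square contains every prime to an even power. So $n! = a^{2}$ has no solutions with $n \ge 2$; the only ones are the trivial $0! = 1! = 1^{2}$.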

It would be pretty cool to show that equation (*) implied n = O(f(b)) for some function f, but I would not be surprised to find out that there is no such bound.

This kept me amused for twenty minutes while I was in line for lunch, anyway. Incidentally, on the lunch line I needed to estimate √11. I described in an earlier article how to do this. Once again it was a good trick, the sort you should keep handy if you are the kind of person who needs to know √11 while standing in line on 33rd Street. Here's the short summary:

$$\sqrt{11} = \sqrt{\frac{99}{9}} = \sqrt{\frac{100-1}{9}} = \sqrt{\frac{100}{9}\left(1 - \frac{1}{100}\right)} = \frac{10}{3}\sqrt{1 - \frac{1}{100}} \approx \frac{10}{3}\left(1 - \frac{1}{200}\right) = \frac{10}{3}\cdot\frac{199}{200} = \frac{199}{60}$$
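The estimate can be checked in exact rational arithmetic. A sketch; estimate and squaredError are hypothetical names, and the only approximation used is √(1 − ε) ≈ 1 − ε/2.

```haskell
import Data.Ratio ((%))

-- The lunch-line estimate: sqrt 11 = (10/3) * sqrt (1 - 1/100),
-- approximated by (10/3) * (1 - 1/200).
estimate :: Rational
estimate = (10 % 3) * (1 - 1 % 200)

-- How far the square of the estimate is from 11.
squaredError :: Rational
squaredError = estimate ^ 2 - 11
```

Here estimate is exactly 199/60, and squaredError is exactly 1/3600, so the estimate overshoots √11 by only about 0.00004.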

[ Addendum 20080909: There is a followup article. ]

[Other articles in category /math] permanent link

Sat, 12 Jul 2008

runN revisited

Exactly one year ago I discussed runN, a utility that I invented for running the same command many times, perhaps in parallel. The program continues to be useful to me, and now Aaron Crane has reworked it and significantly improved the interface. I found his discussion enlightening. He put his finger on a lot of problems that had been bothering me that I had not quite been able to pin down.

Check it out. Thank you, M. Crane.

[Other articles in category /prog] permanent link

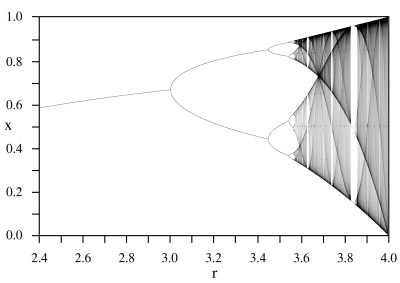

Period three and chaos

In the copious spare time I have around my other major project, I am tinkering with various stuff related to Möbius functions. Like all the best tinkering projects, the Möbius functions are connected to other things, and when you follow the connections you can end up in many faraway places.

A Möbius function is simply a function of the form f : x → (ax + b) / (cx + d) for some constants a, b, c, and d. Möbius functions are of major importance in complex analysis, where they correspond to certain transformations of the Riemann sphere, but I'm mostly looking at the behavior of Möbius functions on the reals, and so restricting a, b, c, and d to be real.

One nice thing about the Möbius functions is that you can identify the Möbius function f : x → (ax + b) / (cx + d) with the matrix $\begin{pmatrix} a & b \\ c & d \end{pmatrix}$, because then composition of Möbius functions is the same as multiplication of the corresponding matrices, and so the inverse of a Möbius function with matrix M is just the function that corresponds to $M^{-1}$. Determining whether a set of Möbius functions is closed under composition is the same as determining whether the corresponding matrices form a semigroup; you can figure out what happens when you iterate a Möbius function by looking at the eigenvalues of M, and so on.
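The correspondence is easy to try out numerically. This sketch stores a Möbius function as its 2×2 coefficient matrix; Mat, apply, mul, and inverse are hypothetical names. It uses the adjugate instead of the true inverse: the two differ only by the factor ad − bc, and multiplying all four coefficients by a nonzero constant does not change (ax + b)/(cx + d).

```haskell
-- A real Möbius function x -> (ax + b) / (cx + d), stored as the
-- matrix [[a, b], [c, d]]. Rationals keep the arithmetic exact.
data Mat = Mat Rational Rational Rational Rational
  deriving (Show, Eq)

-- The function a matrix represents. At x = -d/c the denominator is
-- zero, and Rational division raises an exception; not handled here.
apply :: Mat -> Rational -> Rational
apply (Mat a b c d) x = (a * x + b) / (c * x + d)

-- Ordinary 2x2 matrix multiplication.
mul :: Mat -> Mat -> Mat
mul (Mat a b c d) (Mat e f g h) =
  Mat (a * e + b * g) (a * f + b * h) (c * e + d * g) (c * f + d * h)

-- The adjugate, equal to (ad - bc) times the inverse matrix. When
-- ad - bc /= 0 it represents the inverse Möbius function.
inverse :: Mat -> Mat
inverse (Mat a b c d) = Mat d (negate b) (negate c) a
```

For example, with m = Mat 1 2 3 4 and n = Mat 2 0 1 1, apply (mul m n) 1 and apply m (apply n 1) are both 3/7, and apply (inverse m) (apply m 2) is 2.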
