Mark Dominus (陶敏修)

mjd@pobox.com

Archive:

| 2026: | J |

| 2025: | JFMAMJ |

| JASOND | |

| 2024: | JFMAMJ |

| JASOND | |

| 2023: | JFMAMJ |

| JASOND | |

| 2022: | JFMAMJ |

| JASOND | |

| 2021: | JFMAMJ |

| JASOND | |

| 2020: | JFMAMJ |

| JASOND | |

| 2019: | JFMAMJ |

| JASOND | |

| 2018: | JFMAMJ |

| JASOND | |

| 2017: | JFMAMJ |

| JASOND | |

| 2016: | JFMAMJ |

| JASOND | |

| 2015: | JFMAMJ |

| JASOND | |

| 2014: | JFMAMJ |

| JASOND | |

| 2013: | JFMAMJ |

| JASOND | |

| 2012: | JFMAMJ |

| JASOND | |

| 2011: | JFMAMJ |

| JASOND | |

| 2010: | JFMAMJ |

| JASOND | |

| 2009: | JFMAMJ |

| JASOND | |

| 2008: | JFMAMJ |

| JASOND | |

| 2007: | JFMAMJ |

| JASOND | |

| 2006: | JFMAMJ |

| JASOND | |

| 2005: | OND |

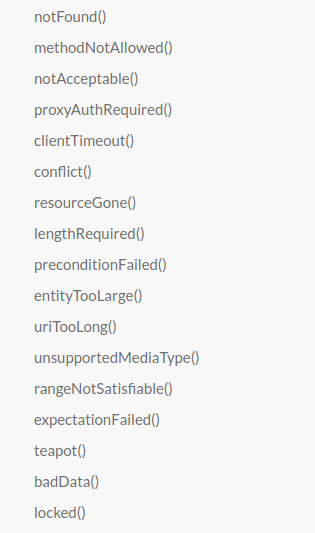

In this section:

Subtopics:

| Mathematics | 246 |

| Programming | 100 |

| Language | 95 |

| Miscellaneous | 75 |

| Book | 50 |

| Tech | 49 |

| Etymology | 35 |

| Haskell | 33 |

| Oops | 30 |

| Unix | 27 |

| Cosmic Call | 25 |

| Math SE | 25 |

| Law | 23 |

| Physics | 21 |

| Perl | 17 |

| Biology | 16 |

| Brain | 15 |

| Calendar | 15 |

| Food | 15 |

Comments disabled

Mon, 26 Jan 2026

An anecdote about backward compatibility

A long time ago I worked on a debugger program that our company used to debug software that it sold that ran on IBM System 370. We had IBM 3270 CRT terminals that could display (I think) eight colors (if you count black), but the debugger display was only in black and white. I thought I might be able to make it a little more usable by highlighting important items in color.

I knew that the debugger used a macro called WRTERM to write text to the terminal, and I thought maybe the description of this macro in the manual might provide some hint about how to write colored text.

In those days, that office didn't have online manuals; instead we had shelf after shelf of yellow looseleaf binders. Finding the binder you wanted was an adventure. More than once I went to my boss to say I couldn't proceed without the REXX language reference or whatever. Sometimes he would just shrug. Other times he might say something like “Maybe Matthew knows where that is.”

I would go ask Matthew about it. Probably he would just shrug. But if he didn't, he would look at me suspiciously, pull the manual from under a pile of papers on his desk, and wave it at me threateningly. “You're going to bring this back to me, right?”

See, because if Matthew didn't hide it in his desk, he might become the person who couldn't find it when he needed it.

Matthew could have photocopied it and stuck his copy in a new binder, but why do that when burying it on his desk was so much easier?

For years afterward I carried around my own photocopy of the REXX language reference, not because I still needed it, but because it had cost me so much trouble and toil to get it. To this day I remember its horrible IBM name: SC24-5239 Virtual Machine / System Product System Product Interpreter Reference. That's right, "System Product" was in there twice. It was the System Product Interpreter for the System Product, you see.

Anyway, I'm digressing. I did eventually find a copy of the IBM Assembler Product Macro Reference Document or whatever it was called, and looked up WRTERM and to my delight it took an optional parameter named COLOR. Jackpot!

My glee turned to puzzlement. If omitted, the default value for COLOR was BLACK.

Black? Not white? I read further.

And I learned that the only other permitted value was RED, and only if your terminal had a “two-color ribbon”.


Sun, 21 Sep 2025

My new git utility `what-changed-twice` needs a new name

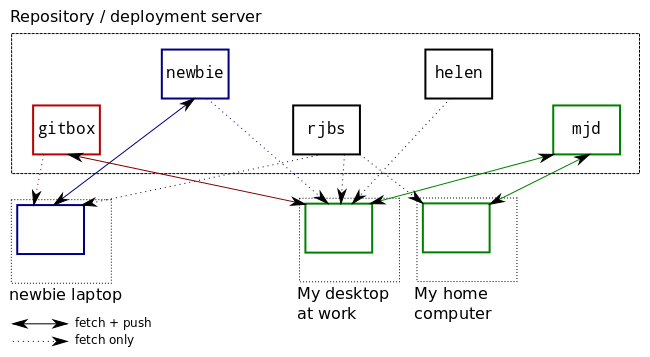

As I have explained in the past, my typical workflow is to go along committing stuff that might or might not make sense, then clean it all up at the end, doing multiple passes with git-add and git-rebase to get related changes into the same commit, and then to order the commits in a sensible way. Yesterday I built a new utility that I found helpful. I couldn't think of a name for it, so I called it what-changed-twice, which is not great, but I am bad at naming things and my first attempt was analyze-commits. I welcome suggestions. In this article I will call it Fred.

What is Fred for? I have a couple of uses for it so far.

Often as I work I'll produce a chain of commits that looks like this:

    470947ff minor corrections
    d630bf32 continue work on `jq` series
    c24b8b24 wip
    f4695e97 fix link
    a8aa1a5c sp
    5f1d7a61 WIP
    a337696f Where is the quincunx on the quincunx?
    39fe1810 new article: The fivefold symmetry of the quince
    0a5a8e2e update broken link
    196e7491 sp
    bdc781f6 new article: fpuzhpx
    40c52f47 merge old and new seasons articles and publish
    b59441cd finish updating with Star Wars Droids
    537a3545 droids and BJ and the Bear
    d142598c Add nicely formatted season tables to this old article
    19340470 mention numberphile video

It often happens that I will modify a file on Monday, modify it some more on Tuesday, correct a spelling error on Wednesday. I might have made 7 sets of changes to the main file, of which 4 are related, 2 others are related to each other but not to the other 4, and the last one is unrelated to any of the rest. When a file has changed more than once, I need to see what changed and then group the changes into related sets.

The sp commits are spelling corrections; if the error was made in the same unmerged topic branch I will want to squash the correction into the original commit so that the error never appears at all.

Some files changed only once, and I don't need to think about those at this stage. Later I can go back and split up those commits if it seems to make the history clearer.

Fred takes the output of git-log for the commits you are interested in:

$ git log --stat -20 main...topic | /tmp/what-changed-twice

It finds which files were modified in which commits, and it prints a report about any file that was modified in more than one commit:

    calendar/seasons.blog 196 40 d1
    math/centrifuge.blog 193 33
    misc/straight-men.blog 53 b5 bd
    prog/jq-2.blog 33 5f d6

    193 1934047
    196 196e749
    33 33a2304
    40 40c52f4
    53 537a354
    5f 5f1d7a6
    b5 b59441c
    bd bdc781f
    d1 d142598
    d6 d630bf3

The report is in two parts. The top part lists the path of each file that changed more than once in the log, along with the (highly abbreviated) commit IDs of the commits in which it changed. For example, calendar/seasons.blog changed in commits 196, 40, and d1. The second part of the report explains that 196 is actually an abbreviation for commit 196e749.

Now I can look to see what else changed in those three commits:

$ git show --stat 196e749 40c52f4 d142598

then look at the changes to calendar/seasons.blog in those three commits

$ git show 196e749 40c52f4 d142598 -- calendar/seasons.blog

and then decide if there are any changes I might like to squash together.

Many other files changed on the branch, but I only have to concern myself with four.

There's bonus information too. If a commit is not mentioned in the report, then it only changed files that didn't change in any other commit. That means that in a rebase, I can move that commit literally anywhere else in the sequence without creating a conflict. Only the commits in the report can cause conflicts if they are reordered.
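The report logic is small enough to sketch. What follows is a rough Python rendering of the idea, not Fred's actual Perl. The function names, the regular expressions for `git log --stat` output, and the rule "shortest unique prefix of at least two characters" are all assumptions made for illustration.

```python
import re
from collections import defaultdict

# Assumed shapes of `git log --stat` lines: "commit <hash>" headers, and
# stat lines that start with exactly one space, e.g. " path/to/file | 3 ++-".
COMMIT_RE = re.compile(r"^commit ([0-9a-f]+)")
STAT_RE = re.compile(r"^ (\S.*?)\s+\|\s")

def files_by_commit(log_text):
    """Map each path to the list of commits (in log order) that touched it."""
    touched = defaultdict(list)
    current = None
    for line in log_text.splitlines():
        m = COMMIT_RE.match(line)
        if m:
            current = m.group(1)
            continue
        m = STAT_RE.match(line)
        if m and current is not None:
            touched[m.group(1)].append(current)
    return touched

def abbreviate(ids, min_len=2):
    """Give each id its shortest prefix (>= min_len) that is unique within ids."""
    short = {}
    for cid in ids:
        n = min_len
        while n < len(cid) and any(
            other != cid and other.startswith(cid[:n]) for other in ids
        ):
            n += 1
        short[cid] = cid[:n]
    return short

def report(log_text):
    """Build the two-part report: repeated files first, then the abbreviation key."""
    touched = files_by_commit(log_text)
    repeated = {p: cs for p, cs in touched.items() if len(cs) > 1}
    ids = sorted({c for cs in repeated.values() for c in cs})
    short = abbreviate(ids)
    lines = [
        path + " " + " ".join(sorted(short[c] for c in commits))
        for path, commits in sorted(repeated.items())
    ]
    lines.append("")
    lines += [f"{short[c]} {c[:7]}" for c in ids]
    return "\n".join(lines)
```

Any commit that never appears in `repeated` touched only files that no other commit touched, which is the "bonus information" described above.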

I write most things in Python these days, but this one seemed to cry out for Perl. Here's the code.

Hmm, maybe I'll call it squash-what.


Fri, 29 Nov 2024

A complex bug with a ⸢simple⸣ fix

Last month I did a fairly complex piece of systems programming that worked surprisingly well. But it had one big bug that took me a day to track down.

One reason I find the bug interesting is that it exemplifies the sort of challenges that come up in systems programming. The essence of systems programming is that your program is dealing with the state of a complex world, with many independent agents it can't control, all changing things around. Often one can write a program that puts down a wrench and then picks it up again without looking. In systems programming, the program may have to be prepared for the possibility that someone else has come along and moved the wrench.

The other reason the bug is interesting is that although it was a big bug, fixing it required only a tiny change. I often struggle to communicate to nonprogrammers just how finicky and fussy programming is. Nonprogrammers, even people who have taken a programming class or two, are used to being harassed by crappy UIs (or by the compiler) about missing punctuation marks and trivially malformed inputs, and they think they understand how fussy programming is. But they usually do not. The issue is much deeper, and I think this is a great example that will help communicate the point.

The job of my program, called sync-spam, was to move several weeks of accumulated email from system S to system T. Each message was probably spam, but its owner had not confirmed that yet, and the message was not yet old enough to be thrown away without confirmation.

The probably-spam messages were stored on system S in a directory hierarchy with paths like this:

/spam/2024-10-18/…

where 2024-10-18 was the date the message had been received. Every message system S had received on October 18 was somewhere under /spam/2024-10-18.

One directory, the one for the current date, was "active", and new messages were constantly being written to it by some other programs not directly related to mine. The directories for the older dates never changed. Once sync-spam had dealt with the backlog of old messages, it would continue to run, checking periodically for new messages in the active directory.

The sync-spam program had a database that recorded, for each message, whether it had successfully sent that message from S to T, so that it wouldn't try to send the same message again.

The program worked like this:

- Repeat forever:
  - Scan the top-level spam directory for the available dates
  - For each date D:
    - Scan the directory for D and find the messages in it. Add to the database any messages not already recorded there.
    - Query the database for the list of messages for date D that have not yet been sent to T
    - For each such message:
      - Attempt to send the message
      - If the attempt was successful, record that in the database
  - Wait some appropriate amount of time and continue.

Okay, very good. The program would first attempt to deal with all the accumulated messages in roughly chronological order, processing the large backlog. Let's say that on November 1 it got around to scanning the active 2024-11-01 directory for the first time. There are many messages, and scanning takes several minutes, so by the time it finishes scanning, some new messages will be in the active directory that it hasn't seen. That's okay. The program will attempt to send the messages that it has seen. The next time it comes around to 2024-11-01 it will re-scan the directory and find the new messages that have appeared since the last time around.

But scanning a date directory takes several minutes, so we would prefer not to do it if we don't have to. Since only the active directory ever changes, if the program is running on November 1, it can be sure that none of the directories from October will ever change again, so there is no point in its rescanning them. In fact, once we have located the messages in a date directory and recorded them in the database, there is no point in scanning it again unless it is the active directory, the one for today's date.

So sync-spam had an elaboration that made it much more efficient. It was able to put a mark on a date directory that meant "I have completely scanned this directory and I know it will not change again". The algorithm was just as I said above, except with these elaborations.

- Repeat forever:
  - Scan the top-level spam directory for the available dates
  - For each date D:
    - If the directory for D is marked as having already been scanned, we already know exactly what messages are in it, since they are already recorded in the database.
    - Otherwise:
      - Scan the directory for D and find the messages in it. Add to the database any messages not already recorded there.
      - If D is not today's date, mark the directory for D as having been scanned completely, because we need not scan it again.
    - Query the database for the list of messages for date D that have not yet been sent to T
    - For each such message:
      - Attempt to send the message
      - If the attempt was successful, record that in the database
  - Wait some appropriate amount of time and continue.
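One pass of the loop, exactly as described at this point in the story, can be sketched as follows. This is a hypothetical Python model, not sync-spam's real code: a plain dict stands in for the spam directory tree, another dict for the database, a set for the "fully scanned" marks, and `send` is whatever callable attempts delivery. All of these names are assumptions.

```python
def run_pass(spam_dirs, today, db, scanned, send):
    """Run one iteration of the 'repeat forever' loop.

    spam_dirs: dict mapping a date string such as "2024-10-18" to a list of
               message ids (a stand-in for scanning the filesystem)
    today:     the current date as a string in the same format
    db:        dict mapping message id -> {"date": ..., "sent": bool}
    scanned:   set of dates marked as completely scanned
    send:      callable taking a message id, returning True on success
    """
    for date in sorted(spam_dirs):
        if date not in scanned:
            # The expensive step: scan the directory and record new messages.
            for msg in spam_dirs[date]:
                db.setdefault(msg, {"date": date, "sent": False})
            # The rule as stated in the list above.
            if date != today:
                scanned.add(date)
        # Try every message for this date that has not been sent yet.
        for msg, record in db.items():
            if record["date"] == date and not record["sent"]:
                if send(msg):
                    record["sent"] = True
```

Calling `run_pass` repeatedly with a pause in between gives the outer loop; old directories are scanned once and then served from the database alone.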

It's important to not mark the active directory as having been completely scanned, because new messages are continually being deposited into it until the end of the day.

I implemented this, we started it up, and it looked good. For several days it processed the backlog of unsent messages from September and October, and it successfully sent most of them. It eventually caught up to the active directory for the current date, 2024-11-01, scanned it, and sent most of the messages. Then it went back and started over again with the earliest date, attempting to send any messages that it hadn't sent the first time.

But a couple of days later, we noticed that something was wrong. Directories 2024-11-02 and 2024-11-03 had been created and were well-stocked with the messages that had been received on those dates. The program had found the directories for those dates and had marked them as having been scanned, but there were no messages from those dates in its database.

Now why do you suppose that is?

(Spoilers will follow the horizontal line.)

I investigated this in two ways. First, I made sync-spam's logging more detailed and looked at the results. While I was waiting for more logs to accumulate, I built a little tool that would generate a small, simulated spam directory on my local machine, and then I ran sync-spam against the simulated messages, to make sure it was doing what I expected.

In the end, though, neither of these led directly to my solving the problem; I just had a sudden inspiration. This is very unusual for me. Still, I probably wouldn't have had the sudden inspiration if the information from the logging and the debugging hadn't been percolating around my head. Fortune favors the prepared mind.

The problem was this: some other agent was creating the 2024-11-02 directory a bit prematurely, say at 11:55 PM on November 1.

Then sync-spam came along in the last minutes of November 1 and started its main loop. It scanned the spam directory for available dates, and found 2024-11-02. It processed the unsent messages from the directories for earlier dates, then looked at 2024-11-02 for the first time. And then, at around 11:58, as per above it would:

- Scan the directory for 2024-11-02 and find the messages in it. Add to the database any messages not already recorded there.

There weren't any yet, because it was still 11:58 on November 1.

- If 2024-11-02 is not today's date, mark the directory as having been scanned completely, because we need not scan it again.

Since the 2024-11-02 directory was not the one for today's date (it was still 11:58 on November 1), sync-spam recorded that it had scanned that directory completely and need not scan it again.

Five minutes later, at 00:03 on November 2, there would be new messages in the 2024-11-02 directory, which was now the active directory, but sync-spam wouldn't look for them, because it had already marked 2024-11-02 as having been scanned completely.

This complex problem in this large program was completely fixed by changing:

    if ($date ne $self->current_date) {
        $self->mark_this_date_fully_scanned($date_dir);
    }

to:

    if ($date lt $self->current_date) {
        $self->mark_this_date_fully_scanned($date_dir);
    }

(ne and lt are Perl-speak for "not equal to" and "less than".)
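The difference between the two tests shows up only for a directory dated in the future. Here is a small Python illustration of the two predicates; the function names are made up for this sketch. It relies on the fact that dates written as YYYY-MM-DD sort chronologically when compared as plain strings, which is also why a string comparison works in the Perl.

```python
def should_mark_buggy(date, today):
    """Original rule: mark any directory that is not today's."""
    return date != today

def should_mark_fixed(date, today):
    """Corrected rule: mark only directories strictly older than today."""
    return date < today

today = "2024-11-01"
for date in ("2024-10-31", "2024-11-01", "2024-11-02"):
    print(date, should_mark_buggy(date, today), should_mark_fixed(date, today))
```

The two rules agree on yesterday's and today's directories. They disagree only on the prematurely created 2024-11-02, which the original rule marks as finished before any mail has arrived in it.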

Many organizations have their own version of a certain legend, which tells how a famous person from the past was once called out of retirement to solve a technical problem that nobody else could understand. I first heard the General Electric version of the legend, in which Charles Proteus Steinmetz was called out of retirement to figure out why a large complex of electrical equipment was not working.

In the story, Steinmetz walked around the room, looking briefly at each of the large complicated machines. Then, without a word, he took a piece of chalk from his pocket, marked one of the panels, and departed. When the puzzled engineers removed that panel, they found a failed component, and when that component was replaced, the problem was solved.

Steinmetz's consulting bill for $10,000 arrived the following week. Shocked, the bean-counters replied that $10,000 seemed an exorbitant fee for making a single chalk mark, and, hoping to embarrass him into reducing the fee, asked him to itemize the bill.

Steinmetz returned the itemized bill:

| One chalk mark | $1.00 |

| Knowing where to put it | $9,999.00 |

| TOTAL | $10,000.00 |

This felt like one of those times. Any day when I can feel a connection with Charles Proteus Steinmetz is a good day.

This episode also makes me think of the following variation on an old joke:

A: Ask me what is the most difficult thing about systems programming.

B: Okay, what is the most difficult thing ab—

A: TIMING!


Sat, 16 Dec 2023

My Git pre-commit hook contained a footgun

The other day I made some changes to a program, but when I ran the tests they failed in a very bizarre way I couldn't understand. After a bit of investigation I still didn't understand. I decided to try to narrow down the scope of possible problems by reverting the code to the unmodified state, then introducing changes from one file at a time.

My plan was: commit all the new work, reset the working directory back to the last good commit, and then start pulling in file changes. So I typed in rapid succession:

    git add -u
    git commit -m 'broken'
    git branch wat
    git reset --hard good

So the complete broken code was on the new branch wat.

Then I wanted to pull in the first file from wat. But when I examined wat there were no changes.

Wat.

I looked all around the history and couldn't find the changes. The wat branch was there but it was on the current commit, the one with none of the changes I wanted. I checked in the reflog for the commit and didn't see it.

Eventually I looked back in my terminal history and discovered the problem: I had a Git pre-commit hook which git-commit had attempted to run before it made the new commit. It checks for strings I don't usually intend to commit, such as XXX and the like.

This time one of the files had something like that. My pre-commit hook had printed an error message and exited with a failure status, so git-commit aborted without making the commit. But I had typed the commands in quick succession without paying attention to what they were saying, so I went ahead with the git-reset without even seeing the error message. This wiped out the working tree changes that I had wanted to preserve.

Fortunately the git-add had gone through, so the modified files were in the repository anyway, just hard to find. And even more fortunately, last time this happened to me, I wrote up instructions about what to do.

This time around recovery was quicker and easier. I knew I only needed to recover stuff from the last add command, so instead of analyzing every loose object in the repository, I did

find .git/objects -mmin -10 -type f

to locate loose objects that had been modified in the last ten minutes. There were only half a dozen or so. I was able to recover the lost changes without too much trouble.
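The same search can be scripted. This is a minimal Python sketch under a couple of assumptions: the helper name is invented, and it deliberately skips the `pack` and `info` subdirectories of `.git/objects` so that only loose objects (which live in two-hex-digit subdirectories) are reported, which the plain `find` command does not do.

```python
import os
import time

def recent_loose_objects(objects_dir, minutes=10, now=None):
    """Return paths of loose object files modified within the last `minutes`."""
    now = time.time() if now is None else now
    cutoff = now - minutes * 60
    found = []
    for root, dirs, files in os.walk(objects_dir):
        # Prune pack/ and info/ in place so os.walk does not descend into them.
        dirs[:] = [d for d in dirs if d not in ("pack", "info")]
        for name in files:
            path = os.path.join(root, name)
            if os.path.getmtime(path) >= cutoff:
                found.append(path)
    return sorted(found)
```

Each path it returns corresponds to an object ID formed from the directory name followed by the file name, which can then be inspected with `git cat-file -p`.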

Looking back at that previous article, I see that it said:

it only took about twenty minutes… suppose that it had taken much longer, say forty minutes instead of twenty, to rescue the lost blobs from the repository. Would that extra twenty minutes have been time wasted? No! … The rescue might have cost twenty extra minutes, but if so it was paid back with forty minutes of additional Git expertise…

To that I would like to add, the time spent writing up the blog article was also well-spent, because it meant that seven years later I didn't have to figure everything out again, I just followed my own instructions from last time.

But there's a lesson here I'm still trying to figure out. Suppose I want to prevent this sort of error in the future. The obvious answer is “stop splatting stuff onto the terminal without paying attention, jackass”, but that strategy wasn't sufficient this time around and I couldn't think of any way to make it more likely to work next time around.

You have to play the hand you're dealt. If I can't fix myself, maybe I can fix the software. I would like to make some changes to the pre-commit hook to make it easier to recover from something like this.

My first idea was that the hook could unconditionally save the staged changes somewhere before it started, and then once it was sure that it would complete it could throw away the saved changes. For example, it might use the stash for this.

(Although, strangely, git-stash does not seem to have an easy way to say “stash the current changes, but without removing them from the working tree”. Maybe git-stash save followed by git-stash apply would do what I wanted? I have not yet experimented with it.)

Rather than using the stash, the hook might just commit everything (with commit -n to prevent infinite loops) and then reset the commit immediately, before doing whatever it was planning to do. Then if it was successful, Git would make a second, permanent commit and we could forget about the one made by the hook. But if something went wrong, the hook's commit would still be in the reflog. This doubles the number of commits you make. That doesn't take much time, because Git commit creation is lightning fast. But it would tend to clutter up the reflog.

Thinking on it now, I wonder if a better approach isn't to turn the pre-commit hook into a post-commit hook. Instead of a pre-commit hook that does this:

- Check for errors in staged files
- If there are errors:
  - Fix the files (if appropriate)
  - Print a message
  - Fail
- Otherwise:
  - Exit successfully
  - (git-commit continues and commits the changes)

How about a post-commit hook that does this:

- Check for errors in the files that changed in the current head commit
- If there are errors:
  - Soft-reset back to the previous commit
  - Fix the files (if appropriate)
  - Print a message
  - Fail
- Otherwise:
  - Exit successfully

Now suppose I ignore the failure, and throw away the staged changes. It's okay, the changes were still committed and the commit is still in the reflog. This seems clearly better than my earlier ideas.
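A post-commit hook along those lines might look roughly like this. It is only a sketch of the idea, not a hook anyone is known to run. The `FORBIDDEN` list, the helper names, and the decision to skip the "fix the files" step are all assumptions, and it does not handle the very first commit in a repository, where there is no `HEAD^` to reset to.

```python
import subprocess
import sys

# Hypothetical list; the article mentions only "XXX and the like".
FORBIDDEN = ("XXX",)

def find_forbidden(text, patterns=FORBIDDEN):
    """Return (line_number, pattern) pairs for each forbidden string found."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for pat in patterns:
            if pat in line:
                hits.append((lineno, pat))
    return hits

def git(*args):
    """Run a git command; return its stdout as text, or None if it failed."""
    proc = subprocess.run(["git", *args], capture_output=True)
    if proc.returncode != 0:
        return None
    return proc.stdout.decode("utf-8", errors="replace")

def main():
    # Names of the files touched by the commit that was just created.
    names = git("diff-tree", "--no-commit-id", "--name-only", "-r", "HEAD") or ""
    problems = []
    for path in names.splitlines():
        content = git("show", f"HEAD:{path}")
        if content is None:  # for example, the commit deleted this file
            continue
        problems += [(path, n, pat) for n, pat in find_forbidden(content)]
    if not problems:
        return 0
    # Step back one commit; the rejected commit stays reachable via the reflog.
    git("reset", "--soft", "HEAD^")
    for path, n, pat in problems:
        print(f"{path}:{n}: contains {pat}", file=sys.stderr)
    return 1
```

Installed as `.git/hooks/post-commit`, the script would end with `sys.exit(main())`. Git ignores the exit status of a post-commit hook, so the "Fail" step here amounts to the soft reset plus the printed message.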

I'll consider it further and report back if I actually do anything about this.

Larry Wall once said that too many programmers will have a problem, think of a solution, and implement it, but it works better if you can think of several solutions, then implement the one you think is best.

That's a lesson I think I have learned. Thanks, Larry.

Addendum

I see that Eric Raymond's version of the jargon file, last revised December 2003, omits “footgun”. Surely this word is not that new? I want to see if it was used on Usenet prior to that update, but Google Groups search is useless for this question. Does anyone have suggestions for how to proceed?


Sun, 26 Nov 2023

A Qmail example of dealing with unavoidable race conditions

[ I recently posted about a race condition bug reported by Joe Armstrong and said “this sort of thing is now in the water we swim in, but it wasn't yet [in those days of olde].” This is more about that. ]

I learned a lot by reading everything Dan Bernstein wrote about the design of qmail. A good deal of it is about dealing with potential issues just like Armstrong's. The mail server might crash at any moment, perhaps because someone unplugged the server. In DJB world, it is unacceptable for mail to be lost, ever, and also for the mail queue structures to be corrupted if there was a crash. That sounds obvious, right? Apparently it wasn't; sendmail would do those things.

(I know someone wants to ask what about Postfix? At the time Qmail was released, Postfix was still called ‘VMailer’. The ‘V’ supposedly stood for “Venema” but the joke was that the ‘V’ was actually for “vaporware” because that's what it was.)

A few weeks ago I was explaining one of Qmail's data structures to a junior programmer. Suppose a local user queues an outgoing message that needs to be delivered to 10,000 recipients in different places. Some of the deliveries may succeed immediately. Others will need to be retried, perhaps repeatedly. Eventually (by default, ten days) delivery will time out and a bounce message will be delivered back to the sender, listing the recipients who did not receive the delivery. How does Qmail keep track of this information?

The 2023 junior programmer wanted to store a JSON structure or something. That is not what Qmail does. If the server crashes halfway through writing a JSON file, it will be corrupt and unreadable. JSON data can be written to a temporary file and the original can be replaced atomically, but suppose you succeed in delivering the message to 9,999 of the 10,000 recipients and the system crashes before you can atomically update the file? Now the deliveries will be re-attempted for those 9,999 recipients and they will get duplicate copies.
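For reference, the "temporary file, then atomic replace" approach mentioned above usually looks something like the following Python sketch. The function name is invented for illustration. It protects against a half-written file, but as the paragraph explains, it does nothing about deliveries that succeeded after the last replace and before a crash.

```python
import json
import os
import tempfile

def write_json_atomically(path, data):
    """Write data as JSON so that readers see either the old file or the new one."""
    directory = os.path.dirname(os.path.abspath(path))
    # Create the temporary file in the same directory so the final rename
    # stays on one filesystem.
    fd, tmp = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as f:
            json.dump(data, f)
            f.flush()
            os.fsync(f.fileno())  # push the bytes to disk before renaming
        os.replace(tmp, path)     # atomic rename on POSIX systems
    except BaseException:
        if os.path.exists(tmp):
            os.unlink(tmp)
        raise
```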

Here's what Qmail does instead. The file in the queue directory is in the following format:

Trecip1@host1■Trecip2@host2■…Trecip10000@host10000■

where ■ represents a zero byte. To 2023 eyes this is strange and uncouth, but to a 20th-century system programmer, it is comfortingly simple.

When Qmail wants to attempt a delivery to recip1346@host1346 it has located that address in the file and seen that it has a T (“to-do”) on the front. If it had been a D (“done”) Qmail would know that delivery to that address had already succeeded, and it would not attempt it again.

If delivery does succeed, Qmail updates the T to a D:

    if (write(fd,"D",1) != 1) { close(fd); break; }
    /* further errors -> double delivery without us knowing about it, oh well */
    close(fd);
    return;

The update of a single byte will be done all at once or not at all. Even writing two bytes is riskier: if the two bytes span a disk block boundary, the power might fail after only one of the modified blocks has been written out. With a single byte nothing like that can happen. Absent a catastrophic hardware failure, the data structure on the disk cannot become corrupted.

Mail can never be lost. The only thing that can go wrong here is if the local system crashes in between the successful delivery and the updating of the byte; in this case the delivery will be attempted again, to that one user.
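The file layout and the one-byte update can be modeled in a few lines of Python. This is a sketch of the format as described in this article, not of Qmail's real code, and the function names are invented. It also makes no claim about what Python or the operating system guarantees during a power failure; it only shows which byte gets overwritten.

```python
import os

def parse_records(buf):
    """Yield (offset, status, address) for each NUL-terminated record in buf."""
    pos = 0
    while pos < len(buf):
        end = buf.index(b"\0", pos)
        yield pos, chr(buf[pos]), buf[pos + 1:end].decode()
        pos = end + 1

def mark_done(path, address):
    """Flip the status byte of `address` from T to D; return True if it was flipped."""
    with open(path, "r+b") as f:
        buf = f.read()
        for offset, status, addr in parse_records(buf):
            if addr == address and status == "T":
                f.seek(offset)
                f.write(b"D")         # the only byte in the file that changes
                f.flush()
                os.fsync(f.fileno())  # ask the OS to put it on disk now
                return True
    return False
```

Because the record boundaries never move and only one byte is ever rewritten, a reader that sees the file at any moment finds every address intact with either a T or a D in front of it.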

Addenda

- I think the data structure could even be updated concurrently by more than one process, although I don't think Qmail actually does this. Can you run multiple instances of qmail-send that share a queue directory? (Even if you could, I can't think of any reason it would be a good idea.)
- I had thought the update was performed by qmail-remote, but it appears to be done by qmail-send, probably for security partitioning reasons. qmail-local runs as a variable local user, so it mustn't have permission to modify the queue file, or local users would be able to steal email. qmail-remote doesn't have this issue, but it would be foolish to implement the same functionality in two places without a really good reason.


Sat, 25 Nov 2023

Puzzling historical artifact in “Programming Erlang”?

Lately I've been reading Joe Armstrong's book Programming Erlang, and today I was brought up short by this passage from page 208:

Why Spawning and Linking Must Be an Atomic Operation

Once upon a time Erlang had two primitives, spawn and link, and spawn_link(Mod, Func, Args) was defined like this:

    spawn_link(Mod, Func, Args) ->
        Pid = spawn(Mod, Func, Args),
        link(Pid),
        Pid.

Then an obscure bug occurred. …

Can you guess the obscure bug? I don't think I'm unusually skilled at concurrent systems programming, and I'm certainly no Joe Armstrong, but I thought the problem was glaringly obvious:

The spawned process died before the link statement was called, so the process died but no error signal was generated.
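The gap between the two calls can be shown in a toy model. The following is Python, not Erlang, and it is single-threaded and entirely made up for illustration: a "process" is just an object that can die, a "link" is a mailbox that receives an exit message, and linking to a process that is already dead is assumed to do nothing at all.

```python
class Proc:
    """A toy process that notifies whoever is linked at the moment it dies."""

    def __init__(self):
        self.alive = True
        self.linked_inboxes = []

    def die(self, reason):
        self.alive = False
        for inbox in self.linked_inboxes:
            inbox.append(("EXIT", reason))

def link(inbox, proc):
    # Toy-model assumption: linking to a dead process silently does nothing.
    if proc.alive:
        proc.linked_inboxes.append(inbox)

def spawn_then_link(inbox, crash_early):
    """Two separate steps, as in the old definition quoted above."""
    proc = Proc()
    if crash_early:
        proc.die("boom")  # the child dies in the gap between spawn and link
    link(inbox, proc)
    return proc

def spawn_link_atomic(inbox, crash_early):
    """The link exists from the moment the process does."""
    proc = Proc()
    proc.linked_inboxes.append(inbox)
    if crash_early:
        proc.die("boom")
    return proc
```

With `crash_early=True`, the two-step version leaves the parent's inbox empty even though the child is dead, while the atomic version delivers the exit message.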

I scratched my head over this for quite some time. Not over the technical part, but over how famous expert Joe Armstrong could have missed this.

Eventually I decided that it was just that this sort of thing is now in the water we swim in, but it wasn't yet in the primeval times Armstrong was writing about. Sometimes problems are ⸢obvious⸣ because it's thirty years later and everyone has thirty years of experience dealing with those problems.

Another example

I was reminded of a somewhat similar example. Before the WWW came, a sysadmin's view of network server processes was very different than it is now. We thought of them primarily as attack surfaces, and ran as few as possible, as little as possible, and tried hard to prevent anyone from talking to them.

Partly this was because encrypted, authenticated communications protocols were still an open research area. We now have ssh and https layers to build on, but in those days we built on sand.

Another reason is that networking itself was pretty new, and we didn't yet have a body of good technique for designing network services and protocols, or for partitioning trust. We didn't know how to write good servers, and the ones that had been written were bad, often very bad. Even thirty years ago, sendmail was notorious and had been a vector for mass security failures, and even something as innocuous-seeming as finger had turned out to have major issues.

When the Web came along, every sysadmin was thrust into a terrifying new world in which users clamored to write network services that could be talked to at all times by random Internet people all over the world. It was quite a change.

[ I wrote more about system race conditions, but decided to postpone it to Monday. Check back then. ]


Mon, 23 Oct 2023

Katara is taking a Data Structures course this year. The most recent assignment gave her a lot of trouble, partly because it was silly and made no sense, but also because she does not yet know an effective process for writing programs, and the course does not attempt to teach her. On the day the last assignment was due I helped her fix the remaining bugs and get it submitted. This is the memo I wrote to her to memorialize the important process issues that I thought of while we were working on it.

You lost a lot of time and energy dealing with issues like: Using vim; copying files back and forth with scp; losing the network connection; the college shared machine is slow and yucky.

It's important to remove as much friction as possible from your basic process. Otherwise it's like trying to cook with dull knives and rusty pots, except worse because it interrupts your train of thought. You can't do good work with bad tools.

When you start the next project, start it in VSCode from the beginning. And maybe set aside an hour or two before you start in earnest, just to go through the VSCode tutorial and familiarize yourself with its basic features, without trying to do that at the same time you are actually thinking about your homework. This will pay off quickly.

It's tempting to cut corners when writing code. For example:

It's tempting to use the first variable or function name you think of instead of taking a moment to think of a suggestive one. You had three classes in your project, all with very similar names. You might imagine that this doesn't matter, you can remember which is which. But remembering imposes a tiny cost every time you do it. These tiny costs seem insignificant. But they compound.

It's tempting to use a short, abbreviated variable or method name instead of a longer more recognizable one because it's quicker to type. Any piece of code is read more often than it is written, so that is optimizing in the wrong place. You need to optimize for quick and easy reading, at the cost of slower and more careful writing, not the other way around.

It's tempting to write a long complicated expression instead of two or three shorter ones where the intermediate results are stored in variables. But then every time you look at the long expression you have to pause for a moment to remember what is going on.

It's tempting to repeat the same code over and over instead of taking the time to hide it behind an interface. For example your project was full of array[d-1900] all over. This minus-1900 thing should have been hidden inside one of the classes (I forget which). Any code outside that had to communicate with this class should have done so with full year numbers like 1926. That way, when you're not in that one class, you can ignore and forget about the issue entirely. Similarly, if code outside a class is doing the same thing in more than one place, it often means that the class needs another method that does that one thing. You add that method, and then the code outside can just call the method when it needs to do the thing. You advance the program by extending the number of operations it can perform without your thinking of them.

If something is messy, it is tempting to imagine that it doesn't matter. It does matter. Those costs are small but compound. Invest in cleaning up messy code, because if you don't the code will get worse and worse until the mess is a serious impediment. This is like what happens when you are cooking if you don't clean up as you go. At first it's only a tiny hindrance, but if you don't do it constantly you find yourself working in a mess, making mistakes, and losing and breaking things.
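Here is a minimal sketch of what hiding the minus-1900 thing might look like. It is in Python rather than the language of the assignment, and the class name, method names, and the 200-year range are all made up for illustration; only the idea of keeping the offset in one place comes from the memo.

```python
class YearlyCounts:
    """Counts per calendar year. The array offset is a private detail."""

    BASE_YEAR = 1900  # assumption: the data starts in 1900, as in array[d-1900]

    def __init__(self, num_years):
        self._counts = [0] * num_years

    def _index(self, year):
        # The only place in the program that knows about the offset.
        index = year - self.BASE_YEAR
        if not 0 <= index < len(self._counts):
            raise ValueError(f"year {year} is out of range")
        return index

    def add(self, year, amount=1):
        self._counts[self._index(year)] += amount

    def count_for(self, year):
        return self._counts[self._index(year)]


# Code outside the class talks in full year numbers only.
counts = YearlyCounts(num_years=200)
counts.add(1926)
counts.add(1926)
print(counts.count_for(1926))
```

Outside the class there is no subtraction anywhere, so there is nothing to remember and nothing to get wrong. A bad year also fails loudly in one place instead of quietly indexing the wrong slot.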

Debugging is methodical. Always have clear in your mind what question you are trying to answer, and what your plan is for investigating that question. The process looks like this:

I don't like that it is printing out 0 instead of 1. Why is it doing that? Is the printing wrong, or is the printing correct but the data is wrong?

I should go into the function that does the printing, and print out the data in the simplest way possible, to see if it is correct. (If it's already printing out the data in the simplest way possible, the problem must be in the data.)

(Supposing that it's the data that is bad) Where did the bad data come from? If it came from some other function, what function was it? Did that function make up the wrong data from scratch, or did it get it from somewhere else?

If the function got the data from somewhere else, did it pass it along unchanged or did it modify the data? If it modified the data, was the data correct when the function got it, or was it already wrong?

The goal here is to point the Finger of Blame: What part of the code is really responsible for the problem? First you accuse the code that actually prints the wrong result. Then that code says “Nuh uh, it was like that when I got it, go blame that other guy that gave it to me.” Eventually you find the smoking gun.

Novice programmers often imagine that they can figure out what is wrong from looking at the final output and intuiting the solution Sherlock Holmes style. This is mistaken. Nobody can do this. Debugging is an engineering discipline: You come up with a hypothesis, then test the hypothesis. Then you do it again.
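To make the Finger of Blame concrete, here is a toy sketch in Python. The three functions and the planted bug are invented for the example. The point is only the order of the accusations: first the code that prints, then whoever handed it the data, and so on back up the chain.

```python
def parse_scores(text):
    # Stage 1: turn "3,4,5" into [3, 4, 5].
    return [int(field) for field in text.split(",")]


def total(scores):
    # Stage 2: deliberately buggy for the example, it skips the first score.
    result = 0
    for score in scores[1:]:
        result += score
    return result


def report(value):
    # Stage 3: format the value for printing.
    return f"total = {value}"


def blame(text, expected_scores, expected_total):
    """Return the name of the stage responsible for a wrong result, or None."""
    scores = parse_scores(text)
    value = total(scores)
    output = report(value)
    # First accuse the code that prints: was the data it received correct?
    if value == expected_total:
        return None if output == f"total = {expected_total}" else "report"
    # The printer says "it was like that when I got it". Accuse its supplier.
    if scores == expected_scores:
        return "total"
    # The supplier also received bad data, so go one step further back.
    return "parse_scores"


print(blame("3,4,5", [3, 4, 5], 12))
```

Each check is one hypothesis and one test of it. In a real program you would do the same thing by hand with print statements or a debugger, one stage at a time.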

Ask Dad for assistance when appropriate. I promise not to do anything that would violate the honor code.

Something we discussed that I forgot to include in the memo is: After you fix something significant, or add significant new functionality, make a checkpoint copy of the entire source code. This can be as simple as copying it all into a separate folder. That way, when you are fixing the next thing, if you mess up and break everything, it's easy to get back to a known-good state. The computer is really clumsy to use for many tasks, but it's just great at keeping track of information, so exploit that when you can.
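As a rough sketch of how little is needed, here is a checkpoint helper in Python. The function name and the timestamped folder naming are my own assumptions; copying the folder by hand in a file manager does the same job.

```python
import shutil
import tempfile
import time
from pathlib import Path


def make_checkpoint(source_dir, checkpoint_root):
    """Copy source_dir into a new timestamped folder under checkpoint_root.

    Keep checkpoint_root outside source_dir, or the copy would try to
    include the checkpoints themselves.
    """
    source = Path(source_dir)
    stamp = time.strftime("%Y%m%d-%H%M%S")
    destination = Path(checkpoint_root) / f"{source.name}-{stamp}"
    shutil.copytree(source, destination)  # fails if the destination already exists
    return destination


# Demonstration in a throwaway directory, with a made-up one-file project.
with tempfile.TemporaryDirectory() as scratch:
    project = Path(scratch) / "project"
    project.mkdir()
    (project / "Main.java").write_text("class Main {}")
    saved = make_checkpoint(project, Path(scratch) / "checkpoints")
    print(sorted(path.name for path in saved.iterdir()))
```

Getting back to the known-good state is then just copying the saved folder over the broken one.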

I think CS curricula should have a class that focuses specifically on these issues, on the matter of how do you actually write software?

But they never do.

[Other articles in category /prog] permanent link

Wed, 13 Sep 2023

Horizontal and vertical complexity

Note: The jumping-off place for this article is a conference talk which I did not attend. You should understand this article as rambling musings on related topics, not as a description of the talk or a response to it or a criticism of it or as a rebuttal of its ideas.

A co-worker came back from PyCon reporting on a talk called “Wrapping up the Cruft - Making Wrappers to Hide Complexity”. He said:

The talk was focussed on hiding complexity for educational purposes. … The speaker works for an educational organisation … and provided an example of some code for blinking lights on a single board machine. It was 100 lines long, you had to know about a bunch of complexity that required you to have some understanding of the hardware, then an example where it was the initialisation was wrapped up in an import and for the kids it was as simple as selecting a colour and which led to light up. And how much more readable the code was as a result.

The better we can hide how the sausage is made the more approachable and easier it is for those who build on it to be productive. I think it's good to be reminded of this lesson.

I was fully on board with this until the last bit, which gave me an uneasy feeling. Wrapping up code this way reduces horizontal complexity in that it makes the top level program shorter and quicker. But it increases vertical complexity because there are now more layers of function calling, more layers of interface to understand, and more hidden magic behavior. When something breaks, your worries aren't limited to understanding what is wrong with your code. You also have to wonder about what the library call is doing. Is the library correct? Are you calling it correctly? The difficulty of localizing the bug is larger, and when there is a problem it may be in some module that you can't see, and that you may not know exists.
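I didn't see the code from the talk, so the following Python sketch is entirely hypothetical: a fake board standing in for the hardware, a made-up wiring table, and a one-function wrapper of the kind described. It is only meant to show where the two kinds of complexity end up.

```python
class FakeBoard:
    """Stand-in for real hardware. Hypothetical, for illustration only."""

    def __init__(self):
        self.configured_pins = set()
        self.lit = {}

    def configure_output(self, pin):
        self.configured_pins.add(pin)

    def write(self, pin, colour):
        if pin not in self.configured_pins:
            raise RuntimeError(f"pin {pin} was never configured")
        self.lit[pin] = colour


# The hidden layer: wiring and initialisation wrapped up by a library author.
_LED_PINS = {"left": 17, "right": 27}  # assumed wiring, not from the talk


def light(board, led, colour):
    pin = _LED_PINS[led]
    board.configure_output(pin)
    board.write(pin, colour)


# The top level: all the student has to write.
board = FakeBoard()
light(board, "left", "red")
print(board.lit)
```

The top level is two lines, which is the horizontal win. But if the student asks for an LED named "middle", the error is a KeyError raised inside a table they have never seen, which is the vertical cost.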

Good interfaces successfully hide most of this complexity, but even in the best instances the complexity has only been hidden, and it is all still there in the program. An uncharitable description would be that the complexity has been swept under the carpet. And this is the best case! Bad interfaces don't even succeed in hiding the complexity, which keeps leaking upward, like a spreading stain on that carpet, one that warns of something awful underneath.

Advice about how to write programs bangs the same drum over and over and over:

- Reduce complexity

- Do the simplest thing that could possibly work

- You ain't gonna need it

- Explicit is better than implicit

But here we have someone suggesting the opposite. We should be extremely wary.

There is always a tradeoff. Leaky abstractions can increase the vertical complexity by more than they decrease the horizontal complexity. Better-designed abstractions can achieve real wins.

It’s a hard, hard problem. That’s why they pay us the big bucks.

Ratchet effects

This is a passing thought that I didn't consider carefully enough to work into the main article.

A couple of years ago I wrote an article called Creeping featurism and the ratchet effect about how adding features to software, or adding more explanations to the manual, is subject to a “ratcheting force”. The benefit of the change is localized and easy to imagine:

You can imagine a confused person in your head, someone who happens to be confused in exactly the right way, and who is miraculously helped out by the presence of the right two sentences in the exact right place.

But the cost of the change is that the manual is now a tiny bit larger. It doesn't affect any specific person. But it imposes a tiny tax on everyone who uses the manual.

Similarly adding a feature to software has an obvious benefit, so there's pressure to add more features, and the costs are hidden, so there's less pressure in the opposite direction.

And similarly, adding code and interfaces and libraries to software has an obvious benefit: look how much smaller the top-level code has become! But the cost, that the software is 0.0002% more complex, is harder to see. And that cost increases imperceptibly, but compounds exponentially. So you keep moving in the same direction, constantly improving the software architecture, until one day you wake up and realize that it is unmaintainable. You are baffled. What could have gone wrong?

Kent Beck says, “design isn't free”.

Anecdote

The original article is in the context of a class for beginners where the kids just want to make the LEDs light up. If I understand the example correctly, in this context I would probably have made the same choice for the same reason.

But I kept thinking of an example where I made the opposite choice. I taught an introduction to programming in C class about thirty years ago. The previous curriculum had considered pointers an advanced topic and tried to defer them to the middle of the semester. But the author of the curriculum had had a big problem: you need pointers to deal with scanf. What to do?

The solution chosen by the previous curriculum was to supply the students with a library of canned input functions like

int get_int(void); /* Read an integer from standard input */

These used scanf under the hood. (Under the carpet, one might say.)

But all the code with pointers was hidden.

I felt this was a bad move. Even had the library been a perfect abstraction (it wasn't) and completely bug-free (it wasn't) it would still have had a giant flaw: Every minute of time the students spent learning to use this library was a minute wasted on something that would never be of use and that had no intrinsic value. Every minute of time spent on this library was time that could have been spent learning to use pointers! People programming in C will inevitably have to understand pointers, and will never have to understand this library.

My co-worker from the first part of this article wrote:

The better we can hide how the sausage is made the more approachable and easier it is for those who build on it to be productive.

In some educational contexts, I think this is a good idea. But not if you are trying to teach people sausage-making!

[Other articles in category /prog] permanent link

Mon, 27 Feb 2023

I wish people would stop insisting that Git branches are nothing but refs

I periodically write about Git, and sometimes I say something like:

Branches are named sequences of commits

and then a bunch of people show up and say “this is wrong, a branch is nothing but a ref”. This is true, but only in a very limited and unhelpful way. My description is a more useful approximation to the truth.

Git users think about branches and talk about branches. The Git documentation talks about branches and many of the commands mention branches. Pay attention to what experienced users say about branches while using Git, and it will be clear that they do not think of branches as just refs. In that sense, branches do exist: they are part of our mental model of how the repository works.

Are you a Git user who wants to argue about this? First ask yourself what we mean when we say “is your topic branch up to date?” “be sure to fetch the dev branch” “what branch did I do that work on?” “is that commit on the main branch or the dev branch?” “Has that work landed on the main branch?” “The history splits in two here, and the left branch is Alice's work but the right branch is Bob's”. None of these can be understood if you think that a branch is nothing but a ref. All of these examples show that when even the most sophisticated Git users talk about branches, they don't simply mean refs; they mean sequences of commits.

Here's an example from the official Git documentation, one of many: “If the upstream branch already contains a change you have made…”. There's no way to understand this if you insist that “branch” here means a ref or a single commit. The current Git documentation contains the word “branch” over 1400 times. Insisting that “a branch is nothing but a ref” is doing people a disservice, because they are going to have to unlearn that in order to understand the documentation.

Some unusually dogmatic people might still argue that a branch is nothing but a ref. “All those people who say those things are wrong,” they might say, “even the Git documentation is wrong,” ignoring the fact that they also say those things. No, sorry, that is not the way language works. If someone claims that a true shoe is really a Javanese dish of fried rice and fish cake, and that anyone who talks about putting shoes on their feet is confused or misguided, well, that person is just being silly.

The reason people say this, the source of the disconnection, is that the Git software doesn't have any formal representation of branches. Conceptually, the branch is there; the git commands just don't understand it. This is the most important mismatch between the conceptual model and what the Git software actually does.

Usually when a software model doesn't quite match its domain, we recognize that it's the software that is deficient. We say “the software doesn't represent that concept well” or “the way the software deals with that is kind of a hack”. We have a special technical term for it: it's a “leaky abstraction”. A “leaky abstraction” is when you ought to be able to ignore the underlying implementation, but the implementation doesn't reflect the model well enough, so you have to think about it more than you would like to.

When there's a leaky abstraction we don't normally try to pretend that the software's deficient model is actually correct, and that everyone in the world is confused. So why not just admit what's going on here? We all think about branches and talk about branches, but Git has a leaky abstraction for branches and doesn't handle branches very well. That's all, nothing unusual. Sometimes software isn't perfect.

When the Git software needs to deal with branches, it has to finesse the issue somehow. For some commands, hardly any finesse is required. When you do git log dev to get the history of the dev branch, Git starts at the commit named dev and then works its way back, parent by parent, to all the ancestor commits. For history logs, that's exactly what you want! But Git never has to think of the branch as a single entity; it just thinks of one commit at a time.

When you do git-merge, you might think you're merging two branches, but again Git can finesse the issue. Git has to look at a little bit of history to figure out a merge base, but after that it's not merging two branches at all, it's merging two sets of changes.

In other cases Git uses a ref to indicate the end point of the branch (called the ‘tip’), and sorta infers the start point from context. For example, when you push a branch, you give the software a ref to indicate the end point of the branch, and it infers the start point: the first commit that the remote doesn't have already. When you rebase a branch, you give the software a ref to indicate the end point of the branch, and the software infers the start point, which is the merge-base of the end point and the upstream commit you're rebasing onto. Sometimes this inference goes awry and the software tries to rebase way more than you thought it would: Git's idea of the branch you're rebasing isn't what you expected. That doesn't mean it's right and you're wrong; it's just a miscommunication.
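Here is a toy model of that inference, sketched in Python. This is not how Git is implemented; the five-commit history and the names are invented. It only shows the idea of a ref plus an inferred start: the commits reachable from the tip that are not reachable from the upstream, which is roughly the set that git log main..topic lists.

```python
# Each commit maps to its list of parents. An invented five-commit history:
#
#   A --- B --- C      (main)
#          \
#           D --- E    (topic)
parents = {
    "A": [],
    "B": ["A"],
    "C": ["B"],
    "D": ["B"],
    "E": ["D"],
}
refs = {"main": "C", "topic": "E"}


def ancestors(commit):
    """All commits reachable by following parents, including commit itself."""
    seen = set()
    stack = [commit]
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(parents[current])
    return seen


def inferred_branch(tip_ref, upstream_ref):
    """Commits reachable from the tip but not from the upstream."""
    return ancestors(refs[tip_ref]) - ancestors(refs[upstream_ref])


print(sorted(ancestors(refs["topic"])))          # what a history walk from the tip visits
print(sorted(inferred_branch("topic", "main")))  # what we would call "the topic branch"
```

Notice that the answer depends on the second argument. If the upstream ref pointed at A instead of C, the inferred branch would suddenly include B as well, which is the “way more than you thought” situation.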

And sometimes the mismatch isn't well-disguised. If I'm looking at some commit that was on a branch that was merged to master long ago, what branch was that exactly? There's no way to know, if the ref was deleted. (You can leave a note in the commit message, but that is not conceptually different from leaving a post-it on your monitor.) The best I can do is to start at the current master, work my way back in history until I find the merge point, then study the other commits that are on the same topic branch to try to figure out what was going on. What if I merged some topic branch into master last week, other work landed after that, and now I want to un-merge the topic? Sorry, Git doesn't do that. And why not? Because the software doesn't always understand branches in the way we might like. Not because the question doesn't make sense, just because the software doesn't always do what we want.

So yeah, the software isn't as good as we might like. What software is? But to pretend that the software is right, and that all the defects are actually benefits, is a little crazy. It's true that Git implements branches as refs, plus also a nebulous implicit part that varies from command to command. But that's an unfortunate implementation detail, not something we should be committed to.

[ Addendum 20230228: Several people have reminded me that the suggestions of the next-to-last paragraph are possible in some other VCSes, such as Mercurial. I meant to mention this, but forgot. Thanks for the reminder. ]

[Other articles in category /prog/git] permanent link

Sun, 04 Dec 2022

Software horror show: SAP Concur

This complaint is a little stale, but maybe it will still be interesting. A while back I was traveling to California on business several times a year, and the company I worked for required that I use SAP Concur expense management software to submit receipts for reimbursement.

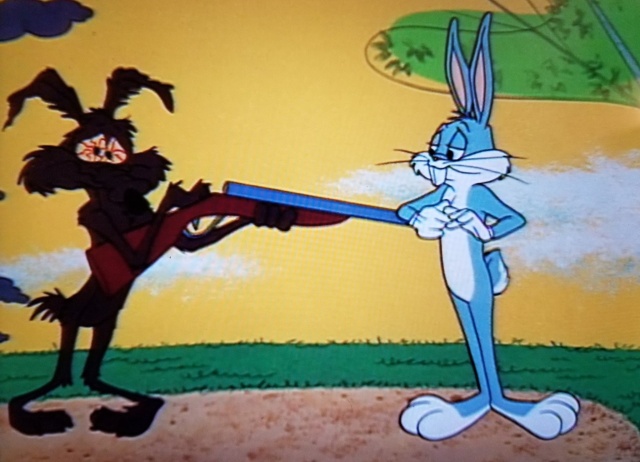

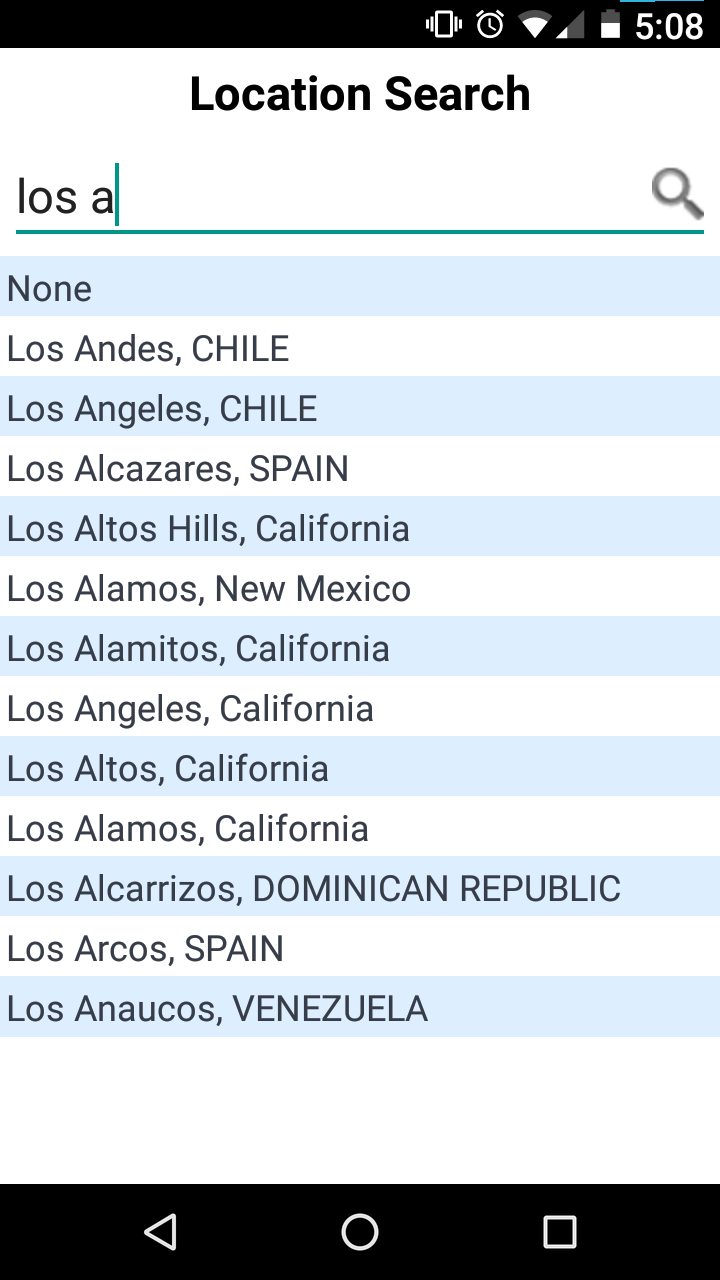

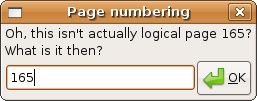

At one time I would have had many, many complaints about Concur. But today I will make only one. Here I am trying to explain to the Concur phone app where my expense occurred; maybe it was a cab ride from the airport or something.

I had to interact with this control every time there was another expense to report, so this is part of the app's core functionality.

There are a lot of good choices about how to order this list. The best ones require some work. The app might use the phone's location feature to figure out where it is and make an educated guess about how to order the place names. (“I'm in California, so I'll put those first.”) It could keep a count of how often this user has chosen each location before, and put most commonly chosen ones first. It could store a list of the locations the user has selected before and put the previously-selected ones before the ones that had never been selected. It could have asked, when the expense report was first created, if there was an associated location, say “California”, and then used that to put California places first, then United States places, then the rest. It could have a hardwired list of the importance of each place (or some proxy for that, like population) and put the most important places at the top.
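None of these is hard. As a rough illustration, here is a Python sketch of the “count how often the user chose each location” option, falling back to alphabetical order. The function name, the place names, and the counts are all made up; I have no idea what Concur's code looks like.

```python
from collections import Counter


def order_places(matches, selection_counts):
    """Most frequently chosen places first; everything else alphabetically."""
    return sorted(
        matches,
        key=lambda place: (-selection_counts[place], place.casefold()),
    )


# Hypothetical history: how often this user picked each place before.
history = Counter({"Los Angeles, CA": 5, "San Jose, CA": 2})

matches = ["Los Alamos, NM", "San Jose, CA", "Los Alamos, CA", "Los Angeles, CA"]
for place in order_places(matches, history):
    print(place)
```

A Counter reports zero for places that were never chosen, so those all tie and drop through to the alphabetical part of the key. With an empty history the whole thing degrades to a plain alphabetized list, which would already be an improvement.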

The actual authors of SAP Concur's phone app did none of these things. I understand. Budgets are small, deadlines are tight, product managers can be pigheaded. Sometimes the programmer doesn't have the resources to do the best solution.

But this list isn't even alphabetized.

There are two places named Los Alamos; they are not adjacent. There are two places in Spain; they are also not adjacent. This is inexcusable. There is no resource constraint that is so stringent that it would prevent the programmers from replacing

displaySelectionList(matches)

with

displaySelectionList(matches.sorted())

They just didn't.

And then whoever reviewed the code, if there was a code review, didn't say “hey, why didn't you use displaySortedSelectionList here?”

And then the product manager didn't point at the screen and say “wouldn't it be better to alphabetize these?”

And the UX person, if there was one, didn't raise any red flag, or if they did nothing was done.

I don't know what Concur's software development and release process is like, but somehow it had a complete top-to-bottom failure of quality control and let this shit out the door.

I would love to know how this happened. I said a while back:

Assume that bad technical decisions are made rationally, for reasons that are not apparent.

I think this might be a useful counterexample. And if it isn't, if the individual decision-makers all made choices that were locally rational, it might be an instructive example on how an organization can be so dysfunctional and so filled with perverse incentives that it produces a stack of separately rational decisions that somehow add up to a failure to alphabetize a pick list.

Addendum: A possible explanation

Dennis Felsing, a former employee of SAP working on their HANA database, has suggested how this might have come about. Suppose that the app originally used a database that produced the results already sorted, so that no sorting in the client was necessary, or at least any omitted sorting wouldn't have been noticed. Then later, the backend database was changed or upgraded to one that didn't have the autosorting feature. (This might have happened when Concur was acquired by SAP, if SAP insisted on converting the app to use HANA instead of whatever it had been using.)

This change could have broken many similar picklists in the same way. Perhaps there was a large and complex project to replace the database backend, and the unsorted picklists were discovered relatively late and were among the less severe problems that had to be overcome. I said “there is no resource constraint that is so stringent that it would prevent the programmers from (sorting the list)”. But if fifty picklists broke all at the same time for the same reason? And you weren't sure where they all were in the code? At the tail end of a large, difficult project? It might have made good sense to put off the minor problems like unsorted picklists for a future development cycle. This seems quite plausible, and if it's true, then this is not a counterexample to “bad technical decisions are made rationally for reasons that are not apparent”. (I should add, though, that the sorting issue was not fixed in the next few years.)

In the earlier article I said “until I got the correct explanation, the only explanation I could think of was unlimited incompetence.” That happened this time also! I could not imagine a plausible explanation, but M. Felsing provided one that was so plausible I could imagine making the decision the same way myself. I wish I were better at thinking of this kind of explanation.

[Other articles in category /prog] permanent link

Fri, 04 Nov 2022

A map of Haskell's numeric types

I keep getting lost in the maze of Haskell's numeric types. Here's the map I drew to help myself out. (I think there might have been something like this in the original Haskell 1998 report.)

Ovals are typeclasses. Rectangles are types. Black mostly-straight arrows show instance relationships. Most of the defined functions have straightforward types like !!\alpha\to\alpha!! or !!\alpha\to\alpha\to\alpha!! or !!\alpha\to\alpha\to\text{Bool}!!. The few exceptions are shown by wiggly colored arrows.

Basic plan

After I had meditated for a while on this picture I began to understand the underlying organization. All numbers support !!=!! and !!\neq!!. And there are three important properties numbers might additionally have:

- Ord: ordered; supports !!\lt\leqslant\geqslant\gt!! etc.

- Fractional: supports division

- Enum: supports ‘pred’ and ‘succ’

Integral types are both Ord and Enum, but they are not Fractional because integers aren't closed under division.

Floating-point and rational types are Ord and Fractional but not Enum because there's no notion of the ‘next’ or ‘previous’ rational number.

Complex numbers are numbers but not Ord because they don't admit a total ordering. That's why Num plus Ord is called Real: it's ‘real’ as contrasted with ‘complex’.

More stuff

That's the basic scheme. There are some less-important elaborations:

Real plus Fractional is called RealFrac.

Fractional numbers can be represented as exact rationals or as floating point. In the latter case they are instances of Floating. The Floating types are required to support a large family of functions like !!\log, \sin,!! and π.

You can construct a Ratio a type for any a; that's a fraction whose numerators and denominators are values of type a. If you do this, the Ratio a that you get is a Fractional, even if a wasn't one. In particular, Ratio Integer is called Rational and is (of course) Fractional.

Stuff that don't work so good

Complex Int and Complex Rational look like they should exist, but they don't really. Complex a is only an instance of Num when a is floating-point. This means you can't even do 3 :: Complex Int, because there's no definition of fromInteger. You can construct values of type Complex Int, but you can't do anything with them, not even addition and subtraction. I think the root of the problem is that Num requires an abs function, and for complex numbers you need the sqrt function to be able to compute abs.

Complex Int could in principle support most of the functions required by Integral (such as div and mod) but Haskell forecloses this too because its definition of Integral requires Real as a prerequisite.

You are only allowed to construct Ratio a if a is integral. Mathematically this is a bit odd. There is a generic construction, called the field of quotients, which takes a ring and turns it into a field, essentially by considering all the formal fractions !!\frac ab!! (where !!b\ne 0!!), and with !!\frac ab!! considered equivalent to !!\frac{a'}{b'}!! exactly when !!ab' = a'b!!. If you do this with the integers, you get the rational numbers; if you do it with a ring of polynomials, you get a field of rational functions, and so on. If you do it to a ring that's already a field, it still works, and the field you get is trivially isomorphic to the original one. But Haskell doesn't allow it.

I had another couple of pages written about yet more ways in which the numeric class hierarchy is a mess (the draft title of this article was "Haskell's numbers are a hot mess") but I'm going to cut the scroll here and leave the hot mess for another time.

[ Addendum: Updated SVG and PNG to version 1.1. ]

[Other articles in category /prog/haskell] permanent link

Sun, 23 Oct 2022

This search algorithm is usually called "group testing"

Yesterday I described an algorithm that locates the ‘bad’ items among a set of items, and asked:

does this technique have a name? If I wanted to tell someone to use it, what would I say?

The answer is: this is group testing, or, more exactly, the “binary splitting” version of adaptive group testing, in which we are allowed to adjust the testing strategy as we go along. There is also non-adaptive group testing in which we come up with a plan ahead of time for which tests we will perform.

I felt kinda dumb when this was pointed out, because:

- A typical application (and indeed the historically first application) is for disease testing

- My previous job was working for a company doing high-throughput disease testing

- I found out about the job when one of the senior engineers there happened to overhear me musing about group testing

- Not only did I not remember any of this when I wrote the blog post, I even forgot about the disease testing application while I was writing the post!

Oh well. Thanks to everyone who wrote in to help me! Let's see, that's Drew Samnick, Shreevatsa R., Matt Post, Matt Heilige, Eric Harley, Renan Gross, and David Eppstein. (Apologies if I left out your name, it was entirely unintentional.)

I also asked:

Is the history of this algorithm lost in time, or do we know who first invented it, or at least wrote it down?

Wikipedia is quite confident about this:

The concept of group testing was first introduced by Robert Dorfman in 1943 in a short report published in the Notes section of Annals of Mathematical Statistics. Dorfman's report – as with all the early work on group testing – focused on the probabilistic problem, and aimed to use the novel idea of group testing to reduce the expected number of tests needed to weed out all syphilitic men in a given pool of soldiers.

Eric Harley said:

[It] doesn't date back as far as you might think, which then makes me wonder about the history of those coin weighing puzzles.

Yeah, now I wonder too. Surely there must be some coin-weighing puzzles in Sam Loyd or H.E. Dudeney that predate Dorfman?

Dorfman's original algorithm is not the one I described. He divides the items into fixed-size groups of n each, and if a group of n contains a bad item, he tests the n items individually. My proposal was to always split the group in half. Dorfman's two-pass approach is much more practical than mine for disease testing, where the test material is a body fluid sample that may involve a blood draw or sticking a swab in someone's nose, where the amount of material may be limited, and where each test offers a chance to contaminate the sample.
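To make the difference concrete, here is a Python sketch of both strategies with a test counter. It is an illustration under simplifying assumptions, not a faithful copy of Dorfman's procedure or of my earlier post: the test is a perfect oracle, and the halving version doesn't bother to skip tests whose result could be inferred.

```python
def binary_splitting(items, contains_bad):
    """Find the bad items by repeatedly halving; return (bad_items, tests_used)."""
    tests = 0
    bad = []

    def search(group):
        nonlocal tests
        tests += 1
        if not contains_bad(group):
            return
        if len(group) == 1:
            bad.append(group[0])
            return
        middle = len(group) // 2
        search(group[:middle])
        search(group[middle:])

    items = list(items)
    if items:
        search(items)
    return bad, tests


def two_pass(items, contains_bad, group_size):
    """Test fixed-size groups, then every item of each group that tested bad."""
    tests = 0
    bad = []
    items = list(items)
    for start in range(0, len(items), group_size):
        group = items[start:start + group_size]
        tests += 1
        if contains_bad(group):
            for item in group:
                tests += 1
                if contains_bad([item]):
                    bad.append(item)
    return bad, tests


# A made-up scenario: 64 samples, of which only number 37 is bad.
truly_bad = {37}


def contains_bad(group):
    return any(item in truly_bad for item in group)


print(binary_splitting(range(64), contains_bad))
print(two_pass(range(64), contains_bad, group_size=8))
```

In this scenario the halving version finds the bad sample in 13 tests and the two-pass version, with groups of 8, in 16. But the halving version tests the bad sample's material seven separate times, once at every level, while the two-pass version touches each sample at most twice. That is the practical point when every test uses up some of a limited sample.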

Wikipedia has an article about a more sophisticated version of the binary-splitting algorithm I described. The theory is really interesting, and there are many ingenious methods.

Thanks to everyone who wrote in. Also to everyone who did not. You're all winners.

[ Addendum 20221108: January First-of-May has brought to my attention section 5c of David Singmaster's Sources in Recreational Mathematics, which has notes on the known history of coin-weighing puzzles. To my surprise, there is nothing there from Dudeney or Loyd; the earliest references are from the American Mathematical Monthly in 1945. I am sure that many people would be interested in further news about this. ]

[Other articles in category /prog] permanent link

Fri, 21 Oct 2022

More notes on deriving Applicative from Monad

A year or two ago I wrote about what you do if you already have a Monad and you need to define an Applicative instance for it. This comes up in converting old code that predates the incorporation of Applicative into the language: it has these monad instance declarations, and newer compilers will refuse to compile them because you are no longer allowed to define a Monad instance for something that is not an Applicative. I complained that the compiler should be able to infer this automatically, but it does not.

My current job involves Haskell programming and I ran into this issue again in August, because I understood monads but at that point I was still shaky about applicatives. This is a rough edit of the notes I made at the time about how to define the Applicative instance if you already understand the Monad instance.

pure is easy: it is identical to return.

Now suppose we have >>=: how can we get <*>? As I eventually figured out last time this came up, there is a simple solution:

    fc <*> vc = do
      f <- fc
      v <- vc
      return $ f v

or equivalently:

fc <*> vc = fc >>= \f -> vc >>= \v -> return $ f v

And in fact there is at least one other way to define it that is just as good:

    fc <*> vc = do
      v <- vc
      f <- fc
      return $ f v

(Control.Applicative.Backwards provides a Backwards constructor that reverses the order of the effects in <*>.)

I had run into this previously and written a blog post about it. At that time I had wanted the second <*>, not the first.

The issue came up again in August because, as an exercise, I was trying to

implement the StateT state transformer monad constructor from scratch. (I found

this very educational. I had written State before, but StateT was

an order of magnitude harder.)

I had written this weird piece of code:

instance Applicative f => Applicative (StateT s f) where
    pure a = StateT $ \s -> pure (s, a)
    stf <*> stv = StateT $
        \s -> let apf = run stf s
                  apv = run stv s
              in liftA2 comb apf apv where
            comb = \(s1, f) (s2, v) -> (s1, f v)  -- s1? s2?

It may not be obvious why this is weird. Normally the definition of

<*> would look something like this:

stf <*> stv = StateT $
    \s0 -> let (s1, f) = run stf s0
               (s2, v) = run stv s1
           in (s2, f v)

This runs stf on the initial state, yielding f and a new state

s1, then runs stv on the new state, yielding v and a final state

s2. The end result is f v and the final state s2.

Or one could just as well run the two state-changing computations in the opposite order:

stf <*> stv = StateT $
    \s0 -> let (s1, v) = run stv s0
               (s2, f) = run stf s1
           in (s2, f v)

which lets stv mutate the state first and gives stf the result

from that.
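The snippets above pattern-match directly on the result of `run`, which glosses over the underlying type. Here is a minimal from-scratch sketch of the first (left-to-right) version that does compile. It is an illustration under stated assumptions, not the code from my exercise: it keeps this article's `(state, value)` tuple order, and it asks for `Monad m` on the underlying type, because passing `s1` into the second computation needs a bind. The `next` counter is a made-up example.

```haskell
-- A from-scratch StateT using this article's (state, value) tuple order.
newtype StateT s m a = StateT { run :: s -> m (s, a) }

instance Functor m => Functor (StateT s m) where
  fmap g st = StateT $ \s -> fmap (\(s', a) -> (s', g a)) (run st s)

-- Threading s1 into the second computation needs >>= on the underlying
-- type, so the constraint here is Monad m rather than Applicative m.
instance Monad m => Applicative (StateT s m) where
  pure a = StateT $ \s -> pure (s, a)
  stf <*> stv = StateT $ \s0 -> do
    (s1, f) <- run stf s0   -- stf sees the initial state
    (s2, v) <- run stv s1   -- stv sees the state left by stf
    pure (s2, f v)

-- Hypothetical example: return the counter and increment it.
next :: StateT Int Maybe Int
next = StateT $ \s -> Just (s + 1, s)

example :: Maybe (Int, (Int, Int))
example = run ((,) <$> next <*> next) 10   -- Just (12,(10,11))
```

Swapping the two bind lines (and the states they receive) gives the right-to-left version.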

I had been unsure of whether I wanted to run stf or stv first. I

was familiar with monads, in which the question does not come up. In

v >>= f you must run v first because you will pass its value

to the function f. In an Applicative there is no such dependency, so I

wasn't sure what I needed to do. I tried to avoid the question by

running the two computations ⸢simultaneously⸣ on the initial state

s0:

stf <*> stv = StateT $
    \s0 -> let (sf, f) = run stf s0
               (sv, v) = run stv s0
           in (sf, f v)

Trying to sneak around the problem, I was caught immediately, like a

small child hoping to exit a room unseen but only getting to the

doorway. I could run the computations ⸢simultaneously⸣ but on the

very next line I still had to say what the final state was in the end:

the one resulting from computation stf or the one resulting from

computation stv. And whichever I chose, I would be discarding the

effect of the other computation.

My co-worker Brandon Chinn

opined that this must

violate one of the

applicative functor laws.

I wasn't sure, but he was correct.

This implementation of <*> violates the applicative “interchange”

law that requires:

f <*> pure x == pure ($ x) <*> f

Suppose f updates the state from !!s_0!! to !!s_f!!. pure x and

pure ($ x), being pure, leave it unchanged.

My proposed implementation of <*> above runs the two

computations and then updates the state to whatever was the result of

the left-hand operand, sf, discarding any updates performed by the right-hand

one. In the case of f <*> pure x the update from f is accepted

and the final state is !!s_f!!.

But in the case of pure ($ x) <*> f the left-hand operand doesn't do

an update, and the update from f is discarded, so the final state is

!!s_0!!, not !!s_f!!. The interchange law is violated by this implementation.

(Of course we can't rescue this by yielding (sv, f v) in place of

(sf, f v); the problem is the same. The final state is now the

state resulting from the right-hand operand alone, !!s_0!! on the

left side of the law and !!s_f!! on the right-hand side.)
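The violation can be checked concretely. The following is a small sketch with a plain `State` type rather than the transformer, to keep it short. All the names (`pureS`, `apBad`, `apGood`, `tick`) are made up for this illustration; `apBad` is the "simultaneous" definition and `apGood` is the usual left-to-right one.

```haskell
-- A plain state type in this article's (state, value) order.
newtype State s a = State { run :: s -> (s, a) }

pureS :: a -> State s a
pureS a = State $ \s -> (s, a)

-- The "simultaneous" <*>: both operands see s0, and only the
-- left operand's final state survives.
apBad :: State s (a -> b) -> State s a -> State s b
apBad stf stv = State $ \s0 ->
  let (sf, f)  = run stf s0
      (_sv, v) = run stv s0
  in (sf, f v)

-- The usual left-to-right <*>, for comparison.
apGood :: State s (a -> b) -> State s a -> State s b
apGood stf stv = State $ \s0 ->
  let (s1, f) = run stf s0
      (s2, v) = run stv s1
  in (s2, f v)

-- A computation that changes the state: bump the counter, yield (* 2).
tick :: State Int (Int -> Int)
tick = State $ \s -> (s + 1, (* 2))

-- Both sides of the interchange law, starting from state 0.
badLeft, badRight, goodLeft, goodRight :: (Int, Int)
badLeft   = run (tick `apBad` pureS 5) 0         -- (1,10)
badRight  = run (pureS ($ 5) `apBad` tick) 0     -- (0,10)
goodLeft  = run (tick `apGood` pureS 5) 0        -- (1,10)
goodRight = run (pureS ($ 5) `apGood` tick) 0    -- (1,10)
```

With `apBad` the two sides agree on the value but end in different states, which is exactly the failure described above.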

Stack Overflow discussion

I worked for a while to compose a question about this for Stack Overflow, but it has been discussed there at length, so I didn't need to post anything:

- <**> is a variant of <*> with the arguments reversed. What does "reversed" mean?

- How arbitrary is the "ap" implementation for monads?

- To what extent are Applicative/Monad instances uniquely determined?

That first thread contains this enlightening comment:

Functors are generalized loops [ f x | x <- xs];

Applicatives are generalized nested loops [ (x,y) | x <- xs, y <- ys];

Monads are generalized dynamically created nested loops [ (x,y) | x <- xs, y <- k x].

That middle dictum provides another way to understand why my idea of running the effects ⸢simultaneously⸣ was doomed: one of the loops has to be innermost.
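For lists, the three dicta can be checked directly against `fmap`, `liftA2`, and `>>=`. This is only a sketch for the list instance; the sample values `xs`, `ys`, and `k` are invented for the illustration.

```haskell
import Control.Applicative (liftA2)

xs :: [Int]
xs = [1, 2, 3]

ys :: String
ys = "ab"

-- The second loop's range depends on the first loop's variable.
k :: Int -> [Int]
k x = [1 .. x]

-- Functor: one loop.
functorLoop :: Bool
functorLoop = [ x * 10 | x <- xs ] == fmap (* 10) xs

-- Applicative: nested loops whose ranges are fixed in advance.
applicativeLoop :: Bool
applicativeLoop = [ (x, y) | x <- xs, y <- ys ] == liftA2 (,) xs ys

-- Monad: the inner range is computed from the outer variable.
monadLoop :: Bool
monadLoop = [ (x, y) | x <- xs, y <- k x ]
         == (xs >>= \x -> k x >>= \y -> return (x, y))
```

All three comparisons evaluate to `True`. In the applicative case the `x` loop is the outer one, which is the list instance's choice of which effect goes first.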

The second thread above (“How arbitrary is the ap implementation for

monads?”) is close to what I was aiming for in my question, and

includes a wonderful answer by Conor McBride (one of the inventors of

Applicative). Among other things, McBride points out that there are at least

four reasonable Applicative instances consistent with the monad

definition for nonempty lists.

(There is a hint in his answer here.)

Another answer there sketches a proof that if the applicative

“interchange” law holds for some applicative functor f, it holds for

the corresponding functor which is the same except that its <*>

sequences effects in the reverse order.

[Other articles in category /prog/haskell] permanent link

Wed, 19 Oct 2022

What's this search algorithm usually called?

Consider this problem:

Input: A set !!S!! of items, of which an unknown subset, !!S_{\text{bad}}!!, are ‘bad’, and a function, !!\mathcal B!!, which takes a subset !!S'!! of the items and returns true if !!S'!! contains at least one bad item:

$$ \mathcal B(S') = \begin{cases} \mathbf{false}, & \text{if $S'\cap S_{\text{bad}} = \emptyset$} \\ \mathbf{true}, & \text{otherwise} \\ \end{cases} $$

Output: The set !!S_{\text{bad}}!! of all the bad items.

Think of a boxful of electronic components, some of which are defective. You can test any subset of components simultaneously, and if the test succeeds you know that each of those components is good. But if the test fails all you know is that at least one of the components was bad, not how many or which ones.

The obvious method is simply to test the components one at a time:

$$ S_{\text{bad}} = \{ x\in S \mid \mathcal B(\{x\}) \} $$

This requires exactly !!|S|!! calls to !!\mathcal B!!.

But if we expect there to be relatively few bad items, we may be able to do better:

- Call !!\mathcal B(S)!!. That is, test all the components at once. If none is bad, we are done.

- Otherwise, partition !!S!! into (roughly-equal) halves !!S_1!! and !!S_2!!, and recurse.

In the worst case this takes (nearly) twice as many calls as just calling !!\mathcal B!! on the singletons. But if !!k!! items are bad it requires only !!O(k\log |S|)!! calls to !!\mathcal B!!, a big win if !!k!! is small compared with !!|S|!!.
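The two steps above can be sketched in a few lines of Haskell. The name `findBad` is made up for this illustration, and its first argument plays the role of !!\mathcal B!!: it reports whether a group contains at least one bad item.

```haskell
-- Hypothetical name: findBad. The first argument plays the role of B.
findBad :: ([a] -> Bool) -> [a] -> [a]
findBad containsBad items
  | null items              = []
  | not (containsBad items) = []   -- whole group is clean: one call settles it
  | [x] <- items            = [x]  -- a failing singleton is a bad item
  | otherwise               = findBad containsBad left ++ findBad containsBad right
  where
    -- Split into (roughly) equal halves.
    (left, right) = splitAt (length items `div` 2) items

-- Example: items 1..20, of which 3 and 17 are bad.
exampleBad :: [Int]
exampleBad = findBad (any (`elem` [3, 17])) [1 .. 20]   -- [3,17]
```

This sketch always tests both halves. It makes no attempt at the obvious saving of skipping the test on the second half when the first half turned out to be clean.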

My question is: does this technique have a name? If I wanted to tell someone to use it, what would I say?

It's tempting to say "binary search" but it's not very much like binary search. Binary search finds a target value in a sorted array. If !!S!! were an array sorted by badness we could use something like binary search to locate the first bad item, which would solve this problem. But !!S!! is not a sorted array, and we are not really looking for a target value.

Is the history of this algorithm lost in time, or do we know who first invented it, or at least wrote it down? I think it sometimes pops up in connection with coin-weighing puzzles.

[ Addendum 20221023: this is the pure binary-splitting variation of adaptive group testing. I wrote a followup. ]

[Other articles in category /prog] permanent link

Tue, 18 Oct 2022

In Perl I would often write a generic tree search function:

# Perl

sub search {
    my ($is_good, $children_of, $root) = @_;
    my @queue = ($root);

    return sub {
        while (1) {
            return unless @queue;
            my $node = shift @queue;
            push @queue, $children_of->($node);
            return $node if $is_good->($node);
        }
    }
}

For example, see Higher-Order Perl, section 5.3.

To use this, we provide two callback functions. $is_good checks

whether the current item has the properties we were searching for.

$children_of takes an item and returns its children in the tree.

The search function returns an iterator object, which, each time it is

called, returns a single item satisfying the $is_good predicate, or

undef if none remains. For example, this searches the space of all

strings over abc for palindromic strings:

# Perl

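my $it = search(sub { $_[0] eq reverse $_[0] },
                sub { return map "$_[0]$_" => ("a", "b", "c") },
                "");

# defined(), because the first palindrome found is the empty string,
# which is false in Perl and would otherwise end the loop at once
while (defined(my $pal = $it->())) {
    print $pal, "\n";
}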

Many variations of this are possible. For example, replacing push

with unshift changes the search from breadth-first to depth-first.

Higher-Order Perl shows how to modify it to do heuristically-guided search.

I wanted to do this in Haskell, and my first try didn’t work at all:

-- Haskell

search1 :: (n -> Bool) -> (n -> [n]) -> n -> [n]
search1 isGood childrenOf root =
  s [root]
    where
      s nodes = do
        n <- nodes
        filter isGood (s $ childrenOf n)

There are two problems with this. First, the filter is in the wrong

place. It says that the search should proceed downward only from the

good nodes, and stop when it reaches a not-good node.

To see what's wrong with this, consider a search for palindromes. The

string ab isn't a palindrome, so the search would be cut off

at ab, and never proceed downward to find aba or abccbccba.

It should be up to childrenOf to decide how to

continue the search. If the search should be pruned at a particular

node, childrenOf should return an empty list of children. The

isGood callback has no role here.

But the larger problem is that in most cases this function will compute

forever without producing any output at all, because the call to s

recurses before it returns even one list element.

Here’s the palindrome example in Haskell:

palindromes = search isPalindrome extend ""
  where
    isPalindrome s = (s == reverse s)
    extend s = map (s ++) ["a", "b", "c"]

This yields a big fat !!\huge \bot!!: it does nothing, until memory is exhausted, and then it crashes.

My next attempt looked something like this:

search2 :: (n -> Bool) -> (n -> [n]) -> n -> [n]
search2 isGood childrenOf root = filter isGood $ s [root]
  where
    s nodes = do
      n <- nodes
      n : (s $ childrenOf n)

The filter has moved outward, into a single final pass over the

generated tree. And s now returns a list that at least has the node

n on the front, before it recurses. If one doesn’t look at the nodes

after n, the program doesn’t make the recursive call.

The palindromes program still isn’t right though. take 20

palindromes produces:

["","a","aa","aaa","aaaa","aaaaa","aaaaaa","aaaaaaa","aaaaaaaa",

"aaaaaaaaa", "aaaaaaaaaa","aaaaaaaaaaa","aaaaaaaaaaaa",

"aaaaaaaaaaaaa","aaaaaaaaaaaaaa", "aaaaaaaaaaaaaaa",

"aaaaaaaaaaaaaaaa","aaaaaaaaaaaaaaaaa", "aaaaaaaaaaaaaaaaaa",

"aaaaaaaaaaaaaaaaaaa"]

It’s doing a depth-first search, charging down the leftmost branch to

infinity. That’s because the list returned from s (a:b:rest) starts

with a, then has the descendants of a, before continuing with b

and b's descendants. So we get all the palindromes beginning with

"a" before any of the ones beginning with "b", and similarly all

the ones beginning with "aa" before any of the ones beginning with

"ab", and so on.

I needed to convert the search to breadth-first, which is memory-expensive but at least visits all the nodes, even when the tree is infinite:

search3 :: (n -> Bool) -> (n -> [n]) -> n -> [n]
search3 isGood childrenOf root = filter isGood $ s [root]
  where
    s nodes = nodes ++ (s $ concat (map childrenOf nodes))

This worked. I got a little lucky here, in that I had already had the

idea to make s :: [n] -> [n] rather than the more obvious s :: n ->

[n]. I had done that because I wanted to do the n <- nodes thing,

which is no longer present in this version. But it’s just what we need, because we

want s to return a list that has all the nodes at the current

level (nodes) before it recurses to compute the nodes farther down.
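Put together as one self-contained file, the breadth-first version and the palindrome example look like this. It is a sketch rather than a transcript of my code: the `s [] = []` clause is an addition that is not in the version above (without it, `s` keeps recursing on an empty level once a finite tree has been exhausted), and `firstFew` is a made-up name for the sample output.

```haskell
search3 :: (n -> Bool) -> (n -> [n]) -> n -> [n]
search3 isGood childrenOf root = filter isGood $ s [root]
  where
    -- Added for this sketch: stop when a finite tree runs out of nodes.
    s []    = []
    -- Emit the whole current level, then recurse on the next level.
    s nodes = nodes ++ s (concatMap childrenOf nodes)

palindromes :: [String]
palindromes = search3 isPalindrome extend ""
  where
    isPalindrome str = str == reverse str
    extend str = map (str ++) ["a", "b", "c"]

-- Laziness means only as much of the infinite tree is built as is needed.
firstFew :: [String]
firstFew = take 7 palindromes   -- ["","a","b","c","aa","bb","cc"]
```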

Now take 20 palindromes produces the answer I wanted:

["","a","b","c","aa","bb","cc","aaa","aba","aca","bab","bbb","bcb",