Mark Dominus (陶敏修)

mjd@pobox.com

Archive:

| 2026: | J |

| 2025: | JFMAMJ |

| JASOND | |

| 2024: | JFMAMJ |

| JASOND | |

| 2023: | JFMAMJ |

| JASOND | |

| 2022: | JFMAMJ |

| JASOND | |

| 2021: | JFMAMJ |

| JASOND | |

| 2020: | JFMAMJ |

| JASOND | |

| 2019: | JFMAMJ |

| JASOND | |

| 2018: | JFMAMJ |

| JASOND | |

| 2017: | JFMAMJ |

| JASOND | |

| 2016: | JFMAMJ |

| JASOND | |

| 2015: | JFMAMJ |

| JASOND | |

| 2014: | JFMAMJ |

| JASOND | |

| 2013: | JFMAMJ |

| JASOND | |

| 2012: | JFMAMJ |

| JASOND | |

| 2011: | JFMAMJ |

| JASOND | |

| 2010: | JFMAMJ |

| JASOND | |

| 2009: | JFMAMJ |

| JASOND | |

| 2008: | JFMAMJ |

| JASOND | |

| 2007: | JFMAMJ |

| JASOND | |

| 2006: | JFMAMJ |

| JASOND | |

| 2005: | OND |

In this section:

Subtopics:

| Mathematics | 245 |

| Programming | 100 |

| Language | 95 |

| Miscellaneous | 75 |

| Book | 50 |

| Tech | 49 |

| Etymology | 35 |

| Haskell | 33 |

| Oops | 30 |

| Unix | 27 |

| Cosmic Call | 25 |

| Math SE | 25 |

| Law | 22 |

| Physics | 21 |

| Perl | 17 |

| Biology | 16 |

| Brain | 15 |

| Calendar | 15 |

| Food | 15 |

Comments disabled

Wed, 13 Sep 2023

Horizontal and vertical complexity

Note: The jumping-off place for this article is a conference talk which I did not attend. You should understand this article as rambling musings on related topics, not as a description of the talk or a response to it or a criticism of it or as a rebuttal of its ideas.

A co-worker came back from PyCon reporting on a talk called “Wrapping up the Cruft - Making Wrappers to Hide Complexity”. He said:

The talk was focussed on hiding complexity for educational purposes. … The speaker works for an educational organisation … and provided an example of some code for blinking lights on a single board machine. It was 100 lines long, you had to know about a bunch of complexity that required you to have some understanding of the hardware, then an example where it was the initialisation was wrapped up in an import and for the kids it was as simple as selecting a colour and which led to light up. And how much more readable the code was as a result.

The better we can hide how the sausage is made the more approachable and easier it is for those who build on it to be productive. I think it's good to be reminded of this lesson.

I was on fully board with this until the last bit, which gave me an uneasy feeling. Wrapping up code this way reduces horizontal complexity in that it makes the top level program shorter and quicker. But it increases vertical complexity because there are now more layers of function calling, more layers of interface to understand, and more hidden magic behavior. When something breaks, your worries aren't limited to understanding what is wrong with your code. You also have to wonder about what the library call is doing. Is the library correct? Are you calling it correctly? The difficulty of localizing the bug is larger, and when there is a problem it may be in some module that you can't see, and that you may not know exists.

Good interfaces successfuly hide most of this complexity, but even in the best instances the complexity has only been hidden, and it is all still there in the program. An uncharitable description would be that the complexity has been swept under the carpet. And this is the best case! Bad interfaces don't even succeed in hiding the complexity, which keeps leaking upward, like a spreading stain on that carpet, one that warns of something awful underneath.

Advice about how to write programs bangs the same drum over and over and over:

- Reduce complexity

- Do the simplest thing that could possibly work

- You ain't gonna need it

- Explicit is better than implicit

But here we have someone suggesting the opposite. We should be extremely wary.

There is always a tradeoff. Leaky abstractions can increase the vertical complexity by more than they decrease the horizontal complexity. Better-designed abstractions can achieve real wins.

It’s a hard, hard problem. That’s why they pay us the big bucks.

Ratchet effects

This is a passing thought that I didn't consider carefully enough to work into the main article.

A couple of years ago I wrote an article called Creeping featurism and the ratchet effect about how adding features to software, or adding more explanations to the manual, is subject to a “ratcheting force”. The benefit of the change is localized and easy to imagine:

You can imagine a confused person in your head, someone who happens to be confused in exactly the right way, and who is miraculously helped out by the presence of the right two sentences in the exact right place.

But the cost of the change is that the manual is now a tiny bit larger. It doesn't affect any specific person. But it imposes a tiny tax on everyone who uses the manual.

Similarly adding a feature to software has an obvious benefit, so there's pressure to add more features, and the costs are hidden, so there's less pressure in the opposite direction.

And similarly, adding code and interfaces and libraries to software has an obvious benefit: look how much smaller the top-level code has become! But the cost, that the software is 0.0002% more complex, is harder to see. And that cost increases imperceptibly, but compounds exponentially. So you keep moving in the same direction, constantly improving the software architecture, until one day you wake up and realize that it is unmaintainable. You are baffled. What could have gone wrong?

Kent Beck says, “design isn't free”.

Anecdote

The original article is in the context of a class for beginners where the kids just want to make the LEDs light up. If I understand the example correctly, in this context I would probably have made the same choice for the same reason.

But I kept thinking of an example where I made the opposite choice. I

taught an introduction to programming in C class about thirty years

ago. The previous curriculum had considered pointers an advanced topic

and tried to defer them to the middle of the semester. But the author

of the curriculum had had a big problem: you need pointers to deal with

scanf. What to do?

The solution chosen by the previous curriculum was to supply the students with a library of canned input functions like

int get_int(void); /* Read an integer from standard input */

These used scanf under the hood. (Under the carpet, one might say.)

But all the code with pointers was hidden.

I felt this was a bad move. Even had the library been a perfect abstraction (it wasn't) and completely bug-free (it wasn't) it would still have had a giant flaw: Every minute of time the students spent learning to use this library was a minute wasted on something that would never be of use and that had no intrinsic value. Every minute of time spent on this library was time that could have been spent learning to use pointers! People programming in C will inevitably have to understand pointers, and will never have to understand this library.

My co-worker from the first part of this article wrote:

The better we can hide how the sausage is made the more approachable and easier it is for those who build on it to be productive.

In some educational contexts, I think this is a good idea. But not if you are trying to teach people sausage-making!

[Other articles in category /prog] permanent link

Sun, 10 Sep 2023Last month Toph and I went on vacation to Juneau, Alaska. I walked up to look at the glacier, but mostly we just enjoyed the view and the cool weather. But there were some surprises.

One day we took a cab downtown, and our driver, Edwell John, asked where we were visiting from, as cab drivers do. We said we were from Philadelphia, and he told us he had visited Philadelphia himself.

“I was repatriating Native artifacts,” he said.

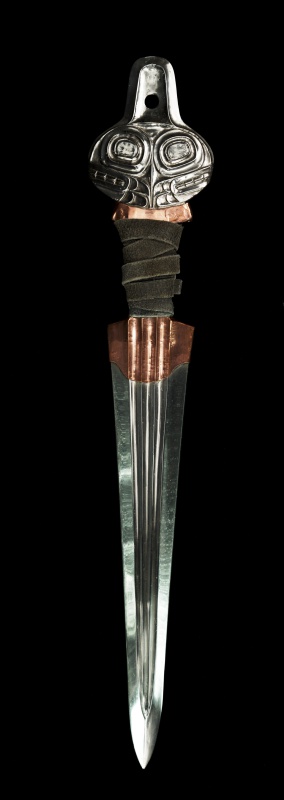

Specifically, he had gone to the University of Pennsyvania Museum, to take back the Killer Whale Dagger named Keet Gwalaa. This is a two foot long dagger that was forged by Tlingit people in the 18th century from meteorite steel.

This picture comes from the Penn Museum. (I think this isn't the actual dagger, but a reproduction they had made after M. John took back the original.)

This was very exciting! I asked “where is the dagger now?” expecting that it had been moved to a museum in Angoon or something.

“Oh, I have it,” he said.

I was amazed. “What, like on you?”

“No, at my house. I'm the clan leader of the Killer Whale clan.”

Then he took out his phone and showed us a photo of himself in his clan leader garb, carrying the dagger.

Here's an article about M. John visiting the Smithsonian to have them 3-D scan the Killer Whale hat. Then the Smithsonian had a replica hat made from the scan.

[Other articles in category /art] permanent link

Sat, 09 Sep 2023

My favorite luxurious office equipment is low-tech

This is about the stuff I have in my office that I could live without but wouldn't want to. Not stuff like “a good chair” because a good chair is not optional. And not stuff like “paper”. This is the stuff that you might not have thought about already.

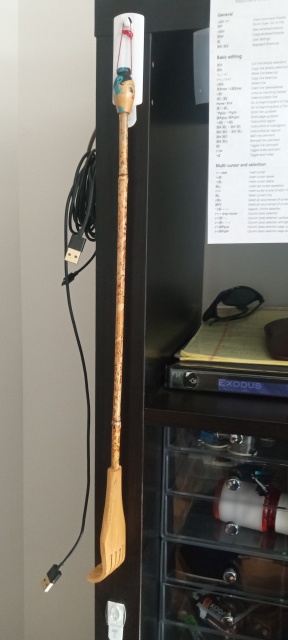

The back scratcher at right cost me about $1 and brings me joy every time I use it. My back is itchy, it is distracting me from work, aha, I just grab the back scratcher off the hook and the problem is solved in ten seconds. Not only is it a sensual pleasure, but also I get the satisfaction of a job done efficiently and effectively.

Computer programmers often need to be reminded that the cheap, simple, low-tech solution is often the best one. Perfection is achieved not when there is nothing more to add, but when there is nothing more to take away. I see this flawlessly minimal example of technology every time I walk into my office and it reminds me of the qualities I try to put into my software.

These back scratchers are available everywhere. If your town has a dollar store or an Asian grocery, take a look. I think the price has gone up to $2.

When I was traveling a lot for ZipRecruiter, I needed a laptop stand. (Using a laptop without a stand is bad for your neck.) I asked my co-workers for recommendations and a couple of them said that the Roost was nice. It did seem nice, but it cost $75. So I did Google search for “laptop stand like Roost but cheap” and this is what I found.

This is a Nexstand. The one in this picture is about ten years old. It has performed flawlessly. It has never failed. There has never been any moment when I said “ugh, this damn thing again, always causing problems.”

It folds up and deploys in seconds.

It weighs eight ounces. That's 225 grams.

It takes up the smallest possible amount of space in my luggage. Look at the picture at left. LOOK AT IT I SAY.

The laptop height is easily adjustable.

The Nexstand currently sells for $25–35. (The Roost is up to $90.)

This is another “there is nothing left to take away” item. It's perfect the way it is. This picture shows it quietly doing its job with no fuss, as it does every day.

This last item has changed my life. Not drastically, but significantly, and for the better.

This is a Vobaga electric mug warmer. You put your mug on it, and the coffee or tea or whatever else is in the mug stays hot, but not too hot to drink, indefinitely.

The button on the left turns the power on and off. The button on the right adjusts the temperature: blue for warm, purple for warmer, and red for hot. (The range is 104–149°F (40–65°C). I like red.) After you turn off the power, the temperature light blinks for a while to remind you not to put your hand on it.

That is all it does, it is not programmable, it is not ⸢smart⸣, it does not require configuration, it does not talk to the Internet, it does not make any sounds, it does not spy on me, it does not have a timer, it does do one thing and it does it well, and I never have to drink lukewarm coffee.

The power cord is the only flaw, because it plugs into wall power and someone might trip on it and spill your coffee, but it is a necessary flaw. You can buy a mug warmer that uses USB power. When I first looked into mug warmers I was puzzled. Surely, I thought, a USB connection does not deliver enough power to keep a mug of coffee warm? At the time, this was correct. USB 2 can deliver 5V at up to 0.5A, a total of 2.5 watts of power. That's only 0.59 calorie per second. Ridiculous. The Vobaga can deliver 20 watts. That is enough.

Vobaga makes this in several colors (not that anything is wrong with black) and it costs around $25–30. The hot round thing is 4 inches in diameter (10 cm) and neatly fits all my mugs, even the big ones. It does not want to go in the dishwasher but easily wipes clean with a damp cloth. I once spilled the coffee all over it but it worked just fine once it dried out because it is low tech.

It's just another one of those things that works, day in and day out, without my having to think about it, unless I feel like gloating about how happy it makes me.

[ Addendum: I have no relationship with any of these manufacturers except as a satisfied customer of their awesome products. Do I really need to say that? ]

[Other articles in category /tech] permanent link

Tue, 05 Sep 2023

Mystery of the missing skin tone

Slack, SMS, and other similar applications that display emoji have a skin-tone modifier that adjusts the emoji appearance to one of five skin tones.

For example, there is a generic thumbs-up emoji 👍. Systems may support five variants, which are coded as the thumbs-up followed by one of five “diversity modifier” characters: 👍🏻👍🏼👍🏽👍🏾👍🏿. Depending on your display, you might see a series of five different-toned thumbs-ups, or five generic thumbs-ups each followed by a different skin tone swatch. Or on a monochrome display, you might see stippled versions.

Slack refers to these modifiers as skin-tone-2 through

skin-tone-6. What happened to skin-tone-1? It's not used, so I

tried to find out why. (Spoiler: I failed.)

Slack and other applications adopted this system direct from Unicode the modifier characters are part of the Unicode emoji standard, called UTS #51. UTS51 defines the five modifiers. The official short names for these are:

light skin tone

medium-light skin tone

medium skin tone

medium-dark skin tone

dark skin tone

And the official Unicode character names for the characters are respectively

EMOJI MODIFIER FITZPATRICK TYPE 1-2

EMOJI MODIFIER FITZPATRICK TYPE 3

EMOJI MODIFIER FITZPATRICK TYPE 4

EMOJI MODIFIER FITZPATRICK TYPE 5

EMOJI MODIFIER FITZPATRICK TYPE 6

So this is why Slack has no :skin-tone-1:; already the Unicode

standard combines skin types 1 and 2.

“Fitzpatrick” here refers to the Fitzpatrick scale:

The Fitzpatrick scale … is a numerical classification schema for human skin color. It was developed in 1975 by American dermatologist Thomas B. Fitzpatrick as a way to estimate the response of different types of skin to ultraviolet light. It was initially developed on the basis of skin color to measure the correct dose of UVA for PUVA therapy …

The standard cites this document from the Australian Radiation Protection and Nuclear Safety Agency which has a 9-question questionnaire you can use to find out which of the six categories your own skin is in. And it does have six categories, not five. Categories 1 and 2 are the lightest two: Category 1 is the pasty-faced freckled gingers and the people who look like boiled ham. Category 2 is next-lightest and includes yellow-tinted Central European types like me.

(The six categories are accompanied by sample photos of people, and the ARPNSA did a fine job of finding attractive and photogenic models in every category, even the pasty gingers and boiled ham people.)

But why were types 1 and 2 combined? I have not been able to find out. The original draft for UTR #51 was first announced in November 2014, with the diversity modifiers already in their current form. (“… a mechanism using 5 new proposed characters…”) The earliest available archived version of the standard is from the following month and its “diversity” section is substantially the same as the current versions.

I hoped that one of the Unicode mailing lists would have some of the antecedent discussion, and even went so far as to download the entire archives of the Unicode Mail List for offline searching, but I found nothing earlier than the UTR #51 announcement itself, and nothing afterward except discussions about whether the modifiers would apply to 💩 or to 🍺.

Do any of my Gentle Readers have information about this, or suggestions of further exploration?

[Other articles in category /tech] permanent link

Math SE report 2023-06: funky-looking Hasse diagrams, and what is a polynomial anyway?

Is !!x^4-x^4 = 0!! a fourth-degree equation?

This is actually a really good question! (You can tell because it's quick to ask and complicated to answer.) It goes back to a very fundamental problem that beginners in mathematics, which is that there is a difference between an object's true nature and the way it happens to be written down. And often these problems are compounded because there is no way to talk about the object except by referring to how it is written down.

OP says:

The best definition I could find for the degree of an equation is the following:

The highest power of the unknown term whose coefficient isn't zero in a given equation

And they are bothered by this, and rightly so. I was almost derailed at this point into writing an article about what an equation is, but I'm going to put it off for another day, because I think to get to this person's question what we really need to do is to say what a polynomial is.

One way is to describe it as an expression in a certain form, but this is a bit roundabout. It's like describing a rational number as an expression of the form !!\frac n d!! where !!n!! and !!d!! are relatively prime integers. Under this sort of definition, !!x^4-x^4!! isn't a polynomial at all, because it's not an expression of the correct form.

But I think the right way to define a polynomial is that it's an element of the free ring over some ring !!C!! of coefficients. This leaves completely open the question of how a polynomial is written, or what it looks like. It becomes a theorem that polynomials are in one-to-one correspondence with finite sequences of elements of !!C!!. Then we can say that the “degree” of a polynomial is one less than the length of the corresponding finite sequence, or something like that.

[ Sometimes we make an exception for the zero polynomial and say its degree is !!-\infty!!, to preserve the law !!\operatorname{deg}(pq) = \operatorname{deg}(p)+\operatorname{deg}(q)!!.) ]

In this view the zero polynomial is simply the zero element of the ring. The polynomial called “!!x^4!!” is the fourth power of the free element !!x!!.

Since the polynomials are elements of a ring, addition, subtraction, and multiplication come along automatically, and we can discuss the value of the expression !!x^4-x^4!!, which by the usual properties of !!-!! is also the zero polynomial.

Anyway that all is pretty much what I said:

!!x^4-x^4!! is just a way to write the polynomial !!0!!, which is not a fourth-degree polynomial. Similarly !!x^5+x^4-x^5!! is not a fifth-degree polynomial.

There's an underlying reality here, the abstract elements of the ring !!R[x]!!. And then there's a representation theorem, which is that elements of !!R[x]!! are in one-to-one correspondence with finite sequences of elements of !!R!!. The ring laws give us ways to normalize arbitrary expressions involving polynomials. And then there's also the important functor that turns every polynomial ring into a ring of functions, turning the polynomial !!x^4!! into the function !!x\mapsto x^4!!.

This kind of abstract approach isn't usually explained in secondary or tertiary education, and I'm not sure it would be an improvement if it were. (You'd have to explain rings, for one thing.) But the main conceptual point is that there is a difference between the thing itself (say, !!x^4!!) and the way we happen to write the thing (say, !!x^5+x^4-x^5!!), and some properties are properties of the thing itself and others are properties of expressions, of the way the thing has been written. The degree of a polynomial is a property of the thing itself, not of the way it happens to be written, so both of those expressions are ways to write the same polynomial, which is fourth-degree, regardless of the fact that in one of them, “the highest power of the unknown term whose coefficient isn't zero” is five.

There is one example of this abstraction that everyone learns in childhood, rational numbers. I lean hard on this example, because most people know it pretty well, even if they don't realize it yet. !!\frac15!! and !!\frac6{30}!! are the same thing, written in two different ways. Mathematicians will, without even thinking about it, speak of the numerator of a rational number, and without batting an eyelash will say that the numerator of the rational number !!\frac{6}{30}!! is !!1!!. The fraction !!\frac6{30}!! is a mere notation that represents a rational number, in this case the rational number !!\frac15!!, and this rational number has a numerator of !!1!!.

Beginning (and intermediate) computer programmers also have this issue, that the thing itself, usually some embedding of data in the computer's memory, may be serialized into a string in multiple ways. There's a string that represents the thing, and then there's the thing itself, but it's hard to talk about the thing itself because anything you can write is a string. I wish this were made explicit in CS education. Computer programmers who don't pick up on this crucial point, at least on an intuitive level, do not progress past intermediate.

What are the names given to statements that can be true or false?

I think I totally flubbed this one. OP is really concerned with open and closed formulas. For example, “!!x > 2!!” is true, or false, depending on the value of !!x!!. And OP astutely noted that while “!!x>4 \to x> 2!!” is always true, its meaning still depends on the value of !!x!!.

I did get around to explaining that part of the issue, eventually. The crucial point, which is that there are formulas which may have free variables and then there are statements which do not, is buried at the end after a lot of barely-relevant blather about Quinian quasiquotation. What was I thinking? Perhaps I should go back and shorten the answer to just the relevant part.

How does one identify the weakest preconditions in Hoare triples?

I wrote a detailed explanation of how one identifies weakest

preconditions in Hoare triples, before realizing that actually OP

understood this perfectly, and their

confusion was because their book wrote “{x≠1}” where it should have

had “{x≠-1}”.

Sheesh.

Artifacts of mathematical logic

This was fun. OP wants actual physical artifacts that embody concepts from mathematical logic, the way one models of the platonic solids embody concepts from solid geometry.

I couldn't think of anything good, but then Michael Weiss brought up Lewis Carroll's Game of Logic. This reminded me that Martin Gardner had written a book about embodiments of simple logic, including the Carroll one, so I mentioned that. It's a fun book. Check out the account of Ramon Llull, who missed being canonized because his martyrdom looked a bit too much like FAFO.

I find this answer a little unsatisfying though. The logic machines in Gardner's book do only a little boolean algebra, or maybe at best a tiny bit of predicate logic. But I'd love to see a physical system that somehow encapsulated sequent calculus or natural deduction or something like that. Wouldn't it be cool to have blocks stuck together with magnets or physical couplings, and if you could physically detach the !!B!! from !!A\to B!! only if you already had an assemblage that matched !!A!! exactly? I have no idea how you'd do it. Maybe a linear logic model would be more feasible: once you used !!A!! with !!A\to B!! to get !!B!!, you wouldn't be able to use either one again.

We need some genius to come and invent some astonishing mechanism that formerly seemed impossible. I wonder if Ernő Rubik is available?

Joachim Breitner's Incredible Proof Machine is a fun thing along these lines, but it's not at all an artifact.

Is there a name for this refinement of the subset ordering?

This was my question. I've never seen this ordering elsewhere, but it has a simple description. We start with a totally ordered finite set !!S!!. Then if !!A!! and !!B!! are subsets of !!S!!, we deem !!A \preceq B!! if there is an injective mapping !!f:A\to B!! where !!a \le f(a)!! for each !!a\in A!!.

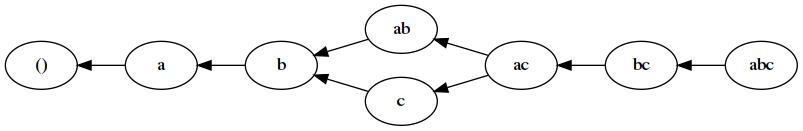

So for example, if !!S!! has three elements !!a\lt b\lt c!! then the ordering I want, on !!2^S!!, has this Hasse diagram:

!!\{b\}\prec\{a,b\}!! because we can match the !!b!!'s. And !!\{a,b\}\prec \{a, c\}!! because we can match !!a!! with !!a!! and !!b!! with !!c!!. But !!\{c\}\not\prec\{a, b\}!! because we can't match !!c!! with either !!a!! or with !!b!!, and !!\{a,b\}\not\prec\{c\}!! because, while we can match either of !!a!! or !!b!! with !!c!!, we aren't allowed to match both of them with !!c!!.

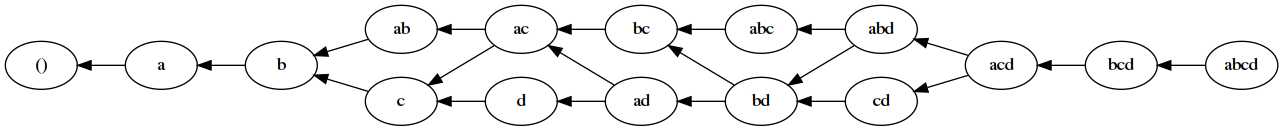

Here's the corresponding Hasse diagram for !!|S|=4!!:

Maybe a better way to describe this is: the bottom element is !!\varnothing!!. To go up the lattice one step, you either increment one of the elements of the current set, or you insert an !!a!! if there isn't one already. So from !!\{b,d\}!! you can either increment the !!b!! to move up to !!\{c, d\}!! or you can insert an !!a!! to move up to !!\{a, b, d\}!!.

This ordering comes up in connection with a problem I've thought about a lot: Say you have a number !!N!! and you want to find !!AB=N!! with !!A!! and !!B!! as close together as possible. Even if you have the prime factorization of !!N!! available, it's not usually clear what the answer is. (In general it's NP-hard.)

If !!N!! is the product of two primes, !!N=p_1p_2!! the answer is obvious. And if !!N!! is a product of three primes !!N =p_1p_2p_3!! there is a definitive answer. Without loss of generality, !!p_1 ≤ p_2 ≤ p_3!!, and the answer is simply that !!A=p_1p_2, B=p_3!! is always optimal.

But if !!N =p_1p_2p_3p_4!! it can go two different ways. Assuming !!p_1 ≤ p_2 ≤ p_3 ≤ p_4!!, it usually turns out that the optimal solution is !!A=p_1p_4, B=p_2p_3!!. But sometimes the optimal solution is !!A=p_1p_2p_3, B=p_4!!. These are the only two possibilities.

Which ways of splitting the prime factors might be optimal relates to those Hasse diagrams above. The possibly-optimal splits between !!A!! and !!B!! correspond to nodes that are just at the boundary of the left and right halves of the diagram.

Nobody had an answer for what this order was called, so I could not look it up. This is OK, I will figure it all out eventually.

[Other articles in category /math/se] permanent link

Mon, 04 Sep 2023

Gantō's axe does have computational content

A while back I was thinking about this theorem of intuitionistic logic:

$$((P\to Q)\land ((P\to \bot) \to Q)) \to {(Q\to\bot)\to \bot} \tag{$\color{darkred}{\heartsuit}$}$$

Since it's intuitionistically valid, its proof can be converted into a program with the corresponding type. But the function that you get seemed to be computationally vacuous, since it could only be called with arguments that were impossible to obtain, or else with arguments that render the whole thing silly. For the former, you can make it work in general if you have at hand a function of type !!(P\to\bot)\to Q!! — but how could you? And for the latter, you can make it work if !!Q=\mathtt{int}!!, in which case you don't really need the program at all since !!(Q\to\bot)\to\bot!! is trivially true.

Several people replied to me about this, but the most interesting response I got was from Simon Tatham, who observed that !!\color{darkred}{\heartsuit}!! is still intuitionistically valid if you replace !!\bot!! with arbitrary !!R!!:

$$((P\to Q)\land ((P\to R) \to Q)) \to {(Q\to R)\to R} \tag{$\color{purple}{\spadesuit}$}$$

The proof is essentially the same:

- Suppose you have: !!f : P\to Q!! and !!g:(P\to R) \to Q!!

- then if you also have !!h: Q\to R!!, you can compose it with !!f!! to get !!h\circ f : P\to R!!

- which you can feed to !!g!! to get !!g(h\circ f) : Q!!

- and then feed that to !!h!! to get !!h(g(h\circ f)) : R!!.

But unlike !!\color{darkred}{\heartsuit}!!, this is computationally interesting.

M. Tatham gives this example: Take !!R!! to be !!\mathtt{bool}!!. We can understand a function !!X \to R!! as a subset of !!X!!, represented by its characteristic function. Say that !!Q!! is the type of subsets of !!P!!, perhaps in some representation that is more concrete than characteristic functions. If you'd like a more specific example, take !!P!! to be the natural numbers, and !!Q!! to be (recursive) sets of natural numbers as represented by (possibly infinite) lists in strictly increasing order.

Then:

!!f!! is some arbitrary function that assigns, to each element of !!P!!, a related subset of !!P!!. Say for example that !!f(p)!! is the semispatulated closure of !!p!!.

!!g!! could be the isomorphism that converts a subset of !!P!!, represented as a characteristic function, into one represented as a !!Q!!.

!!h : Q\to \mathtt{bool}!! is a single subset of !!Q!!, represented as a characteristic function on !!Q!!. Say, !!h(q)!! is true if and only the set !!q\subset P!! has the Cosell property.

With these interpretations, !!(h\circ f)(p) : P\to \mathtt{bool}!! tells you whether !!p!!'s semispatulated closure has the Cosell property, and !!g(h\circ f)!! is the element of !!Q!! that represents, !!Q!!-style, the set of all such !!p!!.

Then !!h(g(h\circ f)) : R!! computes whether this set itself also has the Cosell property: true if so, false if not.

This is certainly nontrivial, and if the made-up properties were replaced by real ones, it could even be interesting.

One could also replace !!R=\mathtt{bool}!! with some other set, and then instead of a characteristic function, !!X\to R!! would be some sort of valuation function.

I had asked:

Is the whole thing just trivial because there is no interesting way to instantiate data objects with the right types? Or is there some real computational content here?

I think M. Tatham's example shows that there is, and that the apparent vacuity of the theorem !!\color{darkred}{\heartsuit}!! arose only because !!R!! had been replaced with the empty set.

[Other articles in category /math/logic] permanent link