Mark Dominus (陶敏修)

mjd@pobox.com

Sun, 31 Dec 2006

Daylight chart

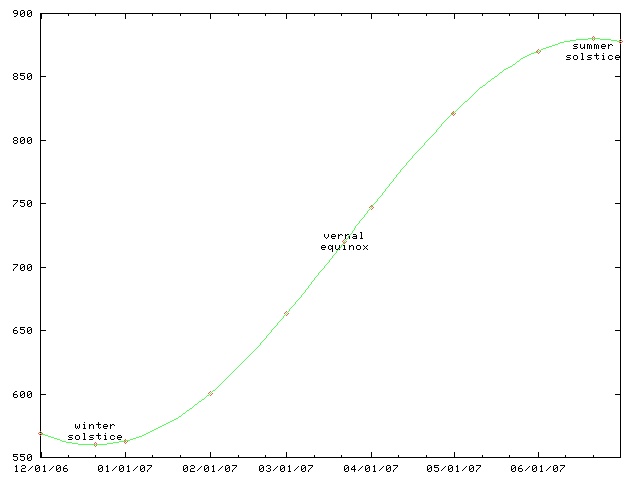

My wife is always sad this time of the year because the days are so short and dark. I made a chart like this one a couple of years ago so that she could see the daily progress from dark to light as the winter wore on to spring and then summer.

This chart only works for Philadelphia and other places at the same latitude, such as Boulder, Colorado. For higher latitudes, the y axis must be stretched. For lower latitudes, it must be squished. In the southern hemisphere, the whole chart must be flipped upside-down.

(The chart will work for places inside the Arctic or Antarctic circles, if you use the correct interpretations for y values greater than 1440 minutes and less than 0 minutes.)

This time of year, the length of the day is only increasing by about thirty seconds per day. (Decreasing, if you are in the southern hemisphere.) On the equinox, the change is fastest, about 165 seconds per day at latitudes 40°N and 40°S. After that, the rate of increase slows, until it reaches zero on the summer solstice; then the days start getting shorter again.
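For anyone who wants to redraw the chart for another latitude, here is a rough Python sketch of the usual textbook approximation for day length. It is not the method used to draw the chart above. The 23.44° axial tilt, the simple cosine model for the sun's declination, and the function names are all assumptions made for the example, and it ignores atmospheric refraction, so expect errors of several minutes.

```python
import math

AXIAL_TILT_DEG = 23.44  # assumed obliquity of the ecliptic


def declination(day_of_year):
    """Approximate solar declination in radians (day 1 = 1 January).

    Simple cosine model: most negative near the December solstice.
    """
    angle = 2 * math.pi * (day_of_year + 10) / 365.0
    return -math.radians(AXIAL_TILT_DEG) * math.cos(angle)


def day_length_minutes(latitude_deg, day_of_year):
    """Minutes between sunrise and sunset, ignoring refraction."""
    x = -math.tan(math.radians(latitude_deg)) * math.tan(declination(day_of_year))
    # Inside the polar circles x leaves [-1, 1]; clamping gives
    # 0 minutes (polar night) or 1440 minutes (midnight sun).
    x = max(-1.0, min(1.0, x))
    return 1440.0 * math.acos(x) / math.pi


if __name__ == "__main__":
    for day in (1, 80, 172, 355):
        print(day, round(day_length_minutes(40.0, day)))
```

In this model the difference between consecutive days near the March equinox at 40° comes out at roughly 160 seconds, in line with the figure quoted above. Flipping the sign of the latitude flips the curve, which is the "upside-down" chart for the southern hemisphere.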

As with all articles in the physics section of my blog, readers are cautioned that I do not know what I am talking about, although I can spin a plausible-sounding line of bullshit. For this article, readers are additionally cautioned that I failed observational astronomy twice in college.

[Other articles in category /physics] permanent link

Sat, 30 Dec 2006

Notes on Neal Stephenson's Baroque novels

Earlier this year I was reading books by Robert Hooke, John Wilkins, Sir Thomas Browne, and other Baroque authors; people kept writing to me to advise me to read Neal Stephenson's "Baroque cycle", in which Hooke and Wilkins appear as characters.

I ignored this advice for a while, because those books are really fat, and because I hadn't really liked the other novels of Stephenson's that I'd read.

But I do like Stephenson's non-fiction. His long, long article about undersea telecommunications cables was one of my favorite reads of 1996, and I still remember it years later and reread it every once in a while. I find his interminable meandering pointless and annoying in his fiction, where I'm not sure why I should care about all the stuff he's describing. When the stuff is real, it's a lot easier to put up with it.

My problems with Stephenson's earlier novels, The Diamond Age and Snow Crash, will probably sound familiar: they're too long; they're disorganized; they don't have endings; too many cannons get rolled onstage and never fired.

Often "too long" is a pinheaded criticism, and when I see it I'm immediately wary. How long is "too long"? It calls to mind the asinine complaint from Joseph II that Mozart's music had "too many notes". A lot of people who complain that some book is "too long" just mean that they were too lazy to commit the required energy. When I say that Stephenson's earlier novels were "too long", I mean that he had more good ideas than he could use, and put a lot of them into the books even when they didn't serve the plot or the setting or the characters. A book is like a house. It requires a plan, and its logic dictates portions of the plan. You don't put in eleven bathtubs just because you happen to have them lying around, and you don't stick Ionic columns on the roof just because Home Depot had a sale on Ionic columns the week you were building it.

So the first thing about Stephenson's Baroque Trilogy is that it's not actually a trilogy. Like The Lord of the Rings, it was published in three volumes because of physical and commercial constraints. But the division into three volumes is essentially arbitrary.

The work totals about 2,700 pages. Considered as a trilogy, this is three very long books. Stephenson says in the introduction that it is actually eight novels, not three. He wants you to believe that he has actually written eight middle-sized books. But he hasn't; he is lying, perhaps in an attempt to shut up the pinheads who complain that his books are "too long". This is not eight middle-sized books. It is one extremely long book.

The narrative of the Baroque cycle is continuous, following the same characters from about 1650 up through about 1715. There is a framing story, introduced in the first chapters, which is followed by a flashback that lasts about 1,600 pages. Events don't catch up to the frame story until the third volume. If you consider Quicksilver to be a novel, the opening chapters are entirely irrelevant. If you consider it to be three novels, the opening chapters of the first novel are entirely irrelevant. It starts nowhere and ends nowhere, a vermiform appendix. But as a part of a single novel, it's not vestigial at all; it's a foreshadowing of later developments, which are delivered in volume III, or book 6, depending on how you count.

Another example: The middle volume, titled The Confusion, alternates chapters from two of the eight "novels" that make up the cycle. Events in these two intermingled ("con-fused") novels take place concurrently. Stephenson claims that they are independent, but they aren't.

So from now on I'm going to drop the pretense that this is a trilogy or a "cycle", and I'm just going to call this novel the "Baroque novel".

This was quite a surprise to me. The world is full of incoherent ramblers, and most of them, if you really take the time to listen to them carefully, and at length, turn out to be completely full of shit. You get nothing but more incoherence.

Stephenson at 600 pages is a semi-coherent rambler; to really get what he is saying, you have to turn him up to 2,700 pages. Most people would have been 4.5 times as incoherent; Stephenson is at least 4.5 times as lucid. His ideas are great; he just didn't have enough space to explain them before! The Baroque novel has a single overarching theme, which is the invention of the modern world. One of the strands of this theme is the invention of science, and the modern conception of science; another is the invention of money, and the modern conception of money.

I've written before about what I find so interesting about the Baroque thinkers. Medieval, and even Renaissance thought seems very alien to me. In the baroque writers, I have the first sense of real understanding, of people grappling with the same sorts of problems that I do, in the same sorts of ways. For example, I've written before about John Wilkins' attempt to manufacture a universal language of thought. People are still working on this. Many of the particular features of Wilkins' attempt come off today as crackpottery, but to the extent that they do, it's only because we know now that these approaches won't work. And the reason we know that today is that Wilkins tried those approaches in 1668 and it didn't work.

I find that almost all of Stephenson's annoying habits are much less annoying in the context of historical fiction. For example, many plot threads are left untied at the end. Daniel Waterhouse (fictional) becomes involved with Thomas Newcomen (real) and his Society for the Raising of Water by Fire. (That is, using steam engines to pump water out of mines.) This society figures in the plot of the last third of the novel, but what becomes of it? Stephenson drops it; we don't find out. In a novel, this would be annoying. But in a work of historical fiction, it's no problem, because we know what became of Newcomen and his steam engines: They worked well enough for pumping out coal mines, where a lot of coal was handy to fire them, and well enough to prove the concept, which really took off around 1775 when a Scot named James Watt made some major improvements. Sometime later, there were locomotives and nuclear generating plants. You can read all about it in the encyclopedia.

Another way in which Stephenson's style works better in historical fiction than in speculative fiction is in his long descriptions of technologies and processes. When they're fictitious technologies and imaginary processes, it's just wankery, a powerful exercise of imagination for no real purpose. Well, maybe the idea will work, and maybe it won't, and it is necessarily too vague to really give you a clear idea of what is going on. But when the technologies are real ones, the descriptions are illuminating and instructive. You know that the idea will work. The description isn't vague, because Stephenson had real source material to draw on, and even if you don't get a clear idea, you can go look up the details yourself, if you want. And Stephenson is a great explainer. As I said before, I love his nonfiction articles.

A lot of people complain that his novels don't have good endings. He's gotten better at wrapping things up, and to the extent that he hasn't, that's all right, because, again, the book is a historical novel, and history doesn't wrap up. The Baroque novel deals extensively with the Hanoverian succession to the English throne. Want to know what happened next? Well, you probably do know: a series of Georges, Queen Victoria, et cetera, and here we are. And again, if you want, the details are in the encyclopedia.

So I really enjoyed this novel, even though I hadn't liked Stephenson's earlier novels. As I was reading it, I kept thinking how glad I was that Stephenson had finally found a form that suits his talents and his interests.

[ Addendum 20170728: I revisited some of these thoughts in connection with Stephenson's 2015 novel Seveneves. ]

[ Addendum 20191216: I revived an article I wrote in 2002 about Stephenson's first novel The Big U. ]

[Other articles in category /book] permanent link

Wed, 27 Dec 2006

Linogram development: 20061227 Update

Defective constraints (see yesterday's article) are now handled. So the example from yesterday's article has now improved to:

    define regular_polygon[N] closed {
      param number radius, rotation=0;
      point vertex[N], center;
      line edge[N];
      constraints {
        vertex[i] = center + radius * cis(rotation + 360*i/N);
        edge[i].start = vertex[i];
        edge[i].end = vertex[i+1];
      }
    }
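To see what these constraints say numerically, here is a small Python sketch that evaluates them directly. It is not linogram, and it says nothing about how linogram actually solves constraints. It assumes cis(θ) means cos θ + i sin θ with θ in degrees, represents points as complex numbers, and wraps the subscript i+1 around because the figure is closed; the function name is hypothetical.

```python
import cmath
import math


def regular_polygon(n, radius, rotation=0.0, center=0j):
    """Return (vertices, edges) for a closed regular n-gon.

    vertices[i] = center + radius * cis(rotation + 360*i/n), angles in degrees.
    edges[i] is the pair (vertices[i], vertices[(i+1) % n]).
    """
    vertices = [
        center + radius * cmath.rect(1.0, math.radians(rotation + 360.0 * i / n))
        for i in range(n)
    ]
    edges = [(vertices[i], vertices[(i + 1) % n]) for i in range(n)]
    return vertices, edges


if __name__ == "__main__":
    vertices, edges = regular_polygon(4, radius=1.0)
    for start, end in edges:
        print(f"({start.real:+.2f}, {start.imag:+.2f}) -> ({end.real:+.2f}, {end.imag:+.2f})")
```

The last edge ends at vertex 0, which is the wrap-around that the "closed" declaration asks for.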

[Other articles in category /linogram] permanent link

Tue, 26 Dec 2006

Linogram development: 20061226 Update

I have made significant progress on the "arrays" feature. I recognized that it was actually three features; I have two of them implemented now. Taking the example from further down the page:

    define regular_polygon[N] closed {
      param number radius, rotation=0;
      point vertex[N], center;
      line edge[N];
      constraints {
        vertex[i] = center + radius * cis(rotation + 360*i/N);
        edge[i].start = vertex[i];
        edge[i].end = vertex[i+1];
      }
    }

The stuff in green is working just right; the stuff in red is not.

The following example works just fine:

    number p[3];
    number q[3];
    constraints {
      p[i] = q[i];
      q[i] = i*2;
    }

What's still missing? Well, if you write

    number p[3];
    number q[3];
    constraints {
      p[i] = q[i+1];
    }

This should imply three constraints on elements of p and q:

    p0 = q1
    p1 = q2
    p2 = q3

The third of these is defective, because there is no q3. If the figure is "closed" (which is the default) the subscripts should wrap around, turning the defective constraint into p2 = q0 instead. If the figure is declared "open", the defective constraint should simply be discarded.
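The wrap-or-discard rule can be sketched in a few lines of Python. This is only an illustration of the rule as described here, not linogram's implementation; the function name and the representation of a constraint as a pair of subscripts are made up for the example.

```python
def expand_constraint(n, offset, closed=True):
    """Expand p[i] = q[i + offset] over arrays of length n.

    Returns a list of (p_index, q_index) pairs. An out-of-range q index
    is wrapped modulo n if the figure is closed, and the whole constraint
    is dropped if the figure is open.
    """
    pairs = []
    for i in range(n):
        j = i + offset
        if 0 <= j < n:
            pairs.append((i, j))
        elif closed:
            pairs.append((i, j % n))
    return pairs


if __name__ == "__main__":
    print(expand_constraint(3, 1, closed=True))   # [(0, 1), (1, 2), (2, 0)]
    print(expand_constraint(3, 1, closed=False))  # [(0, 1), (1, 2)]
```

With closed=True the defective p2 = q3 becomes p2 = q0; with closed=False it simply disappears.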

The syntax for parameterized definitions (define regular_polygon[N] { ... }) is still a bit up in the air; I am now leaning toward a syntax that looks more like define regular_polygon { param index N; ... } instead.

The current work on the "arrays" feature is in the CVS repository on the branch arrays; get the version tagged tests-pass to get the most recent working version. Most of the interesting work has been on the files lib/Chunk.pm and lib/Expression.pm.

[Other articles in category /linogram] permanent link

Wed, 20 Dec 2006

Reader's disease

Reader's disease is an occupational hazard of editors and literary critics. Critics are always looking for the hidden meaning, the clever symbol, and, since they are always looking for it, they always find it, whether it was there or not. (Discordians will recognize this as a special case of the law of fives.) Sometimes the critics are brilliant, and find hidden meanings that are undoubtedly there even though the author was unaware of them; more often, they are less fortunate, and sometimes they even make fools of themselves.

The idea of reader's disease was introduced to me by professor David Porush, who illustrated it with the following anecdote. Nathaniel Hawthorne's The Scarlet Letter is prefaced by an introduction called The Custom-House, in which the narrator claims to have found documentation of Hester Prynne's story in the custom house where he works. The story itself is Hawthorne's fantasy, but the custom house is not; Hawthorne did indeed work in a custom house for many years.

Porush's anecdote concerned Mary Rudge, the daughter of Ezra Pound. Rudge, reading the preface of The Scarlet Letter, had a brilliant insight: the custom house, like so many other buildings of the era, was a frame house and was built in the shape of the letter "A". It therefore stands as a physical example of the eponymous letter.

Rudge was visiting Porush in the United States, and told him about her discovery.

"That's a great theory," said Porush, "But it doesn't look anything like a letter 'A'."

Rudge argued the point.

"Mary," said Porush, "I've seen it. It's a box."

Rudge would not be persuaded, so together they got in Porush's car and drove to Salem, Massachusetts, where the custom house itself still stands.

But Rudge, so enamored of her theory that she could not abandon it, concluded that some alternative explanation must be true: the old custom house had burnt down and been rebuilt, or the one in the book was not based on the real one that Hawthorne had worked in, or Porush had led her to the wrong building.

But anyway, all that is just to introduce my real point, which is to relate one of the most astounding examples of reader's disease I have ever encountered personally. The Mary Rudge story is secondhand; for all I know Porush made it up, or exaggerated, or I got the details wrong. But this example I am about to show you is in print, and is widely available.

I have a nice book called The Treasury of the Encyclopædia Britannica, which is a large and delightful collection of excerpts from past editions of the Britannica, edited by Clifton Fadiman. This is out of print now, but it was published in 1992 and is easy to come by.

Each extract is accompanied by some introductory remarks by Fadiman and sometimes by one of the contributing editors. One long section in the book concerns early articles about human flight; in the Second Edition (published 1778-1783) there was an article on "Flying" by then editor James Tytler. Contributing editor Bruce L. Felknor's remarks include the following puzzled query:

What did Tytler mean by his interjection of "Fa" after Friar Bacon's famous and fanciful claim that man had already succeeded in flying? It hardly seems a credulous endorsement, an attitude sometimes attributed to Tytler.

Fadiman adds his own comment on this:

As for Tytler's "Fa": Could it have been an earlier version of our "Faugh!"? In any case we suddenly hear an unashamed human voice.

Gosh, what could Tytler have meant by this curious interjection? A credulous endorsement? An exclamation of disgust? An unedited utterance of the unashamed human voice? Let's have a look:

The secret consisted in a couple of large thin hollow copper-globes exhausted of air; which being much lighter than air, would sustain a chair, whereon a person might sit. Fa. Francisco Lana, in his Prodromo, proposes the same thing...

Felknor and Fadiman have mistaken "Fa" for a complete sentence. But it is apparently an abbreviation of Father Francisco Lana's ecclesiastical title.

Oops.

[Other articles in category /book] permanent link

Tue, 12 Dec 2006

ssh-agent

When you do a remote login with ssh, the program needs to use your secret key to respond to the remote system's challenge. The secret key is itself kept scrambled on the disk, to protect it from being stolen, and requires a passphrase to be unscrambled. So the remote login process prompts you for the passphrase, unscrambles the secret key from the disk, and then responds to the challenge.

Typing the passphrase every time you want to do a remote login or run a remote process is a nuisance, so the ssh suite comes with a utility program called "ssh-agent". ssh-agent unscrambles the secret key and remembers it; it then provides the key to any process that can contact it through a certain network socket.

The process for login is now:

    plover% eval `ssh-agent`
    Agent pid 23918
    plover% ssh-add
    Need passphrase for /home/mjd/.ssh/identity
    Enter passphrase for mjd@plover: (supply passphrase here)
    plover% ssh remote-system-1
    (no input required here)
    ...
    plover% ssh remote-system-2
    (no input required here either)
    ...

The important thing here is that once ssh-agent is started up and supplied with the passphrase, which need be done only once, ssh itself can contact the agent through the socket and get the key as many times as needed.

How does ssh know where to contact the agent process? ssh-agent prints an output something like this one:

    SSH_AUTH_SOCK=/tmp/ssh-GdT23917/agent.23917; export SSH_AUTH_SOCK;
    SSH_AGENT_PID=23918; export SSH_AGENT_PID;
    echo Agent pid 23918;

The eval `...` command tells the shell to execute this output as code; this installs two variables into the shell's environment, whence they are inherited by programs run from the shell, such as ssh. When ssh runs, it looks for the SSH_AUTH_SOCK variable. Finding it, it connects to the specified socket, in /tmp/ssh-GdT23917/agent.23917, and asks the agent for the required private key.

Now, suppose I log in to my home machine, say from work. The new login shell does not have any environment settings pertaining to ssh-agent. I can, of course, run a new agent for the new login shell. But there is probably an agent process running somewhere on the machine already. If I run another every time I log in, the agent processes will proliferate. Also, running a new agent requires that the new agent be supplied with the private key, which would require that I type the passphrase, which is long.

Clearly, it would be more convenient if I could tell the new shell to contact the existing agent, which is already running. I can write a command which finds an existing agent and manufactures the appropriate environment settings for contacting that agent. Or, if it fails to find an already-running agent, it can just run a new one, just as if ssh-agent had been invoked directly. The program is called ssh-findagent.

I put a surprising amount of work into this program. My first idea was that it can look through /tmp for the sockets. For each socket, it can try to connect to the socket; if it fails, the agent that used to be listening on the other end is dead, and ssh-findagent should try a different socket. If none of the sockets are live, ssh-findagent should run the real ssh-agent. But if there is an agent listening on the other end, ssh-findagent can print out the appropriate environment settings. The socket's filename has the form /tmp/ssh-XXXPID/agent.PID; this is the appropriate value for the SSH_AUTH_SOCK variable.
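That first idea can be sketched in Python as below. It is an illustration only, not the ssh-findagent program itself. It assumes the agent sockets are Unix-domain stream sockets matching /tmp/ssh-*/agent.*, and the function names are invented for the example.

```python
import glob
import socket


def socket_is_live(path, timeout=1.0):
    """Return True if something accepts connections on the Unix socket at path."""
    s = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
    s.settimeout(timeout)
    try:
        s.connect(path)
        return True
    except OSError:
        # No such file, permission denied, or nobody listening any more.
        return False
    finally:
        s.close()


def find_live_agent_socket(pattern="/tmp/ssh-*/agent.*"):
    """Return the first live socket path matching pattern, or None."""
    for path in sorted(glob.glob(pattern)):
        if socket_is_live(path):
            return path
    return None


if __name__ == "__main__":
    path = find_live_agent_socket()
    if path is None:
        print("no live agent found; run ssh-agent")
    else:
        print(f"SSH_AUTH_SOCK={path}; export SSH_AUTH_SOCK;")
```

This only recovers the SSH_AUTH_SOCK half of the settings. It deliberately makes no attempt to guess the agent's pid from the filename.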

The SSH_AGENT_PID variable turned out to be harder. I thought I would just get it from the SSH_AUTH_SOCK value with a pattern match. Wrong. The PID in the SSH_AUTH_SOCK value and in the filename is not actually the pid of the agent process. It is the pid of the parent of the agent process, which is typically, but not always, 1 less than the true pid. There is no reliable way to calculate the agent's pid from the SSH_AUTH_SOCK filename. (The mismatch seems unavoidable to me, and the reasons why seem interesting, but I think I'll save them for another blog article.)

After that I had the idea of having ssh-findagent scan the process table looking for agents. But this has exactly the reverse problem: You can find the agent processes in the process table, but you can't find out which socket files they are attached to.

My next thought was that maybe Linux supports the getpeerpid system call, which takes an open file descriptor and returns the pid of the peer process on the other end of the descriptor. Maybe it does, but probably not, because support for this system call is very rare, considering that I just made it up. And anyway, even if it were supported, it would be highly nonportable.

I had a brief hope that from a pid P, I could have ssh-findagent look in /proc/P/fd/* and examine the process file descriptor table for useful information about which process held open which file. Nope. The information is there, but it is not useful. Here is a listing of /proc/31656/fd, where process 31656 is an agent process:

lrwx------ 1 mjd users 64 Dec 12 23:34 3 -> socket:[711505562]

File descriptors 0, 1, and 2 are missing. This is to be expected; it means that the agent process has closed stdin, stdout, and stderr. All that remains is descriptor 3, clearly connected to the socket. Which file represents the socket in the filesystem? No telling. All we have here is 711505562, which is an index into some table of sockets inside the kernel. The kernel probably doesn't know the filename either. Filenames appear in directories, where they are associated with file ID numbers (called "i-numbers" in Unix jargon). The file ID numbers can be looked up in the kernel "inode" table to find out what kind of files they are, and, in this case, what socket the file represents. So the kernel can follow the pointers from the filesystem to the inode table to the open socket table, or from the process's open file table to the open socket table, but not from the process to the filesystem.

Not easily, anyway; there is a program called lsof which grovels over the kernel data structures and can associate a process with the names of the files it has open, or a filename with the ID numbers of the processes that have it open. My last attempt at getting ssh-findagent to work was to have it run lsof over the possible socket files, getting a listing of all processes that had any of the socket files open, and, for each process, which file. When it found a process-file pair in the output of lsof, it could emit the appropriate environment settings.

The final code was quite simple, since lsof did all the heavy lifting. Here it is:

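    #!/usr/bin/perl
    use strict;
    use warnings;

    # Ask lsof which processes have an agent socket file open.
    open my $lsof, '-|', 'lsof /tmp/ssh-*/agent.*'
      or die "couldn't run lsof: $!\n";
    my $header = <$lsof>;       # discard header
    my $line = <$lsof>;         # first process-file pair, if any
    if ($line) {
      # Second field is the pid; last field is the socket filename.
      my ($pid, $file) = (split /\s+/, $line)[1,-1];
      print "SSH_AUTH_SOCK=$file; export SSH_AUTH_SOCK;
    SSH_AGENT_PID=$pid; export SSH_AGENT_PID;
    echo Agent pid $pid;
    ";
      exit 0;
    } else {
      # No agent found: become a real ssh-agent instead.
      print "echo starting new agent\n";
      exec "ssh-agent";
      die "Couldn't run new ssh-agent: $!\n";
    }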

I complained about the mismatch between the agent's pid and the pid in the socket filename on IRC, and someone there mentioned offhandedly how they solve the same problem. It's hard to figure out what the settings should be. So instead of trying to figure them out, one should save them to a file when they're first generated, then load them from the file as required.

Oh, yeah. A file. Duh.

It looks like this: Instead of loading the environment settings from ssh-agent directly into the shell, with eval `ssh-agent`, you should save the settings into a file:

    plover% ssh-agent > ~/.ssh/agent-env

Then tell the shell to load the settings from the file:

    plover% . ~/.ssh/agent-env
    Agent pid 23918
    plover% ssh remote-system-1
    (no input required here)

The . command reads the file and acquires the settings. And whenever I start a fresh login shell, it has no settings, but I can easily acquire them again with . ~/.ssh/agent-env, without running another agent process.
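The saved file is just the three shell statements shown earlier, so other programs can read it too. Here is a minimal Python sketch, assuming the file has exactly the "NAME=value; export NAME;" form that ssh-agent printed above; the function name is hypothetical and this is not part of the ssh suite.

```python
import re

# Matches statements such as: SSH_AGENT_PID=23918; export SSH_AGENT_PID;
ASSIGNMENT = re.compile(r"^(\w+)=([^;]*); export \1;", re.MULTILINE)


def parse_agent_env(text):
    """Return the variables set by ssh-agent's Bourne-shell output as a dict."""
    return {name: value for name, value in ASSIGNMENT.findall(text)}


if __name__ == "__main__":
    sample = (
        "SSH_AUTH_SOCK=/tmp/ssh-GdT23917/agent.23917; export SSH_AUTH_SOCK;\n"
        "SSH_AGENT_PID=23918; export SSH_AGENT_PID;\n"
        "echo Agent pid 23918;\n"
    )
    print(parse_agent_env(sample))
```

In the sample, the number in the socket name (23917) and the agent's pid (23918) differ by one, which is the mismatch described above. Saving the settings sidesteps it entirely.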

I wonder why I didn't think of this before I started screwing around with sockets and getpeerpid and /proc/*/fd and lsof and all that rigmarole.

Oh well, I'm not yet a perfect sage.

[ Addendum 20070105: I have revisited this subject and the reader's responses. ]

[Other articles in category /oops] permanent link

Wed, 06 Dec 2006

Serendipitous web searches

In yesterday's article about a paper of Robert French, I mentioned that I had run across it while looking for something entirely unrelated. The unrelated thing is pretty interesting itself.

Back in the 1950's and 1960's, James V. McConnell at the University of Michigan was doing some really interesting work on learning and memory in planaria flatworms, shown at right. They used to publish their papers in their own private journal of flatworm science, The Journal of Biological Psychology. They didn't have enough material for a full journal, so when you were done reading the Journal, you could flip it over and read the back half, which was a planaria-themed humor magazine called The Worm-Runner's Digest. I swear I'm not making this up.

(I think it's time to revive the planaria-themed humor magazine. Planaria are funny even when they aren't doing anything in particular. Look at those googly eyes!)

(For some reason I've always found planaria fascinating, and I've known about them from an early age. We would occasionally visit my cousin in Oradell, who had a stuffed toy which was probably intended to be a snake, but which I invariably identified as a flatworm. "We're going to visit your Uncle Ronnie," my parents would say, and I would reply, "Can I play with Susan's flatworm?")

Anyway, to get on with the point of this article, McConnell made the astonishing discovery that memory has an identifiable chemical basis. He trained flatworms to run mazes, and noted how long it took to do so. (The mazes were extremely simple T shapes. The planarian goes in the bottom foot of the T. Food goes in one of the top arms, always the same one. Untrained planaria swim up the T and then turn one way or the other at random; trained planaria know to head toward the arm where the food always is. Pretty impressive, for a worm.)

Then McConnell took the trained worms and ground them up and fed them to untrained worms. The untrained worms learned to run the maze a lot faster than the original worms had, apparently demonstrating that there was some sort of information in the trained worms that survived being ground up and ingested. The hypothesis was that the information was somehow encoded in RNA molecules, and could be physically transferred from one individual to another. Isn't that a wonderful dream?

You can still see echoes of this in the science fiction of the era. For example, a recurring theme in Larry Niven's early work is "memory RNA", people getting learning injections, and pills that impart knowledge when you swallow them. See A World Out of Time and The Fourth Profession, for example. And I once had a dream that I taught a giant planarian to speak Chinese, then fried it in cornmeal and ate it, after which I was able to speak Chinese. So when I say it's a wonderful dream, I'm speaking both figuratively and literally.

Unfortunately, later scientists were not able to reproduce McConnell's findings, and the "memory RNA" theory has been discredited. How to explain the cannibal flatworms' improved learning times, then? It seems to have been sloppy experimental technique. The original flatworms left some sort of chemical trail in the mazes, that remained after they had been ground up. McConnell's team didn't wash the mazes in between tests, and the cannibal flatworms were able to follow the trails later; it had nothing to do with their diet. Bummer.

So a couple of years ago I was poking around, looking for more information about this, and in particular for a copy of McConnell's famous paper Memory transfer through cannibalism in planarians, which I didn't find. But I did find the totally unrelated Robert French paper. It mentions McConnell, as an example of another cool-sounding and widely-reported theory that took a long time to dislodge, because it's hard to produce clear evidence that cannibal flatworms aren't in fact learning from their lunch meat, and because the theory that they aren't learning is so much less interesting-sounding than the theory that they are. News outlets reported a lot about the memory RNA breakthrough, and much less about the later discrediting of the theory.

French's paper, you will recall, refutes the interesting-sounding hypothesis that infants resemble their fathers more strongly than they do their mothers, and has many of the same difficulties.

There are counterexamples. Everyone seems to have heard that the Fleischmann and Pons tabletop cold fusion experiment was an error. And the Hwang Woo-Suk stem cell fraud is all over the news these days.

[Other articles in category /bio] permanent link

Tue, 05 Dec 2006

Do infants resemble their fathers more than their mothers?

Back in 1995, Christenfeld and Hill published a paper that claimed to have found evidence that infants tended to resemble their fathers more than they resembled their mothers. The evolutionary explanation for this, it was claimed, is that children who resemble their fathers are less likely to be abandoned by them, because their paternity would be less likely to be doubted. The pop science press got hold of it (several years later, as they often do) and it was widely reported for a while. Perhaps you heard about it.

A couple years ago, while looking for something entirely unrelated, I ran across the paper of French et al. titled The Resemblance of One-year-old Infants to Their Fathers: Refuting Christenfeld & Hill. French and his colleagues had tried to reproduce Christenfeld and Hill's results, with little success; they suggested that the conclusion was false, and offered a number of arguments as to why the purported resemblance should not exist. Of course, the pop science press was totally uninterested.

At the time, I thought, "Wow, I wish I had a way to get a lot of people to read this paper." Then last month I realized that my widely-read blog is just the place to do this.

Before I go on, here is the paper. I recommend it; it's good reading, and only six pages long. Here's the abstract:

In 1995 Christenfeld and Hill published a paper that purported to show at one year of age, infants resemble their fathers more than their mothers. Evolution, they argued, would have produced this result since it would ensure male parental resources, since the paternity of the infant would no longer be in doubt. We believe this result is false. We present the results of two experiments (and mention a third) which are very far from replicating Christenfeld and Hill's data. In addition, we provide an evolutionary explanation as to why evolution would not have favored the result reported by Christenfeld and Hill.

Other related material is available from Robert French's web site.

In the first study done by French, participants were presented with a 1-, 3-, or 5-year-old child's face, and the faces of either the father and two unrelated men, or the mother and two unrelated women. The participants were invited to identify the child's parent. They did indeed succeed in identifying the children's parents somewhat more often than would have been obtained by chance alone. But the participants did not identify fathers more reliably than they identified mothers.

The second study was similar, but used only 1-year-old infants. (The Christenfeld and Hill claim is that one year is the age at which children most resemble their fathers.)

French points out that although the argument from evolutionary considerations is initially attractive, it starts to disintegrate when looked at more closely. The idea is that if a child resembles its father, the father is less likely to doubt his paternity, and so is less likely to withhold resources from the child. So there might be a selection pressure in favor of resembling one's father.

But now turn this around: if a father can be sure of paternity because the children look like him, then he can also be sure when the children aren't his because they don't resemble him. This will create a very strong selection pressure in favor of children resembling their fathers. And the tendency to resemble one's father will create a positive feedback loop: the more likely kids are to look like their fathers, the more likely that children who don't resemble their fathers will be abandoned, neglected, abused, or killed. So if there is a tendency for infants to resemble their fathers more than their mothers, one would expect it to be magnified over time, and to be fairly large by now. But none of the studies (including the original Christenfeld and Hill one) found a strong tendency for children to resemble their fathers.

But, as French notes, it's hard to get people to pay attention to a negative result, to a paper that says that something interesting isn't happening.

[ Addendum 20061206: Here's the original Christenfeld and Hill paper. ]

[ Addendum 20171101: A recent web search refutes my claim that “the pop science press was totally uninterested”. Results include this article from Scientific American and a mention in the book Bumpology: The Myth-Busting Pregnancy Book for Curious Parents-To-Be. ]

[ Addendum 20171101: A more extensive study in 2004 confirmed French's results. ]


Mon, 04 Dec 2006

Clockwise

A couple of weeks ago I was over at a friend's house, and was trying to explain to her two-year-old daughter which way to turn the knob on her Etch-a-Sketch. But I couldn't tell her to turn it clockwise, because she can't tell time yet, and has no idea which way is clockwise.

It occurs to me now that I may not be giving her enough credit; she may know very well which way the clock hands go, even though she can't tell time yet. Two-year-olds are a lot smarter than most people give them credit for.

Anyway, I then began to wonder what "clockwise" and "counterclockwise" were called before there were clocks with hands that went around clockwise. But I knew the answer to that one: "widdershins" is counterclockwise; "deasil" is clockwise.

Or so I thought. This turns out not to be the answer. "Deasil" is only cited by the big dictionary back to 1771, which postdates clocks by several centuries. "Widdershins" is cited back to 1545. "Clockwise" and "counter-clockwise" are only cited back to 1888! And a full-text search for "clockwise" in the big dictionary turns up nothing else. So the question of what word people used in 1500 is still a mystery to me.

That got me thinking about how asymmetric the two words "deasil" and "widdershins" are; they have nothing to do with each other. You'd expect a matched set, like "clockwise" and "counterclockwise", or maybe something based on "left" and "right" or some other pair like that. But no. "Widdershins" means "the away direction". I thought "deasil" had something to do with the sun, or the day, but apparently not; the "dea" part is akin to dexter, the right hand, and the "sil" part is obscure. Whereas the "shins" part of "widdershins" does have something to do with the sun, at least by association. That is, it is not related historically to the sun, except that some of the people using the word "widdershins" were apparently thinking it was actually "widdersun". What a mess. And the words have nothing to do with each other anyway, as you can see from the histories above; "widdershins" is 250 years older than "deasil".

The OED also lists "sunways", but the earliest citation is the same as the one for deasil.

Anyway, I did not know any of this at the time, and imagined that "deasil" meant "in the direction of the sun's motion". Which it is; the sun goes clockwise through the sky, coming up on the left, rising to its twelve-o'-clock apex, and then descending on the right, the way the hands of a clock do. (Perhaps that's why the early clockmakers decided to make the hands of the clock go that way in the first place. Or perhaps it's because of the (closely related) reason that that's the direction that the shadow on a sundial moves.)

And then it hit me that in the southern hemisphere, the sun goes the other way: instead of coming up on the left, and going down on the right, the way clock hands do, it comes up on the right and goes down on the left. Wowzers! How bizarre.
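
If you would rather check this with arithmetic than with a plane ticket, here is a rough sketch. It uses the standard spherical-astronomy formula for the sun's compass bearing and assumes an equinox day (solar declination zero). The function names `sun_azimuth` and `compass_sweep` are made up for this illustration, and the latitudes (40 for Philadelphia, -41 for somewhere in New Zealand) are my own round numbers.

```python
import math

def sun_azimuth(lat_deg, hour_angle_deg, decl_deg=0.0):
    """Compass bearing of the sun in degrees (0 = north, 90 = east).

    hour_angle_deg is 15 degrees per hour from local solar noon,
    negative in the morning. decl_deg = 0 assumes an equinox.
    """
    lat = math.radians(lat_deg)
    h = math.radians(hour_angle_deg)
    dec = math.radians(decl_deg)
    # East and north components of the direction to the sun.
    east = -math.cos(dec) * math.sin(h)
    north = (math.cos(lat) * math.sin(dec)
             - math.sin(lat) * math.cos(dec) * math.cos(h))
    return math.degrees(math.atan2(east, north)) % 360.0

def compass_sweep(lat_deg):
    """Signed change in the sun's bearing from 9am to 3pm solar time.

    Positive means the bearing turns clockwise around the compass
    (east, south, west); negative means counterclockwise
    (east, north, west).
    """
    bearings = [sun_azimuth(lat_deg, h) for h in (-45, 0, 45)]
    total = 0.0
    for a, b in zip(bearings, bearings[1:]):
        # Wrap each step into the range [-180, 180).
        total += (b - a + 180.0) % 360.0 - 180.0
    return total

if __name__ == "__main__":
    for name, lat in (("Philadelphia", 40), ("New Zealand", -41)):
        sweep = compass_sweep(lat)
        direction = "clockwise" if sweep > 0 else "counterclockwise"
        print(name, direction)
```

The sweep comes out positive at latitude 40 and negative at latitude -41. In the north the noon sun is due south and the bearing turns the way clock hands do; in the south the noon sun is due north and the bearing turns the other way.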

I'm a bit sad that I figured this out before actually visiting the southern hemisphere and seeing it for myself, because I think I would have been totally freaked out on that first morning in New Zealand (or wherever). But now I'm forewarned that the sun goes the wrong way down there and it won't seem so bizarre when I do see it for the first time.
